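Installing a Consul Cluster on Kubernetes

I. Background

Headless Services are usually combined with a StatefulSet to deploy stateful applications such as Kafka, MySQL, or ZooKeeper clusters, as well as the Consul cluster deployed in this article.

0. The Consul cluster

Consul uses the Raft protocol for distributed consensus, which means the cluster always has one leader and a set of followers. Each node holds its own state and takes part in leader election, so every node that votes in an election or joins the cluster needs an address that is either fixed or reachable through something like DNS or a VIP.

This means Consul has to be deployed as a stateful service rather than a stateless one, and it also needs a Headless Service.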
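To make the Raft requirement concrete: a Raft cluster can elect a leader and commit writes only while a majority (quorum) of its servers is reachable. The following is a minimal sketch of that arithmetic; the function names are made up for illustration and are not part of Consul.

```shell
#!/bin/sh
# quorum_size N: the smallest majority of N Raft servers.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# failure_tolerance N: how many servers may fail while a majority survives.
failure_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Print the numbers for a few common cluster sizes.
for n in 1 3 5; do
    echo "servers=$n quorum=$(quorum_size "$n") tolerated_failures=$(failure_tolerance "$n")"
done
```

With the three servers used in this article the quorum is two, so the cluster keeps working when one server is lost.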

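1. Stateful services

Each Pod in a StatefulSet has a DNS name, and resolving that name returns the Pod's IP.

A StatefulSet keeps a stable Pod name for each of its Pods, in the format {StatefulSet name}-{pod ordinal}.

Through its governing Headless Service, a StatefulSet also gives each Pod a stable DNS name.

The name has the format {pod-name}.{svc}.{namespace}.svc.cluster.local.

2. Headless Services

A Headless Service is a special kind of Service whose spec.clusterIP is set to None, so no ClusterIP is allocated to it at runtime.

There are two ways to reach the Pods behind a Service.

The first is the VIP approach: the Service gets a virtual IP, and traffic sent to it is proxied to one of the Pods behind the Service. This is how a Service is normally implemented.

The second is the DNS approach. For service registration and discovery in k8s, Pods of different applications can reach each other through {svc}.{namespace}.svc.cluster.local. Like a VIP, this name fronts a set of Pod addresses, and requests are load-balanced across them.

To reach one specific Pod you need the headless mechanism, which lets you address that Pod as {pod-name}.{svc}.{namespace}.svc.cluster.local.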

For example, with namespace="default", the three Consul Pods in this article have the following addresses:

| pod-name | serviceName | Address |
|---|---|---|
| consul-0 | consul | consul-0.consul.default.svc.cluster.local |
| consul-1 | consul | consul-1.consul.default.svc.cluster.local |
| consul-2 | consul | consul-2.consul.default.svc.cluster.local |
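The addresses in the table follow directly from the naming rule, so they can be generated rather than typed by hand. A small sketch, assuming the default cluster domain cluster.local; the helper name pod_fqdn is made up for illustration.

```shell
#!/bin/sh
# pod_fqdn STATEFULSET ORDINAL SERVICE NAMESPACE
# Builds {pod-name}.{svc}.{namespace}.svc.cluster.local, where the Pod name
# is {StatefulSet name}-{ordinal}.
pod_fqdn() {
    echo "$1-$2.$3.$4.svc.cluster.local"
}

# Print the address of each of the three replicas (ordinals 0 to 2).
for i in 0 1 2; do
    pod_fqdn consul "$i" consul default
done
```

Once the StatefulSet and the Headless Service exist, resolving one of these names from inside another Pod (for example with nslookup, if the image ships it) should return that single Pod's IP.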

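3. Data persistence

Persist the data, configuration, and logs of the Consul nodes.

- Set up NFS

The NFS setup itself is omitted here.

The NFS server used in this article runs on 192.168.80.170 and exports the directory /srv/nfs/disk.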

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: defalut-resources
      namespace: default
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Gi
      storageClassName: managed-nfs-storage
      volumeMode: Filesystem
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pvc-e5c5a6c9-d083-407a-86d7-25bf26e62ad4
    spec:
      capacity:
        storage: 10Gi
      nfs:
        server: 192.168.80.170
        path: >-
          /srv/nfs/disk/default-defalut-resources-pvc-e5c5a6c9-d083-407a-86d7-25bf26e62ad4
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Delete
      storageClassName: managed-nfs-storage
      volumeMode: Filesystem
    status:
      phase: Bound

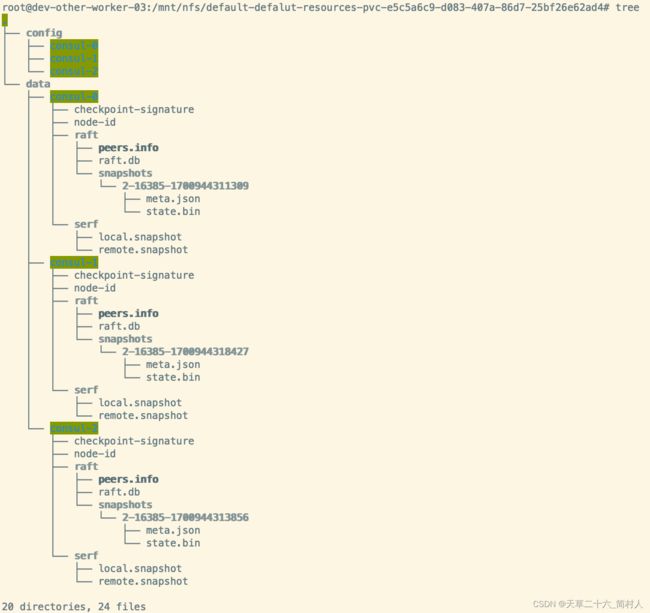

Verification:

Under the NFS export directory (/srv/nfs/disk), a directory named "default-defalut-resources-pvc-e5c5a6c9-d083-407a-86d7-25bf26e62ad4" is created.
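Judging from the PV path above, the directory name is the namespace, the PVC name, and the PV name joined by hyphens. Assuming that pattern holds for your provisioner, the expected directory can be computed and checked on the NFS server; nfs_dir is a hypothetical helper, not a tool that ships with NFS or Kubernetes.

```shell
#!/bin/sh
# nfs_dir EXPORT_ROOT NAMESPACE PVC_NAME PV_NAME
# Builds the directory path as {root}/{namespace}-{pvcName}-{pvName}.
nfs_dir() {
    echo "$1/$2-$3-$4"
}

expected=$(nfs_dir /srv/nfs/disk default defalut-resources \
    pvc-e5c5a6c9-d083-407a-86d7-25bf26e62ad4)
echo "$expected"

# When run on the NFS server, report whether the directory exists.
if [ -d "$expected" ]; then
    echo "found"
else
    echo "missing"
fi
```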


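4. Pod anti-affinity (podAntiAffinity)

Pods repel each other based on topologyKey. The configuration below means that Pods labeled k8s-app=consul are never scheduled onto the same node.

    affinity:
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
                - key: k8s-app
                  operator: In
                  values:
                    - consul
            topologyKey: kubernetes.io/hostname

This is well worth doing, because the loss of a single node then takes down at most one Consul server. The same applies to other stateful deployments.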
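One way to confirm that the rule took effect is to list each Pod with its node and look for a node that appears twice. The sketch below does the counting with awk; duplicate_nodes is a made-up helper, and the node names in the sample are placeholders.

```shell
#!/bin/sh
# duplicate_nodes: reads "POD NODE" lines on stdin and prints every node
# that hosts more than one of the listed Pods.
duplicate_nodes() {
    awk '{ count[$2]++ } END { for (n in count) if (count[n] > 1) print n }'
}

# Hypothetical sample: three Pods on three different nodes, so nothing is printed.
printf '%s\n' 'consul-0 node-a' 'consul-1 node-b' 'consul-2 node-c' | duplicate_nodes
```

Against a real cluster the input could come from `kubectl get pods -n default -l k8s-app=consul -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName --no-headers`. Empty output means no two Consul Pods share a node. Because the rule is a hard requirement, a cluster with fewer than three schedulable nodes would leave the extra Pod in Pending.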

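II. Goal

Build a Consul cluster with three server nodes. Inside the cluster, services running in Pods register with it; outside the cluster, it exposes a management UI.

III. Building the Consul cluster

1. Complete StatefulSet YAML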

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: consul
      namespace: default
    spec:
      serviceName: consul          # must match the name of the Headless Service
      replicas: 3
      selector:
        matchLabels:
          k8s-app: consul
      template:
        metadata:
          labels:
            k8s-app: consul
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                      - key: k8s-app
                        operator: In
                        values:
                          - consul
                  topologyKey: kubernetes.io/hostname
          terminationGracePeriodSeconds: 10
          containers:
            - name: consul
              image: consul:latest
              imagePullPolicy: IfNotPresent
              args:
                - "agent"
                - "-server"
                - "-bootstrap-expect=3"
                - "-ui"
                - "-config-file=/consul/config"
                - "-data-dir=/consul/data"
                - "-log-file=/consul/log"
                - "-bind=0.0.0.0"
                - "-client=0.0.0.0"
                - "-advertise=$(PODIP)"
                - "-retry-join=consul-0.consul.$(NAMESPACE).svc.cluster.local"
                - "-retry-join=consul-1.consul.$(NAMESPACE).svc.cluster.local"
                - "-retry-join=consul-2.consul.$(NAMESPACE).svc.cluster.local"
                - "-domain=cluster.local"
                - "-disable-host-node-id"
              volumeMounts:
                # Each Pod gets its own subdirectory on the shared NFS volume
                - name: nfs
                  mountPath: /consul/data
                  subPathExpr: data/$(PODNAME)
                - name: nfs
                  mountPath: /consul/config
                  subPathExpr: config/$(PODNAME)
              env:
                - name: PODIP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                - name: NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: PODNAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
              resources:
                limits:
                  cpu: "2"         # 2 cores; CPU is given in cores or millicores, not "2G"
                  memory: "2Gi"
                requests:
                  cpu: "200m"
                  memory: "1Gi"
              lifecycle:
                # NOTE: the Kubernetes lifecycle API defines only postStart and
                # preStop, so this preStart entry is likely rejected or ignored
                preStart:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - consul reload
                preStop:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - consul leave
              ports:
                - containerPort: 8500
                  name: ui-port
                - containerPort: 8400
                  name: alt-port
                - containerPort: 53
                  name: udp-port
                - containerPort: 8443
                  name: https-port
                - containerPort: 8080
                  name: http-port
                - containerPort: 8301
                  name: serflan
                - containerPort: 8302
                  name: serfwan
                - containerPort: 8600
                  name: consuldns
                - containerPort: 8300
                  name: server
          volumes:
            - name: nfs
              persistentVolumeClaim:
                claimName: defalut-resources
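The three -retry-join flags in the manifest are the stable DNS names of ordinals 0 to 2, so they can be generated for any replica count. A minimal sketch, assuming the default cluster domain cluster.local; retry_join_args is a made-up helper name.

```shell
#!/bin/sh
# retry_join_args STATEFULSET SERVICE NAMESPACE REPLICAS
# Prints one -retry-join flag per replica, for ordinals 0 to REPLICAS-1.
retry_join_args() {
    i=0
    while [ "$i" -lt "$4" ]; do
        echo "-retry-join=$1-$i.$2.$3.svc.cluster.local"
        i=$(( i + 1 ))
    done
}

# Reproduce the flags used in the manifest above.
retry_join_args consul consul default 3
```

Both this list and -bootstrap-expect=3 assume replicas: 3, so the three values are best kept in step.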

2. Create the Services

    # Headless Service that governs the StatefulSet (clusterIP: None)
    kind: Service
    apiVersion: v1
    metadata:
      name: consul
      namespace: default
      labels:
        app: consul
    spec:
      ports:
        - name: http
          protocol: TCP
          port: 8500
          targetPort: 8500
        - name: https
          protocol: TCP
          port: 8443
          targetPort: 8443
        - name: rpc
          protocol: TCP
          port: 8400
          targetPort: 8400
        - name: serflan-tcp
          protocol: TCP
          port: 8301
          targetPort: 8301
        - name: serflan-udp
          protocol: UDP
          port: 8301
          targetPort: 8301
        - name: serfwan-tcp
          protocol: TCP
          port: 8302
          targetPort: 8302
        - name: serfwan-udp
          protocol: UDP
          port: 8302
          targetPort: 8302
        - name: server
          protocol: TCP
          port: 8300
          targetPort: 8300
        - name: consuldns
          protocol: TCP
          port: 8600
          targetPort: 8600
      selector:
        k8s-app: consul
      clusterIP: None
    ---
    # DNS Service in front of Consul's DNS interface
    apiVersion: v1
    kind: Service
    metadata:
      name: consul-dns
      namespace: default
      labels:
        app: consul
    spec:
      selector:
        k8s-app: consul
      ports:
        # The StatefulSet declares no container ports named dns-tcp or dns-udp,
        # so target Consul's default DNS port (8600) by number
        - name: dns-tcp
          protocol: TCP
          port: 53
          targetPort: 8600
        - name: dns-udp
          protocol: UDP
          port: 53
          targetPort: 8600
    ---
    # ClusterIP Service for the UI and HTTP API
    apiVersion: v1
    kind: Service
    metadata:
      name: consul-ui
      namespace: default
      labels:
        app: consul
    spec:
      selector:
        k8s-app: consul
      ports:
        - name: http
          port: 80
          targetPort: 8500

3. Create a NodePort Service

With this Service we can reach the Consul UI directly through a node IP address plus port.

    kind: Service
    apiVersion: v1
    metadata:
      name: consul-ui-ip
      namespace: default
      labels:
        app: consul
    spec:
      ports:
        - name: consul-ui-ip
          protocol: TCP
          port: 8500
          targetPort: 8500
      selector:
        k8s-app: consul
      type: NodePort
      sessionAffinity: None

IV. Verification

1. Logs

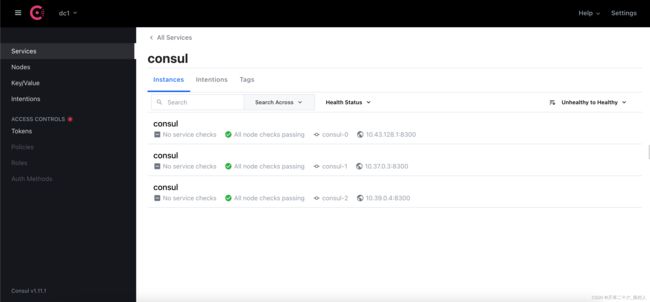

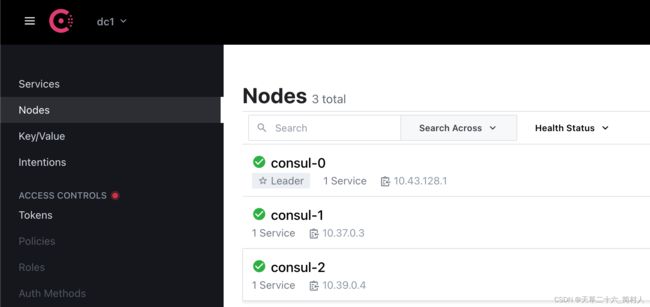

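Taking the first node as an example, the log shows that the node name is consul-0 and the Consul version is 1.11.1. We did not set a datacenter name, so it defaults to dc1.

- The leader is 10.43.128.1

- The followers are 10.37.0.3 and 10.39.0.4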

2. Commands

All commands below use the default namespace.

    # List the Services; note the headless Service (CLUSTER-IP is None)
    # and the NodePort Service that exposes the Consul UI externally
    [admin@jenkins]$ kubectl get svc -n default
    NAME           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                            AGE
    consul         ClusterIP   None             <none>        8500/TCP,8443/TCP,8400/TCP,8301/TCP,8301/UDP,8302/TCP,8302/UDP,8300/TCP,8600/TCP   2d20h
    consul-dns     ClusterIP   10.107.173.90    <none>        53/TCP,53/UDP                                                                      2d19h
    consul-ui      ClusterIP   10.111.174.132   <none>        80/TCP                                                                             2d19h
    consul-ui-ip   NodePort    10.102.74.43     <none>        8500:30487/TCP                                                                     2d17h

    # All three Pods are up and running
    [admin@jenkins]$ kubectl get pod -n default
    NAME       READY   STATUS    RESTARTS   AGE
    consul-0   1/1     Running   0          2d17h
    consul-1   1/1     Running   0          2d17h
    consul-2   1/1     Running   0          2d17h

    # List the members of the Consul cluster as seen from each node
    [admin@jenkins]$ kubectl exec -n default consul-0 -- consul members
    Node      Address           Status  Type    Build   Protocol  DC   Partition  Segment
    consul-0  10.43.128.1:8301  alive   server  1.11.1  2         dc1  default    <all>
    consul-1  10.37.0.3:8301    alive   server  1.11.1  2         dc1  default    <all>
    consul-2  10.39.0.4:8301    alive   server  1.11.1  2         dc1  default    <all>

    [admin@jenkins]$ kubectl exec -n default consul-1 -- consul members
    Node      Address           Status  Type    Build   Protocol  DC   Partition  Segment
    consul-0  10.43.128.1:8301  alive   server  1.11.1  2         dc1  default    <all>
    consul-1  10.37.0.3:8301    alive   server  1.11.1  2         dc1  default    <all>
    consul-2  10.39.0.4:8301    alive   server  1.11.1  2         dc1  default    <all>

    [admin@jenkins]$ kubectl exec -n default consul-2 -- consul members
    Node      Address           Status  Type    Build   Protocol  DC   Partition  Segment
    consul-0  10.43.128.1:8301  alive   server  1.11.1  2         dc1  default    <all>
    consul-1  10.37.0.3:8301    alive   server  1.11.1  2         dc1  default    <all>
    consul-2  10.39.0.4:8301    alive   server  1.11.1  2         dc1  default    <all>
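Reading the member table by eye works for three nodes, but the same check can be scripted. The sketch below counts rows whose Status is alive and whose Type is server; alive_servers is a made-up helper, and the sample input is the member list shown above.

```shell
#!/bin/sh
# alive_servers: reads `consul members` output on stdin and prints the number
# of rows whose Status column is "alive" and whose Type column is "server".
alive_servers() {
    awk 'NR > 1 && $3 == "alive" && $4 == "server" { n++ } END { print n + 0 }'
}

# Sample input: the member list from this article.
alive_servers <<'EOF'
Node      Address           Status  Type    Build   Protocol  DC   Partition  Segment
consul-0  10.43.128.1:8301  alive   server  1.11.1  2         dc1  default    <all>
consul-1  10.37.0.3:8301    alive   server  1.11.1  2         dc1  default    <all>
consul-2  10.39.0.4:8301    alive   server  1.11.1  2         dc1  default    <all>
EOF
```

Against the running cluster, the input would come from `kubectl exec -n default consul-0 -- consul members`. To see which server currently holds the leader role, `consul operator raft list-peers` can be run the same way.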

3. Consul server node

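V. Problems encountered

The three Consul nodes failed to join the cluster.

Error message:

    agent: grpc: addrConn.createTransport failed to connect to {dc1-10.43.128.1:8300 0 consul-0.dc1 }. Err :connection error: desc = "transport: Error while dialing dial tcp ->10.43.128.1:8300: operation was canceled". Reconnecting...

Solution:

    # Add the following to the Consul container spec
    lifecycle:
      # NOTE: the Kubernetes lifecycle API defines only postStart and
      # preStop, so this preStart entry is likely rejected or ignored
      preStart:
        exec:
          command:
            - /bin/sh
            - -c
            - consul reload
      preStop:
        exec:
          command:
            - /bin/sh
            - -c
            - consul leave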

VI. Internal access

Example of a Java service connecting to Consul:

    consul_acl_token=xxxxxx
    # Any one of the Consul Pod IPs: 10.43.128.1, 10.37.0.3, or 10.39.0.4
    consul_service_ip=10.43.128.1
    java -Dspring.cloud.consul.config.acl-token=$consul_acl_token \
      -Dspring.cloud.consul.discovery.acl-token=$consul_acl_token \
      -Dserver.ip=${LOCAL_IP} -Xms${Xms} -Xmx${Xmx} \
      -XX:+HeapDumpOnOutOfMemoryError \
      -DCONFIG_SERVICE_HOST=${consul_service_ip} \
      -DCONFIG_SERVICE_ENABLED=true -jar xxx.jar

VII. UI

- URL: http://{ip}:30487, where {ip} is the IP of any cluster node and 30487 is the NodePort assigned to consul-ui-ip
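The same NodePort also serves Consul's HTTP API, so the cluster can be checked from outside without opening a browser. The sketch below builds the URLs and wraps a call to the /v1/status/leader endpoint; the helper names are made up, 192.0.2.10 is a placeholder node IP, and the curl call assumes the endpoint is reachable without extra ACL setup.

```shell
#!/bin/sh
# consul_url HOST PORT PATH: builds an HTTP URL for the Consul UI or API.
consul_url() {
    echo "http://$1:$2$3"
}

# current_leader HOST PORT: asks the agent for the address of the Raft leader.
# Needs curl and network access to the NodePort, so it is only defined here.
current_leader() {
    curl -fsS "$(consul_url "$1" "$2" /v1/status/leader)"
}

# 192.0.2.10 is a placeholder node IP, not an address from this cluster.
consul_url 192.0.2.10 30487 /ui/
```

Calling `current_leader <node-ip> 30487` against the cluster in this article would be expected to return the leader's address on the server port 8300.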

With that, the Consul cluster of three server nodes is up and running.