[DevOps] Running Kubernetes in Production with KubeSphere (Long Read)

Kubernetes in Production with KubeSphere

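- 1.KubeSphere Overview
  - 1.1 A Full-Stack Kubernetes Container Cloud PaaS Solution
  - 1.2 Why We Chose It (from an Operations Perspective)
- 2.Deployment Architecture Diagram
- 3.Node Planning
  - 3.1 Software Versions
  - 3.2 Planning Notes
    - 3.2.1 K8s Cluster Planning
    - 3.2.2 Storage Cluster
    - 3.2.3 Middleware Cluster
    - 3.2.4 Network Planning
    - 3.2.5 Storage Selection
  - 3.3 Kubernetes Cluster Node Plan
  - 3.4 Storage Cluster Node Plan
  - 3.5 Middleware Node Plan
- 4.Basic Server Configuration for the K8s Cluster
  - 4.1 Basic Operating System Configuration
  - 4.2 Basic Security Configuration
  - 4.3 Installing and Configuring Docker
- 5.Installing and Configuring Load Balancing
  - 5.1 Three Options
  - 5.2 Installation and Configuration
    - 5.2.1 Install the Packages (All Load Balancer Nodes)
    - 5.2.2 Configure HAProxy (All Load Balancer Nodes, Identical Configuration)
    - 5.2.3 Configure Keepalived
    - 5.2.4 Verification
- 6.Installing Kubernetes with KubeSphere
  - 6.1 Download KubeKey
  - 6.2 Create a Sample Configuration File with Default Settings (config-sample.yaml)
  - 6.3 Edit the Configuration File According to the Plan
  - 6.4 Install KubeSphere and the Kubernetes Cluster
  - 6.5 Verify the Installation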

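1.KubeSphere Overview

1.1 A Full-Stack Kubernetes Container Cloud PaaS Solution

KubeSphere is an application-centric, multi-tenant container platform built on top of Kubernetes. It provides full-stack IT automation and operations capabilities and simplifies enterprise DevOps workflows. Its wizard-style, operations-friendly console helps an enterprise quickly build a powerful, feature-rich container cloud platform.

- ✅ Fully open source: a Kubernetes platform that has passed CNCF conformance certification, 100% open source, driven and developed by the community.

- ✅ Easy installation: deploys on any infrastructure, offers online and offline installation, and supports one-click cluster upgrades and scale-out.

- ✅ Feature-rich: one platform manages DevOps, cloud-native observability, service mesh, application lifecycle, multi-tenancy, multi-cluster, storage, and networking.

- ✅ Modular and pluggable: every feature in the platform is pluggable and loosely coupled, so you can install only the components your business scenario needs.

Official site: https://kubesphere.io/zh/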

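1.2 Why We Chose It (from an Operations Perspective)

- Simple to install and simple to use.

- Provides a one-stop, enterprise-grade DevOps architecture and visual operations, which saves us from assembling the same thing by hand out of separate open-source tools.

- Provides logging, monitoring, events, auditing, alerting, and notification from the platform level down to the application level, giving observability that is both centralized and isolated per tenant.

- Simplifies continuous integration, testing, review, release, upgrade, and elastic scaling of applications.

- Provides microservice-based canary releases, traffic management, network topology, and tracing for cloud-native applications.

- Offers both an easy-to-use in-browser command terminal and a graphical console, which suits operators with different working habits.

- Easy to decouple, which avoids vendor lock-in.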

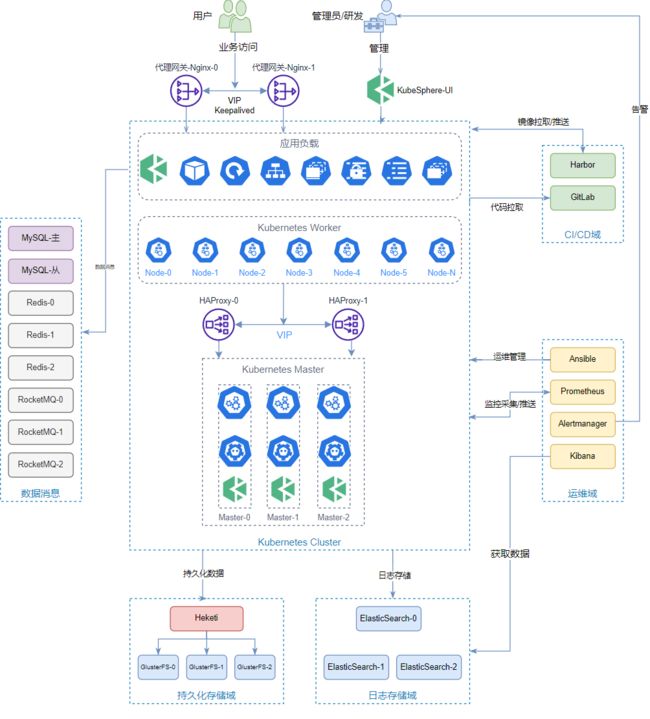

2.Deployment Architecture Diagram

3.Node Planning

3.1 Software Versions

- Operating system: CentOS 7.9

- KubeSphere: v3.1.1

- KubeKey: v1.1.1

- Kubernetes: v1.20.4

- Docker: v19.03.15

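3.2 Planning Notes

3.2.1 K8s Cluster Planning

- Load balancing

  - 2 nodes running HAProxy, with `keepalived` for high availability.

- Master nodes

  - 3 nodes running the KubeSphere and Kubernetes management components, `etcd`, and related services.

  - This design does not deploy `etcd` on separate hosts. If you have the resources, or the cluster is large, consider deploying `etcd` separately.

- Worker nodes

  - 6 nodes running applications. Decide the actual number according to your needs.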

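3.2.2 Storage Cluster

- 3 nodes running GlusterFS

- 1 TB data disk per node

3.2.3 Middleware Cluster

- Common middleware deployed independently, outside the K8s cluster.

- Nginx proxy nodes, with `keepalived` for high availability. Ingress is not used.

- MySQL in a primary-replica architecture, suitable for small and medium workloads. At larger scale you need dedicated database operators or a mature managed product; a cloud provider's service is the preferred choice.

- Ansible: a dedicated automation node for routine batch operations.

- GitLab: manages operations code and enables GitOps.

- Harbor: image registry.

- Elasticsearch: 3 nodes, stores logs.

- Prometheus: deployed separately, monitors the K8s cluster and Pods.

- Redis cluster: 3 nodes in Sentinel mode. For now this cluster still runs on K8s. We are considering moving it to dedicated hosts later, so machines are reserved in the plan. A cloud provider's product is worth considering.

- RocketMQ cluster: 3 nodes. For now this cluster still runs on K8s. We are considering moving it to dedicated hosts later, so machines are reserved in the plan. A cloud provider's product is worth considering.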

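3.2.4 Network Planning

We have fairly demanding network requirements, so each functional domain gets its own subnet. Plan yours according to your own needs.

| Functional domain | Subnet | Description |
|---|---|---|
| K8s cluster | 192.168.9.0/24 | Used by nodes inside the K8s cluster |
| Storage cluster | 192.168.10.0/24 | Used by nodes inside the storage cluster |
| Middleware cluster | 192.168.11.0/24 | Used by the middleware nodes that run outside the K8s cluster |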

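3.2.5 Storage Selection

- Candidates

| Storage option | Pros | Cons | Notes |
|---|---|---|---|
| Ceph | Plenty of documentation and resources | We cannot handle Ceph cluster failures ourselves, so it is best left alone | We once lost data when all 3 replicas were corrupted. Until we can handle the full range of failures, we will not pick it lightly again |
| GlusterFS | Simple to deploy and maintain; multi-replica high availability | Little documentation | Simple to deploy and maintain, and the chance of recovering data after a failure is somewhat higher |
| NFS | Widely used | Single point of failure; sensitive to network jitter | Reportedly common in production, but the single point of failure and network jitter are serious risks, so it is not considered for now |
| MinIO | | | Officially billed as a world-leading object storage pioneer; not yet tried |
| Longhorn | | | Officially billed as an enterprise-grade cloud-native container storage solution; not yet tried |

- Selected (tentative): GlusterFS

- Notes

  - This is an initial, exploratory choice: we picked something to start with and will revisit it based on how it runs.

  - Choose the option that fits your own storage requirements and your team's operational capability.

  - Our persistent storage needs amount to keeping some logs, and we can tolerate a certain amount of data loss. Weighing everything, we chose GlusterFS.

  - The storage plan assumes 1 TB of data is enough and does not cover expansion. That will be added later.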

3.3 Kubernetes Cluster Node Plan

| Role | Hostname | CPU (cores) | Memory (GB) | System disk (GB) | Data disk (GB) | IP | Notes |
|---|---|---|---|---|---|---|---|
| Load balancer | k8s-slb-0 | 2 | 4 | 50 | | 192.168.9.2 / 192.168.9.1 | |
| Load balancer | k8s-slb-1 | 2 | 4 | 50 | | 192.168.9.3 / 192.168.9.1 | |
| Master | k8s-master-0 | 8 | 32 | 50 | 500 | 192.168.9.4 | |
| Master | k8s-master-1 | 8 | 32 | 50 | 500 | 192.168.9.5 | |
| Master | k8s-master-2 | 8 | 32 | 50 | 500 | 192.168.9.6 | |
| Worker | k8s-node-0 | 8 | 32 | 50 | 500 | 192.168.9.7 | |
| Worker | k8s-node-1 | 8 | 32 | 50 | 500 | 192.168.9.8 | |
| Worker | k8s-node-2 | 8 | 32 | 50 | 500 | 192.168.9.9 | |
| Worker | k8s-node-3 | 8 | 32 | 50 | 500 | 192.168.9.10 | |
| Worker | k8s-node-4 | 8 | 32 | 50 | 500 | 192.168.9.11 | |
| Worker | k8s-node-5 | 8 | 32 | 50 | 500 | 192.168.9.12 | |
| Worker | k8s-node-n | 8 | 32 | 50 | 500 | … | Add nodes according to your business needs |

3.4 Storage Cluster Node Plan

| Role | Hostname | CPU (cores) | Memory (GB) | System disk (GB) | Data disk (GB) | IP | Notes |
|---|---|---|---|---|---|---|---|
| Storage node | glusterfs-node-0 | 4 | 16 | 50 | 1000 | 192.168.10.1 | |
| Storage node | glusterfs-node-1 | 4 | 16 | 50 | 1000 | 192.168.10.2 | |
| Storage node | glusterfs-node-2 | 4 | 16 | 50 | 1000 | 192.168.10.3 | |

3.5 Middleware Node Plan

| Role | Hostname | CPU (cores) | Memory (GB) | System disk (GB) | Data disk (GB) | IP | Notes |
|---|---|---|---|---|---|---|---|
| Nginx proxy | nginx-0 | 4 | 16 | 50 | | 192.168.11.2 / 192.168.11.1 | Self-built domain gateway; Ingress is not used |
| Nginx proxy | nginx-1 | 4 | 16 | 50 | | 192.168.11.3 / 192.168.11.1 | Self-built domain gateway; Ingress is not used |
| MySQL primary | db-master | 4 | 16 | 50 | 500 | 192.168.11.4 | |
| MySQL replica | db-slave | 4 | 16 | 50 | 500 | 192.168.11.5 | |
| Elasticsearch | elastic-0 | 4 | 16 | 50 | 1000 | 192.168.11.6 | |
| Elasticsearch | elastic-1 | 4 | 16 | 50 | 1000 | 192.168.11.7 | |
| Elasticsearch | elastic-2 | 4 | 16 | 50 | 1000 | 192.168.11.8 | |
| Automation | ansible | 2 | 4 | 50 | | 192.168.11.9 | Runs Ansible for automated operations |
| Configuration management | harbor | 4 | 16 | 50 | 500 | 192.168.11.10 | Runs GitLab and Harbor |
| Prometheus | monitor | 4 | 16 | 50 | 500 | 192.168.11.11 | |
| Redis | redis-0 | 4 | 16 | 50 | 200 | 192.168.11.12 | Reserved |
| Redis | redis-1 | 4 | 16 | 50 | 200 | 192.168.11.13 | Reserved |
| Redis | redis-2 | 4 | 16 | 50 | 200 | 192.168.11.14 | Reserved |
| RocketMQ | rocketmq-0 | 4 | 16 | 50 | 200 | 192.168.11.15 | Reserved |
| RocketMQ | rocketmq-1 | 4 | 16 | 50 | 200 | 192.168.11.16 | Reserved |
| RocketMQ | rocketmq-2 | 4 | 16 | 50 | 200 | 192.168.11.17 | Reserved |

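4.Basic Server Configuration for the K8s Cluster

4.1 Basic Operating System Configuration

(1) Run the following steps on every Master and Worker node of the K8s cluster.

(2) The steps are shown as manual commands for the sake of this write-up. In practice we apply all of them automatically with Ansible.

- Disable the firewall and SELinux

This environment does not apply any further security configuration, so the firewall and SELinux are disabled. In an environment with stricter security requirements, leave them enabled and add the security configuration you need instead. The SELinux change only edits the configuration file and takes effect after the reboot performed later in this section.

[root@k8s-master-0 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@k8s-master-0 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

- Set the hostname

[root@k8s-master-0 ~]# hostnamectl set-hostname your-planned-hostname

- Configure hostname resolution (optional)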
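The original gives no commands for this optional step. One possible approach, sketched below, appends the planned hostnames to `/etc/hosts`. The function names `k8s_hosts_entries` and `add_k8s_hosts` are made up for this illustration, and the address list is copied from the node plan in section 3.3; adjust it to your own plan.

```shell
# Print "IP hostname" pairs for the K8s nodes, copied from the node plan in section 3.3.
# (Hypothetical helper; edit the list to match your own environment.)
k8s_hosts_entries() {
  cat <<'EOF'
192.168.9.4 k8s-master-0
192.168.9.5 k8s-master-1
192.168.9.6 k8s-master-2
192.168.9.7 k8s-node-0
192.168.9.8 k8s-node-1
192.168.9.9 k8s-node-2
192.168.9.10 k8s-node-3
192.168.9.11 k8s-node-4
192.168.9.12 k8s-node-5
EOF
}

# Append every entry whose hostname is not yet present in the hosts file.
# Argument: path of the hosts file (defaults to /etc/hosts). Safe to run repeatedly.
add_k8s_hosts() {
  hosts_file="${1:-/etc/hosts}"
  k8s_hosts_entries | while read -r ip name; do
    # awk exits 0 only if some line already lists this hostname after the IP column.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Running `add_k8s_hosts` as root on each node updates `/etc/hosts`; passing a different path first, for example `add_k8s_hosts /tmp/hosts.test`, lets you inspect the result before touching the real file.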

- Mount the data disk

# Identify the data disk device

[root@k8s-master-0 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 40G 0 disk

├─vda1 253:1 0 4G 0 part

└─vda2 253:2 0 36G 0 part /

vdb 253:16 0 500G 0 disk

# Partition the disk

[root@k8s-master-0 ~]# fdisk /dev/vdb

n

p

(press Enter to accept each default)

....

w

# Create the filesystem (ext4 or xfs)

[root@k8s-master-0 ~]# mkfs.ext4 /dev/vdb1

# Create the mount point

[root@k8s-master-0 ~]# mkdir /data

# Mount the disk

[root@k8s-master-0 ~]# mount /dev/vdb1 /data

# Mount automatically at boot

[root@k8s-master-0 ~]# echo '/dev/vdb1 /data ext4 defaults 0 0' >> /etc/fstab
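The fstab entry above refers to the partition by device name. Device names can change when disks are added or reordered, so a common alternative is to reference the filesystem by UUID. The sketch below is one way to do that; `fstab_uuid_line` and `add_fstab_entry` are hypothetical helper names, not part of the original procedure.

```shell
# Build an /etc/fstab line that refers to the filesystem by UUID.
# Arguments: UUID, mount point, filesystem type.
fstab_uuid_line() {
  printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

# Append the line only if the mount point is not already listed in the fstab file.
# Arguments: fstab file, UUID, mount point, filesystem type.
add_fstab_entry() {
  fstab_file="$1"
  # Skip comment lines; column 2 of an fstab line is the mount point.
  if awk -v mp="$3" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab_file"; then
    echo "mount point $3 already present in $fstab_file" >&2
    return 0
  fi
  fstab_uuid_line "$2" "$3" "$4" >> "$fstab_file"
}
```

On a node, the UUID can be read with `blkid`, for example `add_fstab_entry /etc/fstab "$(blkid -s UUID -o value /dev/vdb1)" /data ext4`, followed by `mount -a` to confirm the entry mounts cleanly before the next reboot.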

- Update the operating system and reboot

[root@k8s-master-0 ~]# yum update

[root@k8s-master-0 ~]# reboot

- Install the dependency packages

[root@k8s-master-0 ~]# yum install socat conntrack ebtables ipset
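Before moving on, it can help to confirm that the dependencies are actually on the `PATH` of every node. The helper below is a small sketch for that check; the name `missing_commands` is invented here, and it assumes each package installs a command of the same name.

```shell
# Print the name of every command in the argument list that is not found on PATH.
# Prints nothing when all of them are available.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}
```

For example, `missing_commands socat conntrack ebtables ipset` should print nothing on a correctly prepared node; any name it prints still needs to be installed.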

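4.2 Basic Security Configuration

Baseline hardening

- Every company uses its own baseline scanning standards and tools, so configure this part according to the remediation items in your own vulnerability scan reports.

- If there is demand, we may later share the automation scripts we use for baseline hardening.

4.3 Installing and Configuring Docker

For the container runtime, our production environment conservatively uses Docker 19.03. For a fresh installation you can simply pick the latest version.

- Configure the Docker yum repository

[root@k8s-master-0 ~]# vi /etc/yum.repos.d/docker.repo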

[docker-ce-stable]

baseurl=https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/$releasever/$basearch/stable

gpgcheck=1

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/gpg

enabled=1

[root@k8s-master-0 ~]# yum clean all

[root@k8s-master-0 ~]# yum makecache

- Create the Docker configuration directory and configuration file

[root@k8s-master-0 ~]# mkdir -p /etc/docker/

[root@k8s-master-0 ~]# vi /etc/docker/daemon.json

{

"data-root": "/data/docker",

"registry-mirrors":["https://docker.mirrors.ustc.edu.cn"],

"log-opts": {

"max-size": "5m",

"max-file":"3"

},

"exec-opts": ["native.cgroupdriver=systemd"]

}
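A malformed `daemon.json` prevents the Docker daemon from starting, so it is worth checking the syntax before the service is started. The sketch below uses Python's standard `json.tool` module for that; the function name `validate_json_file` is an assumption made for this example, and it presumes a `python3` or `python` interpreter is installed on the node.

```shell
# Return 0 if the given file parses as JSON, non-zero otherwise.
# Uses whichever Python interpreter is found first (python3, then python).
validate_json_file() {
  for py in python3 python; do
    if command -v "$py" >/dev/null 2>&1; then
      "$py" -m json.tool "$1" >/dev/null 2>&1
      return $?
    fi
  done
  echo "no python interpreter found" >&2
  return 2
}
```

Typical use is `validate_json_file /etc/docker/daemon.json && echo "daemon.json OK"`. Once Docker is running, `docker info --format '{{.CgroupDriver}}'` should report `systemd` if the `exec-opts` setting was picked up.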

- Install Docker

[root@k8s-master-0 ~]# yum install docker-ce-19.03.15-3.el7 docker-ce-cli-19.03.15-3.el7 -y

- Start the service and enable it at boot

[root@k8s-master-0 ~]# systemctl restart docker.service && systemctl enable docker.service

- Verify

[root@k8s-master-0 ~]# docker version

Client: Docker Engine - Community

Version: 19.03.15

API version: 1.40

Go version: go1.13.15

Git commit: 99e3ed8919

Built: Sat Jan 30 03:17:57 2021

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.15

API version: 1.40 (minimum version 1.12)

Go version: go1.13.15

Git commit: 99e3ed8919

Built: Sat Jan 30 03:16:33 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.4.12

GitCommit: 7b11cfaabd73bb80907dd23182b9347b4245eb5d

runc:

Version: 1.0.2

GitCommit: v1.0.2-0-g52b36a2

docker-init:

Version: 0.18.0

GitCommit: fec3683

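5.Installing and Configuring Load Balancing

5.1 Three Options

- Use the elastic load balancing service built into your public or private cloud platform

Configure listeners on the following ports:

| Service | Protocol | Port |
|---|---|---|
| apiserver | TCP | 6443 |
| ks-console | TCP | 30880 |
| http | TCP | 80 |
| https | TCP | 443 |

- Build your own load balancer with HAProxy or Nginx (the option chosen here)

- Deploy HAProxy with the solution built into KubeSphere

  - Supported since KubeKey v1.2.1

  - See "Creating a High-Availability Cluster with KubeKey's Built-in HAProxy" (使用 KubeKey 内置 HAproxy 创建高可用集群)

5.2 Installation and Configuration

5.2.1 Install the Packages (All Load Balancer Nodes)

[root@k8s-slb-0 ~]# yum install haproxy keepalived

5.2.2 Configure HAProxy (All Load Balancer Nodes, Identical Configuration)

- Edit the configuration file

[root@k8s-slb-0 ~]# vi /etc/haproxy/haproxy.cfg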

- Sample configuration

global

log /dev/log local0 warning

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

log global

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

frontend kube-apiserver

bind *:6443

mode tcp

option tcplog

default_backend kube-apiserver

backend kube-apiserver

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server kube-apiserver-1 192.168.9.4:6443 check # Replace the IP address with your own.

server kube-apiserver-2 192.168.9.5:6443 check # Replace the IP address with your own.

server kube-apiserver-3 192.168.9.6:6443 check # Replace the IP address with your own.

frontend ks-console

bind *:30880

mode tcp

option tcplog

default_backend ks-console

backend ks-console

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server ks-console-1 192.168.9.4:30880 check # Replace the IP address with your own.

server ks-console-2 192.168.9.5:30880 check # Replace the IP address with your own.

server ks-console-3 192.168.9.6:30880 check # Replace the IP address with your own.

- Start the service and enable it at boot (all load balancer nodes)

[root@k8s-slb-0 ~]# systemctl restart haproxy && systemctl enable haproxy
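To confirm that HAProxy is listening on the frontend ports, a quick TCP probe is enough. The helper below is a sketch: `tcp_port_open` is a hypothetical name, and it relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command being available.

```shell
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Arguments: host, port. Uses bash's /dev/tcp pseudo-device.
tcp_port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

For example, `tcp_port_open 192.168.9.2 6443 && echo "6443 is listening"`. Before the cluster exists, a successful probe only shows that HAProxy accepts connections, not that an apiserver answers behind it. Running `haproxy -c -f /etc/haproxy/haproxy.cfg` before restarting the service checks the configuration file for syntax errors.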

5.2.3 Configure Keepalived

- Edit the configuration file (all load balancer nodes)

[root@k8s-slb-0 ~]# vi /etc/keepalived/keepalived.conf

- Sample configuration file for LB node 1

global_defs {

notification_email {

}

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance haproxy-vip {

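state MASTER # Initial state of the primary server

priority 100 # Priority; the primary server must have the higher value

interface eth0 # NIC name; replace it to match your environment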

virtual_router_id 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_src_ip 192.168.9.2 # IP address of this node's eth0 NIC

unicast_peer {

192.168.9.3 # IP address of SLB node 2

}

virtual_ipaddress {

192.168.9.1/24 # VIP address

}

track_script {

chk_haproxy

}

}

- Sample configuration file for LB node 2

global_defs {

notification_email {

}

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance haproxy-vip {

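state BACKUP # Initial state of the backup server

priority 99 # Priority; the backup server must have a lower value than the primary

interface eth0 # NIC name; replace it to match your environment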

virtual_router_id 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_src_ip 192.168.9.3 # IP address of this node's eth0 NIC

unicast_peer {

192.168.9.2 # IP address of SLB node 1

}

virtual_ipaddress {

192.168.9.1/24 # VIP address

}

track_script {

chk_haproxy

}

}

- Start the service and enable it at boot (all load balancer nodes)

[root@k8s-slb-0 ~]# systemctl restart keepalived && systemctl enable keepalived

5.2.4 Verification

- Check the VIP (on a load balancer node)

[root@k8s-slb-0 ~]# ip a s

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 52:54:9e:27:38:c8 brd ff:ff:ff:ff:ff:ff

inet 192.168.9.2/24 brd 192.168.9.255 scope global noprefixroute dynamic eth0

valid_lft 73334sec preferred_lft 73334sec

inet 192.168.9.1/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::510e:f96:98b2:af40/64 scope link noprefixroute

valid_lft forever preferred_lft forever

- Verify connectivity to the VIP (from another node, such as a k8s-master node)

[root@k8s-master-0 ~]# ping -c 4 192.168.9.1

PING 192.168.9.1 (192.168.9.1) 56(84) bytes of data.

64 bytes from 192.168.9.1: icmp_seq=1 ttl=64 time=0.664 ms

64 bytes from 192.168.9.1: icmp_seq=2 ttl=64 time=0.354 ms

64 bytes from 192.168.9.1: icmp_seq=3 ttl=64 time=0.339 ms

64 bytes from 192.168.9.1: icmp_seq=4 ttl=64 time=0.304 ms

--- 192.168.9.1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3000ms

rtt min/avg/max/mdev = 0.304/0.415/0.664/0.145 ms
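The checks above show that the VIP exists and answers ping. To see which load balancer currently holds it, for instance during a failover drill, the address list can be parsed with a small helper. This is only a sketch: `has_address` is a hypothetical name, and it expects the one-line-per-address output of `ip -4 -o addr show` on standard input.

```shell
# Read `ip -4 -o addr show` output on stdin and return 0 if the given IPv4
# address is assigned to an interface. Argument: the address without prefix length.
has_address() {
  awk -v ip="$1" '
    {
      for (i = 2; i <= NF; i++) {
        # The token after "inet" is ADDRESS/PREFIX; compare the address part exactly.
        split($i, parts, "/")
        if ($(i - 1) == "inet" && parts[1] == ip) found = 1
      }
    }
    END { exit !found }'
}
```

On a load balancer node, `ip -4 -o addr show dev eth0 | has_address 192.168.9.1 && echo "VIP is on this node"` reports whether the node holds the VIP. With the sample priorities (100 and 99) and a script weight of 2, stopping HAProxy on k8s-slb-0 should move the VIP to k8s-slb-1, and the same command can confirm it there.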

6.Installing Kubernetes with KubeSphere

6.1 Download KubeKey

KubeKey is installed on the master-0 node here. It can also be installed on the operations management node.

# Use the download source for mainland China

[root@k8s-master-0 ~]# export KKZONE=cn

# Download KubeKey

[root@k8s-master-0 ~]# curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -

# Make kk executable (optional)

[root@k8s-master-0 ~]# chmod +x kk

6.2 Create a Sample Configuration File with Default Settings (config-sample.yaml)

[root@k8s-master-0 ~]# ./kk create config --with-kubesphere v3.1.1 --with-kubernetes v1.20.4

- `--with-kubesphere` sets the KubeSphere version, v3.1.1

- `--with-kubernetes` sets the Kubernetes version, v1.20.4

6.3 Edit the Configuration File According to the Plan

vi config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: k8s-master-0, address: 192.168.9.4, internalAddress: 192.168.9.4, user: root, password: P@ssw0rd@123}
  - {name: k8s-master-1, address: 192.168.9.5, internalAddress: 192.168.9.5, user: root, password: P@ssw0rd@123}
  - {name: k8s-master-2, address: 192.168.9.6, internalAddress: 192.168.9.6, user: root, password: P@ssw0rd@123}
  - {name: k8s-node-0, address: 192.168.9.7, internalAddress: 192.168.9.7, user: root, password: P@ssw0rd@123}
  - {name: k8s-node-1, address: 192.168.9.8, internalAddress: 192.168.9.8, user: root, password: P@ssw0rd@123}
  - {name: k8s-node-2, address: 192.168.9.9, internalAddress: 192.168.9.9, user: root, password: P@ssw0rd@123}
  roleGroups:
    etcd:
    - k8s-master-0
    - k8s-master-1
    - k8s-master-2
    master:
    - k8s-master-0
    - k8s-master-1
    - k8s-master-2
    worker:
    - k8s-node-0
    - k8s-node-1
    - k8s-node-2
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: "192.168.9.1"
    port: 6443
  kubernetes:
    version: v1.20.4
    imageRepo: kubesphere
    clusterName: cluster.local
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
  registry:
    registryMirrors: []
    insecureRegistries: []
  addons: []
---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
....(the rest is KubeSphere configuration, which this article does not cover, so it is omitted here)

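Key configuration items

- hosts: the name, IP address, management user, and management user's password for each K8s cluster node

- roleGroups

  - etcd: names of the etcd nodes

  - master: names of the master nodes

  - worker: names of the worker nodes

- controlPlaneEndpoint

  - domain: the domain name that maps to the load balancer IP, usually of the form lb.clusterName

  - address: the load balancer IP address

- kubernetes

  - clusterName: the cluster name of the Kubernetes cluster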

6.4 Install KubeSphere and the Kubernetes Cluster

[root@k8s-master-0 ~]# ./kk create cluster -f config-sample.yaml

6.5 Verify the Installation

- Follow the installation progress

[root@k8s-master-0 ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

- Verify the cluster status

When the installation completes, you will see output like the following:

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.9.2:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

the "Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 20xx-xx-xx xx:xx:xx

#####################################################
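Besides the welcome banner, it is worth confirming that every node has joined the cluster and is Ready. The helper below is a minimal sketch for that; `count_not_ready_nodes` is a hypothetical name, and it expects the output of `kubectl get nodes --no-headers` on standard input.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the number
# of nodes whose STATUS column does not start with "Ready".
count_not_ready_nodes() {
  awk '$2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

On the master node, `kubectl get nodes --no-headers | count_not_ready_nodes` should print `0` once all nodes in the plan are up. A node that is cordoned but healthy shows `Ready,SchedulingDisabled` and is still counted as ready here.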