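Submitting Spark jobs from Java: a demo testing the spark-submit submission modes

This is a small demo that walks through how a Spark application is submitted with spark-submit.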

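The Maven pom file

    <properties>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <spark.version>1.6.1</spark.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.10</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>redis.clients</groupId>
            <artifactId>jedis</artifactId>
            <version>2.7.1</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.7</source>
                    <target>1.7</target>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>2.4.3</version>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <filters>
                                <filter>
                                    <artifact>*:*</artifact>
                                    <excludes>
                                        <exclude>META-INF/*.SF</exclude>
                                        <exclude>META-INF/*.DSA</exclude>
                                        <exclude>META-INF/*.RSA</exclude>
                                    </excludes>
                                </filter>
                            </filters>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>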

A Monte Carlo program that estimates Pi

    package com.spark.test;

    import java.util.ArrayList;
    import java.util.List;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.Function2;

    import redis.clients.jedis.Jedis;

    /**
     * Computes an approximation to pi
     * Usage: JavaSparkPi [slices]
     */
    public final class JavaSparkPi {
        public static void main(String[] args) throws Exception {
            // The master is deliberately not set here; spark-submit supplies it via --master.
            SparkConf sparkConf = new SparkConf().setAppName("JavaSparkPi")/*.setMaster("local[2]")*/;
            JavaSparkContext jsc = new JavaSparkContext(sparkConf);
            Jedis jedis = new Jedis("192.168.49.151", 19000);

            // One slice becomes one task; each slice contributes 100000 sample points.
            int slices = (args.length == 1) ? Integer.parseInt(args[0]) : 2;
            int n = 100000 * slices;
            List<Integer> l = new ArrayList<Integer>(n);
            for (int i = 0; i < n; i++) {
                l.add(i);
            }

            JavaRDD<Integer> dataSet = jsc.parallelize(l, slices);
            // Map each element to 1 if a random point in [-1, 1] x [-1, 1] falls inside the unit circle.
            int count = dataSet.map(new Function<Integer, Integer>() {
                @Override
                public Integer call(Integer integer) {
                    double x = Math.random() * 2 - 1;
                    double y = Math.random() * 2 - 1;
                    return (x * x + y * y < 1) ? 1 : 0;
                }
            }).reduce(new Function2<Integer, Integer, Integer>() {
                @Override
                public Integer call(Integer integer, Integer integer2) {
                    return integer + integer2;
                }
            });

            // Store the estimate in Redis and print it on the driver.
            jedis.set("Pi", String.valueOf(4.0 * count / n));
            System.out.println("Pi is roughly " + 4.0 * count / n);

            jsc.stop();
        }
    }
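To make the estimator itself easier to follow, here is a minimal sketch of the same Monte Carlo computation in plain Java, without Spark or Redis. The class name `LocalPiSketch`, the method `estimatePi`, and the fixed seed are illustrative assumptions and are not part of the original program, which uses `Math.random()` and distributes the sampling across tasks.

```java
import java.util.Random;

public final class LocalPiSketch {

    // Samples n points uniformly in the square [-1, 1] x [-1, 1] and counts
    // how many fall inside the unit circle. The hit ratio approximates pi / 4.
    static double estimatePi(int n, long seed) {
        Random random = new Random(seed); // seeded only to make runs repeatable
        int count = 0;
        for (int i = 0; i < n; i++) {
            double x = random.nextDouble() * 2 - 1;
            double y = random.nextDouble() * 2 - 1;
            if (x * x + y * y < 1) {
                count++;
            }
        }
        return 4.0 * count / n;
    }

    public static void main(String[] args) {
        System.out.println("Pi is roughly " + estimatePi(1000000, 42L));
    }
}
```

In the Spark version, the loop body corresponds to the `map` function and the counter corresponds to the `reduce` step.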

Prerequisite: setMaster("local[2]") is not hard-coded in the program, so the master is taken from the spark-submit command line.
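One way to keep a local default for IDE runs without overriding spark-submit is to set the master only when none was supplied. The sketch below is plain Java and rests on an assumption: that spark-submit exposes the `--master` value to the driver JVM as the `spark.master` system property. The class `MasterFallbackSketch` and the method `resolveMaster` are hypothetical names used only for illustration.

```java
public final class MasterFallbackSketch {

    // Returns the master supplied from outside (for example by spark-submit),
    // or the fallback when nothing was supplied.
    static String resolveMaster(String submittedMaster, String fallback) {
        if (submittedMaster == null || submittedMaster.trim().isEmpty()) {
            return fallback;
        }
        return submittedMaster;
    }

    public static void main(String[] args) {
        // Assumption: spark-submit passes --master as the spark.master system property.
        String master = resolveMaster(System.getProperty("spark.master"), "local[2]");
        System.out.println("Effective master: " + master);
        // With Spark on the classpath this value could be passed on, for example:
        // new SparkConf().setAppName("JavaSparkPi").setMaster(master);
    }
}
```

With Spark on the classpath, a similar check can be written with `SparkConf.contains("spark.master")` before calling `setMaster`.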

Local mode tests:

    # Run application locally on 8 cores
    spark-submit \
      --master local[8] \
      --class com.spark.test.JavaSparkPi \
      --executor-memory 4g \
      --executor-cores 4 \
      /home/dinpay/test/Spark-SubmitTest.jar 100

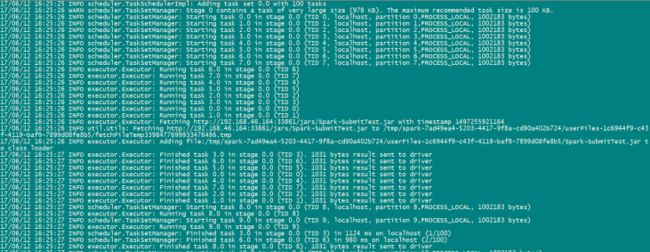

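Result: the job runs locally, with 8 tasks submitted at a time. Because it never registers with the standalone master, the job does not appear in the Web UI on port 8080.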

-------------------------------------

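    spark-submit \
      --master local[8] \
      --class com.spark.test.JavaSparkPi \
      --executor-memory 8G \
      --total-executor-cores 8 \
      hdfs://192.168.46.163:9000/home/test/Spark-SubmitTest.jar 100

This run fails with: java.lang.ClassNotFoundException: com.spark.test.JavaSparkPi. A likely cause is that the driver runs on the submitting machine and this Spark version does not fetch an application jar from HDFS onto the driver's classpath.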

------------------------------------

    spark-submit \
      --master local[8] \
      --deploy-mode cluster \
      --supervise \
      --class com.spark.test.JavaSparkPi \
      --executor-memory 8G \
      --total-executor-cores 8 \
      /home/dinpay/test/Spark-SubmitTest.jar 100

This run fails with: Error: Cluster deploy mode is not compatible with master "local"

====================================================================

Standalone mode, client deploy mode:

    # Run on a Spark standalone cluster in client deploy mode
    spark-submit \
      --master spark://hadoop-namenode-02:7077 \
      --class com.spark.test.JavaSparkPi \
      --executor-memory 8g \
      --total-executor-cores 8 \
      /home/dinpay/test/Spark-SubmitTest.jar 100

The result of this run is as follows:

-------------------------------------------

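    spark-submit \
      --master spark://hadoop-namenode-02:7077 \
      --class com.spark.test.JavaSparkPi \
      --executor-memory 4g \
      --executor-cores 4 \
      hdfs://192.168.46.163:9000/home/test/Spark-SubmitTest.jar 100

This run fails with: java.lang.ClassNotFoundException: com.spark.test.JavaSparkPi. As in local mode, the driver runs on the submitting machine, and the jar on HDFS does not appear to reach its classpath.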

=======================================================================

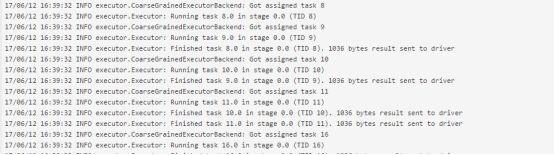

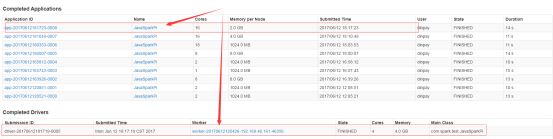

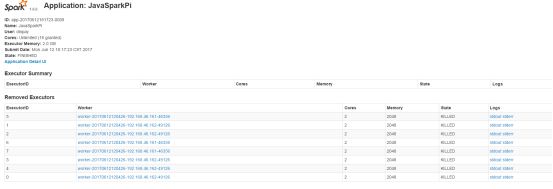

Standalone mode, cluster deploy mode:

    # Run on a Spark standalone cluster in cluster deploy mode with supervise
    spark-submit \
      --master spark://hadoop-namenode-02:7077 \
      --class com.spark.test.JavaSparkPi \
      --deploy-mode cluster \
      --supervise \
      --executor-memory 4g \
      --executor-cores 4 \
      /home/dinpay/test/Spark-SubmitTest.jar 100

This run fails with: java.io.FileNotFoundException: /home/dinpay/test/Spark-SubmitTest.jar (No such file or directory). In cluster deploy mode the driver is launched on one of the workers, so the jar path must be readable from that worker. Here the file exists only on the submitting machine.

-------------------------------------------

    spark-submit \
      --master spark://hadoop-namenode-02:7077 \
      --class com.spark.test.JavaSparkPi \
      --deploy-mode cluster \
      --supervise \
      --driver-memory 4g \
      --driver-cores 4 \
      --executor-memory 2g \
      --total-executor-cores 4 \
      hdfs://192.168.46.163:9000/home/test/Spark-SubmitTest.jar 100

The result of this run is as follows:

=============================================
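The outcomes of the seven runs above can be condensed into a small lookup. This sketch encodes only what was observed in this test on Spark 1.6.1 and is not a general rule for every Spark version or cluster layout. The class `SubmitOutcomeSketch` and the method `predict` are hypothetical names used for illustration.

```java
import java.net.URI;

public final class SubmitOutcomeSketch {

    // Maps (master, deploy mode, jar location) to the outcome seen in this test.
    // deployMode is "client" (the spark-submit default) or "cluster".
    static String predict(String master, String deployMode, String jarLocation) {
        boolean localMaster = master.startsWith("local");
        boolean cluster = "cluster".equals(deployMode);
        // A plain path such as /home/... has no scheme; an HDFS URL has scheme "hdfs".
        boolean jarOnHdfs = "hdfs".equals(URI.create(jarLocation).getScheme());

        if (localMaster && cluster) {
            return "Error: Cluster deploy mode is not compatible with master \"local\"";
        }
        if (!cluster && jarOnHdfs) {
            // Driver runs on the submitting machine and did not load the HDFS jar.
            return "java.lang.ClassNotFoundException";
        }
        if (cluster && !jarOnHdfs) {
            // Driver runs on a worker where the local path did not exist.
            return "java.io.FileNotFoundException";
        }
        return "OK";
    }

    public static void main(String[] args) {
        System.out.println(predict("spark://hadoop-namenode-02:7077", "cluster",
                "hdfs://192.168.46.163:9000/home/test/Spark-SubmitTest.jar"));
    }
}
```

In short, in this test client and local submissions needed the jar on the submitting machine's filesystem, while cluster submissions needed it in a location every worker could read, such as HDFS.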

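If the code hard-codes .setMaster("local[2]"), a cluster-mode submission still launches a driver, but no corresponding application runs in parallel on the cluster:

    spark-submit --deploy-mode cluster \
      --master spark://hadoop-namenode-02:6066 \
      --class com.dinpay.bdp.rcp.service.Window12HzStat \
      --driver-memory 2g \
      --driver-cores 2 \
      --executor-memory 1g \
      --total-executor-cores 2 \
      hdfs://192.168.46.163:9000/home/dinpay/RCP-HZ-TASK-0.0.1-SNAPSHOT.jar

In other words, when the code fixes .setMaster("local[2]"), the job still effectively runs in local mode: one worker is picked to host the driver, and the whole job runs locally on that worker.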
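This behavior matches Spark's documented configuration precedence: properties set directly on the SparkConf win over flags passed to spark-submit, which in turn win over spark-defaults.conf. The sketch below models only that ordering in plain Java. The class `MasterPrecedenceSketch` and the method `effectiveMaster` are hypothetical names, and the sketch does not call Spark itself.

```java
public final class MasterPrecedenceSketch {

    // Returns the master that takes effect, checking the sources in order of
    // precedence: value set in code, then the spark-submit flag, then the
    // defaults file. A null argument means "not set at that level".
    static String effectiveMaster(String setInCode, String submitFlag, String defaultsFile) {
        if (setInCode != null) {
            return setInCode;
        }
        if (submitFlag != null) {
            return submitFlag;
        }
        return defaultsFile;
    }

    public static void main(String[] args) {
        // Hard-coded local[2] overrides the --master flag from the command line.
        System.out.println(effectiveMaster("local[2]", "spark://hadoop-namenode-02:6066", null));
        // prints local[2]
    }
}
```

Removing the hard-coded value (passing `null` as the first argument here) lets the `--master` flag take effect, which is why the earlier tests left setMaster out of the code.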