(Study notes) Voice control of a Unity game through the OLAMI platform

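About OLAMI (detailed guide)

This is only a brief look at OSL (OLAMI Syntax Language, OLAMI's grammar description language). OSL consists of four elements: grammar, rule, slot, and template.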

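1. grammar: a description, written in OSL, of the form a natural-language utterance may take; it is used to match utterances. Its symbols are:

- “[ ]” marks the content inside the square brackets as optional;

- “|” means “or”: either the left or the right side may be chosen. For example, [你|您] accepts either “你” or “您” (both mean “you”);

- “( )” marks the content inside the parentheses as a single unit.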

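2. rule: stores a set of synonyms, separated by |. A grammar refers to a rule with < >.

3. slot: to query the weather for a city, a grammar cannot enumerate every city name. Instead, define a slot and set its rules; the slot then extracts the city from the spoken input.

4. template: omitted here.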
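To make the bracket, bar, and angle-bracket notation concrete, here is a minimal sketch that maps a simplified grammar string onto a .NET regular expression. It is only an analogy for reading OSL, not how OLAMI matches utterances internally. The class name `OslSketch`, the rule name `jump`, and the English sample phrases are all hypothetical, and slots are not modeled.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;

// Hypothetical helper: approximates a small subset of OSL with a regex.
public static class OslSketch
{
    // rules maps a rule name to its synonyms joined with '|', e.g. "jump|hop|leap".
    public static Regex ToRegex(string grammar, IDictionary<string, string> rules)
    {
        var sb = new StringBuilder("^(?:");
        for (int i = 0; i < grammar.Length; i++)
        {
            char c = grammar[i];
            switch (c)
            {
                case '[': sb.Append("(?:"); break; // optional part opens...
                case ']': sb.Append(")?"); break;  // ...and closes as optional
                case '(': sb.Append("(?:"); break; // plain grouping
                case ')': sb.Append(")"); break;
                case '|': sb.Append("|"); break;   // alternation
                case '<':
                {
                    // <name> expands to the synonyms stored in the rule.
                    int end = grammar.IndexOf('>', i);
                    if (end < 0) throw new FormatException("Unclosed '<' in grammar.");
                    string name = grammar.Substring(i + 1, end - i - 1);
                    string[] synonyms = Array.ConvertAll(rules[name].Split('|'), Regex.Escape);
                    sb.Append("(?:").Append(string.Join("|", synonyms)).Append(")");
                    i = end; // skip past the closing '>'
                    break;
                }
                default:
                    sb.Append(Regex.Escape(c.ToString())); // literal text
                    break;
            }
        }
        return new Regex(sb.Append(")$").ToString());
    }
}
```

With a rule `jump` defined as `jump|hop|leap`, the grammar `[please ]<jump>[ now]` accepts "please jump" and "hop now" but rejects "run". Likewise `[你|您]好` accepts "你好", "您好", and "好".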

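Besides writing OSL, you also need to add an application on the OLAMI platform and enable your custom module in it; see the detailed guide for the steps.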

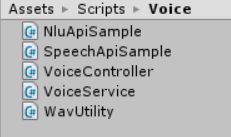

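Script setup in the game

OLAMI provides two scripts, SpeechApiSample.cs and NluApiSample.cs. Simply place them in Unity's scripts folder.

To have the recorded speech "translated" by the module we set up in OLAMI, we need the VoiceService script: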

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Ai.Olami.Example;

public class VoiceService {
    string url = "http://cn.olami.ai/cloudservice/api";
    // Replace these with the App Key and App Secret of your own OLAMI application.
    string key = "YOUR_APP_KEY";
    string secret = "YOUR_APP_SECRET";

    NluApiSample nluApi;
    SpeechApiSample speechApi;

    private static VoiceService instance;

    // Lazily created singleton.
    public static VoiceService GetInstance() {
        if (instance == null) {
            instance = new VoiceService();
        }
        return instance;
    }

    // Private constructor: configure both API clients once.
    private VoiceService () {
        nluApi = new NluApiSample ();
        nluApi.SetLocalization (url);
        nluApi.SetAuthorization (key, secret);

        speechApi = new SpeechApiSample ();
        speechApi.SetLocalization (url);
        speechApi.SetAuthorization (key, secret);
    }

    // Send text straight to the NLI service.
    public string sendText(string text) {
        return nluApi.GetRecognitionResult ("nli", text);
    }

    // Upload audio, then poll until the result is final or an error is returned.
    // This blocks the calling thread, so call it from a worker thread.
    public string sendSpeech(byte[] audio) {
        string result = speechApi.SendAudio("asr", "nli,seg", true, false, audio);
        if (!result.ToLower ().Contains ("error")) {
            System.Threading.Thread.Sleep (1000);
            while (true) {
                result = speechApi.GetRecognitionResult ("asr", "nli,seg");
                if (!result.ToLower ().Contains ("\"final\":true")) {
                    if (result.ToLower ().Contains ("error"))
                        break;
                    System.Threading.Thread.Sleep (2000);
                } else {
                    break;
                }
            }
        }
        return result;
    }
}
```

The Key and Secret used in the script are the App Key and App Secret of your OLAMI application.
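The polling loop in sendSpeech keeps asking for a result until the response contains `"final":true` or `error`, with no upper bound on how long it waits. As a minimal sketch of the same idea with an attempt limit, the helper below takes the fetch step as a delegate. The names `Polling` and `PollUntilFinal` and the `maxAttempts` parameter are hypothetical and not part of the OLAMI samples.

```csharp
using System;
using System.Threading;

// Hypothetical helper: polls a fetch function until the result is final,
// an error appears, or the attempt budget runs out.
public static class Polling
{
    public static string PollUntilFinal(Func<string> fetch, int maxAttempts, TimeSpan delay)
    {
        string result = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            result = fetch();
            string lower = result.ToLower();
            // Same string checks as sendSpeech: stop on a final result or an error.
            if (lower.Contains("\"final\":true") || lower.Contains("error"))
                return result;
            // Wait before the next attempt, but not after the last one.
            if (attempt < maxAttempts - 1)
                Thread.Sleep(delay);
        }
        // Last non-final response (or null if maxAttempts <= 0); the caller decides what to do.
        return result;
    }
}
```

Inside sendSpeech, the `while (true)` loop could then become something like `Polling.PollUntilFinal(() => speechApi.GetRecognitionResult("asr", "nli,seg"), 10, TimeSpan.FromSeconds(2))`, where the limit of 10 attempts is an arbitrary choice.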

The OLAMI API accepts PCM recordings in WAV format. The WavUtility script handles the format conversion:

```csharp
using UnityEngine;
using System.Text;
using System.IO;
using System;

/// <summary>
/// WAV utility for recording and audio playback functions in Unity.
/// Version: 1.0 alpha 1
///
/// - Use "ToAudioClip" method for loading wav file / bytes.
/// Loads .wav (PCM uncompressed) files at 8,16,24 and 32 bits and converts data to Unity's AudioClip.
///
/// - Use "FromAudioClip" method for saving wav file / bytes.
/// Converts an AudioClip's float data into wav byte array at 16 bit.
/// </summary>
/// <remarks>
/// For documentation and usage examples: https://github.com/deadlyfingers/UnityWav
/// </remarks>
public class WavUtility
{
    // Force save as 16-bit .wav
    const int BlockSize_16Bit = 2;

    /// <summary>
    /// Load PCM format *.wav audio file (using Unity's Application data path) and convert to AudioClip.
    /// </summary>
    /// <returns>The AudioClip.</returns>
    /// <param name="filePath">Local file path to .wav file</param>
    public static AudioClip ToAudioClip (string filePath)
    {
        if (!filePath.StartsWith (Application.persistentDataPath) && !filePath.StartsWith (Application.dataPath)) {
            Debug.LogWarning ("This only supports files that are stored using Unity's Application data path. \nTo load bundled resources use 'Resources.Load(\"filename\") typeof(AudioClip)' method. \nhttps://docs.unity3d.com/ScriptReference/Resources.Load.html");
            return null;
        }
        byte[] fileBytes = File.ReadAllBytes (filePath);
        return ToAudioClip (fileBytes, 0);
    }

    public static AudioClip ToAudioClip (byte[] fileBytes, int offsetSamples = 0, string name = "wav")
    {
        //string riff = Encoding.ASCII.GetString (fileBytes, 0, 4);
        //string wave = Encoding.ASCII.GetString (fileBytes, 8, 4);
        int subchunk1 = BitConverter.ToInt32 (fileBytes, 16);
        UInt16 audioFormat = BitConverter.ToUInt16 (fileBytes, 20);

        // NB: Only uncompressed PCM wav files are supported.
        string formatCode = FormatCode (audioFormat);
        Debug.AssertFormat (audioFormat == 1 || audioFormat == 65534, "Detected format code '{0}' {1}, but only PCM and WaveFormatExtensable uncompressed formats are currently supported.", audioFormat, formatCode);

        UInt16 channels = BitConverter.ToUInt16 (fileBytes, 22);
        int sampleRate = BitConverter.ToInt32 (fileBytes, 24);
        //int byteRate = BitConverter.ToInt32 (fileBytes, 28);
        //UInt16 blockAlign = BitConverter.ToUInt16 (fileBytes, 32);
        UInt16 bitDepth = BitConverter.ToUInt16 (fileBytes, 34);

        int headerOffset = 16 + 4 + subchunk1 + 4;
        int subchunk2 = BitConverter.ToInt32 (fileBytes, headerOffset);
        //Debug.LogFormat ("riff={0} wave={1} subchunk1={2} format={3} channels={4} sampleRate={5} byteRate={6} blockAlign={7} bitDepth={8} headerOffset={9} subchunk2={10} filesize={11}", riff, wave, subchunk1, formatCode, channels, sampleRate, byteRate, blockAlign, bitDepth, headerOffset, subchunk2, fileBytes.Length);

        float[] data;
        switch (bitDepth) {
        case 8:
            data = Convert8BitByteArrayToAudioClipData (fileBytes, headerOffset, subchunk2);
            break;
        case 16:
            data = Convert16BitByteArrayToAudioClipData (fileBytes, headerOffset, subchunk2);
            break;
        case 24:
            data = Convert24BitByteArrayToAudioClipData (fileBytes, headerOffset, subchunk2);
            break;
        case 32:
            data = Convert32BitByteArrayToAudioClipData (fileBytes, headerOffset, subchunk2);
            break;
        default:
            throw new Exception (bitDepth + " bit depth is not supported.");
        }

        AudioClip audioClip = AudioClip.Create (name, data.Length, (int)channels, sampleRate, false);
        audioClip.SetData (data, 0);
        return audioClip;
    }

    #region wav file bytes to Unity AudioClip conversion methods

    private static float[] Convert8BitByteArrayToAudioClipData (byte[] source, int headerOffset, int dataSize)
    {
        int wavSize = BitConverter.ToInt32 (source, headerOffset);
        headerOffset += sizeof(int);
        Debug.AssertFormat (wavSize > 0 && wavSize == dataSize, "Failed to get valid 8-bit wav size: {0} from data bytes: {1} at offset: {2}", wavSize, dataSize, headerOffset);

        float[] data = new float[wavSize];
        sbyte maxValue = sbyte.MaxValue;

        int i = 0;
        while (i < wavSize) {
            // Read from the data chunk (after the header), not from the start of the file.
            data [i] = (float)source [headerOffset + i] / maxValue;
            ++i;
        }
        return data;
    }

    private static float[] Convert16BitByteArrayToAudioClipData (byte[] source, int headerOffset, int dataSize)
    {
        int wavSize = BitConverter.ToInt32 (source, headerOffset);
        headerOffset += sizeof(int);
        Debug.AssertFormat (wavSize > 0 && wavSize == dataSize, "Failed to get valid 16-bit wav size: {0} from data bytes: {1} at offset: {2}", wavSize, dataSize, headerOffset);

        int x = sizeof(Int16); // block size = 2
        int convertedSize = wavSize / x;
        float[] data = new float[convertedSize];
        Int16 maxValue = Int16.MaxValue;

        int offset = 0;
        int i = 0;
        while (i < convertedSize) {
            offset = i * x + headerOffset;
            data [i] = (float)BitConverter.ToInt16 (source, offset) / maxValue;
            ++i;
        }
        Debug.AssertFormat (data.Length == convertedSize, "AudioClip .wav data is wrong size: {0} == {1}", data.Length, convertedSize);
        return data;
    }

    private static float[] Convert24BitByteArrayToAudioClipData (byte[] source, int headerOffset, int dataSize)
    {
        int wavSize = BitConverter.ToInt32 (source, headerOffset);
        headerOffset += sizeof(int);
        Debug.AssertFormat (wavSize > 0 && wavSize == dataSize, "Failed to get valid 24-bit wav size: {0} from data bytes: {1} at offset: {2}", wavSize, dataSize, headerOffset);

        int x = 3; // block size = 3
        int convertedSize = wavSize / x;
        int maxValue = Int32.MaxValue;
        float[] data = new float[convertedSize];
        byte[] block = new byte[sizeof(int)]; // using a 4 byte block for copying 3 bytes, then copy bytes with 1 offset

        int offset = 0;
        int i = 0;
        while (i < convertedSize) {
            offset = i * x + headerOffset;
            Buffer.BlockCopy (source, offset, block, 1, x);
            data [i] = (float)BitConverter.ToInt32 (block, 0) / maxValue;
            ++i;
        }
        Debug.AssertFormat (data.Length == convertedSize, "AudioClip .wav data is wrong size: {0} == {1}", data.Length, convertedSize);
        return data;
    }

    private static float[] Convert32BitByteArrayToAudioClipData (byte[] source, int headerOffset, int dataSize)
    {
        int wavSize = BitConverter.ToInt32 (source, headerOffset);
        headerOffset += sizeof(int);
        Debug.AssertFormat (wavSize > 0 && wavSize == dataSize, "Failed to get valid 32-bit wav size: {0} from data bytes: {1} at offset: {2}", wavSize, dataSize, headerOffset);

        int x = sizeof(float); // block size = 4
        int convertedSize = wavSize / x;
        Int32 maxValue = Int32.MaxValue;
        float[] data = new float[convertedSize];

        int offset = 0;
        int i = 0;
        while (i < convertedSize) {
            offset = i * x + headerOffset;
            data [i] = (float)BitConverter.ToInt32 (source, offset) / maxValue;
            ++i;
        }
        Debug.AssertFormat (data.Length == convertedSize, "AudioClip .wav data is wrong size: {0} == {1}", data.Length, convertedSize);
        return data;
    }

    #endregion

    public static byte[] FromAudioClip (AudioClip audioClip)
    {
        string file;
        return FromAudioClip (audioClip, out file, false);
    }

    public static byte[] FromAudioClip (AudioClip audioClip, out string filepath, bool saveAsFile = true, string dirname = "recordings")
    {
        MemoryStream stream = new MemoryStream ();

        const int headerSize = 44;

        // get bit depth
        UInt16 bitDepth = 16; //BitDepth (audioClip);

        // NB: Only supports 16 bit
        //Debug.AssertFormat (bitDepth == 16, "Only converting 16 bit is currently supported. The audio clip data is {0} bit.", bitDepth);

        // total file size = 44 bytes for header format and audioClip.samples * factor due to float to Int16 / sbyte conversion
        int fileSize = audioClip.samples * BlockSize_16Bit + headerSize; // BlockSize (bitDepth)

        // chunk descriptor (riff)
        WriteFileHeader (ref stream, fileSize);
        // file header (fmt)
        WriteFileFormat (ref stream, audioClip.channels, audioClip.frequency, bitDepth);
        // data chunks (data)
        WriteFileData (ref stream, audioClip, bitDepth);

        byte[] bytes = stream.ToArray ();

        // Validate total bytes
        Debug.AssertFormat (bytes.Length == fileSize, "Unexpected AudioClip to wav format byte count: {0} == {1}", bytes.Length, fileSize);

        // Save file to persistent storage location
        if (saveAsFile) {
            filepath = string.Format ("{0}/{1}/{2}.{3}", Application.persistentDataPath, dirname, DateTime.UtcNow.ToString ("yyMMdd-HHmmss-fff"), "wav");
            Directory.CreateDirectory (Path.GetDirectoryName (filepath));
            File.WriteAllBytes (filepath, bytes);
            //Debug.Log ("Auto-saved .wav file: " + filepath);
        } else {
            filepath = null;
        }

        stream.Dispose ();

        return bytes;
    }

    #region write .wav file functions

    private static int WriteFileHeader (ref MemoryStream stream, int fileSize)
    {
        int count = 0;
        int total = 12;

        // riff chunk id
        byte[] riff = Encoding.ASCII.GetBytes ("RIFF");
        count += WriteBytesToMemoryStream (ref stream, riff, "ID");

        // riff chunk size
        int chunkSize = fileSize - 8; // total size - 8 for the other two fields in the header
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (chunkSize), "CHUNK_SIZE");

        byte[] wave = Encoding.ASCII.GetBytes ("WAVE");
        count += WriteBytesToMemoryStream (ref stream, wave, "FORMAT");

        // Validate header
        Debug.AssertFormat (count == total, "Unexpected wav descriptor byte count: {0} == {1}", count, total);

        return count;
    }

    private static int WriteFileFormat (ref MemoryStream stream, int channels, int sampleRate, UInt16 bitDepth)
    {
        int count = 0;
        int total = 24;

        byte[] id = Encoding.ASCII.GetBytes ("fmt ");
        count += WriteBytesToMemoryStream (ref stream, id, "FMT_ID");

        int subchunk1Size = 16; // 24 - 8
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (subchunk1Size), "SUBCHUNK_SIZE");

        UInt16 audioFormat = 1;
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (audioFormat), "AUDIO_FORMAT");

        UInt16 numChannels = Convert.ToUInt16 (channels);
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (numChannels), "CHANNELS");

        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (sampleRate), "SAMPLE_RATE");

        int byteRate = sampleRate * channels * BytesPerSample (bitDepth);
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (byteRate), "BYTE_RATE");

        UInt16 blockAlign = Convert.ToUInt16 (channels * BytesPerSample (bitDepth));
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (blockAlign), "BLOCK_ALIGN");

        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (bitDepth), "BITS_PER_SAMPLE");

        // Validate format
        Debug.AssertFormat (count == total, "Unexpected wav fmt byte count: {0} == {1}", count, total);

        return count;
    }

    private static int WriteFileData (ref MemoryStream stream, AudioClip audioClip, UInt16 bitDepth)
    {
        int count = 0;
        int total = 8;

        // Copy float[] data from AudioClip
        float[] data = new float[audioClip.samples * audioClip.channels];
        audioClip.GetData (data, 0);

        byte[] bytes = ConvertAudioClipDataToInt16ByteArray (data);

        byte[] id = Encoding.ASCII.GetBytes ("data");
        count += WriteBytesToMemoryStream (ref stream, id, "DATA_ID");

        int subchunk2Size = Convert.ToInt32 (audioClip.samples * BlockSize_16Bit); // BlockSize (bitDepth)
        count += WriteBytesToMemoryStream (ref stream, BitConverter.GetBytes (subchunk2Size), "SAMPLES");

        // Validate header
        Debug.AssertFormat (count == total, "Unexpected wav data id byte count: {0} == {1}", count, total);

        // Write bytes to stream
        count += WriteBytesToMemoryStream (ref stream, bytes, "DATA");

        // Validate audio data
        Debug.AssertFormat (bytes.Length == subchunk2Size, "Unexpected AudioClip to wav subchunk2 size: {0} == {1}", bytes.Length, subchunk2Size);

        return count;
    }

    private static byte[] ConvertAudioClipDataToInt16ByteArray (float[] data)
    {
        MemoryStream dataStream = new MemoryStream ();

        int x = sizeof(Int16);
        Int16 maxValue = Int16.MaxValue;

        int i = 0;
        while (i < data.Length) {
            dataStream.Write (BitConverter.GetBytes (Convert.ToInt16 (data [i] * maxValue)), 0, x);
            ++i;
        }
        byte[] bytes = dataStream.ToArray ();

        // Validate converted bytes
        Debug.AssertFormat (data.Length * x == bytes.Length, "Unexpected float[] to Int16 to byte[] size: {0} == {1}", data.Length * x, bytes.Length);

        dataStream.Dispose ();

        return bytes;
    }

    private static int WriteBytesToMemoryStream (ref MemoryStream stream, byte[] bytes, string tag = "")
    {
        int count = bytes.Length;
        stream.Write (bytes, 0, count);
        //Debug.LogFormat ("WAV:{0} wrote {1} bytes.", tag, count);
        return count;
    }

    #endregion

    /// <summary>
    /// Calculates the bit depth of an AudioClip
    /// </summary>
    /// <returns>The bit depth. Should be 8 or 16 or 32 bit.</returns>
    /// <param name="audioClip">Audio clip.</param>
    public static UInt16 BitDepth (AudioClip audioClip)
    {
        UInt16 bitDepth = Convert.ToUInt16 (audioClip.samples * audioClip.channels * audioClip.length / audioClip.frequency);
        Debug.AssertFormat (bitDepth == 8 || bitDepth == 16 || bitDepth == 32, "Unexpected AudioClip bit depth: {0}. Expected 8 or 16 or 32 bit.", bitDepth);
        return bitDepth;
    }

    private static int BytesPerSample (UInt16 bitDepth)
    {
        return bitDepth / 8;
    }

    private static int BlockSize (UInt16 bitDepth)
    {
        switch (bitDepth) {
        case 32:
            return sizeof(Int32); // 32-bit -> 4 bytes (Int32)
        case 16:
            return sizeof(Int16); // 16-bit -> 2 bytes (Int16)
        case 8:
            return sizeof(sbyte); // 8-bit -> 1 byte (sbyte)
        default:
            throw new Exception (bitDepth + " bit depth is not supported.");
        }
    }

    private static string FormatCode (UInt16 code)
    {
        switch (code) {
        case 1:
            return "PCM";
        case 2:
            return "ADPCM";
        case 3:
            return "IEEE";
        case 7:
            return "μ-law";
        case 65534:
            return "WaveFormatExtensable";
        default:
            Debug.LogWarning ("Unknown wav code format:" + code);
            return "";
        }
    }
}
```

In the scene, create an empty object and attach the VoiceController script to it. This script receives the recording:

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine.UI;
using UnityEngine;
using System;
using System.Threading;

public class VoiceController : MonoBehaviour
{
    AudioClip audioclip;
    bool recording;

    [SerializeField]
    PlayerVoiceControl voiceControl; // passes the recording on to the PlayerVoiceControl script

    // Use this for initialization
    void Start()
    {
    }

    // Update is called once per frame
    void Update()
    {
        // Record while F1 is held down.
        if (Input.GetKeyDown(KeyCode.F1))
        {
            recording = true;
        }
        else if (Input.GetKeyUp(KeyCode.F1))
        {
            recording = false;
        }
    }

    void LateUpdate()
    {
        if (recording)
        {
            if (!Microphone.IsRecording(null))
            {
                // Default microphone, no looping, up to 5 seconds at 16 kHz.
                audioclip = Microphone.Start(null, false, 5, 16000);
            }
        }
        else
        {
            if (Microphone.IsRecording(null))
            {
                Microphone.End(null);
                if (audioclip != null)
                {
                    byte[] audiodata = WavUtility.FromAudioClip(audioclip);
                    // Recognition blocks while polling, so run it on a worker thread.
                    Thread thread = new Thread(new ParameterizedThreadStart(process));
                    thread.Start((object)audiodata);
                    //thread.Start((object) result);
                }
            }
        }
    }

    // Runs on the worker thread.
    void process(object obj)
    {
        byte[] audiodata = (byte[])obj;
        //string result = (string) obj;
        string result = VoiceService.GetInstance().sendSpeech(audiodata);
        audioclip = null;
        Debug.Log(result);
        VoiceResult voiceResult = JsonUtility.FromJson<VoiceResult>(result);
        if (voiceResult.status.Equals("ok"))
        {
            Nli[] nlis = voiceResult.data.nli;
            if (nlis != null && nlis.Length != 0)
            {
                foreach (Nli nli in nlis)
                {
                    if (nli.type == "角色移动控制") // put the name of the module you defined on OLAMI here
                    {
                        foreach (Semantic sem in nli.semantic)
                        {
                            voiceControl.ProcessSemantic(sem);
                        }
                    }
                }
            }
        }
    }
}

// Data classes mirroring the JSON returned by OLAMI, for use with JsonUtility.

[Serializable]
public class VoiceResult
{
    public VoiceData data;
    public string status;
}

[Serializable]
public class VoiceData
{
    public Nli[] nli;
}

[Serializable]
public class Nli
{
    public DescObj desc;
    public Semantic[] semantic;
    public string type;
}

[Serializable]
public class DescObj
{
    public string result;
    public int status;
}

[Serializable]
public class Semantic
{
    public string app;
    public string input;
    public Slot[] slots;
    public string[] modifier;
    public string customer;
}

[Serializable]
public class Slot
{
    public string name;
    public string value;
    public string[] modifier;
}
```

Attach PlayerVoiceControl to the character:

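```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class PlayerVoiceControl : MonoBehaviour
{
    PlayerMove move;

    // Use this for initialization
    void Start()
    {
        move = GetComponent<PlayerMove>();
    }

    // Update is called once per frame
    void Update()
    {
    }

    public void VoiceJump()
    {
        move.Jump();
    }

    public void ProcessSemantic(Semantic sem) // handle the semantics parsed by OLAMI
    {
        if (sem.app == "角色移动控制") // put the name of your own OLAMI module here
        {
            // Nothing to do if the module returned no modifier.
            if (sem.modifier == null || sem.modifier.Length == 0)
                return;

            string modifier = sem.modifier[0];
            switch (modifier) // the semantics the module extracted from the utterance (the returned modifier)
            {
            case "jump":
                VoiceJump();
                break;
            }
            return;
        }
    }
}
```

Calling functions based on the returned modifier involves multithreading. For example, to implement voice-controlled jumping I cannot call AddForce directly from the worker thread, because Unity reports "XXX can only be called from the main thread". For which APIs can be used from other threads, see here.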
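One common way around the main-thread restriction is to have the worker thread queue up the work and let the main thread run it. The following is a minimal sketch of that pattern, not the approach used in these notes; the class name `MainThreadQueue` is hypothetical, and it assumes a scripting runtime where `System.Collections.Concurrent` is available.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical helper: worker threads enqueue actions, the main thread runs them.
public sealed class MainThreadQueue
{
    private readonly ConcurrentQueue<Action> pending = new ConcurrentQueue<Action>();

    // Safe to call from any thread, e.g. from VoiceController.process.
    public void Enqueue(Action action)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        pending.Enqueue(action);
    }

    // Call from the main thread only, e.g. once per frame inside Update().
    // Returns the number of actions that were run.
    public int Drain()
    {
        int ran = 0;
        Action action;
        while (pending.TryDequeue(out action))
        {
            action();
            ran++;
        }
        return ran;
    }
}
```

In this setup, process would call something like `queue.Enqueue(() => voiceControl.ProcessSemantic(sem))` instead of calling ProcessSemantic directly, and a MonoBehaviour's Update would call `queue.Drain()`. The jump, and therefore AddForce, then executes on the main thread one frame later at most.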

PlayerMoveReset.cs