Deploying a Tomcat Cluster with a Deployment Script: External Access, Load Balancing, File Sharing, and Cluster Configuration Tuning

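Table of contents

- Prerequisites
- I. Deploying a Tomcat cluster with a Deployment script
- II. Accessing the Tomcat cluster from outside
- III. Load balancing the Service externally with Rinetd
  - Create the Service
  - The Rinetd port-forwarding tool
  - A JSP page that shows which node served the request
- IV. Configuring a mount point in the Deployment
- V. Sharing files across the cluster with NFS
  - master
  - node
  - Verification
- VI. Adjusting the cluster configuration and limiting resources
  - Adding resource limits
  - Adjusting the replica count
- Summary
  - ERROR: cannot verify www.boutell.co.uk's certificate, issued by ‘/C=US/O=Let's Encrypt/CN=R3’: Issued certificate has expired. To connect to www.boutell.co.uk insecurely, use `--no-check-certificate'.
  - The k8s master cannot ping the ClusterIP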

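Prerequisites

A deployment script automates the process of deploying an application. It bundles the commands and configuration needed to move an application from the development environment into production or another target environment. In Kubernetes, this takes the form of a YAML manifest that describes a Deployment object: which container image to run, how many replicas to keep, and which ports the containers expose.

Deploying amounts to Kubernetes sending instructions to the Node machines to create containers. Kubernetes accepts deployment scripts written in YAML (`.yml`) format.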

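Common deployment-related commands:

- Create a deployment: `kubectl create -f <deployment yml file>`
- Update the deployment configuration: `kubectl apply -f <deployment yml file>`
- List the deployed Pods: `kubectl get pod [-o wide]` (`-o wide` adds details such as the Pod IP and node)
- Show a Pod's details: `kubectl describe pod <pod name>`
- Show a Pod's log output: `kubectl logs [-f] <pod name>` (`-f` follows the log in real time)
- Delete a deployed service: `kubectl delete service <service name>`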

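I. Deploying a Tomcat cluster with a Deployment script

Run the following on the master only.

Create the deployment file with `vi tomcat-deploy.yml` and give it the following content: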

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: tomcat-cluster
    spec:
      containers:
      - name: tomcat-cluster
        image: tomcat:latest
        ports:
        - containerPort: 8080
```

Create the deployment: `kubectl create -f tomcat-deploy.yml`

```shell
[root@master k8s]# kubectl create -f tomcat-deploy.yml
deployment.extensions/tomcat-deploy created
```

Check that it is configured correctly with `kubectl get deployment` (`deploy` is the short form):

```shell
[root@master k8s]# kubectl get deploy
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
tomcat-deploy   2/2     2            2           6m34s
```
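The manifest above uses `extensions/v1beta1`. That works on the older cluster used here, as the `deployment.extensions/...` output shows. Kubernetes 1.16 stopped serving Deployments from that API group, so a newer cluster needs the same object as `apps/v1`. That version also makes `spec.selector` mandatory, and its labels must match the Pod template's labels. The following is a minimal sketch, assuming Bash, that writes such a manifest with a heredoc. The function name `write_tomcat_deploy` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: write tomcat-deploy.yml for clusters that serve Deployments only from apps/v1.
# write_tomcat_deploy is a hypothetical helper; the manifest mirrors the one above.
write_tomcat_deploy() {
  local out=${1:-tomcat-deploy.yml}
  # The quoted 'EOF' stops the shell from expanding anything inside the manifest.
  cat > "$out" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 2
  # Required in apps/v1: must match the Pod template labels below.
  selector:
    matchLabels:
      app: tomcat-cluster
  template:
    metadata:
      labels:
        app: tomcat-cluster
    spec:
      containers:
      - name: tomcat-cluster
        image: tomcat:latest
        ports:
        - containerPort: 8080
EOF
}
```

Usage: run `write_tomcat_deploy tomcat-deploy.yml`, then `kubectl create -f tomcat-deploy.yml` as above. On kubectl 1.18 or later, `kubectl apply --dry-run=client -f tomcat-deploy.yml` previews the result without changing the cluster.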

二、外部访问Tomcat集群

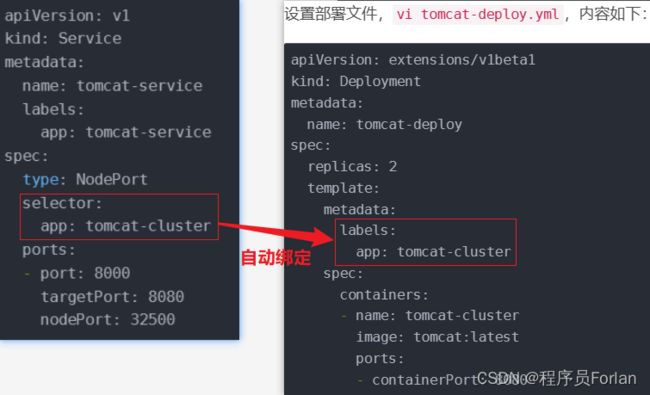

设置服务文件,vi tomcat-service.yml,内容如下:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  labels:
    app: tomcat-service
spec:
  type: NodePort
  selector:
    app: tomcat-cluster
  ports:
  - port: 8000
    targetPort: 8080
    nodePort: 32500
```

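Parameter notes:

- `spec.selector.app` is the label of the cluster we deployed earlier (`app: tomcat-cluster`), so the Service forwards traffic to those Pods.
- `port` (8000) is the Service's port on its cluster IP.
- `targetPort` (8080) is the container port.
- `nodePort` (32500) is the port opened on every node.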
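`nodePort` does not accept arbitrary values. It must lie inside the API server's `--service-node-port-range`, which defaults to 30000-32767, and 32500 falls within that range. The following is a small Bash sketch of that check, assuming the default range. `valid_nodeport` is a hypothetical name.

```bash
# Sketch: check that a nodePort lies inside Kubernetes' default NodePort range.
# Assumption: the API server uses the default --service-node-port-range of 30000-32767.
NODEPORT_MIN=30000
NODEPORT_MAX=32767

valid_nodeport() {
  local port=$1
  # Reject anything that is not a plain non-negative integer.
  [[ $port =~ ^[0-9]+$ ]] || return 1
  # 10# forces base 10, so a value with a leading zero is not read as octal.
  (( 10#$port >= NODEPORT_MIN && 10#$port <= NODEPORT_MAX ))
}

for p in 32500 8000; do
  if valid_nodeport "$p"; then
    echo "$p: ok"
  else
    echo "$p: outside ${NODEPORT_MIN}-${NODEPORT_MAX}"
  fi
done
# 32500: ok
# 8000: outside 30000-32767
```

Note that 8000 is fine as the Service `port`; it just cannot be used as a `nodePort` under the default range.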

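Create the service: `kubectl create -f tomcat-service.yml`

```shell
[root@master k8s]# kubectl create -f tomcat-service.yml
service/tomcat-service created
```

Check that it is configured correctly: `kubectl get service`

```shell
[root@master forlan-test]# kubectl get service
NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes       ClusterIP   10.96.0.1       <none>        443/TCP    173m
tomcat-service   ClusterIP   10.110.88.125   <none>        8000/TCP   159m
```

Note: this listing matches the one shown in section III for the ClusterIP version of the Service. For the NodePort Service created here, the TYPE column reads `NodePort` and PORT(S) reads `8000:32500/TCP`.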

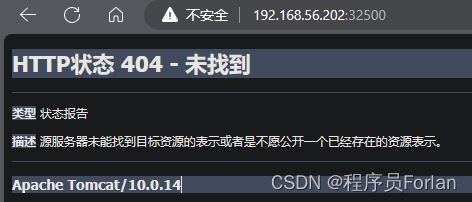

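Verify access: the Service can now be reached through any node's IP plus the exposed nodePort, for example http://192.168.56.201:32500 or http://192.168.56.202:32500. The first request can be slow. A 404 page means it worked: the official `tomcat` image ships with an empty `webapps` directory (its sample apps sit in `webapps.dist`), so there is no default page.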

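III. Load balancing the Service externally with Rinetd

So far, clients reach the cluster through a particular node's IP and the exposed port. Each client therefore has to pick a node itself, and the cluster has no single entry point. With Rinetd, clients only need to access the master node. The master forwards requests to the Service, which spreads them across the Pods. The steps are as follows.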

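1. Create the Service

Delete the Service deployed earlier:

```shell
[root@master k8s]# kubectl delete service tomcat-service
service "tomcat-service" deleted
```

Edit the service file (`vi tomcat-service.yml`), mainly commenting out `type: NodePort` and `nodePort: 32500`. Without a `type`, the Service gets the default type, ClusterIP: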

```yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  labels:
    app: tomcat-service
spec:
  # type: NodePort
  selector:
    app: tomcat-cluster
  ports:
  - port: 8000
    targetPort: 8080
    # nodePort: 32500
```

Recreate the Service: `kubectl create -f tomcat-service.yml`

```shell
[root@master k8s]# kubectl create -f tomcat-service.yml
service/tomcat-service created
```

Check that it is configured correctly: `kubectl get service`

```shell
[root@master forlan-test]# kubectl get service
NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes       ClusterIP   10.96.0.1       <none>        443/TCP    173m
tomcat-service   ClusterIP   10.110.88.125   <none>        8000/TCP   159m
```

Show the Service details: `kubectl describe service tomcat-service`

```shell
[root@master forlan-test]# kubectl describe service tomcat-service
Name:              tomcat-service
Namespace:         default
Labels:            app=tomcat-service
Annotations:       <none>
Selector:          app=tomcat-cluster
Type:              ClusterIP
IP:                10.110.88.125
Port:              <unset>  8000/TCP
TargetPort:        8080/TCP
Endpoints:         10.244.1.10:8080,10.244.2.9:8080
Session Affinity:  None
Events:            <none>
```

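To verify: normally a request sent from the master should also return the 404 page. Oddly, here only a request sent from a node returns 404. This is still unresolved; the Summary covers a related problem, where the k8s master cannot ping the ClusterIP.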

<pre><code class="prism language-powershell">[root@node2 forlan-web]# curl 10.110.88.125:8000
&lt;!doctype html&gt;&lt;html lang="en"&gt;&lt;head&gt;&lt;title&gt;HTTP Status 404 – Not Found&lt;/title&gt;&lt;style type="text/css"&gt;body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}&lt;/style&gt;&lt;/head&gt;&lt;body&gt;&lt;h1&gt;HTTP Status 404 – Not Found&lt;/h1&gt;&lt;hr class="line" /&gt;&lt;p&gt;&lt;b&gt;Type&lt;/b&gt; Status Report&lt;/p&gt;&lt;p&gt;&lt;b&gt;Description&lt;/b&gt; The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.&lt;/p&gt;&lt;hr class="line" /&gt;&lt;h3&gt;Apache Tomcat/10.0.14&lt;/h3&gt;&lt;/body&gt;&lt;/html&gt;[root@node2 forlan-web]#
</code></pre>

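<h3>2. The Rinetd port-forwarding tool</h3>

<blockquote>

<p>So far the Service can only be reached from inside the cluster nodes. To access it from outside, continue with the following steps.</p>

</blockquote>

<p>Download the rinetd package and extract it:</p>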

<pre><code class="prism language-powershell"><span class="token namespace">[root@master k8s]</span><span class="token comment"># wget http://www.boutell.com/rinetd/http/rinetd.tar.gz --no-check-certificate</span>

<span class="token namespace">[root@master k8s]</span><span class="token comment"># tar -xzvf rinetd.tar.gz</span>

</code></pre>

<p>Enter the rinetd directory and edit rinetd.c, replacing every occurrence of 65536 in the file with 65535:</p>

<pre><code class="prism language-powershell">cd rinetd

sed <span class="token operator">-</span>i <span class="token string">'s/65536/65535/g'</span> rinetd<span class="token punctuation">.</span>c

</code></pre>

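<p>Note: <code>s</code> means substitute, <code>65536</code> is the text to be replaced, <code>65535</code> is the replacement, and <code>g</code> makes the substitution global. Without <code>g</code>, only the first match on each line is replaced.</p>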
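<p>To see what the <code>g</code> flag changes, here is a quick experiment on a made-up line. The line is not taken from rinetd.c, and the example assumes GNU or BSD sed:</p>

```bash
# A made-up sample line with two occurrences of 65536 (not copied from rinetd.c).
sample='int a[65536]; int b[65536];'

# Without g, sed replaces only the first match on each line.
echo "$sample" | sed 's/65536/65535/'    # int a[65535]; int b[65536];

# With g, every match on the line is replaced.
echo "$sample" | sed 's/65536/65535/g'   # int a[65535]; int b[65535];
```

<p>On the real file, <code>sed -i.bak 's/65536/65535/g' rinetd.c</code> keeps the original as rinetd.c.bak. If <code>grep -c 65536 rinetd.c</code> then prints 0, no occurrence was missed.</p>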

<p>Create the /usr/man directory that rinetd requires, install the gcc compiler, then compile and install the program:</p>

<pre><code class="prism language-powershell">mkdir <span class="token operator">-</span>p <span class="token operator">/</span>usr/man

yum install <span class="token operator">-</span>y gcc

make && make install

</code></pre>

<p><a href="http://img.e-com-net.com/image/info8/43019a2f1ee94ef38ad568d42da8f7d3.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/43019a2f1ee94ef38ad568d42da8f7d3.jpg" alt="Deployment脚本部署Tomcat集群:外部访问、负载均衡、文件共享及集群配置调整_第3张图片" width="650" height="122" style="border:1px solid black;"></a></p>

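<p>Set up the port mapping<br> Edit the configuration file with <code>vi /etc/rinetd.conf</code>. Each line has the form <code>bindaddress bindport connectaddress connectport</code>. The rule below makes the master listen on port 8000 on every address (0.0.0.0) and forward that traffic to port 8000 of the Service's ClusterIP, 10.110.88.125:</p>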

<pre><code class="prism language-powershell">0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0 8000 10<span class="token punctuation">.</span>110<span class="token punctuation">.</span>88<span class="token punctuation">.</span>125 8000

</code></pre>
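<p>The following is a sketch of a helper that appends a forwarding rule to the file only if the rule is not already there. It assumes Bash. <code>add_rinetd_rule</code> is a hypothetical name, not part of rinetd, and its arguments follow the rinetd rule order (bind address, bind port, connect address, connect port):</p>

```bash
# Hypothetical helper: add a rinetd forwarding rule to a config file exactly once.
add_rinetd_rule() {
  local conf=$1 bind_addr=$2 bind_port=$3 dest_addr=$4 dest_port=$5
  local rule="$bind_addr $bind_port $dest_addr $dest_port"
  touch "$conf"
  # -x: match the whole line; -F: fixed string, so the dots are not regex wildcards.
  grep -qxF "$rule" "$conf" || echo "$rule" >> "$conf"
}
```

<p>The ClusterIP changes whenever the Service is deleted and recreated, as in step 1. Looking it up when writing the rule avoids a stale entry, for example <code>add_rinetd_rule /etc/rinetd.conf 0.0.0.0 8000 "$(kubectl get service tomcat-service -o jsonpath='{.spec.clusterIP}')" 8000</code>. After that, restart rinetd so it rereads the file.</p>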

<p>Start rinetd with this configuration file so the mapping takes effect:</p>

<pre><code class="prism language-powershell">rinetd <span class="token operator">-</span>c <span class="token operator">/</span>etc/rinetd<span class="token punctuation">.</span>conf

</code></pre>

<p>Check that something is listening on port 8000: <code>netstat -tulpn | grep 8000</code></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master rinetd]</span><span class="token comment"># netstat -tulpn|grep 8000</span>

tcp 0 0 0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0:8000 0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0:<span class="token operator">*</span> LISTEN 25126/rinetd

</code></pre>

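<p>Verify by visiting masterIp:8000 (the master's IP, port 8000). A 404 page means it worked.</p>

<h3>3. A JSP page that shows which node served the request</h3>

<p>On a node, list the running containers:</p>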

<pre><code class="prism language-powershell"><span class="token namespace">[root@node1 /]</span><span class="token comment"># docker ps</span>

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e704f7fd1af2 tomcat <span class="token string">"catalina.sh run"</span> 54 minutes ago Up 54 minutes k8s_tomcat-cluster_tomcat-deploy-5fd4fc7ddb-d2cx8_default_b62cd02c-8a8d-11ee-bab1-5254004d77d3_0

</code></pre>

<p>Enter the container: <code>docker exec -it &lt;container_id&gt; /bin/bash</code></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@node1 /]</span><span class="token comment"># docker exec -it e704f7fd1af2 /bin/bash</span>

root@tomcat-deploy-5fd4fc7ddb-d2cx8:<span class="token operator">/</span>usr/local/tomcat<span class="token comment"># ls</span>

BUILDING<span class="token punctuation">.</span>txt CONTRIBUTING<span class="token punctuation">.</span>md LICENSE NOTICE README<span class="token punctuation">.</span>md RELEASE-NOTES RUNNING<span class="token punctuation">.</span>txt bin conf lib logs native-jni-lib temp webapps webapps<span class="token punctuation">.</span>dist work

</code></pre>

<p>Under webapps, try to create our JSP file with <code>vi index.jsp</code>. The container has no vi command, and it cannot be installed with yum either:</p>

<pre><code class="prism language-powershell">root@tomcat-deploy-5fd4fc7ddb-d2cx8:<span class="token operator">/</span>usr/local/tomcat/webapps<span class="token comment"># vi index.jsp</span>

bash: vi: command not found

root@tomcat-deploy-5fd4fc7ddb-d2cx8:<span class="token operator">/</span>usr/local/tomcat/webapps<span class="token comment"># yum install vi</span>

bash: yum: command not found

</code></pre>

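<p>There are currently two ways around this:</p>

<ul>

<li>Build a custom image from a Dockerfile that installs the needed commands</li>

<li>Mount a directory of the host machine into the container<br> For the first approach, see: https://blog.csdn.net/qq_36433289/article/details/134731875<br> The rest of this article covers the second approach.</li>

</ul>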

<h2>IV. Configuring a mount point in the Deployment</h2>

<p>The idea is to mount the host's /forlan-web directory onto /usr/local/tomcat/webapps inside the container. Adjust the deployment file defined earlier (<code>vi tomcat-deploy.yml</code>) as follows:<br> <a href="http://img.e-com-net.com/image/info8/f819eb21e638427dabe61f8d3ce7a286.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/f819eb21e638427dabe61f8d3ce7a286.jpg" alt="Deployment脚本部署Tomcat集群:外部访问、负载均衡、文件共享及集群配置调整_第4张图片" width="457" height="442" style="border:1px solid black;"></a></p>

<pre><code class="prism language-powershell">apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: tomcat-cluster
    spec:
      volumes:
      - name: web-app
        hostPath:
          path: /forlan-web                     # directory on the node (host) machine
      containers:
      - name: tomcat-cluster
        image: tomcat:latest
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: web-app                         # refers to the volume defined above
          mountPath: /usr/local/tomcat/webapps  # Tomcat's web application directory
</code></pre>

<p>Update the cluster configuration: <code>kubectl apply -f tomcat-deploy.yml</code></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master k8s]</span><span class="token comment"># kubectl apply -f tomcat-deploy.yml</span>

deployment<span class="token punctuation">.</span>extensions/tomcat-deploy configured

</code></pre>

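<p>To test, create an index.jsp file under /forlan-web/forlan-test on the host, then enter the container and check that the file shows up there. If it does, the mount works. The JSP prints the IP address of the Pod that handled the request (<code>request.getLocalAddr()</code>):</p>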

<pre><code class="prism language-powershell"><span class="token namespace">[root@node1 mnt]</span><span class="token comment"># vi /forlan-web/forlan-test/index.jsp</span>

<<span class="token operator">%=</span>request<span class="token punctuation">.</span>getLocalAddr<span class="token punctuation">(</span><span class="token punctuation">)</span><span class="token operator">%</span>>

<span class="token namespace">[root@node1 mnt]</span><span class="token comment"># docker ps|grep tomcat-cluster</span>

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b064bbacaf00 tomcat <span class="token string">"catalina.sh run"</span> 2 minutes ago Up 2 minutes k8s_tomcat-cluster_tomcat-deploy-6678dccdc9-gjd9r_default_b5c19b2c-8aa4-11ee-bab1-5254004d77d3_0

<span class="token namespace">[root@node1 mnt]</span><span class="token comment"># docker exec -it b064bbacaf00 /bin/bash</span>

root@tomcat-deploy-6678dccdc9-gjd9r:<span class="token operator">/</span>usr/local/tomcat<span class="token comment"># cd webapps</span>

root@tomcat-deploy-6678dccdc9-gjd9r:<span class="token operator">/</span>usr/local/tomcat/webapps<span class="token comment"># cat forlan-test/index.jsp</span>

<<span class="token operator">%=</span>request<span class="token punctuation">.</span>getLocalAddr<span class="token punctuation">(</span><span class="token punctuation">)</span><span class="token operator">%</span>>

</code></pre>

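<p>To verify, visit masterIp:8000/forlan-test/index.jsp. The page shows the IP of the Pod that served the request, such as 10.244.2.9 or 10.244.1.10, which tells you whether it reached node1 or node2.</p>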
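<p>A single request only shows one Pod. To see how requests are spread, send a batch and count the answers. The following sketch assumes Bash and assumes that each response body is just the Pod IP printed by index.jsp. <code>tally_backends</code> and <code>MASTER_IP</code> are placeholders:</p>

```bash
# Hypothetical helper: count how many responses each backend (Pod IP) returned.
# Reads one response per line on stdin and prints "count ip", highest count first.
tally_backends() {
  # Drop blank lines, count identical lines, then sort by count.
  grep -v '^[[:space:]]*$' | sort | uniq -c | sort -rn | awk '{ print $1, $2 }'
}

# Usage (MASTER_IP is a placeholder for the master's address):
#   for i in $(seq 20); do
#     curl -s "http://MASTER_IP:8000/forlan-test/index.jsp"
#     echo
#   done | tally_backends
```

<p>In its default iptables mode, kube-proxy sends each connection to a randomly chosen Pod, so the counts are only roughly even.</p>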

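<h2>V. Sharing files across the cluster with NFS</h2>

<p>The index.jsp above is maintained separately on a single node machine. What if several nodes need the same files? NFS solves this by sharing files across the cluster.</p>

<blockquote>

<p>Network File System (NFS)<br> NFS is a network file system protocol developed by Sun Microsystems<br> NFS accesses remote files through the Remote Procedure Call (RPC) mechanism<br> NFS lets a server share files and resources over the network. /etc/exports is the main NFS configuration file; it defines which directories or file systems are shared with which clients</p>

</blockquote>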

<h3>1. master</h3>

<p>Install the components:</p>

<pre><code class="prism language-powershell">yum install <span class="token operator">-</span>y nfs-utils rpcbind

</code></pre>

<p>Create the shared directory:</p>

<pre><code class="prism language-powershell">cd <span class="token operator">/</span>usr/local

mkdir forlan-<span class="token keyword">data</span>

</code></pre>

<p>Edit the exports file: <code>vi /etc/exports</code></p>

<pre><code class="prism language-powershell"><span class="token operator">/</span>usr/local/forlan-<span class="token keyword">data</span> 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>56<span class="token punctuation">.</span>200/24<span class="token punctuation">(</span>rw<span class="token punctuation">,</span>sync<span class="token punctuation">)</span>

</code></pre>

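<p>Parameter notes:</p>

<ul>

<li>/24 is the prefix length (subnet mask 255.255.255.0). The first 24 bits are the network part and the remaining 8 bits are the host part. The range therefore contains 2^8 = 256 addresses, of which 254 are usable as host addresses because the network and broadcast addresses are reserved.</li>

<li>192.168.56.200/24 therefore covers the IP addresses 192.168.56.0 through 192.168.56.255<br> (rw,sync) is the access mode</li>

<li>rw lets clients read and write the shared directory.</li>

<li>sync makes the server commit written data to disk before it replies to the client, so writes are durable.</li>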

</ul>
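<p>The /24 arithmetic can be checked with a small pure-Bash sketch. It converts the address to a 32-bit integer, applies the mask, and prints the first address, the last address, and the total count. Usable host addresses are the count minus 2. The function names are hypothetical, and the sketch assumes plain dotted-decimal input without leading zeros:</p>

```bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted form.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print "first-address last-address count" for a CIDR block such as 192.168.56.200/24.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local addr mask network broadcast
  addr=$(ip_to_int "$ip")
  # Top $prefix bits set, truncated to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  network=$(( addr & mask ))
  broadcast=$(( network | (~mask & 0xFFFFFFFF) ))
  echo "$(int_to_ip "$network") $(int_to_ip "$broadcast") $(( broadcast - network + 1 ))"
}

cidr_range 192.168.56.200/24   # 192.168.56.0 192.168.56.255 256
```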

<p>Start the services and enable them at boot:</p>

<pre><code class="prism language-powershell">systemctl <span class="token function">start</span> nfs<span class="token punctuation">.</span>service

systemctl <span class="token function">start</span> rpcbind<span class="token punctuation">.</span>service

systemctl enable nfs<span class="token punctuation">.</span>service

systemctl enable rpcbind<span class="token punctuation">.</span>service

</code></pre>

<p>Check that the configuration took effect with <code>exportfs</code>. If the export is listed, it worked:</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master local]</span><span class="token comment"># exportfs</span>

<span class="token operator">/</span>usr/local/forlan-<span class="token keyword">data</span>

192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>56<span class="token punctuation">.</span>200/24

</code></pre>

<p>If the exports file changes later, run <code>exportfs -ra</code> to re-export all directories and reload the NFS exports.</p>

<h3>2. node</h3>

<p>Install the components:</p>

<pre><code class="prism language-powershell">yum install <span class="token operator">-</span>y nfs-utils rpcbind

</code></pre>

<p>Check that the directory is exported: <code>showmount -e 192.168.56.200</code></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@node1 /]</span><span class="token comment"># showmount -e 192.168.56.200</span>

Export list <span class="token keyword">for</span> 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>56<span class="token punctuation">.</span>200:

<span class="token operator">/</span>usr/local/forlan-<span class="token keyword">data</span> 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>56<span class="token punctuation">.</span>200/24

</code></pre>

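<p>Mount it. The mount point /forlan-web must already exist; if it does not, create it first with <code>mkdir -p /forlan-web</code>:</p>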

<pre><code class="prism language-powershell"><span class="token function">mount</span> 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>56<span class="token punctuation">.</span>200:<span class="token operator">/</span>usr/local/forlan-<span class="token keyword">data</span> <span class="token operator">/</span>forlan-web

</code></pre>
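<p>A mount issued by hand does not survive a reboot of the node. One common way to make it permanent is an /etc/fstab entry. The following is a sketch, assuming Bash, of a helper that prints such an entry. <code>nfs_fstab_line</code> is a hypothetical name:</p>

```bash
# Hypothetical helper: print an /etc/fstab line that mounts an NFS export at boot.
# fstab fields: device, mount point, file system type, options, dump flag, fsck order.
nfs_fstab_line() {
  local server=$1 export_path=$2 mount_point=$3
  # _netdev marks a network file system, so the mount waits for networking.
  printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$export_path" "$mount_point"
}

nfs_fstab_line 192.168.56.200 /usr/local/forlan-data /forlan-web
# 192.168.56.200:/usr/local/forlan-data /forlan-web nfs defaults,_netdev 0 0
```

<p>After checking the output, append it with <code>nfs_fstab_line 192.168.56.200 /usr/local/forlan-data /forlan-web &gt;&gt; /etc/fstab</code> and run <code>mount -a</code>. That way, a mistake in the entry surfaces now rather than at the next boot.</p>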

<h3>3. Verification</h3>

<p>Add an index.jsp file to the master's shared directory. If the node directories show it too, sharing works:</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master forlan-data]</span><span class="token comment"># ll</span>

total 0

<span class="token namespace">[root@master forlan-data]</span><span class="token comment"># vi /usr/local/forlan-data/index.jsp</span>

<span class="token namespace">[root@master forlan-data]</span><span class="token comment"># ls</span>

index<span class="token punctuation">.</span>jsp

<span class="token namespace">[root@node1 forlan-web]</span><span class="token comment"># ls</span>

index<span class="token punctuation">.</span>jsp

<span class="token namespace">[root@node2 forlan-web]</span><span class="token comment"># ls</span>

index<span class="token punctuation">.</span>jsp

</code></pre>

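<h2>VI. Adjusting the cluster configuration and limiting resources</h2>

<p>We originally deployed 2 Tomcat instances. What if we now need 3? At a minimum, what resources must the cluster provide for that to work?</p>

<h3>1. Adding resource limits</h3>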

<ul>

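<li>requests: the minimum resources the container needs. The scheduler only places a Pod on a node with enough unreserved capacity to cover them.</li>

<li>limits: the maximum resources the container may use</li>

<li>cpu does not have to be an integer. It is measured in cores, so 0.5 is also valid (equivalent to 500m)<br> <a href="http://img.e-com-net.com/image/info8/52f014afcdbc4b73b4ba9f762e281a4f.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/52f014afcdbc4b73b4ba9f762e281a4f.jpg" alt="Deployment脚本部署Tomcat集群:外部访问、负载均衡、文件共享及集群配置调整_第5张图片" width="358" height="332" style="border:1px solid black;"></a></li>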

</ul>
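<p>Kubernetes quantities use fixed suffixes. CPU is given in cores or millicores (<code>m</code>, where 1 core = 1000m). Memory uses binary suffixes such as <code>Mi</code> (2^20 bytes) and <code>Gi</code> (2^30 bytes), which differ from the decimal <code>M</code> and <code>G</code>. The following is a small Bash sketch of the conversions behind the values used below. The function names are hypothetical, and only the suffixes shown are handled:</p>

```bash
# Convert a Kubernetes CPU quantity (cores like 1 or 0.5, or millicores like 500m) to millicores.
cpu_to_millicores() {
  local q=$1
  if [[ $q == *m ]]; then
    echo "${q%m}"
  else
    # awk handles fractional cores; adding 0.5 before %d rounds to the nearest millicore.
    awk -v c="$q" 'BEGIN { printf "%d\n", c * 1000 + 0.5 }'
  fi
}

# Convert an integer memory quantity with a Gi or Mi suffix to bytes.
mem_to_bytes() {
  local q=$1
  case $q in
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *)   echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
}

cpu_to_millicores 0.5   # 500
cpu_to_millicores 2     # 2000
mem_to_bytes 500Mi      # 524288000
mem_to_bytes 1024Mi     # 1073741824
```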

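<h3>2. Adjusting the replica count</h3>

<p>Edit the deployment file (<code>vi /k8s/tomcat-deploy.yml</code>), change it to <code>replicas: 3</code>, and add the <code>resources</code> requests and limits:</p>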

<pre><code class="prism language-powershell">apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: tomcat-cluster
    spec:
      volumes:
      - name: web-app
        hostPath:
          path: /forlan-web   # same host directory as in section IV (the NFS mount point)
      containers:
      - name: tomcat-cluster
        image: tomcat:latest
        resources:
          requests:           # minimum reserved for each Pod
            cpu: 1
            memory: 500Mi
          limits:             # maximum each Pod may use
            cpu: 2
            memory: 1024Mi
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: web-app
          mountPath: /usr/local/tomcat/webapps
</code></pre>

<p>Update the cluster configuration: <code>kubectl apply -f tomcat-deploy.yml</code></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master k8s]</span><span class="token comment"># kubectl apply -f tomcat-deploy.yml</span>

deployment<span class="token punctuation">.</span>extensions/tomcat-deploy configured

</code></pre>

<p>Check the deployment with <code>kubectl get deployment</code>. It has grown from 2 to 3 replicas:</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master k8s]</span><span class="token comment"># kubectl get deployment</span>

NAME READY UP-TO-DATE AVAILABLE AGE

tomcat-deploy 3/3 3 3 100m

</code></pre>

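<p><code>Note: by default, the k8s scheduler places the new Pod on a less-loaded node, meaning one with more unrequested CPU and memory as judged by Pod requests rather than live usage</code></p>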
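<p>This answers the question at the start of this section. The scheduler only places a Pod on a node whose unrequested allocatable capacity covers the Pod's <code>requests</code>, here 1 CPU and 500Mi. Three replicas therefore need at least 3 CPUs and 1500Mi free across the nodes, and each Pod's full request must fit on a single node. The following is a simplified Bash sketch of the CPU side of that check. The function name and the per-node figures are hypothetical, and memory and all other scheduling rules are ignored:</p>

```bash
# Simplified capacity check: how many Pods with a given CPU request fit on the nodes?
# Arguments: the per-Pod CPU request in millicores, then each node's free allocatable
# CPU in millicores (allocatable minus what other Pods already request).
pods_that_fit() {
  local request=$1; shift
  (( request > 0 )) || return 1
  local total=0 free
  for free in "$@"; do
    # A Pod cannot be split across nodes, so count whole Pods per node.
    total=$(( total + free / request ))
  done
  echo "$total"
}

# Example: cpu: 1 (1000m) per Pod, two nodes with 1500m and 1800m free.
pods_that_fit 1000 1500 1800   # 2, so a third replica would stay Pending
```

<p>To see the real free capacity of a node, check the Allocatable and "Allocated resources" sections of <code>kubectl describe node &lt;node name&gt;</code>. A replica that fits on no node stays in the Pending state.</p>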

<h2>Summary</h2>

<h3>1. ERROR: cannot verify www.boutell.co.uk's certificate, issued by ‘/C=US/O=Let's Encrypt/CN=R3’: Issued certificate has expired. To connect to www.boutell.co.uk insecurely, use `--no-check-certificate'.</h3>

<p>Fix: append <code>--no-check-certificate</code> to the command: <code>wget http://www.boutell.com/rinetd/http/rinetd.tar.gz --no-check-certificate</code></p>

<h3>2. The k8s master cannot ping the ClusterIP</h3>

<p>Problem details (kube-proxy log):</p>

<blockquote>

<p>W1124 14:24:05.207176 1 proxier.go:493] Failed to load kernel module ip_vs with modprobe. You can ignore this message when kube-proxy is running inside container without mounting /lib/modules<br> W1124 14:24:05.208150 1 proxier.go:493] Failed to load kernel module ip_vs_rr with modprobe. You can ignore this message when kube-proxy is running inside container without mounting /lib/modules<br> W1124 14:24:05.208974 1 proxier.go:493] Failed to load kernel module ip_vs_wrr with modprobe. You can ignore this message when kube-proxy is running inside container without mounting /lib/modules<br> W1124 14:24:05.209778 1 proxier.go:493] Failed to load kernel module ip_vs_sh with modprobe. You can ignore this message when kube-proxy is running inside container without mounting /lib/modules<br> W1124 14:24:05.214337 1 server_others.go:267] Flag proxy-mode="" unknown, assuming iptables proxy</p>

</blockquote>

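<p>Fix: the log shows that kube-proxy could not load the IPVS kernel modules and fell back to iptables mode. In iptables mode, a ClusterIP exists only as NAT rules for the Service's ports, so nothing answers a ping (ICMP) sent to it. In IPVS mode, kube-proxy binds ClusterIPs to a local dummy interface (kube-ipvs0), which does respond. The steps are:</p>

<ul>

<li>Load the IPVS kernel modules</li>

</ul>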

<pre><code class="prism language-powershell">cat &gt; /etc/sysconfig/modules/ipvs.modules &lt;&lt;EOF
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules &amp;&amp; bash /etc/sysconfig/modules/ipvs.modules &amp;&amp; lsmod | grep -e ip_vs -e nf_conntrack_ipv4
</code></pre>
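<p>The module list above loads <code>nf_conntrack_ipv4</code>, which exists only on older kernels. Upstream Linux 4.19 merged it into <code>nf_conntrack</code>, so <code>modprobe nf_conntrack_ipv4</code> fails on newer kernels. The following Bash sketch picks the module name from the kernel release and assumes GNU <code>sort -V</code>. <code>conntrack_module</code> is a hypothetical helper. Distribution kernels may backport the change, so <code>modinfo nf_conntrack_ipv4</code> remains the definitive check on a given machine:</p>

```bash
# Pick the conntrack module name for a kernel release (defaults to the running kernel).
conntrack_module() {
  local release=${1:-$(uname -r)}
  local version=${release%%-*}   # "3.10.0-1160.el7.x86_64" -> "3.10.0"
  # sort -V orders version strings; if 4.19 sorts first, the kernel is 4.19 or newer.
  if [[ $(printf '%s\n' 4.19 "$version" | sort -V | head -n1) == 4.19 ]]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

conntrack_module 3.10.0-1160.el7.x86_64   # nf_conntrack_ipv4
conntrack_module 5.4.0-150-generic        # nf_conntrack
```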

<ul>

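<li>Change kube-proxy's mode. On a kubeadm-installed cluster, the setting is in the <code>kube-proxy</code> ConfigMap: <code>kubectl edit configmap kube-proxy -n kube-system</code></li>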

</ul>

<pre><code class="prism language-powershell">mode: "ipvs"

</code></pre>
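<p>The <code>mode</code> key sits in the <code>config.conf</code> data of the kube-proxy ConfigMap. Instead of editing it interactively, you can script the change. The following sketch assumes GNU or BSD sed and assumes that the only line whose key is exactly <code>mode</code> is the proxy-mode setting. <code>set_ipvs_mode</code> is a hypothetical name:</p>

```bash
# Rewrite the kube-proxy "mode:" setting to ipvs in YAML read from stdin.
# Only lines whose key is exactly "mode" are touched; their indentation is kept.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode:[[:space:]]*.*$/\1mode: "ipvs"/'
}

# Usage on the master (reads, patches, and writes back the ConfigMap):
#   kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
```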

<ul>

<li>Restart kube-proxy by deleting its Pods; the DaemonSet recreates them with the new configuration</li>

</ul>

<pre><code class="prism language-powershell">kubectl get pod <span class="token operator">-</span>n kube-system <span class="token punctuation">|</span> grep kube-proxy <span class="token punctuation">|</span>awk <span class="token string">'{system("kubectl delete pod "$1" -n kube-system")}'</span>

</code></pre>
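<p>The awk one-liner above builds and runs a shell command for every matching line. The following variant keeps selection and deletion separate. It assumes Bash and GNU xargs, and <code>kube_proxy_pods</code> is a hypothetical name:</p>

```bash
# Hypothetical helper: print kube-proxy Pod names from `kubectl get pod` output on stdin.
# It only prints names; deleting them stays a separate, reviewable step.
kube_proxy_pods() {
  # NR > 1 skips the header row; match Pod names that start with "kube-proxy-".
  awk 'NR > 1 && $1 ~ /^kube-proxy-/ { print $1 }'
}

# Usage on the master (GNU xargs -r skips the command when there is no input):
#   kubectl get pod -n kube-system | kube_proxy_pods | xargs -r kubectl delete pod -n kube-system
# On kubeadm clusters the Pods carry the label k8s-app=kube-proxy, so this also works:
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```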

</div>

</div>

</div>

</div>

</div>


</div>


</div>

</div>

</div>

<div class="container">


<div id="paradigm-article-related">

<div class="recommend-post mb30">

<ul class="widget-links">


<li><a href="/article/1943718693351518208.htm"

title="SkyWalking实现微服务链路追踪的埋点方案" target="_blank">SkyWalking实现微服务链路追踪的埋点方案</a>

<span class="text-muted">MenzilBiz</span>

<a class="tag" taget="_blank" href="/search/%E6%9C%8D%E5%8A%A1%E5%99%A8/1.htm">服务器</a><a class="tag" taget="_blank" href="/search/%E8%BF%90%E7%BB%B4/1.htm">运维</a><a class="tag" taget="_blank" href="/search/%E5%BE%AE%E6%9C%8D%E5%8A%A1/1.htm">微服务</a><a class="tag" taget="_blank" href="/search/skywalking/1.htm">skywalking</a>

<div>SkyWalking实现微服务链路追踪的埋点方案一、SkyWalking简介SkyWalking是一款开源的APM(应用性能监控)系统,特别为微服务、云原生架构和容器化(Docker/Kubernetes)应用而设计。它主要功能包括分布式追踪、服务网格遥测分析、指标聚合和可视化等。SkyWalking支持多种语言(Java、Go、Python等)和协议(HTTP、gRPC等),能够提供端到端的调用</div>

</li>

<li><a href="/article/1943716673357934592.htm"

title="揭秘华为欧拉:不只是操作系统,更是云时代的技能认证体系" target="_blank">揭秘华为欧拉:不只是操作系统,更是云时代的技能认证体系</a>

<span class="text-muted"></span>

<div>揭秘华为欧拉:不只是操作系统,更是云时代的技能认证体系作为一名深耕IT培训领域的博主,今天带大家客观认识“华为欧拉”——这个在云计算领域频频出现的名词。一、华为欧拉究竟是什么?严格来说,“华为欧拉”核心包含两部分1.openEuler操作系统:一个由华为支持的企业级开源Linux操作系统发行版,专为云计算、云原生平台等场景设计优化。2.华为openEuler认证体系(HCIA/HCIP/HCIE-</div>

</li>

<li><a href="/article/1943701673738301440.htm"

title="Maven 构建性能优化深度剖析:原理、策略与实践" target="_blank">Maven 构建性能优化深度剖析:原理、策略与实践</a>

<span class="text-muted">越重天</span>

<a class="tag" taget="_blank" href="/search/Java/1.htm">Java</a><a class="tag" taget="_blank" href="/search/Maven%E5%AE%9E%E6%88%98/1.htm">Maven实战</a><a class="tag" taget="_blank" href="/search/maven/1.htm">maven</a><a class="tag" taget="_blank" href="/search/%E6%80%A7%E8%83%BD%E4%BC%98%E5%8C%96/1.htm">性能优化</a><a class="tag" taget="_blank" href="/search/java/1.htm">java</a>

<div>博主简介:CSDN博客专家,历代文学网(PC端可以访问:https://literature.sinhy.com/#/?__c=1000,移动端可微信小程序搜索“历代文学”)总架构师,15年工作经验,精通Java编程,高并发设计,Springboot和微服务,熟悉Linux,ESXI虚拟化以及云原生Docker和K8s,热衷于探索科技的边界,并将理论知识转化为实际应用。保持对新技术的好奇心,乐于分</div>

</li>

<li><a href="/article/1943691341896675328.htm"

title="专题:2025云计算与AI技术研究趋势报告|附200+份报告PDF、原数据表汇总下载" target="_blank">专题:2025云计算与AI技术研究趋势报告|附200+份报告PDF、原数据表汇总下载</a>

<span class="text-muted"></span>

<div>原文链接:https://tecdat.cn/?p=42935关键词:2025,云计算,AI技术,市场趋势,深度学习,公有云,研究报告云计算和AI技术正以肉眼可见的速度重塑商业世界。过去十年,全球云服务收入激增8倍,中国云计算市场规模突破6000亿元,而深度学习算法的应用量更是暴涨400倍。这些数字背后,是企业从“自建机房”到“云原生开发”的转型,是AI从“实验室”走向“产业级应用”的跨越。本报告</div>

</li>

<li><a href="/article/1943656939288326144.htm"

title="如何通过YashanDB数据库实现企业级数据分区管理?" target="_blank">如何通过YashanDB数据库实现企业级数据分区管理?</a>

<span class="text-muted"></span>

<a class="tag" taget="_blank" href="/search/%E6%95%B0%E6%8D%AE%E5%BA%93/1.htm">数据库</a>

<div>在当今大数据时代,企业面临着海量数据的管理和优化访问的问题。如何有效地组织和划分庞大的数据集,以提升查询性能和运维效率,成为数据库系统设计的核心挑战。数据分区技术作为解决大规模数据处理的关键手段,能够显著减少无关数据的访问,优化资源利用率。本文聚焦于YashanDB数据库,详细解析其数据分区管理的实现机制及应用,为企业级应用提供高效、灵活的数据分区解决方案。YashanDB中的数据分区基础Yash</div>

</li>

<li><a href="/article/1943655918688333824.htm"

title="【kafka】在Linux系统中部署配置Kafka的详细用法教程分享" target="_blank">【kafka】在Linux系统中部署配置Kafka的详细用法教程分享</a>

<span class="text-muted">景天科技苑</span>

<a class="tag" taget="_blank" href="/search/linux%E5%9F%BA%E7%A1%80%E4%B8%8E%E8%BF%9B%E9%98%B6/1.htm">linux基础与进阶</a><a class="tag" taget="_blank" href="/search/shell%E8%84%9A%E6%9C%AC%E7%BC%96%E5%86%99%E5%AE%9E%E6%88%98/1.htm">shell脚本编写实战</a><a class="tag" taget="_blank" href="/search/kafka/1.htm">kafka</a><a class="tag" taget="_blank" href="/search/linux/1.htm">linux</a><a class="tag" taget="_blank" href="/search/%E5%88%86%E5%B8%83%E5%BC%8F/1.htm">分布式</a><a class="tag" taget="_blank" href="/search/kafka%E5%AE%89%E8%A3%85%E9%85%8D%E7%BD%AE/1.htm">kafka安装配置</a><a class="tag" taget="_blank" href="/search/kafka%E4%BC%98%E5%8C%96/1.htm">kafka优化</a>

<div>✨✨欢迎大家来到景天科技苑✨✨养成好习惯,先赞后看哦~作者简介:景天科技苑《头衔》:大厂架构师,华为云开发者社区专家博主,阿里云开发者社区专家博主,CSDN全栈领域优质创作者,掘金优秀博主,51CTO博客专家等。《博客》:Python全栈,PyQt5和Tkinter桌面应用开发,小程序开发,人工智能,js逆向,App逆向,网络系统安全,云原生K8S,Prometheus监控,数据分析,Django</div>

</li>

<li><a href="/article/1943651257038204928.htm"

title="探索 Golang 与 Docker 集成的无限可能" target="_blank">探索 Golang 与 Docker 集成的无限可能</a>

<span class="text-muted">Golang编程笔记</span>

<a class="tag" taget="_blank" href="/search/golang/1.htm">golang</a><a class="tag" taget="_blank" href="/search/docker/1.htm">docker</a><a class="tag" taget="_blank" href="/search/%E5%BC%80%E5%8F%91%E8%AF%AD%E8%A8%80/1.htm">开发语言</a><a class="tag" taget="_blank" href="/search/ai/1.htm">ai</a>

<div>探索Golang与Docker集成的无限可能关键词:Golang、Docker、容器化、微服务、云原生、镜像优化、CI/CD摘要:本文将带你走进Golang与Docker集成的奇妙世界。我们会从“为什么需要这对组合”讲起,用生活故事类比核心概念,拆解Go静态编译与Docker容器化的“天作之合”,通过实战案例演示如何用Docker高效打包Go应用,并探讨它们在云原生时代的无限可能。无论你是Go开发</div>

</li>

<li><a href="/article/1943650626281992192.htm"

title="云原生技术与应用-Docker高级管理--Dockerfile镜像制作" target="_blank">云原生技术与应用-Docker高级管理--Dockerfile镜像制作</a>

<span class="text-muted">慕桉 ~</span>

<a class="tag" taget="_blank" href="/search/%E4%BA%91%E5%8E%9F%E7%94%9F/1.htm">云原生</a><a class="tag" taget="_blank" href="/search/docker/1.htm">docker</a><a class="tag" taget="_blank" href="/search/%E5%AE%B9%E5%99%A8/1.htm">容器</a>

<div>目录一.Docker镜像管理1.Docker镜像结构2.Dockerfile介绍二.Dockerfile实施1.构建nginx容器2.构建Tomcat容器3.构建mysql容器三.Dockerfile语法注意事项1.指令书写范围2.基础镜像选择3.文件操作注意4.执行命令要点5.环境变量和参数设置6.缓存利用与清理一.Docker镜像管理Docker镜像除了是Docker的核心技术之外,也是应用发</div>

</li>

<li><a href="/article/1943647098150907904.htm"

title="国产开源高性能对象存储RustFS保姆级上手指南" target="_blank">国产开源高性能对象存储RustFS保姆级上手指南</a>

<span class="text-muted">光爷不秃</span>

<a class="tag" taget="_blank" href="/search/%E5%AF%B9%E8%B1%A1%E5%AD%98%E5%82%A8/1.htm">对象存储</a><a class="tag" taget="_blank" href="/search/rust/1.htm">rust</a><a class="tag" taget="_blank" href="/search/%E5%9B%BD%E4%BA%A7%E5%BC%80%E6%BA%90%E8%BD%AF%E4%BB%B6/1.htm">国产开源软件</a><a class="tag" taget="_blank" href="/search/rust/1.htm">rust</a><a class="tag" taget="_blank" href="/search/%E4%BA%91%E8%AE%A1%E7%AE%97/1.htm">云计算</a><a class="tag" taget="_blank" href="/search/%E5%BC%80%E6%BA%90%E8%BD%AF%E4%BB%B6/1.htm">开源软件</a><a class="tag" taget="_blank" href="/search/github/1.htm">github</a><a class="tag" taget="_blank" href="/search/%E5%BC%80%E6%BA%90/1.htm">开源</a><a class="tag" taget="_blank" href="/search/%E6%95%B0%E6%8D%AE%E4%BB%93%E5%BA%93/1.htm">数据仓库</a><a class="tag" taget="_blank" href="/search/database/1.htm">database</a>

<div>在云计算与大数据爆发的时代,企业和开发者对存储方案的要求愈发严苛——不仅要能扛住海量数据的读写压力,还得兼顾安全性、可扩展性和兼容性。今天给大家介绍一款基于Rust语言开发的开源分布式对象存储系统——RustFS,它不仅是MinIO的国产化优秀替代方案,更是AI、大数据和云原生场景的理想之选。本文将从基础介绍到实战操作,带大家快速上手这款"优雅的存储解决方案"。一、RustFS核心特性解析Rust</div>

</li>

<li><a href="/article/1943644954844786688.htm"

title="【大家的项目】helyim: 纯 Rust 实现的分布式对象存储系统" target="_blank">【大家的项目】helyim: 纯 Rust 实现的分布式对象存储系统</a>

<span class="text-muted"></span>

<div>helyim是使用rust重写的seaweedfs,具体架构可以参考Facebook发表的haystack和f4论文。主要设计目标为:精简文件元数据信息,去掉对象存储不需要的POSIX语义(如文件权限)小文件合并成大文件,从而减小元数据数,使其完全存在内存中,以省去获取文件元数据的磁盘IO支持地域容灾,包括IDC容灾和机架容灾架构简单,易于实现和运维支持的特性:支持使用Http的文件上传,下载,删</div>

</li>

<li><a href="/article/1943639278022094848.htm"

title="AI技术全景图鉴:从模型开发到落地部署的全链路拆解" target="_blank">AI技术全景图鉴:从模型开发到落地部署的全链路拆解</a>

<span class="text-muted">大模型玩家</span>

<a class="tag" taget="_blank" href="/search/%E4%BA%BA%E5%B7%A5%E6%99%BA%E8%83%BD/1.htm">人工智能</a><a class="tag" taget="_blank" href="/search/langchain/1.htm">langchain</a><a class="tag" taget="_blank" href="/search/%E5%A4%A7%E6%A8%A1%E5%9E%8B/1.htm">大模型</a><a class="tag" taget="_blank" href="/search/%E4%BA%A7%E5%93%81%E7%BB%8F%E7%90%86/1.htm">产品经理</a><a class="tag" taget="_blank" href="/search/%E5%AD%A6%E4%B9%A0/1.htm">学习</a><a class="tag" taget="_blank" href="/search/ai/1.htm">ai</a><a class="tag" taget="_blank" href="/search/%E7%A8%8B%E5%BA%8F%E5%91%98/1.htm">程序员</a>

<div>人工智能(AI)技术的快速发展,使得企业在AI模型的开发、训练、部署和运维过程中面临前所未有的复杂性。从数据管理、模型训练到应用落地,再到算力调度和智能运维,一个完整的AI架构需要涵盖多个层面,确保AI技术能够高效、稳定地运行。本文将基于AI技术架构全景图,深入剖析AI的开发工具、AI平台、算力与框架、智能运维四大核心部分,帮助大家系统性地理解AI全生命周期管理。一、AI开发工具:赋能高效开发,提</div>

</li>

<li><a href="/article/1943622504744546304.htm"

title="突破传统:Dell R730服务器RAID 5配置与智能监控全解析" target="_blank">突破传统:Dell R730服务器RAID 5配置与智能监控全解析</a>

<span class="text-muted">芯作者</span>

<a class="tag" taget="_blank" href="/search/D2%EF%BC%9Aubuntu/1.htm">D2:ubuntu</a><a class="tag" taget="_blank" href="/search/%E6%9C%8D%E5%8A%A1%E5%99%A8/1.htm">服务器</a><a class="tag" taget="_blank" href="/search/linux/1.htm">linux</a><a class="tag" taget="_blank" href="/search/ubuntu/1.htm">ubuntu</a>

<div>在现代数据中心运维中,合理的存储配置是保障业务连续性的基石。今天,我们将深入探索DellPowerEdgeR730服务器的RAID5配置技巧,并结合热备盘策略、自动化监控脚本以及性能调优方案,为您呈现一份别开生面的技术指南。一、为什么RAID5+热备盘是企业级存储的黄金组合?RAID5通过分布式奇偶校验实现数据冗余,允许单块硬盘故障时不丢失数据。其存储效率公式为:Efficiency=\frac{</div>

</li>

<li><a href="/article/47.htm"

title="jdk tomcat 环境变量配置" target="_blank">jdk tomcat 环境变量配置</a>

<span class="text-muted">Array_06</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/jdk/1.htm">jdk</a><a class="tag" taget="_blank" href="/search/tomcat/1.htm">tomcat</a>

<div>Win7 下如何配置java环境变量

1。准备jdk包,win7系统,tomcat安装包(均上网下载即可)

2。进行对jdk的安装,尽量为默认路径(但要记住啊!!以防以后配置用。。。)

3。分别配置高级环境变量。

电脑-->右击属性-->高级环境变量-->环境变量。

分别配置 :

path

&nbs</div>

</li>

<li><a href="/article/174.htm"

title="Spring调SDK包报java.lang.NoSuchFieldError错误" target="_blank">Spring调SDK包报java.lang.NoSuchFieldError错误</a>

<span class="text-muted">bijian1013</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/spring/1.htm">spring</a>

<div> 在工作中调另一个系统的SDK包,出现如下java.lang.NoSuchFieldError错误。

org.springframework.web.util.NestedServletException: Handler processing failed; nested exception is java.l</div>

</li>

<li><a href="/article/301.htm"

title="LeetCode[位运算] - #136 数组中的单一数" target="_blank">LeetCode[位运算] - #136 数组中的单一数</a>

<span class="text-muted">Cwind</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/%E9%A2%98%E8%A7%A3/1.htm">题解</a><a class="tag" taget="_blank" href="/search/%E4%BD%8D%E8%BF%90%E7%AE%97/1.htm">位运算</a><a class="tag" taget="_blank" href="/search/LeetCode/1.htm">LeetCode</a><a class="tag" taget="_blank" href="/search/Algorithm/1.htm">Algorithm</a>

<div>原题链接:#136 Single Number

要求:

给定一个整型数组,其中除了一个元素之外,每个元素都出现两次。找出这个元素

注意:算法的时间复杂度应为O(n),最好不使用额外的内存空间

难度:中等

分析:

题目限定了线性的时间复杂度,同时不使用额外的空间,即要求只遍历数组一遍得出结果。由于异或运算 n XOR n = 0, n XOR 0 = n,故将数组中的每个元素进</div>

</li>

<li><a href="/article/428.htm"

title="qq登陆界面开发" target="_blank">qq登陆界面开发</a>

<span class="text-muted">15700786134</span>

<a class="tag" taget="_blank" href="/search/qq/1.htm">qq</a>

<div>今天我们来开发一个qq登陆界面,首先写一个界面程序,一个界面首先是一个Frame对象,即是一个窗体。然后在这个窗体上放置其他组件。代码如下:

public class First { public void initul(){ jf=ne</div>

</li>

<li><a href="/article/555.htm"

title="Linux的程序包管理器RPM" target="_blank">Linux的程序包管理器RPM</a>

<span class="text-muted">被触发</span>

<a class="tag" taget="_blank" href="/search/linux/1.htm">linux</a>

<div>在早期我们使用源代码的方式来安装软件时,都需要先把源程序代码编译成可执行的二进制安装程序,然后进行安装。这就意味着每次安装软件都需要经过预处理-->编译-->汇编-->链接-->生成安装文件--> 安装,这个复杂而艰辛的过程。为简化安装步骤,便于广大用户的安装部署程序,程序提供商就在特定的系统上面编译好相关程序的安装文件并进行打包,提供给大家下载,我们只需要根据自己的</div>

</li>

<li><a href="/article/682.htm"

title="socket通信遇到EOFException" target="_blank">socket通信遇到EOFException</a>

<span class="text-muted">肆无忌惮_</span>

<a class="tag" taget="_blank" href="/search/EOFException/1.htm">EOFException</a>

<div>java.io.EOFException

at java.io.ObjectInputStream$PeekInputStream.readFully(ObjectInputStream.java:2281)

at java.io.ObjectInputStream$BlockDataInputStream.readShort(ObjectInputStream.java:</div>

</li>

<li><a href="/article/809.htm"

title="基于spring的web项目定时操作" target="_blank">基于spring的web项目定时操作</a>

<span class="text-muted">知了ing</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/Web/1.htm">Web</a>

<div>废话不多说,直接上代码,很简单 配置一下项目启动就行

1,web.xml

<?xml version="1.0" encoding="UTF-8"?>

<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="h</div>

</li>

<li><a href="/article/936.htm"

title="树形结构的数据库表Schema设计" target="_blank">树形结构的数据库表Schema设计</a>

<span class="text-muted">矮蛋蛋</span>

<a class="tag" taget="_blank" href="/search/schema/1.htm">schema</a>

<div>原文地址:

http://blog.csdn.net/MONKEY_D_MENG/article/details/6647488

程序设计过程中,我们常常用树形结构来表征某些数据的关联关系,如企业上下级部门、栏目结构、商品分类等等,通常而言,这些树状结构需要借助于数据库完成持久化。然而目前的各种基于关系的数据库,都是以二维表的形式记录存储数据信息,</div>

</li>

<li><a href="/article/1063.htm"

title="maven将jar包和源码一起打包到本地仓库" target="_blank">maven将jar包和源码一起打包到本地仓库</a>

<span class="text-muted">alleni123</span>

<a class="tag" taget="_blank" href="/search/maven/1.htm">maven</a>

<div>http://stackoverflow.com/questions/4031987/how-to-upload-sources-to-local-maven-repository

<project>

...

<build>

<plugins>

<plugin>

<groupI</div>

</li>

<li><a href="/article/1190.htm"

title="java IO操作 与 File 获取文件或文件夹的大小,可读,等属性!!!" target="_blank">java IO操作 与 File 获取文件或文件夹的大小,可读,等属性!!!</a>

<span class="text-muted">百合不是茶</span>

<div>类 File

File是指文件和目录路径名的抽象表示形式。

1,何为文件:

标准文件(txt doc mp3...)

目录文件(文件夹)

虚拟内存文件

2,File类中有可以创建文件的 createNewFile()方法,在创建新文件的时候需要try{} catch(){}因为可能会抛出异常;也有可以判断文件是否是一个标准文件的方法isFile();这些防抖都</div>

</li>

<li><a href="/article/1317.htm"

title="Spring注入有继承关系的类(2)" target="_blank">Spring注入有继承关系的类(2)</a>

<span class="text-muted">bijian1013</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/spring/1.htm">spring</a>

<div>被注入类的父类有相应的属性,Spring可以直接注入相应的属性,如下所例:1.AClass类

package com.bijian.spring.test4;

public class AClass {

private String a;

private String b;

public String getA() {

retu</div>

</li>

<li><a href="/article/1444.htm"

title="30岁转型期你能否成为成功人士" target="_blank">30岁转型期你能否成为成功人士</a>

<span class="text-muted">bijian1013</span>

<a class="tag" taget="_blank" href="/search/%E6%88%90%E9%95%BF/1.htm">成长</a><a class="tag" taget="_blank" href="/search/%E5%8A%B1%E5%BF%97/1.htm">励志</a>

<div> 很多人由于年轻时走了弯路,到了30岁一事无成,这样的例子大有人在。但同样也有一些人,整个职业生涯都发展得很优秀,到了30岁已经成为职场的精英阶层。由于做猎头的原因,我们接触很多30岁左右的经理人,发现他们在职业发展道路上往往有很多致命的问题。在30岁之前,他们的职业生涯表现很优秀,但从30岁到40岁这一段,很多人</div>

</li>

<li><a href="/article/1571.htm"

title="【Velocity四】Velocity与Java互操作" target="_blank">【Velocity四】Velocity与Java互操作</a>

<span class="text-muted">bit1129</span>

<a class="tag" taget="_blank" href="/search/velocity/1.htm">velocity</a>

<div>Velocity出现的目的用于简化基于MVC的web应用开发,用于替代JSP标签技术,那么Velocity如何访问Java代码.本篇继续以Velocity三http://bit1129.iteye.com/blog/2106142中的例子为基础,

POJO

package com.tom.servlets;

public</div>

</li>

<li><a href="/article/1698.htm"

title="【Hive十一】Hive数据倾斜优化" target="_blank">【Hive十一】Hive数据倾斜优化</a>

<span class="text-muted">bit1129</span>

<a class="tag" taget="_blank" href="/search/hive/1.htm">hive</a>

<div>什么是Hive数据倾斜问题

操作:join,group by,count distinct

现象:任务进度长时间维持在99%(或100%),查看任务监控页面,发现只有少量(1个或几个)reduce子任务未完成;查看未完成的子任务,可以看到本地读写数据量积累非常大,通常超过10GB可以认定为发生数据倾斜。

原因:key分布不均匀

倾斜度衡量:平均记录数超过50w且</div>

</li>

<li><a href="/article/1825.htm"

title="在nginx中集成lua脚本:添加自定义Http头,封IP等" target="_blank">在nginx中集成lua脚本:添加自定义Http头,封IP等</a>

<span class="text-muted">ronin47</span>

<a class="tag" taget="_blank" href="/search/nginx+lua+csrf/1.htm">nginx lua csrf</a>

<div>Lua是一个可以嵌入到Nginx配置文件中的动态脚本语言,从而可以在Nginx请求处理的任何阶段执行各种Lua代码。刚开始我们只是用Lua 把请求路由到后端服务器,但是它对我们架构的作用超出了我们的预期。下面就讲讲我们所做的工作。 强制搜索引擎只索引mixlr.com

Google把子域名当作完全独立的网站,我们不希望爬虫抓取子域名的页面,降低我们的Page rank。

location /{</div>

</li>

<li><a href="/article/1952.htm"

title="java-3.求子数组的最大和" target="_blank">java-3.求子数组的最大和</a>

<span class="text-muted">bylijinnan</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a>

<div>package beautyOfCoding;

public class MaxSubArraySum {

/**

* 3.求子数组的最大和

题目描述:

输入一个整形数组,数组里有正数也有负数。

数组中连续的一个或多个整数组成一个子数组,每个子数组都有一个和。

求所有子数组的和的最大值。要求时间复杂度为O(n)。

例如输入的数组为1, -2, 3, 10, -4,</div>

</li>

<li><a href="/article/2079.htm"

title="Netty源码学习-FileRegion" target="_blank">Netty源码学习-FileRegion</a>

<span class="text-muted">bylijinnan</span>

<a class="tag" taget="_blank" href="/search/java/1.htm">java</a><a class="tag" taget="_blank" href="/search/netty/1.htm">netty</a>

<div>今天看org.jboss.netty.example.http.file.HttpStaticFileServerHandler.java

可以直接往channel里面写入一个FileRegion对象,而不需要相应的encoder:

//pipeline(没有诸如“FileRegionEncoder”的handler):

public ChannelPipeline ge</div>

</li>

<li><a href="/article/2206.htm"

title="使用ZeroClipboard解决跨浏览器复制到剪贴板的问题" target="_blank">使用ZeroClipboard解决跨浏览器复制到剪贴板的问题</a>

<span class="text-muted">cngolon</span>

<a class="tag" taget="_blank" href="/search/%E8%B7%A8%E6%B5%8F%E8%A7%88%E5%99%A8/1.htm">跨浏览器</a><a class="tag" taget="_blank" href="/search/%E5%A4%8D%E5%88%B6%E5%88%B0%E7%B2%98%E8%B4%B4%E6%9D%BF/1.htm">复制到粘贴板</a><a class="tag" taget="_blank" href="/search/Zero+Clipboard/1.htm">Zero Clipboard</a>

<div>Zero Clipboard的实现原理

Zero Clipboard 利用透明的Flash让其漂浮在复制按钮之上,这样其实点击的不是按钮而是 Flash ,这样将需要的内容传入Flash,再通过Flash的复制功能把传入的内容复制到剪贴板。

Zero Clipboard的安装方法

首先需要下载 Zero Clipboard的压缩包,解压后把文件夹中两个文件:ZeroClipboard.js </div>

</li>

<li><a href="/article/2333.htm"

title="单例模式" target="_blank">单例模式</a>

<span class="text-muted">cuishikuan</span>

<a class="tag" taget="_blank" href="/search/%E5%8D%95%E4%BE%8B%E6%A8%A1%E5%BC%8F/1.htm">单例模式</a>

<div>第一种(懒汉,线程不安全):

public class Singleton { 2 private static Singleton instance; 3 pri</div>

</li>

<li><a href="/article/2460.htm"

title="spring+websocket的使用" target="_blank">spring+websocket的使用</a>

<span class="text-muted">dalan_123</span>

<div>一、spring配置文件

<?xml version="1.0" encoding="UTF-8"?><beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi="http://www.w3.or</div>

</li>

<li><a href="/article/2587.htm"

title="细节问题:ZEROFILL的用法范围。" target="_blank">细节问题:ZEROFILL的用法范围。</a>

<span class="text-muted">dcj3sjt126com</span>

<a class="tag" taget="_blank" href="/search/mysql/1.htm">mysql</a>

<div> 1、zerofill把月份中的一位数字比如1,2,3等加前导0

mysql> CREATE TABLE t1 (year YEAR(4), month INT(2) UNSIGNED ZEROFILL, -> day</div>

</li>

<li><a href="/article/2714.htm"

title="Android开发10——Activity的跳转与传值" target="_blank">Android开发10——Activity的跳转与传值</a>

<span class="text-muted">dcj3sjt126com</span>

<a class="tag" taget="_blank" href="/search/Android%E5%BC%80%E5%8F%91/1.htm">Android开发</a>

<div>Activity跳转与传值,主要是通过Intent类,Intent的作用是激活组件和附带数据。

一、Activity跳转

方法一Intent intent = new Intent(A.this, B.class); startActivity(intent)

方法二Intent intent = new Intent();intent.setCla</div>

</li>

<li><a href="/article/2841.htm"

title="jdbc 得到表结构、主键" target="_blank">jdbc 得到表结构、主键</a>

<span class="text-muted">eksliang</span>

<a class="tag" taget="_blank" href="/search/jdbc+%E5%BE%97%E5%88%B0%E8%A1%A8%E7%BB%93%E6%9E%84%E3%80%81%E4%B8%BB%E9%94%AE/1.htm">jdbc 得到表结构、主键</a>

<div>转自博客:http://blog.csdn.net/ocean1010/article/details/7266042

假设有个con DatabaseMetaData dbmd = con.getMetaData(); rs = dbmd.getColumns(con.getCatalog(), schema, tableName, null); rs.getSt</div>

</li>

<li><a href="/article/2968.htm"

title="Android 应用程序开关GPS" target="_blank">Android 应用程序开关GPS</a>

<span class="text-muted">gqdy365</span>

<a class="tag" taget="_blank" href="/search/android/1.htm">android</a>

<div>要在应用程序中操作GPS开关需要权限:

<uses-permission android:name="android.permission.WRITE_SECURE_SETTINGS" />

但在配置文件中添加此权限之后会报错,无法再eclipse里面正常编译,怎么办?

1、方法一:将项目放到Android源码中编译;

2、方法二:网上有人说cl</div>

</li>

<li><a href="/article/3095.htm"

title="Windows上调试MapReduce" target="_blank">Windows上调试MapReduce</a>

<span class="text-muted">zhiquanliu</span>

<a class="tag" taget="_blank" href="/search/mapreduce/1.htm">mapreduce</a>

<div>1.下载hadoop2x-eclipse-plugin https://github.com/winghc/hadoop2x-eclipse-plugin.git 把 hadoop2.6.0-eclipse-plugin.jar 放到eclipse plugin 目录中。 2.下载 hadoop2.6_x64_.zip http://dl.iteye.com/topics/download/d2b</div>

</li>

<li><a href="/article/3222.htm"

title="如何看待一些知名博客推广软文的行为?" target="_blank">如何看待一些知名博客推广软文的行为?</a>

<span class="text-muted">justjavac</span>

<a class="tag" taget="_blank" href="/search/%E5%8D%9A%E5%AE%A2/1.htm">博客</a>

<div>本文来自我在知乎上的一个回答:http://www.zhihu.com/question/23431810/answer/24588621

互联网上的两种典型心态:

当初求种像条狗,如今撸完嫌人丑

当初搜贴像条犬,如今读完嫌人软

你为啥感觉不舒服呢?

难道非得要作者把自己的劳动成果免费给你用,你才舒服?

就如同 Google 关闭了 Gooled Reader,那是</div>

</li>

<li><a href="/article/3349.htm"

title="sql优化总结" target="_blank">sql优化总结</a>

<span class="text-muted">macroli</span>

<a class="tag" taget="_blank" href="/search/sql/1.htm">sql</a>

<div>为了是自己对sql优化有更好的原则性,在这里做一下总结,个人原则如有不对请多多指教。谢谢!

要知道一个简单的sql语句执行效率,就要有查看方式,一遍更好的进行优化。

一、简单的统计语句执行时间

declare @d datetime ---定义一个datetime的变量set @d=getdate() ---获取查询语句开始前的时间select user_id</div>

</li>

<li><a href="/article/3476.htm"

title="Linux Oracle中常遇到的一些问题及命令总结" target="_blank">Linux Oracle中常遇到的一些问题及命令总结</a>

<span class="text-muted">超声波</span>

<a class="tag" taget="_blank" href="/search/oracle/1.htm">oracle</a><a class="tag" taget="_blank" href="/search/linux/1.htm">linux</a>

<div>1.linux更改主机名

(1)#hostname oracledb 临时修改主机名

(2) vi /etc/sysconfig/network 修改hostname

(3) vi /etc/hosts 修改IP对应的主机名

2.linux重启oracle实例及监听的各种方法

(注意操作的顺序应该是先监听,后数据库实例)

&nbs</div>

</li>

<li><a href="/article/3603.htm"

title="hive函数大全及使用示例" target="_blank">hive函数大全及使用示例</a>

<span class="text-muted">superlxw1234</span>

<a class="tag" taget="_blank" href="/search/hadoop/1.htm">hadoop</a><a class="tag" taget="_blank" href="/search/hive%E5%87%BD%E6%95%B0/1.htm">hive函数</a>

<div>

具体说明及示例参 见附件文档。

文档目录:

目录

一、关系运算: 4

1. 等值比较: = 4

2. 不等值比较: <> 4

3. 小于比较: < 4

4. 小于等于比较: <= 4

5. 大于比较: > 5

6. 大于等于比较: >= 5

7. 空值判断: IS NULL 5</div>

</li>

<li><a href="/article/3730.htm"

title="Spring 4.2新特性-使用@Order调整配置类加载顺序" target="_blank">Spring 4.2新特性-使用@Order调整配置类加载顺序</a>

<span class="text-muted">wiselyman</span>

<a class="tag" taget="_blank" href="/search/spring+4/1.htm">spring 4</a>

<div>4.1 @Order

Spring 4.2 利用@Order控制配置类的加载顺序

4.2 演示

两个演示bean

package com.wisely.spring4_2.order;

public class Demo1Service {

}

package com.wisely.spring4_2.order;

public class</div>

</li>

</ul>

</div>

</div>

</div>


<footer id="footer" class="mb30 mt30">

<div class="container">

<div class="footBglm">

<a target="_blank" href="/">Home</a> -

<a target="_blank" href="/custom/about.htm">About Us</a> -

<a target="_blank" href="/search/Java/1.htm">Site Search</a> -

<a target="_blank" href="/sitemap.txt">Sitemap</a> -

<a target="_blank" href="/custom/delete.htm">Copyright Complaints</a>

</div>

<div class="copyright">IT知识库 CopyRight © 2000-2050 E-COM-NET.COM, All Rights Reserved.


</div>

</div>

</footer>

<!-- Code syntax highlighting -->

<script type="text/javascript" src="/static/syntaxhighlighter/scripts/shCore.js"></script>

<script type="text/javascript" src="/static/syntaxhighlighter/scripts/shLegacy.js"></script>

<script type="text/javascript" src="/static/syntaxhighlighter/scripts/shAutoloader.js"></script>

<link type="text/css" rel="stylesheet" href="/static/syntaxhighlighter/styles/shCoreDefault.css"/>

<script type="text/javascript" src="/static/syntaxhighlighter/src/my_start_1.js"></script>

</body>

</html>