Integrating Kettle with Java: creating a job, saving it, and running it

First, see the earlier post: https://blog.csdn.net/cgm625637391/article/details/95047724

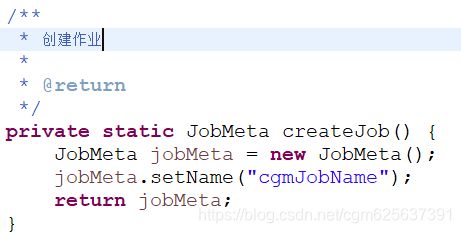

1. Create the job

Corresponding Java code:

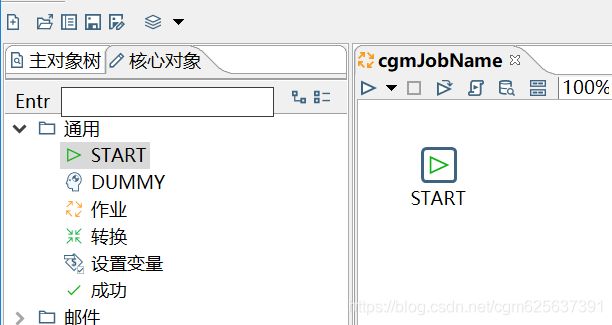

2. Create the START entry

Corresponding Java code:

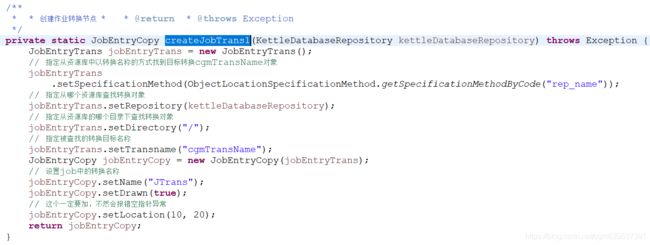

3. Create the job's transformation entry

Corresponding Java code:

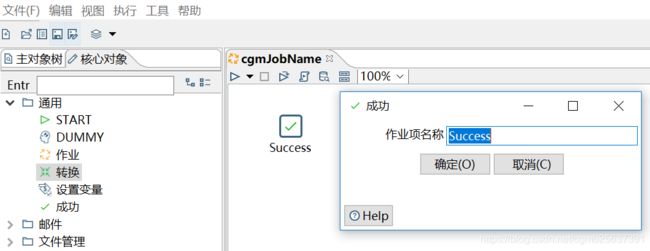

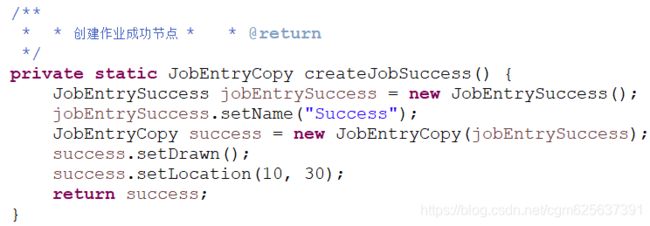
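The transformation entry does not contain the transformation itself. With the `rep_name` specification method it only records a directory (`/`) and a name (`cgmTransName`), and the transformation is looked up by that pair. The stdlib-only sketch below illustrates this idea with hypothetical `NameRepository` and `TransReference` classes. These classes are not part of Kettle's API. The sketch shows that the lookup succeeds only once a transformation exists under exactly that directory and name.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class ByNameLookupDemo {
    public static void main(String[] args) {
        NameRepository repo = new NameRepository();
        TransReference ref = new TransReference("/", "cgmTransName");
        repo.saveTrans("/", "cgmTransName", "from_user -> to_user");
        System.out.println(ref.resolve(repo)); // prints "from_user -> to_user"
    }
}

// Hypothetical in-memory stand-in for a repository: objects are keyed by
// directory + name, the same pair a rep_name reference stores.
class NameRepository {
    private final Map<String, String> transformations = new HashMap<>();

    private static String key(String directory, String name) {
        return directory + "|" + name;
    }

    // Store a transformation "definition" under a directory and a name.
    void saveTrans(String directory, String name, String definition) {
        transformations.put(key(directory, name), definition);
    }

    // Resolve a by-name reference; empty if nothing was saved under that pair.
    Optional<String> findTrans(String directory, String name) {
        return Optional.ofNullable(transformations.get(key(directory, name)));
    }
}

// Hypothetical job entry that, like a rep_name reference, only remembers where to look.
class TransReference {
    final String directory;
    final String transName;

    TransReference(String directory, String transName) {
        this.directory = directory;
        this.transName = transName;
    }

    String resolve(NameRepository repo) {
        return repo.findTrans(directory, transName)
                .orElseThrow(() -> new IllegalStateException(
                        "No transformation '" + transName + "' in directory " + directory));
    }
}
```

This is also why the full program below saves `cgmTransName` before it builds and saves the job.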

4. Create the Success entry

Corresponding Java code:

5. Create the hops between entries

Corresponding Java code:

6. Put the entries together
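Steps 1 to 6 build a small directed graph. The job (`JobMeta`) holds three entries (`JobEntryCopy`) connected by two hops (`JobHopMeta`): START → JTrans → Success. The stdlib-only sketch below models that graph with a hypothetical `JobGraph` class, which is not part of Kettle. Execution begins at START and follows the hops, so an entry that no hop leads to is never reached. The sketch deliberately ignores the hop conditions that real Kettle hops carry (unconditional, follow when the result is true, follow when it is false).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class JobGraphDemo {
    public static void main(String[] args) {
        JobGraph job = new JobGraph();
        job.addHop("START", "JTrans");
        job.addHop("JTrans", "Success");
        System.out.println(job.executionOrder("START")); // [START, JTrans, Success]
    }
}

// Hypothetical, simplified job model: entry names are nodes and hops are directed
// edges, mirroring how JobMeta holds JobEntryCopy and JobHopMeta objects.
class JobGraph {
    private final Map<String, List<String>> hops = new LinkedHashMap<>();

    void addHop(String from, String to) {
        hops.computeIfAbsent(from, k -> new ArrayList<>()).add(to);
        hops.computeIfAbsent(to, k -> new ArrayList<>());
    }

    // Depth-first walk from the start entry; each entry is visited once.
    List<String> executionOrder(String start) {
        List<String> order = new ArrayList<>();
        visit(start, order);
        return order;
    }

    private void visit(String entry, List<String> order) {
        if (order.contains(entry)) {
            return;
        }
        order.add(entry);
        for (String next : hops.getOrDefault(entry, Collections.emptyList())) {
            visit(next, order);
        }
    }
}
```

Forgetting the second `addHop` (the equivalent of `createJobHopMeta(trans1, success)`) leaves Success unreachable, even though it is still an entry of the job.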

7. Save the job

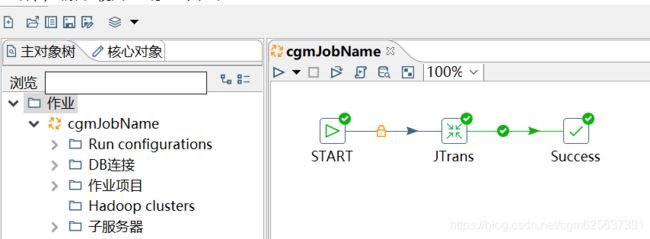

8. Execution result

One transformation and one job were created in the repository.

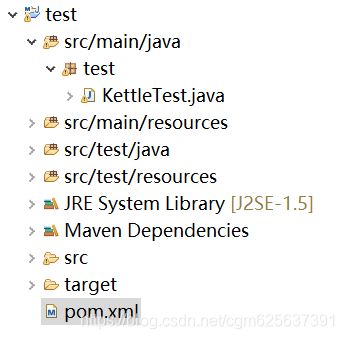

Project structure

pom.xml

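```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>www.test.com</groupId>
    <artifactId>test</artifactId>
    <version>0.0.1-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>pentaho-kettle</groupId>
            <artifactId>kettle-core</artifactId>
            <version>7.1.0.0-12</version>
        </dependency>
        <dependency>
            <groupId>pentaho-kettle</groupId>
            <artifactId>kettle-dbdialog</artifactId>
            <version>7.1.0.0-12</version>
        </dependency>
        <dependency>
            <groupId>pentaho-kettle</groupId>
            <artifactId>kettle-engine</artifactId>
            <version>7.1.0.0-12</version>
        </dependency>
        <dependency>
            <groupId>pentaho</groupId>
            <artifactId>metastore</artifactId>
            <version>7.1.0.0-12</version>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-vfs2</artifactId>
            <version>2.2</version>
        </dependency>
        <dependency>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
            <version>19.0</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.46</version>
        </dependency>
        <dependency>
            <groupId>commons-lang</groupId>
            <artifactId>commons-lang</artifactId>
            <version>2.6</version>
        </dependency>
    </dependencies>

    <repositories>
        <repository>
            <id>pentaho-releases</id>
            <name>Kettle</name>
            <url>https://nexus.pentaho.org/content/groups/omni/</url>
        </repository>
        <repository>
            <id>public</id>
            <name>aliyun nexus</name>
            <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
            <releases>
                <enabled>true</enabled>
            </releases>
        </repository>
    </repositories>
</project>
```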

KettleTest.java

```java
package test;

import org.pentaho.di.core.KettleEnvironment;
import org.pentaho.di.core.ObjectLocationSpecificationMethod;
import org.pentaho.di.core.database.DatabaseMeta;
import org.pentaho.di.core.exception.KettleException;
import org.pentaho.di.job.JobHopMeta;
import org.pentaho.di.job.JobMeta;
import org.pentaho.di.job.entries.special.JobEntrySpecial;
import org.pentaho.di.job.entries.success.JobEntrySuccess;
import org.pentaho.di.job.entries.trans.JobEntryTrans;
import org.pentaho.di.job.entry.JobEntryCopy;
import org.pentaho.di.repository.RepositoryDirectoryInterface;
import org.pentaho.di.repository.kdr.KettleDatabaseRepository;
import org.pentaho.di.repository.kdr.KettleDatabaseRepositoryMeta;
import org.pentaho.di.trans.TransHopMeta;
import org.pentaho.di.trans.TransMeta;
import org.pentaho.di.trans.step.StepMeta;
import org.pentaho.di.trans.steps.insertupdate.InsertUpdateMeta;
import org.pentaho.di.trans.steps.tableinput.TableInputMeta;

/**
 * @author cgm
 * @date 2019-07-08
 */
public class KettleTest {

    public static void main(String[] args) throws Exception {
        KettleEnvironment.init();
        KettleDatabaseRepository kettleDatabaseRepository = repositoryCon();
        if (kettleDatabaseRepository == null) {
            return; // could not connect to the repository
        }
        // Save the transformation first: the job's transformation entry looks it up by name
        createAndSaveTrans(kettleDatabaseRepository);
        JobMeta jobMeta = generateJob(kettleDatabaseRepository);
        saveJob(kettleDatabaseRepository, jobMeta);
        kettleDatabaseRepository.disconnect();
    }

    /**
     * Connect to the repository.
     */
    private static KettleDatabaseRepository repositoryCon() throws KettleException {
        // Initialize the Kettle environment
        if (!KettleEnvironment.isInitialized()) {
            try {
                KettleEnvironment.init();
            } catch (KettleException e) {
                e.printStackTrace();
            }
        }
        // Database connection metadata. Arguments: connection name (shown in the top-right
        // corner of Spoon), database type, access method, host, database name, port, user, password
        DatabaseMeta databaseMeta = new DatabaseMeta("cgmRepository", "mysql", "Native(JDBC)", "127.0.0.1",
                "cgmrepositorydb", "3306", "root", "root");
        // Metadata for a database-backed repository
        KettleDatabaseRepositoryMeta kettleDatabaseRepositoryMeta = new KettleDatabaseRepositoryMeta();
        kettleDatabaseRepositoryMeta.setConnection(databaseMeta);
        // Database-backed repository, initialized from its metadata
        KettleDatabaseRepository kettleDatabaseRepository = new KettleDatabaseRepository();
        kettleDatabaseRepository.init(kettleDatabaseRepositoryMeta);
        // Connect with the repository's default user name and password
        kettleDatabaseRepository.connect("admin", "admin");
        if (kettleDatabaseRepository.isConnected()) {
            System.out.println("Connected");
            return kettleDatabaseRepository;
        } else {
            System.out.println("Connection failed");
            return null;
        }
    }

    /**
     * Build the job: START -> JTrans -> Success.
     */
    private static JobMeta generateJob(KettleDatabaseRepository kettleDatabaseRepository) throws Exception {
        JobMeta jobMeta = createJob();
        // Create the job entries
        JobEntryCopy start = createJobStart();
        JobEntryCopy trans1 = createJobTrans1(kettleDatabaseRepository);
        JobEntryCopy success = createJobSuccess();
        // Add the entries to the job
        jobMeta.addJobEntry(start);
        jobMeta.addJobEntry(trans1);
        jobMeta.addJobEntry(success);
        // Create and add the hops between entries
        jobMeta.addJobHop(createJobHopMeta(start, trans1));
        jobMeta.addJobHop(createJobHopMeta(trans1, success));
        return jobMeta;
    }

    /** Create the job. */
    private static JobMeta createJob() {
        JobMeta jobMeta = new JobMeta();
        jobMeta.setName("cgmJobName");
        jobMeta.setJobstatus(0);
        return jobMeta;
    }

    /** Create the job's START entry. */
    private static JobEntryCopy createJobStart() {
        JobEntrySpecial jobEntrySpecial = new JobEntrySpecial();
        jobEntrySpecial.setName("START");
        jobEntrySpecial.setStart(true);
        JobEntryCopy start = new JobEntryCopy(jobEntrySpecial);
        start.setDrawn();
        start.setLocation(10, 10);
        return start;
    }

    /** Create the job's transformation entry. */
    private static JobEntryCopy createJobTrans1(KettleDatabaseRepository kettleDatabaseRepository) throws Exception {
        JobEntryTrans jobEntryTrans = new JobEntryTrans();
        // Locate the target transformation cgmTransName in the repository by name
        jobEntryTrans
                .setSpecificationMethod(ObjectLocationSpecificationMethod.getSpecificationMethodByCode("rep_name"));
        // Repository to search
        jobEntryTrans.setRepository(kettleDatabaseRepository);
        // Repository directory to search
        jobEntryTrans.setDirectory("/");
        // Name of the transformation to find
        jobEntryTrans.setTransname("cgmTransName");
        JobEntryCopy jobEntryCopy = new JobEntryCopy(jobEntryTrans);
        // Name of this entry inside the job
        jobEntryCopy.setName("JTrans");
        jobEntryCopy.setDrawn(true);
        // Required: without a location a NullPointerException is thrown
        jobEntryCopy.setLocation(10, 20);
        return jobEntryCopy;
    }

    /** Create the job's Success entry. */
    private static JobEntryCopy createJobSuccess() {
        JobEntrySuccess jobEntrySuccess = new JobEntrySuccess();
        jobEntrySuccess.setName("Success");
        JobEntryCopy success = new JobEntryCopy(jobEntrySuccess);
        success.setDrawn();
        success.setLocation(10, 30);
        return success;
    }

    /** Create a hop from start to end. */
    private static JobHopMeta createJobHopMeta(JobEntryCopy start, JobEntryCopy end) {
        return new JobHopMeta(start, end);
    }

    /** Save the job to the repository's root directory. */
    private static void saveJob(KettleDatabaseRepository kettleDatabaseRepository, JobMeta jobMeta) throws Exception {
        RepositoryDirectoryInterface dir = kettleDatabaseRepository.loadRepositoryDirectoryTree().findDirectory("/");
        jobMeta.setRepositoryDirectory(dir);
        kettleDatabaseRepository.save(jobMeta, null);
    }

    /** Create the transformation and save it to the repository. */
    private static void createAndSaveTrans(KettleDatabaseRepository kettleDatabaseRepository) throws Exception {
        TransMeta transMeta = generateTrans();
        saveTrans(kettleDatabaseRepository, transMeta);
    }

    /** Define a transformation (not yet saved to the repository). */
    private static TransMeta generateTrans() throws KettleException {
        TransMeta transMeta = createTrans();
        // Create step 1 and add it to the transformation
        StepMeta step1 = createStep1(transMeta);
        transMeta.addStep(step1);
        // Create step 2 and add it to the transformation
        StepMeta step2 = createStep2(transMeta);
        transMeta.addStep(step2);
        // Create the hop between the two steps and add it
        TransHopMeta transHopMeta = createTransHop(step1, step2);
        transMeta.addTransHop(transHopMeta);
        return transMeta;
    }

    /** Create step 1 (input: Table input). */
    private static StepMeta createStep1(TransMeta transMeta) {
        TableInputMeta tableInputMeta = new TableInputMeta();
        // Database connection for step 1
        tableInputMeta.setDatabaseMeta(transMeta.findDatabase("fromDbName"));
        // SQL for step 1; the aliases rename the columns to match the target table
        tableInputMeta.setSQL("SELECT id1 as id2 ,name1 as name2 FROM from_user");
        // Step name
        return new StepMeta("step1name", tableInputMeta);
    }

    /** Create the transformation and its database connections. */
    private static TransMeta createTrans() {
        TransMeta transMeta = new TransMeta();
        transMeta.setName("cgmTransName");
        transMeta.addDatabase(new DatabaseMeta("fromDbName", "mysql", "Native(JDBC)", "127.0.0.1",
                "fromdb?useSSL=false", "3306", "root", "root"));
        transMeta.addDatabase(new DatabaseMeta("toDbName", "mysql", "Native(JDBC)", "127.0.0.1", "todb?useSSL=false",
                "3306", "root", "root"));
        return transMeta;
    }

    /** Create step 2 (output: Insert/Update). */
    private static StepMeta createStep2(TransMeta transMeta) {
        InsertUpdateMeta insertUpdateMeta = new InsertUpdateMeta();
        // Database connection and target table for step 2
        insertUpdateMeta.setDatabaseMeta(transMeta.findDatabase("toDbName"));
        insertUpdateMeta.setTableName("to_user");
        // Lookup key: to_user.id2 = stream field id2
        insertUpdateMeta.setKeyLookup(new String[] {"id2"});
        insertUpdateMeta.setKeyCondition(new String[] {"="});
        insertUpdateMeta.setKeyStream(new String[] {"id2"});
        // Required (second stream field, only used by BETWEEN conditions)
        insertUpdateMeta.setKeyStream2(new String[] {""});
        // Table fields, stream fields, and whether each one is updated
        String[] updateLookup = {"id2", "name2"};
        String[] updateStream = {"id2", "name2"};
        Boolean[] updateOrNot = {false, true};
        insertUpdateMeta.setUpdateLookup(updateLookup);
        insertUpdateMeta.setUpdateStream(updateStream);
        insertUpdateMeta.setUpdate(updateOrNot);
        // Step name
        return new StepMeta("step2name", insertUpdateMeta);
    }

    /** Create the hop that links step1 to step2. */
    private static TransHopMeta createTransHop(StepMeta step1, StepMeta step2) {
        return new TransHopMeta(step1, step2);
    }

    /** Save the transformation to the repository's root directory. */
    private static void saveTrans(KettleDatabaseRepository kettleDatabaseRepository, TransMeta transMeta)
            throws Exception {
        RepositoryDirectoryInterface dir = kettleDatabaseRepository.loadRepositoryDirectoryTree().findDirectory("/");
        transMeta.setRepositoryDirectory(dir);
        kettleDatabaseRepository.save(transMeta, null);
    }
}
```
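The transformation's connections pass `"fromdb?useSSL=false"` as the *database name*. This works only if the MySQL dialect builds the JDBC URL by plain string concatenation, so that the suffix ends up as a URL query parameter. Most likely the suffix is there to switch off SSL for the local Connector/J connection. The stdlib-only sketch below shows that assumption using a hypothetical `mysqlUrl` helper. It is not Kettle's code.

```java
class JdbcUrlDemo {
    public static void main(String[] args) {
        System.out.println(mysqlUrl("127.0.0.1", "3306", "cgmrepositorydb"));
        System.out.println(mysqlUrl("127.0.0.1", "3306", "fromdb?useSSL=false"));
    }

    // Hypothetical helper: assumes the native MySQL connection URL is
    // "jdbc:mysql://" + host + [":" + port] + "/" + databaseName, with no escaping,
    // so anything appended to the database name becomes part of the URL.
    static String mysqlUrl(String host, String port, String databaseName) {
        if (port == null || port.isEmpty()) {
            return "jdbc:mysql://" + host + "/" + databaseName;
        }
        return "jdbc:mysql://" + host + ":" + port + "/" + databaseName;
    }
}
```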

When the program finishes, the corresponding records have been written to the repository database.

The repository tables that store the job are related as follows:

Relationships between the repository tables that hold the job


Now let's run the job that was saved to the repository.

KettleTest.java

```java
package test;

import org.pentaho.di.core.KettleEnvironment;
import org.pentaho.di.core.database.DatabaseMeta;
import org.pentaho.di.core.exception.KettleException;
import org.pentaho.di.job.Job;
import org.pentaho.di.job.JobMeta;
import org.pentaho.di.repository.ObjectId;
import org.pentaho.di.repository.RepositoryDirectoryInterface;
import org.pentaho.di.repository.kdr.KettleDatabaseRepository;
import org.pentaho.di.repository.kdr.KettleDatabaseRepositoryMeta;

/**
 * @author cgm
 * @date 2019-07-08
 */
public class KettleTest {

    public static void main(String[] args) throws Exception {
        runJob();
    }

    /**
     * Run a job stored in the repository.
     */
    public static void runJob() throws KettleException {
        KettleDatabaseRepository kettleDatabaseRepository = repositoryCon();
        if (kettleDatabaseRepository == null) {
            return; // could not connect to the repository
        }
        // Get the root directory
        RepositoryDirectoryInterface directory =
                kettleDatabaseRepository.loadRepositoryDirectoryTree().findDirectory("/");
        // Look up the job's id by name
        ObjectId id = kettleDatabaseRepository.getJobId("cgmJobName", directory);
        // Load the job (no version label)
        JobMeta jobMeta = kettleDatabaseRepository.loadJob(id, null);
        Job job = new Job(kettleDatabaseRepository, jobMeta);
        // Start the job; Job extends Thread, so start() runs it on its own thread
        job.start();
        // Wait for the job to finish
        job.waitUntilFinished();
        if (job.getErrors() > 0) {
            System.out.println("The job finished with errors");
        }
        kettleDatabaseRepository.disconnect();
    }

    /**
     * Connect to the repository.
     */
    private static KettleDatabaseRepository repositoryCon() throws KettleException {
        // Initialize the Kettle environment
        if (!KettleEnvironment.isInitialized()) {
            try {
                KettleEnvironment.init();
            } catch (KettleException e) {
                e.printStackTrace();
            }
        }
        // Database connection metadata. Arguments: connection name (shown in the top-right
        // corner of Spoon), database type, access method, host, database name, port, user, password
        DatabaseMeta databaseMeta = new DatabaseMeta("cgmRepository", "mysql", "Native(JDBC)", "127.0.0.1",
                "cgmrepositorydb", "3306", "root", "root");
        // Metadata for a database-backed repository
        KettleDatabaseRepositoryMeta kettleDatabaseRepositoryMeta = new KettleDatabaseRepositoryMeta();
        kettleDatabaseRepositoryMeta.setConnection(databaseMeta);
        // Database-backed repository, initialized from its metadata
        KettleDatabaseRepository kettleDatabaseRepository = new KettleDatabaseRepository();
        kettleDatabaseRepository.init(kettleDatabaseRepositoryMeta);
        // Connect with the repository's default user name and password
        kettleDatabaseRepository.connect("admin", "admin");
        if (kettleDatabaseRepository.isConnected()) {
            System.out.println("Connected");
            return kettleDatabaseRepository;
        } else {
            System.out.println("Connection failed");
            return null;
        }
    }
}
```
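Kettle's `Job` class extends `java.lang.Thread`. Calling `job.run()` therefore just executes the job inline on the calling thread, while `job.start()` runs it on a new thread and `job.waitUntilFinished()` blocks until it is done. The stdlib-only sketch below shows the difference with a hypothetical `FakeJob` thread, which is not Kettle code. In the sketch, `Thread.join()` stands in for `waitUntilFinished()`.

```java
class StartVsRunDemo {
    public static void main(String[] args) {
        System.out.println(runsOnCallerThread(false)); // true: run() executes inline
        System.out.println(runsOnCallerThread(true));  // false: start() uses a new thread
    }

    // Hypothetical stand-in for a Job-like class that extends Thread.
    static class FakeJob extends Thread {
        volatile Thread executedOn;

        @Override
        public void run() {
            executedOn = Thread.currentThread();
        }
    }

    // Returns true if the job body ran on the calling thread.
    static boolean runsOnCallerThread(boolean useStart) {
        FakeJob job = new FakeJob();
        if (useStart) {
            job.start();
            try {
                job.join(); // analogous to waiting for the job to finish
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        } else {
            job.run(); // plain method call: blocks until the body returns
        }
        return job.executedOn == Thread.currentThread();
    }
}
```

With `run()`, a following `waitUntilFinished()` has nothing left to wait for. With `start()`, the wait is what keeps `runJob()` from returning before the job completes.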

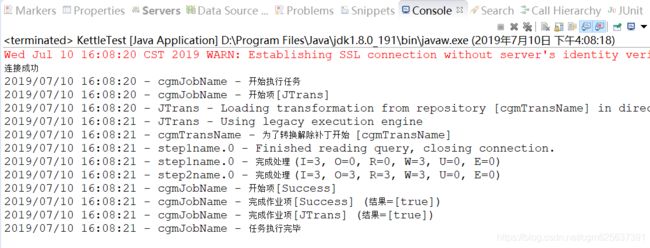

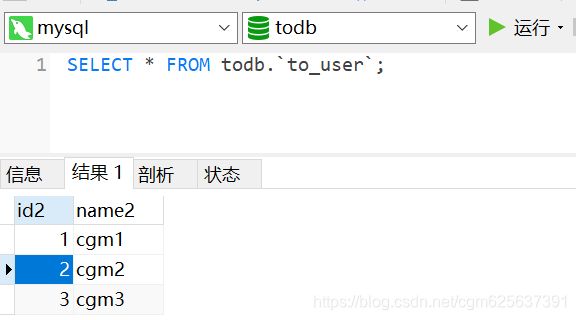

Execution result
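When the job runs, `JTrans` executes `cgmTransName`. The Table input step renames `id1`/`name1` to `id2`/`name2`, and the Insert/Update step matches rows on `id2 = id2`. A row with no match is inserted. A matching row is updated only when a field flagged for update (here `name2`, not `id2`) differs. The sketch below is a hypothetical in-memory model of that behaviour, with a `Map` standing in for `to_user` and made-up sample rows. Kettle's real step issues SQL against the target table instead.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

class InsertUpdateDemo {
    public static void main(String[] args) {
        // Hypothetical contents of to_user, keyed by id2
        Map<Integer, String> toUser = new LinkedHashMap<>();
        toUser.put(1, "old name");
        // Hypothetical rows produced by: SELECT id1 as id2, name1 as name2 FROM from_user
        List<Object[]> stream = Arrays.asList(new Object[] {1, "alice"}, new Object[] {2, "bob"});
        int[] counts = insertUpdate(toUser, stream);
        // prints "{1=alice, 2=bob} inserted=1 updated=1"
        System.out.println(toUser + " inserted=" + counts[0] + " updated=" + counts[1]);
    }

    // In-memory model of the Insert/Update step configured in createStep2:
    // look up on id2 = id2; id2 is never updated (flag false), name2 is (flag true).
    // Returns {inserted, updated}.
    static int[] insertUpdate(Map<Integer, String> table, List<Object[]> rows) {
        int inserted = 0;
        int updated = 0;
        for (Object[] row : rows) {
            Integer id2 = (Integer) row[0];
            String name2 = (String) row[1];
            if (!table.containsKey(id2)) {
                table.put(id2, name2); // no row with this key yet: insert it
                inserted++;
            } else if (!Objects.equals(table.get(id2), name2)) {
                table.put(id2, name2); // key found and name2 differs: update name2 only
                updated++;
            }
        }
        return new int[] {inserted, updated};
    }
}
```

Running the same rows a second time changes nothing, which is what makes this step safe to re-run on every job execution.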

Done!