Nacos Source Code Walkthrough 08: Implementing CP Mode with JRaft

What is the JRaft algorithm

For details, see:

https://www.cnblogs.com/luozhiyun/p/13150808.html

http://www.zhenchao.io/2020/06/01/sofa/sofa-jraft-node-startup/

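How Nacos uses JRaft

When Nacos runs with the embedded data source (-DembeddedStorage=true, one data source per node) and is started in cluster mode (-Dnacos.standalone=false), it uses the Raft protocol to keep data consistent across nodes.

Using JRaft in Nacos to guarantee data consistency

Creating JRaft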

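When PersistentConsistencyServiceDelegateImpl is created, it calls createNewPersistentServiceProcessor, which creates a BasePersistentServiceProcessor (the persistent service processor). Execution then reaches the PersistentServiceProcessor constructor, where the CPProtocol is created and initialized. The initialization itself is the initCPProtocol method of ProtocolManager: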

private void initCPProtocol() {

ApplicationUtils.getBeanIfExist(CPProtocol.class, protocol -> {

// Resolve the Config type; here it resolves to RaftConfig

Class configType = ClassUtils.resolveGenericType(protocol.getClass());

Config config = (Config) ApplicationUtils.getBean(configType);

// Inject the cluster members

injectMembers4CP(config);

// Initialize the protocol

protocol.init(config);

ProtocolManager.this.cpProtocol = protocol;

});

}

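Injecting members

private void injectMembers4CP(Config config) {

// Get the member information of the current node

final Member selfMember = memberManager.getSelf();

// Build this node's Raft address from its IP and its Raft port (an offset of the main port).
// For example, if this node listens on 8848, the result is ip:7848.

final String self = selfMember.getIp() + ":" + Integer

.parseInt(String.valueOf(selfMember.getExtendVal(MemberMetaDataConstants.RAFT_PORT)));

// Collect the Raft addresses (ip:port) of the cluster members

Set<String> others = toCPMembersInfo(memberManager.allMembers());

// Set the cluster member information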

config.setMembers(self, others);

}
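The port offset mentioned in the comment above can be illustrated with a small sketch. It assumes the Raft port is the main port minus 1000, which matches the 8848 to 7848 example; RaftAddressSketch, RAFT_PORT_OFFSET, and both helper methods are hypothetical names for illustration, not Nacos APIs.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

final class RaftAddressSketch {

    // Assumed offset between the main server port and the Raft port (8848 -> 7848).
    static final int RAFT_PORT_OFFSET = 1000;

    // Builds the "ip:raftPort" string for one member.
    static String toRaftAddress(String ip, int mainPort) {
        return ip + ":" + (mainPort - RAFT_PORT_OFFSET);
    }

    // Converts "ip:mainPort" entries into Raft addresses, keeping order and dropping duplicates.
    static Set<String> toRaftAddresses(List<String> ipPorts) {
        Set<String> result = new LinkedHashSet<>();
        for (String ipPort : ipPorts) {
            int idx = ipPort.lastIndexOf(':');
            String ip = ipPort.substring(0, idx);
            int port = Integer.parseInt(ipPort.substring(idx + 1));
            result.add(toRaftAddress(ip, port));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(toRaftAddress("192.168.0.1", 8848));
        System.out.println(toRaftAddresses(Arrays.asList("192.168.0.1:8848", "192.168.0.2:8848")));
    }
}
```

The resulting set plays the role of the member list passed to config.setMembers in the listing above.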

Initializing JRaft

JRaftProtocol

@Override

public void init(RaftConfig config) {

if (initialized.compareAndSet(false, true)) {

this.raftConfig = config;

// Register the RaftEvent type with the shared publisher

NotifyCenter.registerToSharePublisher(RaftEvent.class);

this.raftServer.init(this.raftConfig);

this.raftServer.start();

// Subscribe to RaftEvent

NotifyCenter.registerSubscriber(new Subscriber<RaftEvent>() {

@Override

public void onEvent(RaftEvent event) {

Loggers.RAFT.info("This Raft event changes : {}", event);

final String groupId = event.getGroupId();

Map<String, Map<String, Object>> value = new HashMap<>();

Map<String, Object> properties = new HashMap<>();

final String leader = event.getLeader();

final Long term = event.getTerm();

final List<String> raftClusterInfo = event.getRaftClusterInfo();

final String errMsg = event.getErrMsg();

// Leader information needs to be selectively updated. If it is valid data,

// the information in the protocol metadata is updated.

MapUtil.putIfValNoEmpty(properties, MetadataKey.LEADER_META_DATA, leader);

MapUtil.putIfValNoNull(properties, MetadataKey.TERM_META_DATA, term);

MapUtil.putIfValNoEmpty(properties, MetadataKey.RAFT_GROUP_MEMBER, raftClusterInfo);

MapUtil.putIfValNoEmpty(properties, MetadataKey.ERR_MSG, errMsg);

value.put(groupId, properties);

metaData.load(value);

// The metadata information is injected into the metadata information of the node

injectProtocolMetaData(metaData);

}

@Override

public Class<? extends Event> subscribeType() {

return RaftEvent.class;

}

});

}

}

JRaftServer initialization

JRaftServer

void init(RaftConfig config) {

this.raftConfig = config;

this.serializer = SerializeFactory.getDefault();

Loggers.RAFT.info("Initializes the Raft protocol, raft-config info : {}", config);

// Initialize the thread pools used by JRaft

RaftExecutor.init(config);

// Get this node's Raft address (IP plus the offset port)

final String self = config.getSelfMember();

// Split into IP and port: info[0] = ip, info[1] = port

String[] info = InternetAddressUtil.splitIPPortStr(self);

selfIp = info[0];

selfPort = Integer.parseInt(info[1]);

localPeerId = PeerId.parsePeer(self);

// Create the node options; everything below configures the node

nodeOptions = new NodeOptions();

// Set the election timeout time. The default is 5 seconds.

int electionTimeout = Math.max(ConvertUtils.toInt(config.getVal(RaftSysConstants.RAFT_ELECTION_TIMEOUT_MS),

RaftSysConstants.DEFAULT_ELECTION_TIMEOUT), RaftSysConstants.DEFAULT_ELECTION_TIMEOUT);

rpcRequestTimeoutMs = ConvertUtils.toInt(raftConfig.getVal(RaftSysConstants.RAFT_RPC_REQUEST_TIMEOUT_MS),

RaftSysConstants.DEFAULT_RAFT_RPC_REQUEST_TIMEOUT_MS);

nodeOptions.setSharedElectionTimer(true);

nodeOptions.setSharedVoteTimer(true);

nodeOptions.setSharedStepDownTimer(true);

nodeOptions.setSharedSnapshotTimer(true);

nodeOptions.setElectionTimeoutMs(electionTimeout);

RaftOptions raftOptions = RaftOptionsBuilder.initRaftOptions(raftConfig);

nodeOptions.setRaftOptions(raftOptions);

// open jraft node metrics record function

nodeOptions.setEnableMetrics(true);

CliOptions cliOptions = new CliOptions();

this.cliService = RaftServiceFactory.createAndInitCliService(cliOptions);

this.cliClientService = (CliClientServiceImpl) ((CliServiceImpl) this.cliService).getCliClientService();

}
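The election timeout line in init uses Math.max so that the default acts as a lower bound: a configured value below the default is raised to the default. A minimal sketch of that behavior follows. The 5000 ms default is an assumption taken from the "default is 5 seconds" comment, and ElectionTimeoutSketch and its toInt helper are hypothetical stand-ins, not the Nacos ConvertUtils.

```java
final class ElectionTimeoutSketch {

    // Assumed default, based on the "default is 5 seconds" comment in the listing.
    static final int DEFAULT_ELECTION_TIMEOUT_MS = 5000;

    // Hypothetical stand-in for a lenient int conversion:
    // returns the default when the value is missing or not a number.
    static int toInt(String value, int defaultValue) {
        if (value == null || value.trim().isEmpty()) {
            return defaultValue;
        }
        try {
            return Integer.parseInt(value.trim());
        } catch (NumberFormatException e) {
            return defaultValue;
        }
    }

    // Same shape as the listing: max(configured, default), so the default is a floor.
    static int resolveElectionTimeout(String configured) {
        return Math.max(toInt(configured, DEFAULT_ELECTION_TIMEOUT_MS), DEFAULT_ELECTION_TIMEOUT_MS);
    }

    public static void main(String[] args) {
        System.out.println(resolveElectionTimeout("3000"));
        System.out.println(resolveElectionTimeout("8000"));
        System.out.println(resolveElectionTimeout(null));
    }
}
```

One consequence of this design is that the election timeout can only be lengthened through configuration, never shortened below the default.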

Starting JRaftServer

JRaftServer

synchronized void start() {

if (!isStarted) {

Loggers.RAFT.info("========= The raft protocol is starting... =========");

try {

// Get the JRaft node manager

com.alipay.sofa.jraft.NodeManager raftNodeManager = com.alipay.sofa.jraft.NodeManager.getInstance();

// Iterate over the cluster members and register each one

for (String address : raftConfig.getMembers()) {

PeerId peerId = PeerId.parsePeer(address);

conf.addPeer(peerId);

raftNodeManager.addAddress(peerId.getEndpoint());

}

nodeOptions.setInitialConf(conf);

// Initialize the RpcServer

rpcServer = JRaftUtils.initRpcServer(this, localPeerId);

// Throw if initialization fails

if (!this.rpcServer.init(null)) {

Loggers.RAFT.error("Fail to init [BaseRpcServer].");

throw new RuntimeException("Fail to init [BaseRpcServer].");

}

// Initialize multi raft group service framework

isStarted = true;

// Create the Raft groups (multi-raft)

createMultiRaftGroup(processors);

Loggers.RAFT.info("========= The raft protocol start finished... =========");

} catch (Exception e) {

Loggers.RAFT.error("raft protocol start failure, cause: ", e);

throw new JRaftException(e);

}

}

}

Initializing the request processors of the RpcServer

public static RpcServer initRpcServer(JRaftServer server, PeerId peerId) {

GrpcRaftRpcFactory raftRpcFactory = (GrpcRaftRpcFactory) RpcFactoryHelper.rpcFactory();

raftRpcFactory.registerProtobufSerializer(Log.class.getName(), Log.getDefaultInstance());

raftRpcFactory.registerProtobufSerializer(GetRequest.class.getName(), GetRequest.getDefaultInstance());

raftRpcFactory.registerProtobufSerializer(WriteRequest.class.getName(), WriteRequest.getDefaultInstance());

raftRpcFactory.registerProtobufSerializer(ReadRequest.class.getName(), ReadRequest.getDefaultInstance());

raftRpcFactory.registerProtobufSerializer(Response.class.getName(), Response.getDefaultInstance());

MarshallerRegistry registry = raftRpcFactory.getMarshallerRegistry();

registry.registerResponseInstance(Log.class.getName(), Response.getDefaultInstance());

registry.registerResponseInstance(GetRequest.class.getName(), Response.getDefaultInstance());

registry.registerResponseInstance(WriteRequest.class.getName(), Response.getDefaultInstance());

registry.registerResponseInstance(ReadRequest.class.getName(), Response.getDefaultInstance());

final RpcServer rpcServer = raftRpcFactory.createRpcServer(peerId.getEndpoint());

RaftRpcServerFactory.addRaftRequestProcessors(rpcServer, RaftExecutor.getRaftCoreExecutor(),

RaftExecutor.getRaftCliServiceExecutor());

// Deprecated

rpcServer.registerProcessor(new NacosLogProcessor(server, SerializeFactory.getDefault()));

// Deprecated

rpcServer.registerProcessor(new NacosGetRequestProcessor(server, SerializeFactory.getDefault()));

rpcServer.registerProcessor(new NacosWriteRequestProcessor(server, SerializeFactory.getDefault()));

rpcServer.registerProcessor(new NacosReadRequestProcessor(server, SerializeFactory.getDefault()));

return rpcServer;

}

持久化节点注册

这里拿持久化节点的注入举例子

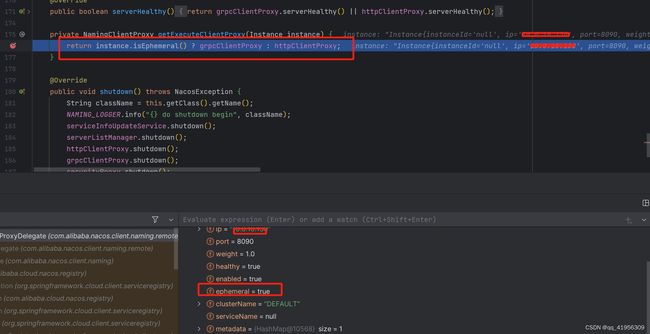

在Nacos 2.0版本中如果是持久化实例会使用NamingHttpClientProxy进行HTTP请求进行实例的注册

@Override

public void registerService(String serviceName, String groupName, Instance instance) throws NacosException {

NAMING_LOGGER.info("[REGISTER-SERVICE] {} registering service {} with instance: {}", namespaceId, serviceName,

instance);

String groupedServiceName = NamingUtils.getGroupedName(serviceName, groupName);

if (instance.isEphemeral()) {

BeatInfo beatInfo = beatReactor.buildBeatInfo(groupedServiceName, instance);

beatReactor.addBeatInfo(groupedServiceName, beatInfo);

}

// Assemble the request parameters

final Map<String, String> params = new HashMap<String, String>(32);

params.put(CommonParams.NAMESPACE_ID, namespaceId);

params.put(CommonParams.SERVICE_NAME, groupedServiceName);

params.put(CommonParams.GROUP_NAME, groupName);

params.put(CommonParams.CLUSTER_NAME, instance.getClusterName());

params.put(IP_PARAM, instance.getIp());

params.put(PORT_PARAM, String.valueOf(instance.getPort()));

params.put(WEIGHT_PARAM, String.valueOf(instance.getWeight()));

params.put(REGISTER_ENABLE_PARAM, String.valueOf(instance.isEnabled()));

params.put(HEALTHY_PARAM, String.valueOf(instance.isHealthy()));

params.put(EPHEMERAL_PARAM, String.valueOf(instance.isEphemeral()));

params.put(META_PARAM, JacksonUtils.toJson(instance.getMetadata()));

reqApi(UtilAndComs.nacosUrlInstance, params, HttpMethod.POST);

}

public String reqApi(String api, Map<String, String> params, String method) throws NacosException {

return reqApi(api, params, Collections.EMPTY_MAP, method);

}

public String reqApi(String api, Map<String, String> params, Map<String, String> body, String method)

throws NacosException {

return reqApi(api, params, body, serverListManager.getServerList(), method);

}

Sending the HTTP request

public String reqApi(String api, Map<String, String> params, Map<String, String> body, List<String> servers,

String method) throws NacosException {

params.put(CommonParams.NAMESPACE_ID, getNamespaceId());

if (CollectionUtils.isEmpty(servers) && !serverListManager.isDomain()) {

throw new NacosException(NacosException.INVALID_PARAM, "no server available");

}

NacosException exception = new NacosException();

if (serverListManager.isDomain()) {

String nacosDomain = serverListManager.getNacosDomain();

for (int i = 0; i < maxRetry; i++) {

try {

return callServer(api, params, body, nacosDomain, method);

} catch (NacosException e) {

exception = e;

if (NAMING_LOGGER.isDebugEnabled()) {

NAMING_LOGGER.debug("request {} failed.", nacosDomain, e);

}

}

}

} else {

Random random = new Random(System.currentTimeMillis());

int index = random.nextInt(servers.size());

for (int i = 0; i < servers.size(); i++) {

String server = servers.get(index);

try {

return callServer(api, params, body, server, method);

} catch (NacosException e) {

exception = e;

if (NAMING_LOGGER.isDebugEnabled()) {

NAMING_LOGGER.debug("request {} failed.", server, e);

}

}

index = (index + 1) % servers.size();

}

}

NAMING_LOGGER.error("request: {} failed, servers: {}, code: {}, msg: {}", api, servers, exception.getErrCode(),

exception.getErrMsg());

throw new NacosException(exception.getErrCode(),

"failed to req API:" + api + " after all servers(" + servers + ") tried: " + exception.getMessage());

}
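The non-domain branch of reqApi picks a random starting server and then walks the list once, wrapping around, until a call succeeds. The pattern can be sketched in isolation as follows. ServerFailoverSketch and callWithFailover are hypothetical names, and the remote call is abstracted as a plain function, so this is an illustration of the retry pattern rather than the client's actual code.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

final class ServerFailoverSketch {

    // Tries every server exactly once, starting at startIndex and wrapping around.
    // Returns the first successful result; fails with the last error if all servers fail.
    static String callWithFailover(List<String> servers, int startIndex, Function<String, String> call) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("no server available");
        }
        RuntimeException last = null;
        int index = Math.floorMod(startIndex, servers.size());
        for (int i = 0; i < servers.size(); i++) {
            String server = servers.get(index);
            try {
                return call.apply(server);
            } catch (RuntimeException e) {
                // Remember the failure and move on to the next server.
                last = e;
            }
            index = (index + 1) % servers.size();
        }
        throw new IllegalStateException("failed after all servers (" + servers + ") tried", last);
    }

    public static void main(String[] args) {
        List<String> servers = Arrays.asList("a:8848", "b:8848", "c:8848");
        // The second server is simulated as down, so the call falls through to the third.
        String result = callWithFailover(servers, 1, s -> {
            if (s.startsWith("b")) {
                throw new RuntimeException("down");
            }
            return "ok from " + s;
        });
        System.out.println(result);
    }
}
```

Starting from a random index spreads load across servers, while the wrap-around guarantees that every server is tried before the request is reported as failed.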

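Server side: receiving the HTTP request and registering

According to the official documentation, HTTP registration is handled by the server at the following API address: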

http://ip:port/nacos/v1/ns/instance (POST)

InstanceController

/**

* Register new instance.

*

* @param request http request

* @return 'ok' if success

* @throws Exception any error during register

*/

@CanDistro

@PostMapping

@Secured(action = ActionTypes.WRITE)

public String register(HttpServletRequest request) throws Exception {

// Get the namespace from the request; fall back to the default namespace if absent

final String namespaceId = WebUtils

.optional(request, CommonParams.NAMESPACE_ID, Constants.DEFAULT_NAMESPACE_ID);

// Get the service name from the request; it is required, so the request fails if it is missing

final String serviceName = WebUtils.required(request, CommonParams.SERVICE_NAME);

// Check that the service name is well-formed

NamingUtils.checkServiceNameFormat(serviceName);

// Build an Instance object from the request

final Instance instance = HttpRequestInstanceBuilder.newBuilder()

.setDefaultInstanceEphemeral(switchDomain.isDefaultInstanceEphemeral()).setRequest(request).build();

// Dispatch according to gRPC support: if gRPC features are enabled, InstanceOperatorClientImpl is used

getInstanceOperator().registerInstance(namespaceId, serviceName, instance);

return "ok";

}

getInstanceOperator checks whether gRPC features are supported: if so, the V2 implementation is used, otherwise V1.

private InstanceOperator getInstanceOperator() {

return upgradeJudgement.isUseGrpcFeatures() ? instanceServiceV2 : instanceServiceV1;

}

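Registering with the V1 implementation

First, the V1 path: InstanceOperatorServiceImpl's registerInstance is called, which leads to the following registration logic in ServiceManager.

public void registerInstance(String namespaceId, String serviceName, Instance instance) throws NacosException {

// Create the service if it does not exist, and establish the service-cluster relationship.

// Initializing the service also creates the server-side heartbeat check task.

createEmptyService(namespaceId, serviceName, instance.isEphemeral());

// Fetch the service again

Service service = getService(namespaceId, serviceName);

// Verify that the service is not null

checkServiceIsNull(service, namespaceId, serviceName);

// Add the instance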

addInstance(namespaceId, serviceName, instance.isEphemeral(), instance);

}

Creating the service

public void createServiceIfAbsent(String namespaceId, String serviceName, boolean local, Cluster cluster)

throws NacosException {

// Look up the service in the cache

Service service = getService(namespaceId, serviceName);

if (service == null) {

Loggers.SRV_LOG.info("creating empty service {}:{}", namespaceId, serviceName);

// Initialize the service

service = new Service();

service.setName(serviceName);

service.setNamespaceId(namespaceId);

service.setGroupName(NamingUtils.getGroupName(serviceName));

// now validate the service. if failed, exception will be thrown

service.setLastModifiedMillis(System.currentTimeMillis());

service.recalculateChecksum();

if (cluster != null) {

// Associate the service with the cluster

cluster.setService(service);

service.getClusterMap().put(cluster.getName(), cluster);

}

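// Validate the service name and related fields

service.validate();

// Initialize the service and create the heartbeat check task

putServiceAndInit(service);

if (!local) {

// Not local-only: store the service through the consistency service

addOrReplaceService(service);

}

}

}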

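The core of putServiceAndInit is to put the service into the cache and call its init method, which starts the server-side heartbeat check task. That task detects changes to the instances under the service and, when something changes, notifies the clients.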

public void init() {

// Start the heartbeat check task for this service

HealthCheckReactor.scheduleCheck(clientBeatCheckTask);

for (Map.Entry<String, Cluster> entry : clusterMap.entrySet()) {

entry.getValue().setService(this);

entry.getValue().init();

}

}

Adding the instance

public void addInstance(String namespaceId, String serviceName, boolean ephemeral, Instance... ips)

throws NacosException {

// Build the key

String key = KeyBuilder.buildInstanceListKey(namespaceId, serviceName, ephemeral);

// Get the service from the cache

Service service = getService(namespaceId, serviceName);

// Lock on the service

synchronized (service) {

// This is the core step: the in-memory registration is completed here,

// on top of the namespace -> service -> cluster -> instance data model

List<Instance> instanceList = addIpAddresses(service, ephemeral, ips);

Instances instances = new Instances();

instances.setInstanceList(instanceList);

// The instance data is also put into memory, and a delayed asynchronous

// task is scheduled to sync (replicate) the data

consistencyService.put(key, instances);

}

}

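addIpAddresses delegates to updateIpAddresses with the add action. The core of that method is building the associations between service, cluster, and instance: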

public List<Instance> updateIpAddresses(Service service, String action, boolean ephemeral, Instance... ips)

throws NacosException {

Datum datum = consistencyService

.get(KeyBuilder.buildInstanceListKey(service.getNamespaceId(), service.getName(), ephemeral));

List<Instance> currentIPs = service.allIPs(ephemeral);

Map<String, Instance> currentInstances = new HashMap<>(currentIPs.size());

Set<String> currentInstanceIds = CollectionUtils.set();

for (Instance instance : currentIPs) {

currentInstances.put(instance.toIpAddr(), instance);

currentInstanceIds.add(instance.getInstanceId());

}

Map<String, Instance> instanceMap;

if (datum != null && null != datum.value) {

instanceMap = setValid(((Instances) datum.value).getInstanceList(), currentInstances);

} else {

instanceMap = new HashMap<>(ips.length);

}

for (Instance instance : ips) {

if (!service.getClusterMap().containsKey(instance.getClusterName())) {

Cluster cluster = new Cluster(instance.getClusterName(), service);

cluster.init();

service.getClusterMap().put(instance.getClusterName(), cluster);

Loggers.SRV_LOG

.warn("cluster: {} not found, ip: {}, will create new cluster with default configuration.",

instance.getClusterName(), instance.toJson());

}

if (UtilsAndCommons.UPDATE_INSTANCE_ACTION_REMOVE.equals(action)) {

instanceMap.remove(instance.getDatumKey());

} else {

Instance oldInstance = instanceMap.get(instance.getDatumKey());

if (oldInstance != null) {

instance.setInstanceId(oldInstance.getInstanceId());

} else {

instance.setInstanceId(instance.generateInstanceId(currentInstanceIds));

}

instanceMap.put(instance.getDatumKey(), instance);

}

}

if (instanceMap.size() <= 0 && UtilsAndCommons.UPDATE_INSTANCE_ACTION_ADD.equals(action)) {

throw new IllegalArgumentException(

"ip list can not be empty, service: " + service.getName() + ", ip list: " + JacksonUtils

.toJson(instanceMap.values()));

}

return new ArrayList<>(instanceMap.values());

}
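The merge step at the heart of updateIpAddresses can be reduced to a map keyed by the instance's datum key: a remove action deletes the key, while an add action keeps the existing instance id if there is one and generates a new id otherwise. The sketch below shows only that idea. InstanceMergeSketch, the string-based instance ids, and the "generated-" prefix are all hypothetical simplifications, and cluster creation and validity handling are left out.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class InstanceMergeSketch {

    static final String ADD = "add";
    static final String REMOVE = "remove";

    // Applies one action to a copy of the current map (datum key -> instance id).
    // The input map is not modified.
    static Map<String, String> update(Map<String, String> current, String action, String... datumKeys) {
        Map<String, String> merged = new LinkedHashMap<>(current);
        for (String key : datumKeys) {
            if (REMOVE.equals(action)) {
                merged.remove(key);
            } else {
                // Reuse the id of an already registered instance, otherwise generate one.
                String oldId = merged.get(key);
                merged.put(key, oldId != null ? oldId : "generated-" + key);
            }
        }
        // Same guard as the listing: an add must never leave the list empty.
        if (merged.isEmpty() && ADD.equals(action)) {
            throw new IllegalArgumentException("ip list can not be empty");
        }
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> current = new LinkedHashMap<>();
        current.put("10.0.0.1:8080", "existing-id");
        Map<String, String> added = update(current, ADD, "10.0.0.1:8080", "10.0.0.2:8080");
        System.out.println(added);
        System.out.println(update(added, REMOVE, "10.0.0.1:8080"));
    }
}
```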

When this method returns, the namespace -> service -> cluster -> instance storage structure is in place.

Registering with the V2 implementation

InstanceOperatorClientImpl

@Override

public void registerInstance(String namespaceId, String serviceName, Instance instance) {

// Whether this is an ephemeral instance

boolean ephemeral = instance.isEphemeral();

// Get the client id

String clientId = IpPortBasedClient.getClientId(instance.toInetAddr(), ephemeral);

createIpPortClientIfAbsent(clientId);

// Get the service

Service service = getService(namespaceId, serviceName, ephemeral);

// Register the instance

clientOperationService.registerInstance(service, instance, clientId);

}

Persistent instances are registered through PersistentClientOperationServiceImpl, which uses the JRaft protocol to write the data to the Raft cluster.

@Override

public void registerInstance(Service service, Instance instance, String clientId) {

Service singleton = ServiceManager.getInstance().getSingleton(service);

// Reject the request if the service is ephemeral

if (singleton.isEphemeral()) {

throw new NacosRuntimeException(NacosException.INVALID_PARAM,

String.format("Current service %s is ephemeral service, can't register persistent instance.",

singleton.getGroupedServiceName()));

}

final InstanceStoreRequest request = new InstanceStoreRequest();

request.setService(service);

request.setInstance(instance);

request.setClientId(clientId);

final WriteRequest writeRequest = WriteRequest.newBuilder().setGroup(group())

.setData(ByteString.copyFrom(serializer.serialize(request))).setOperation(DataOperation.ADD.name())

.build();

try {

// Write through the JRaft protocol

protocol.write(writeRequest);

} catch (Exception e) {

throw new NacosRuntimeException(NacosException.SERVER_ERROR, e);

}

}

JRaft synchronization

JRaftProtocol

@Override

public Response write(WriteRequest request) throws Exception {

// Asynchronous write

CompletableFuture<Response> future = writeAsync(request);

// Here you wait for 10 seconds, as long as possible, for the request to complete

return future.get(10_000L, TimeUnit.MILLISECONDS);

}

@Override

public CompletableFuture<Response> writeAsync(WriteRequest request) {

return raftServer.commit(request.getGroup(), request, new CompletableFuture<>());

}
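The write method is a synchronous wrapper over writeAsync: it blocks on the returned CompletableFuture with a timeout. A minimal sketch of that pattern is shown here. SyncOverAsyncSketch and its methods are hypothetical, and the Raft commit is replaced by a trivial asynchronous computation.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

final class SyncOverAsyncSketch {

    // Stand-in for the asynchronous commit; the result is produced on another thread.
    static CompletableFuture<String> writeAsync(String request) {
        return CompletableFuture.supplyAsync(() -> "committed:" + request);
    }

    // Waits for at most timeoutMs. If the future is not completed in time,
    // get throws java.util.concurrent.TimeoutException.
    static String await(CompletableFuture<String> future, long timeoutMs) throws Exception {
        return future.get(timeoutMs, TimeUnit.MILLISECONDS);
    }

    // Synchronous write built on top of the asynchronous one.
    static String write(String request, long timeoutMs) throws Exception {
        return await(writeAsync(request), timeoutMs);
    }

    public static void main(String[] args) throws Exception {
        System.out.println(write("register-instance", 10_000L));
    }
}
```

Note that a timeout on the caller side does not cancel the underlying operation; in the Raft case the entry may still be committed after the caller has given up waiting.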

JRaftServer

public CompletableFuture<Response> commit(final String group, final Message data,

final CompletableFuture<Response> future) {

LoggerUtils.printIfDebugEnabled(Loggers.RAFT, "data requested this time : {}", data);

final RaftGroupTuple tuple = findTupleByGroup(group);

if (tuple == null) {

future.completeExceptionally(new IllegalArgumentException("No corresponding Raft Group found : " + group));

return future;

}

FailoverClosureImpl closure = new FailoverClosureImpl(future);

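// Get the local node of this Raft group

final Node node = tuple.node;

// If this node is the leader, apply the request directly; JRaft then replicates it to the other nodes

if (node.isLeader()) {

// The leader node directly applies this request

applyOperation(node, data, closure);

} else {

// If this node is a follower, forward the request to the leader, which performs the write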

// Forward to Leader for request processing

invokeToLeader(group, data, rpcRequestTimeoutMs, closure);

}

return future;

}

Follower: forwarding to the leader

On a follower, the node looks up the leader and forwards the request to it over gRPC. The leader then handles the write, which again ends up in applyOperation.

private void invokeToLeader(final String group, final Message request, final int timeoutMillis,

FailoverClosure closure) {

try {

// Look up the leader of this group

final Endpoint leaderIp = Optional.ofNullable(getLeader(group))

.orElseThrow(() -> new NoLeaderException(group)).getEndpoint();

// Let the leader perform the operation

cliClientService.getRpcClient().invokeAsync(leaderIp, request, new InvokeCallback() {

@Override

public void complete(Object o, Throwable ex) {

if (Objects.nonNull(ex)) {

closure.setThrowable(ex);

closure.run(new Status(RaftError.UNKNOWN, ex.getMessage()));

return;

}

if (!((Response)o).getSuccess()) {

closure.setThrowable(new IllegalStateException(((Response) o).getErrMsg()));

closure.run(new Status(RaftError.UNKNOWN, ((Response) o).getErrMsg()));

return;

}

closure.setResponse((Response) o);

closure.run(Status.OK());

}

@Override

public Executor executor() {

return RaftExecutor.getRaftCliServiceExecutor();

}

}, timeoutMillis);

} catch (Exception e) {

closure.setThrowable(e);

closure.run(new Status(RaftError.UNKNOWN, e.toString()));

}

}

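Leader: handling the write request

If the current node is the leader, it can perform the write directly. The request is submitted to the Raft cluster through sofa-jraft's Node.apply(Task) method.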

public void applyOperation(Node node, Message data, FailoverClosure closure) {

final Task task = new Task();

task.setDone(new NacosClosure(data, status -> {

NacosClosure.NacosStatus nacosStatus = (NacosClosure.NacosStatus) status;

closure.setThrowable(nacosStatus.getThrowable());

closure.setResponse(nacosStatus.getResponse());

closure.run(nacosStatus);

}));

// add request type field at the head of task data.

byte[] requestTypeFieldBytes = new byte[2];

requestTypeFieldBytes[0] = ProtoMessageUtil.REQUEST_TYPE_FIELD_TAG;

if (data instanceof ReadRequest) {

requestTypeFieldBytes[1] = ProtoMessageUtil.REQUEST_TYPE_READ;

} else {

requestTypeFieldBytes[1] = ProtoMessageUtil.REQUEST_TYPE_WRITE;

}

byte[] dataBytes = data.toByteArray();

task.setData((ByteBuffer) ByteBuffer.allocate(requestTypeFieldBytes.length + dataBytes.length)

.put(requestTypeFieldBytes).put(dataBytes).position(0));

node.apply(task);

}
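applyOperation prepends a two-byte header (a tag byte and a request-type byte) to the serialized message, so that a node reading the log entry can tell reads from writes before parsing the payload. The framing can be sketched with plain ByteBuffer operations. The tag and type values below are hypothetical placeholders; the real constants live in ProtoMessageUtil and are not shown in this article.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class RequestTypeFramingSketch {

    // Hypothetical values for illustration only.
    static final byte TYPE_FIELD_TAG = (byte) 0x7F;
    static final byte TYPE_READ = 1;
    static final byte TYPE_WRITE = 2;
    static final int HEADER_LENGTH = 2;

    // Writes [tag, type, payload...] and rewinds so the buffer can be read from the start.
    static ByteBuffer frame(boolean isRead, byte[] payload) {
        ByteBuffer buffer = ByteBuffer.allocate(HEADER_LENGTH + payload.length);
        buffer.put(TYPE_FIELD_TAG).put(isRead ? TYPE_READ : TYPE_WRITE).put(payload);
        buffer.position(0);
        return buffer;
    }

    // Reads the request type from the second header byte without moving the position.
    static boolean isRead(ByteBuffer framed) {
        return framed.get(1) == TYPE_READ;
    }

    // Returns the payload that follows the header (heap buffers only).
    static byte[] payload(ByteBuffer framed) {
        byte[] all = framed.array();
        return Arrays.copyOfRange(all, HEADER_LENGTH, all.length);
    }

    public static void main(String[] args) {
        ByteBuffer framed = frame(false, new byte[] {10, 20, 30});
        System.out.println(isRead(framed));
        System.out.println(Arrays.toString(payload(framed)));
    }
}
```

Rewinding with position(0) matters because put advances the position to the end of the buffer; without it a consumer reading from the current position would see no remaining bytes.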

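Applying the log

NacosStateMachine

After the Task is submitted to sofa-jraft and more than half of the nodes have committed the log entry, every node eventually calls the onApply method of the user-implemented state machine (the leader once it sees the majority commit, the followers through log replication), so the entry is applied to the local state machine on all nodes. NacosStateMachine is Nacos's implementation of the sofa-jraft StateMachine; its onApply method is shown here.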

@Override

public void onApply(Iterator iter) {

int index = 0;

int applied = 0;

Message message;

NacosClosure closure = null;

try {

while (iter.hasNext()) {

Status status = Status.OK();

try {

// On the leader, done is non-null, which avoids the cost of deserializing the message again

if (iter.done() != null) {

closure = (NacosClosure) iter.done();

message = closure.getMessage();

} else {

final ByteBuffer data = iter.getData();

message = ProtoMessageUtil.parse(data.array());

if (message instanceof ReadRequest) {

//'iter.done() == null' means current node is follower, ignore read operation

applied++;

index++;

iter.next();

continue;

}

}

LoggerUtils.printIfDebugEnabled(Loggers.RAFT, "receive log : {}", message);

// Handle write requests

if (message instanceof WriteRequest) {

Response response = processor.onApply((WriteRequest) message);

postProcessor(response, closure);

}

// A consistent read that was downgraded to go through the Raft log

if (message instanceof ReadRequest) {

Response response = processor.onRequest((ReadRequest) message);

postProcessor(response, closure);

}

} catch (Throwable e) {

index++;

status.setError(RaftError.UNKNOWN, e.toString());

Optional.ofNullable(closure).ifPresent(closure1 -> closure1.setThrowable(e));

throw e;

} finally {

Optional.ofNullable(closure).ifPresent(closure1 -> closure1.run(status));

}

applied++;

index++;

iter.next();

}

} catch (Throwable t) {

Loggers.RAFT.error("processor : {}, stateMachine meet critical error: {}.", processor, t);

iter.setErrorAndRollback(index - applied,

new Status(RaftError.ESTATEMACHINE, "StateMachine meet critical error: %s.",

ExceptionUtil.getStackTrace(t)));

}

}

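Handling the write request

The WriteRequest is finally handed to a RequestProcessor. Since this article follows the registration of a persistent instance, the request goes to PersistentClientOperationServiceImpl and reaches its onApply method.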

@Override

public Response onApply(WriteRequest request) {

final InstanceStoreRequest instanceRequest = serializer.deserialize(request.getData().toByteArray());

final DataOperation operation = DataOperation.valueOf(request.getOperation());

final Lock lock = readLock;

lock.lock();

try {

switch (operation) {

// Add

case ADD:

onInstanceRegister(instanceRequest.service, instanceRequest.instance,

instanceRequest.getClientId());

break;

// Delete

case DELETE:

onInstanceDeregister(instanceRequest.service, instanceRequest.getClientId());

break;

default:

return Response.newBuilder().setSuccess(false).setErrMsg("unsupport operation : " + operation)

.build();

}

return Response.newBuilder().setSuccess(true).build();

} finally {

lock.unlock();

}

}
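The onApply method above follows a common shape: decode the operation name into an enum, switch on it, and report unsupported operations in the response instead of throwing. A stripped-down sketch of that dispatch is given here. OperationDispatchSketch, its Op enum, and the in-memory map are hypothetical; the real method works on InstanceStoreRequest and returns a protobuf Response.

```java
import java.util.HashMap;
import java.util.Map;

final class OperationDispatchSketch {

    // Hypothetical operation set; CHANGE exists only to exercise the default branch.
    enum Op { ADD, DELETE, CHANGE }

    // clientId -> instance, standing in for the client manager state.
    private final Map<String, String> instancesByClient = new HashMap<>();

    // Applies one operation. Returns null on success, or an error message
    // when the operation is not supported by this processor.
    String onApply(String operationName, String clientId, String instance) {
        Op op = Op.valueOf(operationName);
        switch (op) {
            case ADD:
                instancesByClient.put(clientId, instance);
                return null;
            case DELETE:
                instancesByClient.remove(clientId);
                return null;
            default:
                return "unsupported operation : " + op;
        }
    }

    int size() {
        return instancesByClient.size();
    }

    public static void main(String[] args) {
        OperationDispatchSketch processor = new OperationDispatchSketch();
        System.out.println(processor.onApply("ADD", "10.0.0.1:8080#false", "instance-1"));
        System.out.println(processor.size());
        System.out.println(processor.onApply("CHANGE", "10.0.0.1:8080#false", "instance-1"));
    }
}
```

Because every node replays the same log entries through this method, the dispatch has to be deterministic: the same sequence of operations must produce the same state on every node.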

private void onInstanceRegister(Service service, Instance instance, String clientId) {

Service singleton = ServiceManager.getInstance().getSingleton(service);

if (!clientManager.contains(clientId)) {

clientManager.clientConnected(clientId, new ClientAttributes());

}

Client client = clientManager.getClient(clientId);

InstancePublishInfo instancePublishInfo = getPublishInfo(instance);

client.addServiceInstance(singleton, instancePublishInfo);

client.setLastUpdatedTime();

// Publish the change event

NotifyCenter.publishEvent(new ClientOperationEvent.ClientRegisterServiceEvent(singleton, clientId));

}

From here the flow returns to the handling of the change event.