Packaging PaddleOCR Text Recognition for Android as an AAR Library

Purpose

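The official project already documents on-device deployment in detail. If you cannot get it to compile by following the official steps, leave a comment and I will publish my detailed build steps as well. Here I mainly explain how to turn the officially provided code into a portable module that can easily be pulled into any project.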

Official 2.5 build tutorial: PaddleOCR/readme.md at release/2.5 · PaddlePaddle/PaddleOCR (github.com)

Let's get started!

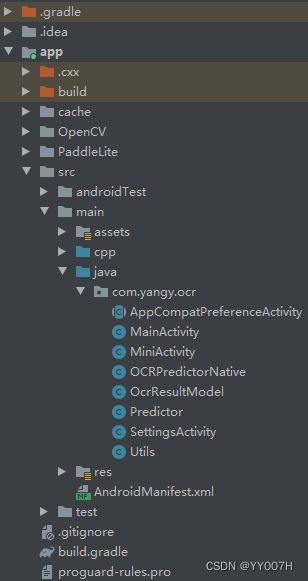

The figure compares the official project structure with the module structure; the module's layout is much cleaner.

1. First, copy all files from the official project into a newly created module.

2. Delete the following files:

3. Create a singleton class, OCRPredictor, that starts the predictor, and a callback class, OnImagePredictorListener. The code is as follows:

```java
package com.yangy.ocr;

import android.annotation.SuppressLint;
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.media.ExifInterface;
import android.os.Binder;
import android.os.Bundle;
import android.os.Handler;
import android.os.HandlerThread;
import android.os.Looper;
import android.os.Message;
import android.text.TextUtils;
import android.util.Base64;
import android.util.Log;

import java.util.ArrayList;

public class OCRPredictor {

    private static final String TAG = "OCR";

    // Commands handled on the worker thread
    public static final int REQUEST_LOAD_MODEL = 0;
    public static final int REQUEST_RUN_MODEL = 1;

    // Replies delivered on the main thread
    public static final int RESPONSE_LOAD_MODEL_SUCCESSED = 0;
    public static final int RESPONSE_LOAD_MODEL_FAILED = 1;
    public static final int RESPONSE_RUN_MODEL_SUCCESSED = 2;
    public static final int RESPONSE_RUN_MODEL_FAILED = 3;
    public static final int RESPONSE_RUN_MODEL_NO_FILE = 4;

    // Alternative model set (bank cards); swap these in to use it
    // private final String assetModelDirPath = "models/bank";
    // private final String assetlabelFilePath = "labels/ppocr_keys_bank.txt";

    // Model directory and label file, relative to the module's assets folder
    private final String assetModelDirPath = "models/ocr";
    private final String assetlabelFilePath = "labels/ppocr_keys_v1.txt";

    protected Handler sender = null;       // sends commands to the worker thread
    protected HandlerThread worker = null; // worker thread that loads and runs the model
    protected volatile Predictor predictor = null;
    private volatile boolean destroyed = false;

    // Only the application context is stored, so this static field cannot leak an Activity
    @SuppressLint("StaticFieldLeak")
    private static OCRPredictor ocrPredictor;

    private final Context context;

    public static synchronized OCRPredictor getInstance(Context context) {
        if (ocrPredictor == null) {
            ocrPredictor = new OCRPredictor(context.getApplicationContext());
        }
        return ocrPredictor;
    }

    private OCRPredictor(Context context) {
        this.context = context;
        init();
    }

    // Starts the worker thread and queues the model load as its first job
    private void init() {
        worker = new HandlerThread("Predictor Worker");
        worker.start();
        sender = new Handler(worker.getLooper()) {
            @Override
            public void handleMessage(Message msg) {
                switch (msg.what) {
                    case REQUEST_LOAD_MODEL:
                        if (!onLoadModel(context)) {
                            predictor = null;
                            Log.d(TAG, "Failed to load the model!");
                        } else {
                            Log.d(TAG, "Model loaded successfully!");
                        }
                        break;
                    case REQUEST_RUN_MODEL:
                        handleRunRequest(msg.getData(), (OnImagePredictorListener) msg.obj);
                        break;
                    default:
                        break;
                }
            }
        };
        sender.sendEmptyMessage(REQUEST_LOAD_MODEL);
    }

    // Worker thread: run the model on whichever input was supplied, then post the outcome
    private void handleRunRequest(Bundle bundle, OnImagePredictorListener listener) {
        String imagePath = bundle.getString("imagePath");
        ImageBinder imageBinder = (ImageBinder) bundle.getBinder("bitmap");
        StringBinder stringBinder = (StringBinder) bundle.getBinder("base64");
        int code;
        if (predictor == null) {
            code = RESPONSE_LOAD_MODEL_FAILED;
        } else if (!TextUtils.isEmpty(imagePath)) {
            code = toResponse(onRunModel(imagePath));
        } else if (imageBinder != null) {
            code = toResponse(onRunModel(imageBinder.getBitmap()));
        } else if (stringBinder != null) {
            code = toResponse(onRunModelBase64(stringBinder.getStr()));
        } else {
            code = RESPONSE_RUN_MODEL_NO_FILE;
        }
        Result result = new Result(listener);
        if (code == RESPONSE_RUN_MODEL_SUCCESSED) {
            // Capture the outputs on this thread, before a queued request can replace them
            result.text = predictor.outputResult;
            result.list = predictor.outputResultList == null ? null : new ArrayList(predictor.outputResultList);
            result.image = predictor.outputImage;
        }
        receiver.obtainMessage(code, result).sendToTarget();
    }

    private static int toResponse(boolean success) {
        return success ? RESPONSE_RUN_MODEL_SUCCESSED : RESPONSE_RUN_MODEL_FAILED;
    }

    // One reply from the worker thread to the main thread
    private static final class Result {
        final OnImagePredictorListener listener;
        String text;
        ArrayList list;
        Bitmap image;

        Result(OnImagePredictorListener listener) {
            this.listener = listener;
        }
    }

    // Main thread: hand the reply to the listener of the request that produced it
    private final Handler receiver = new Handler(Looper.getMainLooper()) {
        @Override
        public void handleMessage(Message msg) {
            Result r = (Result) msg.obj;
            if (destroyed || r == null || r.listener == null) {
                return;
            }
            switch (msg.what) {
                case RESPONSE_LOAD_MODEL_FAILED:
                    r.listener.failure(0, "Failed to load the model!");
                    break;
                case RESPONSE_RUN_MODEL_SUCCESSED:
                    r.listener.success(r.text, r.list, r.image);
                    break;
                case RESPONSE_RUN_MODEL_FAILED:
                    r.listener.failure(1, "Failed to run the model!");
                    break;
                case RESPONSE_RUN_MODEL_NO_FILE:
                    r.listener.failure(2, "Failed to run the model: please pass in an image to recognize!");
                    break;
                default:
                    break;
            }
        }
    };

    // Recognize the image at a file path (its EXIF orientation is applied first)
    public void predictor(final String imagePath, final OnImagePredictorListener listener) {
        Bundle bundle = new Bundle();
        bundle.putString("imagePath", imagePath);
        sendRunRequest(bundle, listener);
    }

    // Recognize an in-memory Bitmap
    public void predictorBitMap(final Bitmap bitmap, final OnImagePredictorListener listener) {
        Bundle bundle = new Bundle();
        bundle.putBinder("bitmap", new ImageBinder(bitmap));
        sendRunRequest(bundle, listener);
    }

    // Recognize a Base64-encoded image file (e.g. JPEG or PNG bytes)
    public void predictorBase64(final String base64, final OnImagePredictorListener listener) {
        Bundle bundle = new Bundle();
        bundle.putBinder("base64", new StringBinder(base64));
        sendRunRequest(bundle, listener);
    }

    // The listener travels with its own request, so concurrent callers cannot overwrite each other's
    private void sendRunRequest(Bundle bundle, OnImagePredictorListener listener) {
        Message message = sender.obtainMessage(REQUEST_RUN_MODEL, listener);
        message.setData(bundle);
        message.sendToTarget();
    }

    // Carry a Bitmap / String through a Bundle by reference (in-process only)
    private static class ImageBinder extends Binder {
        private final Bitmap bitmap;

        ImageBinder(Bitmap bitmap) {
            this.bitmap = bitmap;
        }

        Bitmap getBitmap() {
            return bitmap;
        }
    }

    private static class StringBinder extends Binder {
        private final String str;

        StringBinder(String str) {
            this.str = str;
        }

        String getStr() {
            return str;
        }
    }

    // Worker thread: create the Predictor and load the model from assets
    private boolean onLoadModel(Context context) {
        if (predictor == null) {
            predictor = new Predictor();
        }
        return predictor.init(context, assetModelDirPath, assetlabelFilePath);
    }

    private boolean onRunModel(String imagePath) {
        try {
            ExifInterface exif = new ExifInterface(imagePath);
            int orientation = exif.getAttributeInt(ExifInterface.TAG_ORIENTATION,
                    ExifInterface.ORIENTATION_UNDEFINED);
            Log.i(TAG, "rotation " + orientation);
            Bitmap image = BitmapFactory.decodeFile(imagePath);
            if (image == null) {
                return false; // missing or undecodable file
            }
            return onRunModel(Utils.rotateBitmap(image, orientation));
        } catch (Exception e) {
            Log.e(TAG, "Failed to read " + imagePath, e);
            return false;
        }
    }

    private boolean onRunModel(Bitmap image) {
        if (image == null) {
            return false;
        }
        try {
            predictor.setInputImage(image);
            return predictor.isLoaded() && predictor.runModel();
        } catch (Exception e) {
            Log.e(TAG, "Inference failed", e);
            return false;
        }
    }

    private boolean onRunModelBase64(String base64) {
        try {
            byte[] bytes = Base64.decode(base64, Base64.DEFAULT);
            return onRunModel(BitmapFactory.decodeByteArray(bytes, 0, bytes.length));
        } catch (Exception e) {
            Log.e(TAG, "Invalid Base64 input", e);
            return false;
        }
    }

    // Unload the model (worker thread)
    private void onUnloadModel() {
        if (predictor != null) {
            predictor.releaseModel();
            predictor = null;
        }
    }

    // Destroy: call when OCR is no longer needed
    public void onDestroy() {
        synchronized (OCRPredictor.class) {
            if (ocrPredictor == this) {
                ocrPredictor = null;
            }
        }
        destroyed = true;
        receiver.removeCallbacksAndMessages(null);
        sender.removeMessages(REQUEST_RUN_MODEL);
        // Release on the worker thread so it cannot race with an inference in progress
        sender.post(new Runnable() {
            @Override
            public void run() {
                onUnloadModel();
            }
        });
        worker.quitSafely(); // API 18+, always available with minSdkVersion 21
    }
}
```
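OCRPredictor serializes all work on a single worker. The model load is queued first on the "Predictor Worker" HandlerThread through `sender`, and every `predictor*` call is queued behind it. Results come back through the `receiver` Handler.

Android's Handler and Looper cannot run off-device, so the following plain-Java sketch models the same ordering with `java.util.concurrent` instead. This is a stand-in, not the module's code: one single-thread executor plays the worker, and a second one plays the main thread. The class name `SerialPredictorSketch` and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical stand-in for OCRPredictor's threading model (not Android code)
public class SerialPredictorSketch {
    private final ExecutorService worker = Executors.newSingleThreadExecutor(); // plays the HandlerThread
    private final ExecutorService main = Executors.newSingleThreadExecutor();   // plays the main-thread Handler
    private volatile boolean loaded;

    public SerialPredictorSketch() {
        // Like sender.sendEmptyMessage(REQUEST_LOAD_MODEL): queued first, so it runs before any request
        worker.execute(() -> loaded = true);
    }

    // Like predictor(imagePath, listener): queue the job, deliver the result on the "main" thread
    public void predict(String input, Consumer<String> onSuccess) {
        worker.execute(() -> {
            String result = loaded ? "text from " + input : "model not loaded"; // placeholder for runModel()
            main.execute(() -> onSuccess.accept(result));
        });
    }

    // Like onDestroy(): let queued work finish, then stop both threads
    public void shutdown() throws InterruptedException {
        worker.shutdown();
        worker.awaitTermination(5, TimeUnit.SECONDS);
        main.shutdown();
        main.awaitTermination(5, TimeUnit.SECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        SerialPredictorSketch p = new SerialPredictorSketch();
        List<String> results = Collections.synchronizedList(new ArrayList<>());
        for (String img : new String[] {"a.jpg", "b.jpg", "c.jpg"}) {
            p.predict(img, results::add);
        }
        p.shutdown();
        System.out.println(results); // [text from a.jpg, text from b.jpg, text from c.jpg]
    }
}
```

Because there is only one worker, requests are processed one at a time in submission order. A slow image therefore delays every request queued behind it.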

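The callback class OnImagePredictorListener:

```java
package com.yangy.ocr;

import android.graphics.Bitmap;

import java.util.ArrayList;

// Callback for OCRPredictor results
public abstract class OnImagePredictorListener {

    // result: recognized text (Predictor.outputResult)
    // list: per-region results (Predictor.outputResultList)
    // bitmap: output image (Predictor.outputImage)
    public abstract void success(String result, ArrayList list, Bitmap bitmap);

    // code 0: model failed to load; 1: model run failed; 2: no input image. Override as needed
    public void failure(int code, String message) {
    }
}
```

4. Modify the Predictor class by adding the following two lines: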


5. Modify native.cpp: in the JNI function names, replace the official _com_baidu_paddle_lite_demo_ocr_ with our own package name, e.g. _com_yangy_ocr_.

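The rename is needed because JNI binds a Java `native` method to a C/C++ function by name. The exported symbol is built from three parts:

- the prefix `Java_`;
- the fully qualified class name, with `.` replaced by `_`;
- a final `_` followed by the method name.

An underscore that is part of a name is escaped as `_1`, and non-ASCII characters become `_0xxxx`. If the Java package changes but native.cpp keeps the old names, the methods fail to link at runtime with an `UnsatisfiedLinkError`.

Below is a small sketch of these mangling rules from the JNI specification. `OCRPredictorNative` and `init` are used as illustrative class and method names; check them against the `native` methods in your copy of the demo.

```java
// Sketch of JNI "short name" mangling (JNI spec); the class/method names in main are illustrative
public class JniSymbolName {

    // Escapes a class or method name the way JNI does
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');             // package separator
            } else if (c == '_') {
                sb.append("_1");            // literal underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if (c < 128 && Character.isLetterOrDigit(c)) {
                sb.append(c);               // ASCII letters and digits are kept
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other chars as _0xxxx, lowercase hex
            }
        }
        return sb.toString();
    }

    static String symbol(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    public static void main(String[] args) {
        // Before: the demo's package; after: the module's package
        System.out.println(symbol("com.baidu.paddle.lite.demo.ocr.OCRPredictorNative", "init"));
        System.out.println(symbol("com.yangy.ocr.OCRPredictorNative", "init"));
    }
}
```

Only the package fragment of each symbol changes, which is why replacing _com_baidu_paddle_lite_demo_ocr_ is enough. This holds as long as the Java class keeps its name.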

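6. Final step: in the module's build.gradle, delete the code that downloads the PaddleLite and OpenCV packages (everything in the red box). We don't need it, because you compile PaddleLite and the model package yourself.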
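Without the download step, the self-compiled model and label files must already be in the module's assets before you build the aar. OCRPredictor loads them from models/ocr and labels/ppocr_keys_v1.txt (assetModelDirPath and assetlabelFilePath).

The plain-Java check below is a hypothetical pre-build helper, not part of the demo. It assumes the default Android assets location, src/main/assets, which may differ in your module.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

// Hypothetical pre-build check: are the assets OCRPredictor expects present?
public class AssetCheck {

    // Mirror assetModelDirPath and assetlabelFilePath in OCRPredictor
    static final String MODEL_DIR = "models/ocr";
    static final String LABEL_FILE = "labels/ppocr_keys_v1.txt";

    // Returns a list of problems; an empty list means the assets look complete
    static List<String> check(Path assetsDir) throws IOException {
        List<String> problems = new ArrayList<>();
        Path modelDir = assetsDir.resolve(MODEL_DIR);
        if (!Files.isDirectory(modelDir)) {
            problems.add("missing directory " + MODEL_DIR);
        } else {
            try (Stream<Path> files = Files.list(modelDir)) {
                if (!files.anyMatch(Files::isRegularFile)) {
                    problems.add("no model files in " + MODEL_DIR);
                }
            }
        }
        if (!Files.isRegularFile(assetsDir.resolve(LABEL_FILE))) {
            problems.add("missing file " + LABEL_FILE);
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass another path as the first argument if yours differs
        Path assets = Paths.get(args.length > 0 ? args[0] : "src/main/assets");
        List<String> problems = check(assets);
        System.out.println(problems.isEmpty() ? "assets OK" : "problems: " + problems);
    }
}
```

The helper only checks that files exist. It does not check whether the model files match the Paddle Lite version you compiled.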

7. Usage: add the module as a dependency of the main app; a few lines of code are enough.
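Of the three entry points, predictorBase64 has the least obvious input format. It passes its string to `android.util.Base64.decode(..., Base64.DEFAULT)` and then to `BitmapFactory.decodeByteArray`. It therefore expects standard Base64 of an encoded image file (for example JPEG or PNG bytes), not of raw pixels.

The plain-Java sketch below shows how a caller holding the file bytes, for example a server or a test harness, could produce such a string with `java.util.Base64`. The file name sample.jpg is a placeholder. On the device itself, `java.util.Base64` requires API 26, which is above the module's minSdkVersion 21. There, `android.util.Base64.encodeToString(bytes, Base64.NO_WRAP)` produces the same output.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Base64;

// Sketch: turn an image file into the kind of string predictorBase64(...) decodes
public class ImageToBase64 {

    // Encodes the compressed file bytes (JPEG/PNG), not decoded pixels
    static String encode(byte[] imageFileBytes) {
        return Base64.getEncoder().encodeToString(imageFileBytes); // standard alphabet, padded, no line breaks
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "sample.jpg"; // placeholder file name
        String base64 = encode(Files.readAllBytes(Paths.get(path)));
        System.out.println(base64.length() + " Base64 chars");
        // On the device: OCRPredictor.getInstance(context).predictorBase64(base64, listener);
    }
}
```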

Done!

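Below is a package I built myself. After adding the aar, use it as shown in the last figure above. Details:

1. minSdkVersion 21

2. Supports arm64-v8a and armeabi-v7a

Download link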