Cloud Native Series 1: Docker

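1. Preparing the virtual machine cluster environment

VirtualBox is virtualization software similar to VMware. Download the latest version for free from the official site, https://www.virtualbox.org/. Inside VirtualBox, press right Ctrl + Home to release the mouse back to Windows.

VirtualBox installation steps: https://blog.csdn.net/rfc2544/article/details/131338906

CentOS image download: https://mirrors.ustc.edu.cn/centos/7.9.2009/isos/x86_64/

After installing CentOS 7 (or another guest) in VirtualBox, the installer screen may appear again on the first boot or after a reboot. The likely cause is the VM's boot order: the optical drive is tried before the hard disk.

Fix: right after installation finishes and before booting, open the VM's Settings, go to System, and change the boot order to hard disk, floppy, optical, network (network is normally left unchecked). The VM then boots normally.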

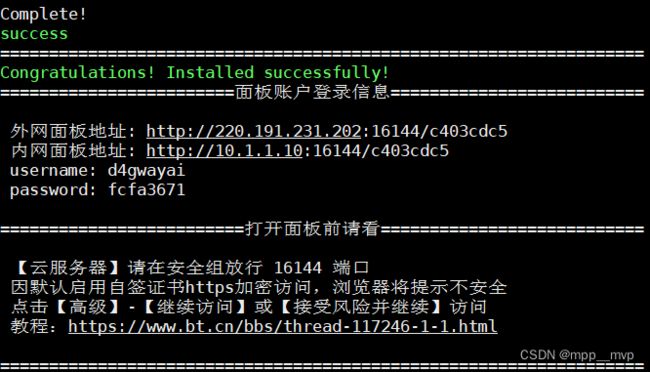

BT Panel (宝塔面板): https://www.bt.cn/new/index.html

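2. Docker, stage one

Docker website: http://www.docker.com; official documentation: https://docs.docker.com/get-docker/

Official installation guide: https://docs.docker.com/engine/install/centos/

1. Installing Docker

1. Make sure the environment meets the requirements (CentOS kernel 3.10 or later)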

[root@localhost ~]# uname -r

3.10.0-957.el7.x86_64
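The kernel check above can also be scripted. The following is a minimal sketch, not part of the original setup: the helper name `kernel_at_least` is hypothetical, and it assumes the release string starts with `MAJOR.MINOR` as in the `uname -r` output shown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when a kernel release string is at least
# MAJOR.MINOR. Assumes the string begins with "MAJOR.MINOR".
kernel_at_least() {
  local release="$1" want_major="$2" want_minor="$3"
  local major minor
  major="${release%%.*}"        # "3.10.0-957.el7.x86_64" -> "3"
  minor="${release#*.}"         # -> "10.0-957.el7.x86_64"
  minor="${minor%%[!0-9]*}"     # -> "10"
  if [ "$major" -gt "$want_major" ]; then return 0; fi
  if [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; then return 0; fi
  return 1
}

# Check the running kernel against the 3.10 requirement
if kernel_at_least "$(uname -r)" 3 10; then
  echo "kernel OK for Docker"
else
  echo "kernel older than 3.10"
fi
```

The comparison is numeric on the first two fields only, which is enough for the 3.10 requirement stated above.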

Use the yum repositories that ship with the system

[root@localhost yum.repos.d]# ls

CentOS-Base.repo CentOS-CR.repo CentOS-Debuginfo.repo CentOS-fasttrack.repo CentOS-Media.repo CentOS-Sources.repo CentOS-Vault.repo

2. Remove old Docker versions

sudo yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

3. Install the gcc toolchain

yum -y install gcc

yum -y install gcc-c++

4. Configure the repository Docker is installed from

sudo yum install -y yum-utils

# download.docker.com is very slow

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# so use a mirror inside China here

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

5. Install Docker

# Refresh the yum index

yum makecache fast

# Install Docker

yum install -y docker-ce docker-ce-cli containerd.io

6. Start Docker

systemctl start docker

# Check the version

docker version

7. Verify that the installation succeeded

docker run hello-world

2. Uninstalling Docker

systemctl stop docker

yum remove docker-ce docker-ce-cli containerd.io

sudo rm -rf /var/lib/docker

sudo rm -rf /var/lib/containerd

3. Configure a registry mirror, here the Alibaba Cloud mirror (by default images are pulled from Docker Hub)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://aaaa.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

4. Common Docker commands. For help: docker --help

Image commands. For help: docker images --help

List all images: docker images

Columns: repository, tag (version), image ID, creation time, size

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest feb5d9fea6a5 2 years ago 13.3kB

# -a shows all images; -q shows only image IDs

[root@localhost ~]# docker images -qa

feb5d9fea6a5

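Search for an image: docker search mysql

Pull an image: docker pull mysql (pulls the latest tag by default)

Pull a specific version: docker pull mysql:5.7, that is, docker pull IMAGE:TAG

Remove an image: docker rmi IMAGE (name or ID)

If a container still refers to the image, it can't be removed directly; force the removal with docker rmi -f IMAGE (name or ID)

Remove all images: docker rmi -f $(docker images -aq)

Container commands. For help: docker run --help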

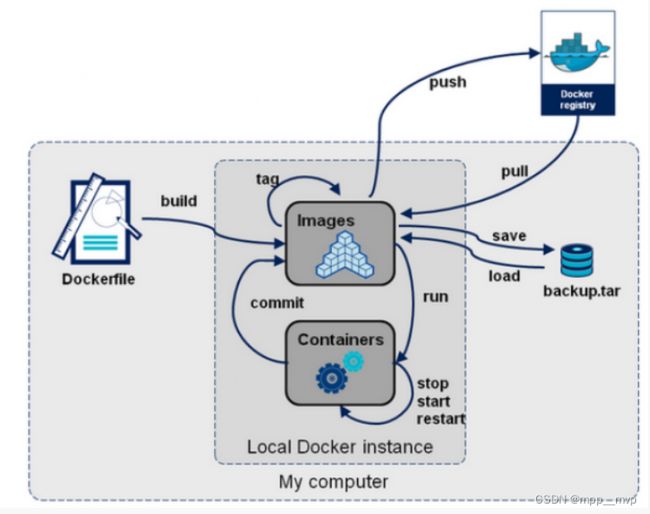

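Registry, image, container: run a container from an image with docker run IMAGE

Docker first looks for the image locally (if it exists, the container is started from the local image; if not, Docker goes to the registry, Docker Hub, to download it)

It then pulls from the registry (if the image exists there, it is pulled locally and run; if not, an error is reported)

# Common options

--name="Name" gives the container a name

-d starts the container in the background

-i starts the container in interactive mode

-t allocates a terminal for the container, typically used with /bin/bash

-p (lowercase) maps a port (host port:container port)

-P (uppercase) publishes the exposed ports on random host ports

docker run IMAGE (with no foreground process the container exits at once, and docker start can't keep it running either)

docker run -d IMAGE runs the container in the background (same caveat: without a foreground process it exits, and docker start doesn't help)

docker run -tid IMAGE runs the container in the background (the container stays running)

If the container has no foreground process (no -it) and is started with -d alone, it exits and ends up stopped. A command can be given after the image name with -ti; if omitted, the image's default command is used (/bin/bash for the centos image).

docker run -it IMAGE: once inside the container, exit leaves the container and stops it; Ctrl+P followed by Ctrl+Q detaches without stopping it

docker ps lists running containers; -a lists all containers including stopped ones; -q shows only container IDs: docker ps -aq

Start, stop, and restart containers with start/stop/restart, for example docker stop CONTAINER (name or ID)

Remove a container: docker rm -f CONTAINER (name or ID)

Remove all images: docker rmi -f $(docker images -aq)

Remove all containers: docker rm -f $(docker ps -aq)

View logs: docker logs CONTAINER (name or ID)

-c passes a shell command string to bash; the infinite loop keeps the container running, otherwise it would stop.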

[root@localhost ~]# docker run -d centos /bin/bash -c "while true;do echo 111;sleep 1;done"

8d802e2c9f8c34a52437fb15feb8c2ff77fca1413d675c203443604abe4d1849

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8d802e2c9f8c centos "/bin/bash -c 'while…" 5 seconds ago Up 3 seconds objective_lumiere

-t prints timestamps; -f follows the log output

[root@localhost ~]# docker logs -tf --tail 10 8d802e2c9f8c

2023-12-10T12:22:11.180425555Z 111

2023-12-10T12:22:12.190936232Z 111

2023-12-10T12:22:13.199118756Z 111

2023-12-10T12:22:14.215693476Z 111

2023-12-10T12:22:15.223953131Z 111

2023-12-10T12:22:16.235145389Z 111

2023-12-10T12:22:17.239058891Z 111

Show the processes running in a container: docker top CONTAINER (name or ID)

[root@localhost ~]# docker top 8d802e2c9f8c

UID PID PPID C STIME TTY TIME CMD

root 40812 40790 0 20:21 ? 00:00:00 /bin/bash -c while true;do echo 111;sleep 1;done

root 42114 40812 0 20:32 ? 00:00:00 /usr/bin/coreutils --coreutils-prog-shebang=sleep /usr/bin/sleep 1

Show detailed information about a container: docker inspect CONTAINER (name or ID). docker inspect IMAGE (name or ID) shows image details the same way.

[root@localhost ~]# docker inspect 8d802e2c9f8c

[

{

"Id": "8d802e2c9f8c34a52437fb15feb8c2ff77fca1413d675c203443604abe4d1849",

"Created": "2023-12-10T12:21:31.68845678Z",

"Path": "/bin/bash",

...

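Entering a running container

exec opens a new shell in the container as a new process; exiting that shell does not stop the container

attach connects to the terminal of the container's startup command without starting a new process; exiting there stops the container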

docker exec -it 容器id /bin/bash

[root@localhost ~]# docker run -tid centos

9f201440b1dc880d12678b17a35630089f1a6fda750b7e6b4c38a7fac7ba3ec6

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9f201440b1dc centos "/bin/bash" 4 seconds ago Up 2 seconds naughty_brattain

[root@localhost ~]# docker exec -ti 9f /bin/bash

[root@9f201440b1dc /]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 12:41 pts/0 00:00:00 /bin/bash

root 30 0 1 12:43 pts/1 00:00:00 /bin/bash

root 44 30 0 12:43 pts/1 00:00:00 ps -ef

[root@localhost ~]# docker attach 9f

[root@9f201440b1dc /]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 12:41 pts/0 00:00:00 /bin/bash

root 45 1 0 12:44 pts/0 00:00:00 ps -ef

Copy a file from the container to the host: docker cp CONTAINER_ID:PATH_IN_CONTAINER HOST_DIR

[root@localhost ~]# docker cp 9f201440b1dc:/etc/passwd /root/

Successfully copied 2.56kB to /root/

[root@localhost ~]# ls

anaconda-ks.cfg initial-setup-ks.cfg passwd

Installing nginx with Docker

# Search for the image

docker search nginx

# Pull the image

docker pull nginx

# Start a container from the image

docker run nginx

# --name names the container

# -p 3500:80 maps ports, host port:container port

[root@localhost ~]# docker run -d --name nginx1 -p 3500:80 nginx

4da723921fe9d716e5d3623031f51da71c980cbfa988937c44b8b0ee2c2d32e1

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4da723921fe9 nginx "/docker-entrypoint.…" 5 seconds ago Up 3 seconds 0.0.0.0:3500->80/tcp, :::3500->80/tcp nginx1

[root@localhost ~]# curl localhost:3500

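Enter the container to take a look: docker exec -it nginx1 /bin/bash

Location of the nginx home page: /usr/share/nginx/html/index.html

Installing tomcat with Docker

The tomcat image pulled through the Alibaba Cloud mirror has no home page, so the request returns 404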

[root@localhost ~]# docker pull tomcat

[root@localhost ~]# docker run -d -p 8080:8080 --name tomcat1 tomcat

[root@localhost ~]# curl 127.0.0.1:8080

Installing Elasticsearch (es) with Docker

[root@localhost ~]# docker pull elasticsearch:7.6.2

Multiple -p options publish several ports at once; -e sets an environment variable, and multiple -e options set several

[root@localhost ~]# docker run -d --name elasticsearch1 -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" elasticsearch:7.6.2

[root@localhost ~]# curl localhost:9200

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

caf07ba7236f elasticsearch:7.6.2 "/usr/local/bin/dock…" 30 minutes ago Up 30 minutes 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp elasticsearch1

dc5ba16eaa57 tomcat "catalina.sh run" 38 minutes ago Up 38 minutes 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp tomcat1

4da723921fe9 nginx "/docker-entrypoint.…" 51 minutes ago Up 51 minutes 0.0.0.0:3500->80/tcp, :::3500->80/tcp nginx1

docker mysql

docker redis

docker rabbitmq

docker kafka

5. Docker GUI tool: pull the portainer/portainer image

[root@localhost ~]# docker run -d -p 8088:9000 \

> --restart=always -v /var/run/docker.sock:/var/run/docker.sock --privileged=true portainer/portainer

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

261ca4ca611c portainer/portainer "/portainer" 50 seconds ago Up 50 seconds 0.0.0.0:8088->9000/tcp, :::8088->9000/tcp infallible_rosalind

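3. Docker, stage two

1. Docker images in detail

An image is a lightweight, executable, standalone software package that bundles a runtime environment together with the software built on it. It contains everything needed to run a piece of software: code, runtime, libraries, environment variables, and configuration files. In short, an image is an environment or a project.

Layered downloads: every Docker image starts from a base image layer; whenever content is modified or added, a new layer is created on top of the current one.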

[root@localhost ~]# docker pull mysql

Using default tag: latest

latest: Pulling from library/mysql

72a69066d2fe: Pull complete

93619dbc5b36: Pull complete

99da31dd6142: Pull complete

626033c43d70: Pull complete

37d5d7efb64e: Pull complete

ac563158d721: Pull complete

d2ba16033dad: Pull complete

688ba7d5c01a: Pull complete

00e060b6d11d: Pull complete

1c04857f594f: Pull complete

4d7cfa90e6ea: Pull complete

e0431212d27d: Pull complete

Digest: sha256:e9027fe4d91c0153429607251656806cc784e914937271037f7738bd5b8e7709

Status: Downloaded newer image for mysql:latest

docker.io/library/mysql:latest

[root@localhost ~]# docker inspect mysql   # docker inspect IMAGE (name or ID) shows image details

"RootFS": {

"Type": "layers",

// the layers that were downloaded separately

"Layers": [

"sha256:ad6b69b549193f81b039a1d478bc896f6e460c77c1849a4374ab95f9a3d2cea2",

"sha256:fba7b131c5c350d828ebea6ce6d52cdc751219c6287c4a7f13a51435b35eac06",

"sha256:0798f2528e8383f031ebd3c6d351f7d9f7731b3fd12007e5f2fdcdc4e1efc31a",

"sha256:a0c2a050fee24f87fde784c197a8b3eb66a3881b96ea261165ac1a01807ffb80",

"sha256:d7a777f6c3a4ded4667f61398eb1f9b380db07bf48876f64d93bf30fb1393f96",

"sha256:0d17fee8db40d61d9ca0d85bff8b32ef04bbd09d77e02cc67c454c8f84edb3d8",

"sha256:aad27784b7621a3e58bd03e5d798e505fb80b081a5070d7c822e41606b90a5c0",

"sha256:1d1f48e448f9b8abb9a2aad1e76d4746b69957882d1ddb9c11115302d45fcbbd",

"sha256:c654c2afcbba8c359565df63f6ecee333c9cc6abaeaa39838b05b4465a82758b",

"sha256:118fee5d988ac2057ab66d87bbebd1f18b865fb02a03ba0e23762af5b55b0bd5",

"sha256:fc8a043a3c7556d9abb4fad3aefa3ab6a5e1c02abda5f924f036c696687d094e",

"sha256:d67a9f3f65691979bc9e2b5ee0afcd4549c994f13e1a384ecf3e11f83d82d3f2"

]

}

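For example, if a new image is created from Ubuntu Linux 16.04, that is the first layer of the new image; adding a Python package creates a second layer on top of the base layer; adding a security patch after that creates a third layer.

As layers are added, the image is always the combination of all its current layers. For example, if each layer contains 3 files, an image made of two layers contains 6 files.

Take a three-layer image as an example: seen from outside, the whole image has only 6 files, because file 7 in the top layer is an updated version of file 5.

A file in an upper layer overrides the same file in a lower layer, so an updated version of a file is added to the image as a new layer.

Docker implements the layer stack through a storage driver (newer versions use a snapshot mechanism) and ensures that the layers are presented as a single unified file system. Storage drivers available on Linux include AUFS, Overlay2, Device Mapper, Btrfs, and ZFS. Each is based on a corresponding Linux file system or block device technology and has its own performance characteristics. On Windows, Docker supports only the windowsfilter storage driver, which implements layering and copy-on-write (CoW) on top of NTFS.

All layers are stacked and merged to present one unified view.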
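The "upper layer overrides lower layer" rule can be imitated with plain directories. This is only a sketch of the idea: it copies files in layer order rather than using a real union mount (which Docker's storage driver does and which needs root), and the directory and file names are made up for the illustration.

```shell
#!/usr/bin/env bash
set -eu

# Three "layers" as ordinary directories in a temporary work area
work="$(mktemp -d)"
mkdir -p "$work/layer1" "$work/layer2" "$work/layer3" "$work/merged"

for n in 1 2 3; do echo "v1" > "$work/layer1/file$n"; done
for n in 4 5 6; do echo "v1" > "$work/layer2/file$n"; done
# The top layer carries an updated copy of file5 (the "file 7" of the text)
echo "v2" > "$work/layer3/file5"

# Apply layers bottom to top; later copies overwrite earlier ones
for layer in layer1 layer2 layer3; do
  cp -R "$work/$layer/." "$work/merged/"
done

merged_count="$(ls "$work/merged" | wc -l | tr -d ' ')"
file5_content="$(cat "$work/merged/file5")"
echo "files in merged view: $merged_count"
echo "file5 content: $file5_content"
```

The merged directory ends up with six files, and `file5` holds the content from the top layer, which matches the six-file view described above.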

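2. How Docker images are loaded

A Docker image is actually made of file systems stacked layer upon layer; this layered file system is UnionFS.

UnionFS (union file system): a layered, lightweight, high-performance file system. It supports stacking changes to the file system layer by layer, each as a commit, and can mount different directories under one virtual file system (unite several directories into a single virtual filesystem). The union file system is the foundation of Docker images. Images can inherit from one another through layering, so all kinds of application images can be built on top of a base image.

Property: several file systems are loaded at once, but from outside only one file system is visible. Union mounting overlays the layers, so the final file system contains all the files and directories of the underlying layers.

bootfs (boot file system) mainly contains the bootloader and the kernel; the bootloader's job is to load the kernel. Linux loads bootfs when it starts, and bootfs is the lowest layer of a Docker image. This layer is the same as in a regular Linux/Unix system and contains the boot loader and the kernel. Once booting completes, the whole kernel is in memory, control of memory passes from bootfs to the kernel, and the system unmounts bootfs.

rootfs (root file system) sits on top of bootfs. It contains the standard directories and files of a typical Linux system, such as /dev, /proc, /bin, and /etc. The rootfs is what differs between operating system distributions such as Ubuntu and CentOS.

A CentOS installed in a virtual machine takes several GB, while the CentOS image in Docker is only about 200 MB. For a slimmed-down OS the rootfs can be very small: it needs only the most basic commands, tools, and libraries, because it uses the host's kernel directly and only has to supply the rootfs. So across Linux distributions the bootfs is essentially the same while the rootfs differs, which is why different distributions can share a bootfs.

3. Packaging a container into an image: docker commit os1 centos:txt

docker commit commits a copy of the container, producing a new image from it.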

1. The tomcat image pulled through the Alibaba Cloud mirror has no home page and returns 404

[root@localhost ~]# docker pull tomcat

[root@localhost ~]# docker run -d -p 8080:8080 --name tomcat1 tomcat

[root@localhost ~]# curl 127.0.0.1:8080

2. Modify the tomcat container so that it has a home page

[root@localhost ~]# docker exec -ti c935326879e8 /bin/bash

root@c935326879e8:/usr/local/tomcat# ls

BUILDING.txt CONTRIBUTING.md LICENSE NOTICE README.md RELEASE-NOTES RUNNING.txt bin conf lib logs native-jni-lib temp webapps webapps.dist work

root@c935326879e8:/usr/local/tomcat# ls webapps

root@c935326879e8:/usr/local/tomcat# cp -r webapps.dist/* webapps

root@c935326879e8:/usr/local/tomcat# ls webapps

ROOT docs examples host-manager manager

root@c935326879e8:/usr/local/tomcat# exit

exit

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c935326879e8 tomcat "catalina.sh run" 3 minutes ago Up 3 minutes 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp tomcat1

[root@localhost ~]# curl localhost:8080

3. Commit it: docker commit tomcat1 tomcat:index

[root@localhost ~]# docker commit tomcat1 tomcat:index

sha256:1a5af59db7820a0007b80c52af356699bce2627f71d73c47e3000ca424a023ef

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat index 1a5af59db782 5 seconds ago 684MB

nginx latest 605c77e624dd 23 months ago 141MB

tomcat latest fb5657adc892 23 months ago 680MB

Layers of the image after the change was committed (11 layers):

[root@localhost ~]# docker inspect tomcat:index   # docker inspect IMAGE (name or ID) shows image details

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:11936051f93baf5a4fb090a8fa0999309b8173556f7826598e235e8a82127bce",

"sha256:31892cc314cb1993ba1b8eb5f3002c4e9f099a9237af0d03d1893c6fcc559aab",

"sha256:8bf42db0de72f74f4ef0c1d1743f5d54efc3491ee38f4af6d914a6032148b78e",

"sha256:26a504e63be4c63395f216d70b1b8af52263a5289908df8e96a0e7c840813adc",

"sha256:f9e18e59a5651609a1503ac17dcfc05856b5bea21e41595828471f02ad56a225",

"sha256:832e177bb5008934e2f5ed723247c04e1dd220d59a90ce32000b7c22bd9d9b54",

"sha256:3bb5258f46d2a511ddca2a4ec8f9091d676a116830a7f336815f02c4b34dbb23",

"sha256:59c516e5b6fafa2e6b63d76492702371ca008ade6e37d931089fe368385041a0",

"sha256:bd2befca2f7ef51f03b757caab549cc040a36143f3b7e3dab94fb308322f2953",

"sha256:3e2ed6847c7a081bd90ab8805efcb39a2933a807627eb7a4016728f881430f5f",

"sha256:a02ce5cb130a594f285de5a731affd2fb8cc19a725d15dc95723fb726a55a6c5"

]

},

Layers of the original image (10 layers):

[root@localhost ~]# docker inspect tomcat:latest

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:11936051f93baf5a4fb090a8fa0999309b8173556f7826598e235e8a82127bce",

"sha256:31892cc314cb1993ba1b8eb5f3002c4e9f099a9237af0d03d1893c6fcc559aab",

"sha256:8bf42db0de72f74f4ef0c1d1743f5d54efc3491ee38f4af6d914a6032148b78e",

"sha256:26a504e63be4c63395f216d70b1b8af52263a5289908df8e96a0e7c840813adc",

"sha256:f9e18e59a5651609a1503ac17dcfc05856b5bea21e41595828471f02ad56a225",

"sha256:832e177bb5008934e2f5ed723247c04e1dd220d59a90ce32000b7c22bd9d9b54",

"sha256:3bb5258f46d2a511ddca2a4ec8f9091d676a116830a7f336815f02c4b34dbb23",

"sha256:59c516e5b6fafa2e6b63d76492702371ca008ade6e37d931089fe368385041a0",

"sha256:bd2befca2f7ef51f03b757caab549cc040a36143f3b7e3dab94fb308322f2953",

"sha256:3e2ed6847c7a081bd90ab8805efcb39a2933a807627eb7a4016728f881430f5f"

]

},

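4. Container data volumes: persistence for containers

Packaging an application and its runtime into a container lets the runtime travel with the container, but data is expected to persist. If the data a container produces isn't saved into a new image with docker commit, it disappears when the container is deleted, which isn't acceptable. With volumes, data inside the container is mounted onto the host so it persists and isn't lost when the container is deleted.

Using a data volume: docker run -v HOST_DIR:CONTAINER_DIR. After the container is deleted the data still exists; a new container that mounts the same directory can use the data again.

# The directory is created on the host automatically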

docker run -it -v /home/ceshi:/root centos

[root@localhost ~]# docker run -it -v /home/ceshi:/root centos

bash-4.4# cd root

bash-4.4# touch 1.txt

Check on the host:

[root@localhost ~]# ls /home/ceshi

1.txt

[root@localhost ~]# docker inspect bfb9c7301d2a   # view container details

"Mounts": [

{

"Type": "bind",

"Source": "/home/ceshi",

"Destination": "/root",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

Installing MySQL

1. Test: create a database and add some data

2. Delete the container

3. Start a new container; the data is still there.

docker run -d -p 3310:3306 \

-v /home/mysql/conf:/etc/mysql/conf.d \

-v /home/mysql/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql01 \

mysql:5.7

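Mounting a data volume from a Dockerfile. A Dockerfile is the build file used to build a Docker image: a script made up of a series of instructions and arguments.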

[root@localhost ~]# cat dockerfile1

FROM centos

VOLUME /data

CMD /bin/bash

docker build builds an image: -f selects the build file, -t sets the output image name:tag, and the trailing . is the current path (the build context)

[root@localhost ~]# docker build -f dockerfile1 -t centos:volume .

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos volume 298171eb7062 2 years ago 231MB

centos latest 5d0da3dc9764 2 years ago 231MB

[root@localhost ~]# docker run -ti centos:volume

[root@0131b1dfef75 /]# cd /data

[root@0131b1dfef75 data]# touch 2.txt

[root@0131b1dfef75 data]# ls

2.txt

[root@localhost ~]# find / -name 2.txt

/var/lib/docker/volumes/aa2d4697123d98529a31e63e1c9be99299ed701950060a9994c95438bb29808c/_data/2.txt

[root@localhost ~]# docker inspect 0131b1dfef75   # view container details

"Mounts": [

{

"Type": "volume",

"Name": "aa2d4697123d98529a31e63e1c9be99299ed701950060a9994c95438bb29808c",

"Source": "/var/lib/docker/volumes/aa2d4697123d98529a31e63e1c9be99299ed701950060a9994c95438bb29808c/_data",

"Destination": "/data",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

}

],

Anonymous and named mounts

# Mount a specific host directory

-v HOST_DIR:CONTAINER_DIR

# Anonymous mount

-v CONTAINER_DIR

docker run -d -P --name nginx01 -v /etc/nginx nginx

# Named mount

-v VOLUME_NAME:/CONTAINER_DIR

docker run -d -P --name nginx02 -v nginxconfig:/etc/nginx nginx
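The three -v forms differ only in what comes before the colon. As a rough sketch, they can be told apart with a small helper. The function name `classify_volume_arg` is hypothetical, and the rule is simplified: it ignores trailing options such as `:ro` and relative host paths.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify the argument of -v by its shape.
classify_volume_arg() {
  local spec="$1"
  case "$spec" in
    /*:*) echo "bind" ;;       # absolute host path : container path
    *:*)  echo "named" ;;      # volume name : container path
    *)    echo "anonymous" ;;  # container path only
  esac
}

classify_volume_arg "/home/ceshi:/root"        # bind mount of a host directory
classify_volume_arg "nginxconfig:/etc/nginx"   # named volume
classify_volume_arg "/etc/nginx"               # anonymous volume
```

Named and anonymous volumes are the ones that show up in docker volume ls; a bind mount of a host directory does not.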

# Anonymous and named volumes are usually managed with the docker volume command

[root@localhost ~]# docker volume ls

DRIVER VOLUME NAME

local aa2d4697123d98529a31e63e1c9be99299ed701950060a9994c95438bb29808c

local b93067a3cdca7ee27f466226b6c81f312b5733af413df65345d3bdc206f9d252

local nginxconfig

[root@localhost ~]# docker volume inspect nginxconfig

[

{

"CreatedAt": "2023-12-11T16:29:55+08:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/nginxconfig/_data",

"Name": "nginxconfig",

"Options": null,

"Scope": "local"

}

]

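Container data volumes: persistence for containers

Data volume container: a container used as a persistent volume.

With a data volume container, data can be shared between containers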

[root@localhost ~]# docker run -it --name docker01 centos:volume

[root@2ee3275d6e03 /]# cd /data

[root@2ee3275d6e03 data]# touch 666.txt

[root@localhost ~]# docker run -it --name docker02 --volumes-from docker01 centos:volume

[root@876f12d7f5ee /]# ls /data

666.txt

[root@876f12d7f5ee /]# touch /data/555.txt

[root@876f12d7f5ee /]# ls /data

555.txt 666.txt

[root@localhost ~]# docker run -it --name docker03 --volumes-from docker01 centos:volume

[root@e8b6070febda /]# ls /data

555.txt 666.txt

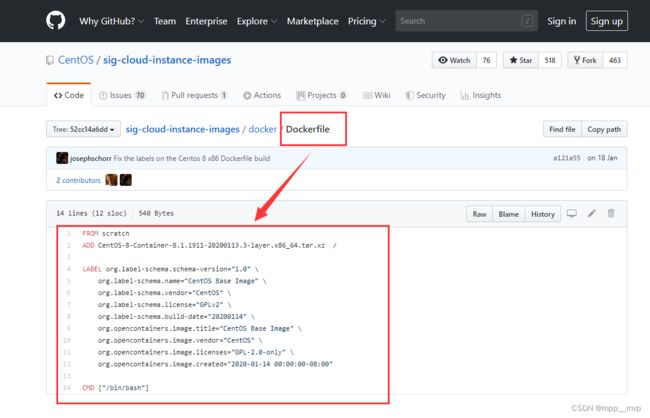

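5. The Dockerfile

Package a microservice as an image, and it can be downloaded and used anywhere Docker is installed, which is very convenient.

Workflow: develop the application => Dockerfile => build the image => push to a registry (a private, company-internal registry such as Alibaba Cloud, or the public registry Docker Hub) => pull the image => run it.

A Dockerfile is the build file used to build a Docker image: a script made up of a series of instructions and arguments.

Build steps:

1. Write the Dockerfile

2. Build the image with docker build

3. Run a container with docker run

The official centos image served by Alibaba Cloud comes from the Dockerfile published on Docker Hub: https://hub.docker.com/_/centos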

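The Dockerfile build process

Basics:

1. Instruction keywords are written in uppercase by convention (they are not case-sensitive), and each must be followed by at least one argument

2. Instructions run in order, from top to bottom

3. # marks a comment

4. Each instruction creates a new image layer and commits the image

Process:

1. Docker runs a container from the base image

2. It executes one instruction and modifies the container

3. It performs an operation similar to docker commit to commit a new image layer

4. Docker runs a new container based on the image just committed

5. It executes the next instruction in the Dockerfile, until all instructions are done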

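Dockerfile: defines everything the process needs, including the code or files to execute, environment variables, dependencies, the runtime environment, dynamic libraries, the operating system distribution, service processes, kernel processes, and so on.

Docker image: once the Dockerfile is written, docker build produces a Docker image; the service actually starts when the image is run as a Docker container.

Docker container: the container is what directly provides the service.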

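Dockerfile instruction keywords:

FROM # base image: the image the new image is based on

MAINTAINER # name and email address of the image maintainer (deprecated in favor of LABEL)

RUN # command to run while the image is being built

EXPOSE # port the container exposes

WORKDIR # working directory that a terminal lands in by default after the container is created

ENV # sets environment variables during the image build

ADD # copies files from the host (build context) into the image; ADD also handles URLs and unpacks tar archives automatically

COPY # like ADD: copies files and directories into the image

VOLUME # container data volume, for storing and persisting data

CMD # command to run when the container starts; a Dockerfile may contain several CMD instructions, but only the last one takes effect

ENTRYPOINT # command to run when the container starts, like CMD, except that arguments given to docker run are appended to it instead of replacing it

ONBUILD # command that runs when the image is used as a base: the parent image's ONBUILD is triggered when a child image is built from it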

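99% of the images on Docker Hub are built by installing and configuring the required software on top of a base image (scratch). The scratch image is small and fast, and it has no bugs, security vulnerabilities, sluggish code, or technical debt; apart from the metadata Docker adds, it is essentially empty. When building an image with a Dockerfile, one approach is to start from an official pre-configured image. The advantage is that you don't build from nothing, which saves a lot of work; the cost is a large image to download. To build an image that meets real business needs while keeping it as small as possible, you can start from the empty scratch image, which truly is building your own image from zero: the first layer of the image.

Based on the official centos image from Alibaba Cloud (which is incomplete and lacks many commands), publish your own centos: a custom image that adds vim and ifconfig

docker build builds an image: -f selects the build file, -t sets the output image name:tag, and the trailing . is the current path (the build context)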

[root@localhost ~]# docker build -f dockerfile1 -t centos:volume .

[root@localhost ~]# cat dockerfile

FROM centos

MAINTAINER mpp

ENV MYPATH /usr/local

WORKDIR $MYPATH

# Install vim and net-tools on top of the base centos

RUN yum -y install vim

RUN yum -y install net-tools

EXPOSE 80

CMD echo $MYPATH

CMD /bin/bash

[root@localhost ~]# docker build -f dockerfile -t mycentos:1.0 .

Fix for the yum install vim error: https://developer.aliyun.com/article/1165954

> [3/4] RUN yum -y install vim:

7.597 CentOS Linux 8 - AppStream 82 B/s | 38 B 00:00

7.666 Error: Failed to download metadata for repo 'appstream': Cannot prepare internal mirrorlist: No URLs in mirrorlist

[root@localhost ~]# cat dockerfile

FROM centos

MAINTAINER mpp

ENV MYPATH /usr/local

WORKDIR $MYPATH

# Update the package repositories

RUN cd /etc/yum.repos.d/

RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

RUN sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

RUN yum clean all

RUN yum makecache

RUN yum update -y

# Install vim and net-tools on top of the base centos

RUN yum -y install vim

RUN yum -y install net-tools

EXPOSE 80

CMD echo $MYPATH

CMD /bin/bash

[root@localhost ~]# docker build -f dockerfile -t mycentos:1.0 .

[+] Building 230.0s (14/14) FINISHED docker:default

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from dockerfile 0.0s

=> => transferring dockerfile: 515B 0.0s

=> [internal] load metadata for docker.io/library/centos:latest 0.0s

=> [ 1/10] FROM docker.io/library/centos 0.0s

=> CACHED [ 2/10] WORKDIR /usr/local 0.0s

=> CACHED [ 3/10] RUN cd /etc/yum.repos.d/ 0.0s

=> CACHED [ 4/10] RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-* 0.0s

=> CACHED [ 5/10] RUN sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-* 0.0s

=> [ 6/10] RUN yum clean all 0.9s

=> [ 7/10] RUN yum makecache 72.7s

=> [ 8/10] RUN yum update -y 128.8s

=> [ 9/10] RUN yum -y install vim 19.8s

=> [10/10] RUN yum -y install net-tools 5.0s

=> exporting to image 2.8s

=> => exporting layers 2.8s

=> => writing image sha256:6aa0737e2d517a89bc6ad172f98f6e4918c5778dbe8c061772ebb8ef3f250a87 0.0s

=> => naming to docker.io/library/mycentos:1.0 0.0s

docker history IMAGE (name or ID) shows the change history of an image.

[root@localhost ~]# docker history mycentos:1.0

IMAGE CREATED CREATED BY SIZE COMMENT

6aa0737e2d51 23 minutes ago CMD ["/bin/sh" "-c" "/bin/bash"] 0B buildkit.dockerfile.v0

<missing> 23 minutes ago CMD ["/bin/sh" "-c" "echo $MYPATH"] 0B buildkit.dockerfile.v0

<missing> 23 minutes ago EXPOSE map[80/tcp:{}] 0B buildkit.dockerfile.v0

<missing> 23 minutes ago RUN /bin/sh -c yum -y install net-tools # bu… 28.6MB buildkit.dockerfile.v0

<missing> 23 minutes ago RUN /bin/sh -c yum -y install vim # buildkit 67.1MB buildkit.dockerfile.v0

<missing> 23 minutes ago RUN /bin/sh -c yum update -y # buildkit 276MB buildkit.dockerfile.v0

<missing> 25 minutes ago RUN /bin/sh -c yum makecache # buildkit 27.2MB buildkit.dockerfile.v0

<missing> 27 minutes ago RUN /bin/sh -c yum clean all # buildkit 460kB buildkit.dockerfile.v0

<missing> 32 minutes ago RUN /bin/sh -c sed -i 's|#baseurl=http://mir… 8.8kB buildkit.dockerfile.v0

<missing> 32 minutes ago RUN /bin/sh -c sed -i 's/mirrorlist/#mirrorl… 8.82kB buildkit.dockerfile.v0

<missing> 32 minutes ago RUN /bin/sh -c cd /etc/yum.repos.d/ # buildk… 0B buildkit.dockerfile.v0

<missing> 39 minutes ago WORKDIR /usr/local 0B buildkit.dockerfile.v0

<missing> 39 minutes ago ENV MYPATH=/usr/local 0B buildkit.dockerfile.v0

<missing> 39 minutes ago MAINTAINER mpp 0B buildkit.dockerfile.v0

<missing> 2 years ago /bin/sh -c #(nop) CMD ["/bin/bash"] 0B

<missing> 2 years ago /bin/sh -c #(nop) LABEL org.label-schema.sc… 0B

<missing> 2 years ago /bin/sh -c #(nop) ADD file:805cb5e15fb6e0bb0… 231MB

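CMD and ENTRYPOINT both specify the command to run when a container starts; "takes effect" here refers to the arguments in effect once the container is running.

CMD: a Dockerfile may contain several CMD instructions, but only the last one takes effect, and CMD is replaced by the arguments given after the image name in docker run.

ENTRYPOINT: the arguments given after the image name in docker run are appended to the ENTRYPOINT instruction, forming a new combined command.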

FROM centos

CMD ["ls","-a"]

# docker run cmd ls -lh

FROM centos

ENTRYPOINT ["ls","-a"]

# docker run entrypoint -l
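The difference between the two examples above comes down to how the final command line is assembled. The sketch below models that rule for the exec form only; `effective_command` is a hypothetical helper written for this note, not a Docker command.

```shell
#!/usr/bin/env bash
# Hypothetical model of how the startup command is assembled (exec form).
# Usage: effective_command ENTRYPOINT CMD [args given after the image name...]
effective_command() {
  local entrypoint="$1" cmd="$2"
  shift 2
  local args="$*"
  # Arguments after the image name replace CMD entirely
  if [ -n "$args" ]; then cmd="$args"; fi
  # ENTRYPOINT, when set, is kept and CMD (or the arguments) is appended
  echo "$entrypoint $cmd" | sed 's/^ *//; s/ *$//'
}

effective_command "" "ls -a" ls -lh   # CMD image, run with "ls -lh"
effective_command "ls -a" "" -l       # ENTRYPOINT image, run with "-l"
effective_command "" "ls -a"          # CMD image, no extra arguments
```

Under this model the first call yields `ls -lh` (CMD replaced), the second `ls -a -l` (argument appended to ENTRYPOINT), and the third `ls -a` (CMD used as is).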

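Write a Dockerfile in the project so that builds can be automated with Docker.

1. Start from an empty image

2. Add the required environment: ADD

3. Configure environment variables: ENV

4. Run some Linux commands: RUN

5. Logging: CMD

6. Expose ports: EXPOSE

7. Mount data volumes: VOLUME

A custom Tomcat. A project normally lives in one folder, so it's enough to write a Dockerfile in the project directory. docker build then doesn't need -f to name the file; by default it looks for the Dockerfile in the project directory: docker build -t tomcat:1.0 .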

[root@localhost mpp]# pwd

/root/mpp

[root@localhost mpp]# ll

total 165976

-rw-r--r-- 1 root root 10929702 Dec 12 14:03 apache-tomcat-9.0.22.tar.gz

-rw-r--r-- 1 root root 1054 Dec 12 14:11 dockerfile

-rw-r--r-- 1 root root 159019376 Dec 12 14:03 jdk-8u11-linux-x64.tar.gz

-rw-r--r-- 1 root root 0 Dec 12 14:11 readme.txt

[root@localhost mpp]# cat dockerfile

FROM centos

MAINTAINER mpp

# Copy a file from the host (build context) into the image

COPY readme.txt /usr/local/readmeme.txt

# Add the installation archives

ADD jdk-8u11-linux-x64.tar.gz /usr/local

ADD apache-tomcat-9.0.22.tar.gz /usr/local

# Set the working directory

ENV MYPATH /usr/local

WORKDIR $MYPATH

# Update the package repositories

RUN cd /etc/yum.repos.d/

RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

RUN sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

RUN yum clean all

RUN yum makecache

RUN yum update -y

# Install vim

RUN yum -y install vim

# Set environment variables

ENV JAVA_HOME /usr/local/jdk1.8.0_11

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

ENV CATALINA_HOME /usr/local/apache-tomcat-9.0.22

ENV CATALINA_BASE /usr/local/apache-tomcat-9.0.22

ENV PATH $PATH:$JAVA_HOME/bin:$CATALINA_HOME/lib:$CATALINA_HOME/bin

# Expose the port

EXPOSE 8080

# Start tomcat automatically when the container starts

CMD /usr/local/apache-tomcat-9.0.22/bin/startup.sh && tail -f /usr/local/apache-tomcat-9.0.22/logs/catalina.out

[root@localhost mpp]# docker build -t tomcat:1.0 .

[+] Building 494.7s (17/17) FINISHED docker:default

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from dockerfile 0.0s

=> => transferring dockerfile: 1.09kB 0.0s

=> [internal] load metadata for docker.io/library/centos:latest 0.0s

=> CACHED [ 1/12] FROM docker.io/library/centos 0.0s

=> [internal] load build context 3.5s

=> => transferring context: 169.99MB 3.5s

=> [ 2/12] COPY readme.txt /usr/local/readmeme.txt 0.0s

=> [ 3/12] ADD jdk-8u11-linux-x64.tar.gz /usr/local 9.3s

=> [ 4/12] ADD apache-tomcat-9.0.22.tar.gz /usr/local 0.8s

=> [ 5/12] WORKDIR /usr/local 0.0s

=> [ 6/12] RUN cd /etc/yum.repos.d/ 0.7s

=> [ 7/12] RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-* 0.7s

=> [ 8/12] RUN sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-* 0.3s

=> [ 9/12] RUN yum clean all 0.8s

=> [10/12] RUN yum makecache 134.8s

=> [11/12] RUN yum update -y 314.7s

=> [12/12] RUN yum -y install vim 25.9s

=> exporting to image 3.1s

=> => exporting layers 3.1s

=> => writing image sha256:4c90dc20dbeb111b5a02761d393b41e3ff124ba044928cb7b3ca79d1a154fa86 0.0s

=> => naming to docker.io/library/tomcat:1.0 0.0s

--privileged=true grants the container the permissions it needs

docker run -d -p 9090:8080 --name mpptomcat \

-v /root/mytomcat/webapps/test:/usr/local/apache-tomcat-9.0.22/webapps/test \

-v /root/mytomcat/logs:/usr/local/apache-tomcat-9.0.22/logs \

--privileged=true \

tomcat:1.0

6. Publishing images

Public registry: Docker Hub. Sign up at https://hub.docker.com/signup; an account is required.

docker login logs in to Docker Hub by default

For help: # docker login --help

# docker login -u mpp

# An image must be tagged before it can be pushed

# docker tag ebd82d75f8c0 mpp/tomcat:1.0

# Push to Docker Hub

# docker push mpp/tomcat:1.0

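Inside a company, a private registry is usually set up, for example on Alibaba Cloud

Follow Alibaba Cloud's instructions to log in to its registry and push or pull your own images.

mpp123456 is the namespace, mpp is the repository name, and 5.7 is the image tag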

# docker login --username=**** registry.cn-hangzhou.aliyuncs.com

# docker tag c20987f18b13 registry.cn-hangzhou.aliyuncs.com/mpp123456/mpp:5.7

# docker push registry.cn-hangzhou.aliyuncs.com/mpp123456/mpp:5.7

# docker pull registry.cn-hangzhou.aliyuncs.com/mpp123456/mpp:5.7

4. Advanced Docker 1: Docker networking and Docker Compose

1. Docker networking in detail

[root@localhost ~]# ip addr

# Local loopback interface

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

# Network interface card

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:77:d6:b9 brd ff:ff:ff:ff:ff:ff

inet 10.1.1.128/24 brd 10.1.1.255 scope global noprefixroute dynamic eth0

valid_lft 1291sec preferred_lft 1291sec

inet6 fe80::6759:e3f6:8ca:99a9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:b5:aa:ea brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:b5:aa:ea brd ff:ff:ff:ff:ff:ff

# docker0, the (default) network created by Docker

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:c6:18:33:b9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

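Every time Docker starts a container it assigns the container an IP address managed by docker0, the network Docker provides by default. Containers created without specifying a network all land on docker0; in real development you will want to define custom networks.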

[root@localhost ~]# docker run -itd --name web01 centos

[root@localhost ~]# docker exec -it web01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# The host, outside the container, can ping into the container

[root@localhost ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.106 ms

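Every time a container starts it is assigned a network address, and the Docker network interface outside the container is paired with the one inside.

Start another container and look again: docker run -itd --name web02 centos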

[root@localhost ~]# docker exec -it web01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@localhost ~]# docker exec -it web02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@localhost ~]# ip addr

...

5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c6:18:33:b9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c6ff:fe18:33b9/64 scope link

valid_lft forever preferred_lft forever

7: vethea42c38@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ee:d9:b2:13:06:4f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecd9:b2ff:fe13:64f/64 scope link

valid_lft forever preferred_lft forever

9: veth765b60b@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 16:57:e8:c0:54:a1 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::1457:e8ff:fec0:54a1/64 scope link

valid_lft forever preferred_lft forever

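Summary:

1. Container web01 -- Linux host: 6: eth0@if7: 7: vethea42c38@if6:

2. Container web02 -- Linux host: 8: eth0@if9: 9: veth765b60b@if8:

Whenever a container starts, a pair of (virtual) network interfaces is allocated by default, connecting the inside and the outside of the container.

A veth pair is a pair of virtual device interfaces that always come together: one end of each attaches to a network stack, and the two ends are connected to each other.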
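The pairing can be read straight from the ip addr output: each interface line starts with its own index, and the number after @if is the index of its peer. A small sketch that checks whether two such lines describe the two ends of one veth pair (both function names are hypothetical, written for this note):

```shell
#!/usr/bin/env bash
# Print "OWN PEER" for an `ip addr` interface line such as "6: eth0@if7: <...>"
iface_indexes() {
  echo "$1" | sed -n 's/^\([0-9][0-9]*\): [^@]*@if\([0-9][0-9]*\):.*/\1 \2/p'
}

# Succeeds when the two lines are the two ends of the same veth pair
is_veth_pair() {
  local a b
  a="$(iface_indexes "$1")"
  b="$(iface_indexes "$2")"
  # A pair means: a's own index is b's peer index, and the other way round
  [ -n "$a" ] && [ -n "$b" ] && [ "${a% *}" = "${b#* }" ] && [ "${a#* }" = "${b% *}" ]
}

container_side="6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500"
host_side="7: vethea42c38@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500"

if is_veth_pair "$container_side" "$host_side"; then
  echo "web01's eth0 and vethea42c38 form a veth pair"
fi
```

The sample lines are the web01 interfaces from the output above, shortened after the mtu field.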

Test access between containers: access by IP works fine.

[root@localhost ~]# docker exec -it web02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.220 ms

[root@localhost ~]# docker exec -it web01 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.098 ms

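Docker uses a Linux bridge: it creates a virtual bridge for containers (docker0) on the host. When Docker starts a container, it assigns the container an IP address from the bridge's subnet, called the Container-IP, and the bridge acts as the default gateway of every container. Because all containers on the same host attach to the same bridge, they can communicate directly through their Container-IPs.

Docker container networking makes good use of Linux virtual networking: it creates one virtual interface on the host and one inside the container and connects the two (such a pair of virtual interfaces is called a veth pair). Network interfaces in Docker are virtual by default. The advantage of a virtual interface is forwarding efficiency: Linux forwards data between virtual interfaces by copying it inside the kernel, without going through an external network device. To the host and to the container, a virtual interface looks no different from an ordinary Ethernet card, except that forwarding between the two ends is typically faster.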

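What if a container is deleted and started again and its IP changes? Use --link to reach it by container name. Without a link, access by name fails: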

[root@localhost ~]# docker exec -it web01 ping web02

ping: web02: Name or service not known

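Passing --link when starting a container links it to another container, which can then be reached by container name. (Docker's documentation marks --link as a legacy feature; the user-defined networks covered later in this section are the preferred approach.)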

[root@localhost ~]# docker run -itd --name web03 --link web02 centos

36c67766973143c0f98e794c20a4f5c8291bc3c18ec39eeb8ffaac3af76b060a

[root@localhost ~]# docker exec -ti web03 ping web02

PING web02 (172.17.0.3) 56(84) bytes of data.

64 bytes from web02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.166 ms

How it works: a record, 172.17.0.3 web02, was added to web03's name-resolution file /etc/hosts.

[root@localhost ~]# docker exec -it web03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 web02 2ae6fda57da1

172.17.0.4 36c677669731
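To make the lookup concrete, here is a minimal sketch of how a name is matched against hosts-format text: the first field of a line is the IP and every following field is a name for it. `lookup_host` is a hypothetical helper written for this note, not a Docker command, and it only imitates the /etc/hosts step of name resolution.

```shell
# lookup_host NAME (hypothetical helper): read hosts-format text on stdin and
# print the IP of the first line that lists NAME as one of its hostnames.
lookup_host() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }                      # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  '
}

# Records copied from web03's /etc/hosts in the transcript.
printf '%s\n' \
  '127.0.0.1 localhost' \
  '172.17.0.3 web02 2ae6fda57da1' \
  '172.17.0.4 36c677669731' | lookup_host web02
```

With these records the call prints `172.17.0.3`. Looking up `web03` in the same text prints nothing, which mirrors why a container without a matching record cannot resolve that name.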

But the reverse ping fails:

[root@localhost ~]# docker exec -ti web02 ping web03

ping: web03: Name or service not known

You can add a record, 172.17.0.4 web03, to web02's name-resolution file /etc/hosts:

[root@localhost ~]# docker exec -ti web02 vi /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 2ae6fda57da1

172.17.0.4 web03

web02 can now ping web03:

[root@localhost ~]# docker exec -ti web02 ping web03

PING web03 (172.17.0.4) 56(84) bytes of data.

64 bytes from web03 (172.17.0.4): icmp_seq=1 ttl=64 time=0.085 ms

For web01 to ping web02 by name, add the record 172.17.0.3 web02 to web01's /etc/hosts in the same way:

[root@localhost ~]# docker exec -ti web01 ping web02

ping: web02: Name or service not known

[root@localhost ~]# docker exec -ti web01 vi /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 14be71f5e7b2

172.17.0.3 web02

[root@localhost ~]# docker exec -ti web01 ping web02

PING web02 (172.17.0.3) 56(84) bytes of data.

64 bytes from web02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.104 ms

Custom networks. For help: docker network --help

List all networks:

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

37e502623b8e bridge bridge local

503dcc75c2ce host host local

45540010ac5f none null local

[root@localhost ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "37e502623b8e28632776002ca58f703e6675d29b07663314a86910571f1d4a0f",

"Created": "2023-12-13T09:33:25.194726343+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

// Network config: the subnet range; a /16 holds 256*256-2 = 65534 usable addresses

"Subnet": "172.17.0.0/16",

// Gateway address of docker0

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

// Containers attached to this network and their addresses; Name is the container name

"Containers": {

"14be71f5e7b29d5353b6bc3d05fb8797c50e248e4000bf9423d228dfaa49b397": {

"Name": "web01",

"EndpointID": "bc34e573aba1f6d070328a1ad0e3aebbca036411f41e4c61aebd7a4e709437d7",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"2ae6fda57da1d67d1b2b0082fba52edc119ccc7c6365a7e63c23ebd536f4e0e9": {

"Name": "web02",

"EndpointID": "af21577a2b80fc777348b1add17f7f0e85d333ec3ea3010e764f6a026fafceed",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"36c67766973143c0f98e794c20a4f5c8291bc3c18ec39eeb8ffaac3af76b060a": {

"Name": "web03",

"EndpointID": "5367391c591557d7c78aaef90bebc19b6c576cddb6b150878b2caf35fe1e0160",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]
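The address count of a subnet follows from its prefix length: a /N network has 2^(32-N) addresses, and the network and broadcast addresses are not assignable. A small sketch of that arithmetic, where `usable_hosts` is a hypothetical helper name and the formula is only meaningful for prefixes up to /30:

```shell
# usable_hosts PREFIX (hypothetical helper): usable host addresses in an IPv4
# subnet, i.e. 2^(32-PREFIX) minus the network and broadcast addresses.
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

usable_hosts 16   # docker0's 172.17.0.0/16
usable_hosts 24   # a /24 for comparison
```

This prints 65534 for the /16 and 254 for the /24. Docker additionally reserves one of those addresses for the gateway (172.17.0.1 here).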

--net bridge selects the docker0 network:

docker run -itd --name web04 --net bridge centos

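Characteristics of the docker0 network:

1. It is the default network.

2. Access by name (container name) does not work; it only works after adding --link.

Create a custom network with the create command: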

docker network create \

--driver bridge \

--subnet 192.168.0.0/16 \

--gateway 192.168.0.1 \

mynet

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

37e502623b8e bridge bridge local

503dcc75c2ce host host local

7ceedf04db0c mynet bridge local

45540010ac5f none null local

Networks can later be used to isolate projects: one network per project.

Start two services on the custom mynet network with --net mynet:

[root@localhost ~]# docker run -itd --name web01-net --net mynet centos

[root@localhost ~]# docker run -itd --name web02-net --net mynet centos

docker network inspect mynet shows the details of the custom network mynet:

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "7ceedf04db0c18ddbb8e885fa5c760559063dc64de321e516125a024042eb406",

"Created": "2023-12-13T15:21:28.761349404+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"4d514ced8d81bdea48e2c7cbfabcc462f0e2fc033b8bfde1604c18558b699e32": {

"Name": "web01-net",

"EndpointID": "506208f28cefb1a6eed0d39cf5dbf7e5275d44fcd558edc32de730a3e0a8d31c",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"fb34599475533ad3c25a40ca6ada842c6ab2d0101923fa0fc080d4d6ae12426e": {

"Name": "web02-net",

"EndpointID": "c81ddeae807aced5f717c133c473b961bbaf52bbc7d4a53dc33a55414783f7c8",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

Test pinging in both directions; pinging by name now works:

[root@localhost ~]# docker exec -it web01-net ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.109 ms

[root@localhost ~]# docker exec -it web01-net ping web02-net

PING web02-net (192.168.0.3) 56(84) bytes of data.

64 bytes from web02-net.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.052 ms

[root@localhost ~]# docker exec -it web02-net ping web01-net

PING web01-net (192.168.0.2) 56(84) bytes of data.

64 bytes from web01-net.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.063 ms

In future projects, connect to services directly by container name; however the IP changes, the name keeps working.

docker run -itd --name mysql --net mynet mysql

Connecting networks: containers on different networks cannot reach each other.

[root@localhost ~]# docker exec -it web01 ping web01-net

ping: web01-net: Name or service not known

[root@localhost ~]# docker exec -it web01 ping 192.168.0.2

docker0 and the custom network are not connected to each other; this network isolation is exactly the benefit of custom networks.
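One quick way to see that two containers sit on different networks is to compare the network part of their addresses. The sketch below does that comparison in plain shell arithmetic; `ip_to_int` and `same_subnet` are hypothetical helper names. It only checks addressing: the actual isolation between bridge networks is enforced by Docker on the host, not by this arithmetic.

```shell
# ip_to_int A.B.C.D (hypothetical helper): convert a dotted quad to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet IP_A IP_B PREFIX: succeed if both IPs share the same network part.
same_subnet() {
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

# web01 and web02 are both on docker0; web01-net is on mynet.
same_subnet 172.17.0.2 172.17.0.3 16 && echo "web01 and web02: same subnet"
same_subnet 172.17.0.2 192.168.0.2 16 || echo "web01 and web01-net: different subnets"
```

Both messages are printed for the addresses used in this section.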

For help: # docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

# First argument: the network; second argument: the container

[root@localhost ~]# docker network connect mynet web01

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "7ceedf04db0c18ddbb8e885fa5c760559063dc64de321e516125a024042eb406",

"Created": "2023-12-13T15:21:28.761349404+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

# The web01 container has joined the mynet network

"14be71f5e7b29d5353b6bc3d05fb8797c50e248e4000bf9423d228dfaa49b397": {

"Name": "web01",

"EndpointID": "7a03a65f83656720206bf792b3ec73a8234c636e5d5e1885e83a86cd48635961",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"4d514ced8d81bdea48e2c7cbfabcc462f0e2fc033b8bfde1604c18558b699e32": {

"Name": "web01-net",

"EndpointID": "506208f28cefb1a6eed0d39cf5dbf7e5275d44fcd558edc32de730a3e0a8d31c",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"fb34599475533ad3c25a40ca6ada842c6ab2d0101923fa0fc080d4d6ae12426e": {

"Name": "web02-net",

"EndpointID": "c81ddeae807aced5f717c133c473b961bbaf52bbc7d4a53dc33a55414783f7c8",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

Inspect the docker0 network: web01 is still there

[root@localhost ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "37e502623b8e28632776002ca58f703e6675d29b07663314a86910571f1d4a0f",

"Created": "2023-12-13T09:33:25.194726343+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

# web01 is still present in the docker0 network

"14be71f5e7b29d5353b6bc3d05fb8797c50e248e4000bf9423d228dfaa49b397": {

"Name": "web01",

"EndpointID": "bc34e573aba1f6d070328a1ad0e3aebbca036411f41e4c61aebd7a4e709437d7",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"2ae6fda57da1d67d1b2b0082fba52edc119ccc7c6365a7e63c23ebd536f4e0e9": {

"Name": "web02",

"EndpointID": "af21577a2b80fc777348b1add17f7f0e85d333ec3ea3010e764f6a026fafceed",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"36c67766973143c0f98e794c20a4f5c8291bc3c18ec39eeb8ffaac3af76b060a": {

"Name": "web03",

"EndpointID": "5367391c591557d7c78aaef90bebc19b6c576cddb6b150878b2caf35fe1e0160",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

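After docker network connect, the web01 container has two IPs. A container can have more than one IP.

Conclusion: to work across networks, connect the container with docker network connect [OPTIONS] NETWORK CONTAINER.

# After connecting with docker network connect, the networks can reach each other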

[root@localhost ~]# docker exec -it web01 ping web02-net

PING web02-net (192.168.0.3) 56(84) bytes of data.

64 bytes from web02-net.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.123 ms

[root@localhost ~]# docker exec -it web02-net ping web01

PING web01 (192.168.0.4) 56(84) bytes of data.

64 bytes from web01.mynet (192.168.0.4): icmp_seq=1 ttl=64 time=0.049 ms

2. Docker Compose, official documentation: https://docs.docker.com/compose

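docker run is for single-host services, where containers are started and stopped one at a time: write a Dockerfile, build it into an image with docker build, and docker run turns it into a service.

Compose defines and runs multiple Docker containers. With Compose, one YAML file manages an application's services, and a single command starts all of them. Compose is an official open-source Docker project for quickly orchestrating groups of Docker containers; the source is at https://github.com/docker/compose.

Project: a set of related application containers (multiple containers) that together form one application, defined in docker-compose.yml. Compose v1 was written in Python, while Compose v2 (the docker compose plugin used below) is written in Go; both manage containers by calling the API that the Docker service exposes, so Compose can orchestrate on any platform that supports the Docker API.

Installing Compose: recent Docker releases install Compose by default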

[root@localhost ~]# yum install docker-compose-plugin

[root@localhost ~]# docker compose version

Docker Compose version v2.21.0

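Linux hosts usually also get a Python environment; pip is the Python package manager (only the legacy Compose v1 was distributed through pip)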

[root@localhost ~]# yum install python-pip

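Follow the official getting-started guide: https://docs.docker.com/compose/gettingstarted/

docker run starts one container; docker compose starts a group of container services: container orchestration, managing containers in batches.

1. Create the project directory composetest and write the project files

2. Write a Dockerfile to package the project into a service image

3. Manage and build all services through compose.yaml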

# Multiple services can be defined
services:
  web:
    build: .
    ports:
      - "8000:5000"
    depends_on:
      - redis
  redis:
    image: "redis:alpine"

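4. docker compose up starts all services, loading them step by step as defined in compose.yaml

5. This produces the images composetest-web and redis; docker compose up pulls every image the YAML file needs.

6. Listing services: docker service is a Swarm command, so it fails on a standalone Docker host and only works in a cluster (Compose services are listed with docker compose ps).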

[root@localhost ~]# docker service ls

Error response from daemon: This node is not a swarm manager. Use "docker swarm init" or "docker swarm join" to connect this node to swarm and try again.

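# After docker compose up, two containers were started at once; these are the services defined in compose.yaml

# Container naming pattern: project directory name-service name-index (composetest-redis-1 and composetest-web-1)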

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

292c625ce219 redis:alpine "docker-entrypoint.s…" 6 minutes ago Up 3 minutes 6379/tcp composetest-redis-1

21f4f7a63283 composetest-web "python app.py" 6 minutes ago Up 3 minutes 0.0.0.0:8000->5000/tcp composetest-web-1
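The naming pattern seen in the docker ps output can be written down as a one-line rule. This is only a sketch of the default pattern observed here; `compose_container_name` is a hypothetical helper, and the real name changes if the project name is overridden with -p or the service sets container_name.

```shell
# compose_container_name PROJECT SERVICE [INDEX] (hypothetical helper):
# build the default Compose v2 container name, PROJECT-SERVICE-INDEX.
compose_container_name() {
  printf '%s-%s-%s\n' "$1" "$2" "${3:-1}"
}

compose_container_name composetest web
compose_container_name composetest redis 1
```

This prints `composetest-web-1` and `composetest-redis-1`, matching the NAMES column in the listing.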

Stop the services: # docker compose down

[+] Running 3/2

✔ Container composetest-redis-1 Removed 0.2s

✔ Container composetest-web-1 Removed 0.3s

# Compose automatically creates a network for the services

✔ Network composetest_default Removed

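Run docker compose commands from the project directory. docker network inspect composetest_default shows the details of this network.

For help with docker compose commands: docker compose --help

Start the services: docker compose up -d; -d runs the containers (the application) in the background

The docker compose logs and ps commands let you check the application's state and help with debugging. After changing code, run docker compose build to build a new image, then docker compose up -d to replace the running containers. Note that Compose preserves all data volumes of the old containers, so databases and caches stored in those volumes are still there after the containers are updated. If you changed the Compose YAML file but do not need a new image, docker compose up -d alone makes Compose replace the containers with the new configuration.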

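Basic usage:

1. Run the containers: docker compose up -d

2. List images: docker images

3. Stop, then remove the containers: docker compose stop and docker compose rm

Options:

-f, --file: specify the Compose file; the default is docker-compose.yml (Compose v2 also accepts compose.yaml, as used above)

-p, --project-name: specify the project name; defaults to the name of the project directory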

# Commands supported by Compose

build Build or rebuild services

config Validate and view the Compose file

create Create services (creates the containers for the services)

down Stop and remove containers, networks, images, and volumes (removes what up created; images and volumes are removed only when --rmi or --volumes is given)

events Receive real time events from containers (streams events for every container in the project)

exec Execute a command in a running container (the equivalent of docker exec; a TTY is allocated by default, so docker compose exec web sh gives an interactive prompt)

help Get help on a command

images List images

kill Kill containers (force-stops service containers by sending SIGKILL)

logs View output from containers

pause Pause services

port Print the public port for a port binding

ps List containers (all containers currently in the project)

pull Pull service images

push Push service images

restart Restart services

rm Remove stopped containers

run Run a one-off command (on the specified service)

start Start services (existing service containers)

stop Stop services

top Display the running processes

unpause Unpause services

up Create and start containers (builds images, creates and starts the services, and attaches the related containers in one step)

version Show the Docker-Compose version information

The default Compose file name is docker-compose.yml

Official reference: https://docs.docker.com/compose/compose-file/#compose-file-structure-and-examples

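# Syntax: three layers
version: "3.8"
services:  # define any number of services
  service1:
    # configuration of this service
  service2:
    # configuration of this service
# networks, volumes, and other global rules used by the services
volumes:
networks:
configs:
...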

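# Common keys:
version    # Compose file format version (obsolete in the current Compose Specification)
services   # defines all services; each first-level key under services is a service name
  <service>
    build            # path of the build context, or an object with context and an optional dockerfile
      context        # path of the directory that contains the Dockerfile
      dockerfile     # name of the Dockerfile inside the context directory (default: Dockerfile)
      args           # arguments the Dockerfile needs during the build
    command          # overrides the default command run when the container starts; shell form and [] form are supported
    container_name   # name of the container (same as docker run --name)
    deploy           # v3 and later: configuration for deploying and running the service
                     # the deploy section is used by docker stack, which depends on docker swarm
    depends_on       # container start order (resolves dependencies between containers)
    dns              # DNS servers (same as docker run --dns)
    entrypoint       # overrides the container's default entrypoint
    env_file         # reads variables from the given file(s) into the container environment; a single value or a list of files
    environment      # sets environment variables; values here override those from env_file
    expose           # exposes ports without mapping them to the host, similar to the Dockerfile EXPOSE instruction
    external_links   # links to containers not defined in docker-compose.yml or not managed by Compose
    extra_hosts      # adds host records to the container's /etc/hosts
    healthcheck      # v2.1 and later: container health check
    image            # image to use, from a remote registry or local
    labels           # adds metadata to the container as Docker labels
    logging          # logging configuration of the container
    network_mode     # network mode (same as docker run --net; ignored when deploying with swarm)
    networks         # joins the container to the given networks (same effect as docker network connect)
                     # networks can be a top-level key of the Compose file and a second-level key under a service
    pid: 'host'      # shares the host's process (PID) namespace
    ports            # maps ports between the host and the container; two syntaxes are supported
      - "8000:8000"  # maps container port 8000 to host port 8000
    volumes          # volume mappings between the container and the host
      - /var/lib/mysql               # anonymous volume: the container's /var/lib/mysql is stored in a Docker-managed directory on the host
      - /opt/data:/var/lib/mysql     # maps the container's /var/lib/mysql to /opt/data on the host
      - ./cache:/tmp/cache           # maps the container's /tmp/cache to ./cache, relative to the Compose file's location on the host
      - ~/configs:/etc/configs/:ro   # maps a host directory into the container, read-only
      - datavolume:/var/lib/mysql    # datavolume is a volume defined under the top-level volumes key and referenced here
...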

# depends_on example
version: '3'
services:
  web:
    build: .
    depends_on:
      - db
      - redis
  redis:
    image: redis
    ports:
      - "6379:6379"
  db:
    image: postgres
# docker compose up starts services in dependency order; in this example redis and db are started before web
# By default, docker compose up web also starts redis and db, because the file declares the dependency
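Starting services in dependency order is a topological sort of the depends_on graph. The sketch below illustrates the idea with the standard `tsort` utility; it is not how Compose is implemented, and `start_order` is a hypothetical wrapper name. Each input line reads "DEPENDENCY SERVICE", meaning the left name must start first.

```shell
# start_order (hypothetical helper): read "DEPENDENCY SERVICE" pairs on stdin
# and print one valid start order, dependencies first.
start_order() {
  tsort
}

# The depends_on graph of the example: web depends on db and on redis.
printf '%s\n' 'db web' 'redis web' | start_order
```

The relative order of db and redis is not fixed, since neither depends on the other, but web always comes last. Note that depends_on in its short form only waits for the dependency's container to be started, not for the service inside it to be ready.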

# network example
version: '3.7'
services:
  test:
    image: nginx:1.14-alpine
    container_name: mynginx
    command: ifconfig
    networks:
      app_net:  # uses the app_net network defined under networks below
        ipv4_address: 172.16.238.10
networks:
  app_net:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.16.238.0/24
          gateway: 172.16.238.1

Build a WordPress blog: https://github.com/docker/awesome-compose/tree/master/official-documentation-samples/wordpress/

[root@localhost ~]# mkdir mywordpress

[root@localhost ~]# cd mywordpress

[root@localhost mywordpress]# vi docker-compose.yaml

version: '3.8'
services:
  db:
    image: mysql:5.7
    volumes:
      - db_data:/var/lib/mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: somewordpress
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: wordpress
  wordpress:
    depends_on:
      - db
    image: wordpress:latest
    ports:
      - "8000:80"
    restart: always
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: wordpress
      WORDPRESS_DB_NAME: wordpress
volumes:
  db_data:

[root@localhost mywordpress]# docker compose up -d

Test in a browser: http://10.1.1.128:8000/

Open-source project with common environment setups: https://gitee.com/zhengqingya/docker-compose

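5. Docker Advanced 2: Docker Swarm

Docker Swarm official documentation: https://docs.docker.com/engine/swarm/how-swarm-mode-works/nodes/

Raft protocol

With only two manager nodes, if one fails the other becomes unusable as well. Raft requires a majority (quorum) of the managers to be alive: with N managers, more than N/2 must be reachable, otherwise the cluster can no longer be managed. With two managers both must stay up, so a production environment needs at least 3 manager nodes (which tolerates one failure).

1. With only two manager nodes, stopping one makes the cluster unavailable (simulate the failure with systemctl stop docker; docker node ls then fails).

2. If a worker node fails, its status changes to Down and it becomes unavailable. A cluster can have no worker nodes at all, only manager nodes.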
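The majority rule can be checked with a little arithmetic: with N managers the quorum is floor(N/2) + 1, and the cluster tolerates floor((N-1)/2) manager failures. A minimal sketch, with `quorum` and `tolerated_failures` as hypothetical helper names:

```shell
# quorum N: smallest number of managers that forms a majority of N.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: how many managers may fail while a majority remains.
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 5 7; do
  echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

The loop shows that 2 managers tolerate no failure at all (quorum 2), 3 managers tolerate one, and 5 tolerate two, which is why an even number of managers adds no fault tolerance over the next lower odd number.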

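Base environment: start 4 servers (2 cores, 4 GB), named node1, node2, node3, and node4, each with Docker installed.

Clone 4 virtual machines, disable NetworkManager, firewalld, SELinux, and so on, then set the IP and restart the network service.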

systemctl stop NetworkManager

systemctl disable NetworkManager

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

vim /etc/selinux/config

Change the value of SELINUX to disabled

SELINUX=disabled

Build the cluster

[root@node1 ~]# docker swarm --help

Usage: docker swarm COMMAND

Manage Swarm

Commands:

# Initialize a node

init Initialize a swarm

# Join a node

join Join a swarm as a node and/or manager

Run 'docker swarm COMMAND --help' for more information on a command.

Initialize a node. For help: docker swarm init --help

Initialize and advertise one node (the initializing node becomes the leader and is a manager node)

[root@node1 ~]# docker swarm init --advertise-addr 10.1.1.118

Swarm initialized: current node (tdso5plqf2fckel6faqw2mrbw) is now a manager.

Command for adding a worker node:

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-76pfbtozjh2fexrudd2j84zah 10.1.1.118:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The initializing node is the leader and is a manager node

[root@node1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

tdso5plqf2fckel6faqw2mrbw * node1 Ready Active Leader 24.0.7

On the initializing node (this node), run docker swarm join-token manager to generate the command for adding a manager node

[root@node1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

Command for adding a manager node:

docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-amzxa805f4xyqn8jb2rmaey4f 10.1.1.118:2377

If Docker on the current machine has already joined a swarm cluster, the node must leave that swarm before it can join another.

[root@node1 ~]# docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-amzxa805f4xyqn8jb2rmaey4f 10.1.1.118:2377

Error response from daemon: This node is already part of a swarm. Use "docker swarm leave" to leave this swarm and join another one.

docker swarm leave

docker swarm leave --force

node2 and node3 join as manager nodes

[root@node2 yum.repos.d]# docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-amzxa805f4xyqn8jb2rmaey4f 10.1.1.118:2377

This node joined a swarm as a manager.

[root@node3 yum.repos.d]# docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-amzxa805f4xyqn8jb2rmaey4f 10.1.1.118:2377

This node joined a swarm as a manager.

node4 joins as a worker node

[root@node4 yum.repos.d]# docker swarm join --token SWMTKN-1-6c4e41htpvfttd6xiz3q7dq5kojn81w85win4j5h2rl8ayvtdt-76pfbtozjh2fexrudd2j84zah 10.1.1.118:2377

This node joined a swarm as a worker.

Check that the cluster is set up: run docker node ls on any manager node

[root@node3 yum.repos.d]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

tdso5plqf2fckel6faqw2mrbw node1 Ready Active Leader 24.0.7

ns95pn0tfc90tn8q2lq8c3vr8 node2 Ready Active Reachable 24.0.7

rcfb45hukbi6s9ow8iysdogat * node3 Ready Active Reachable 24.0.7

33il0e3zmb7sl1m22i36zx3g3 node4 Ready Active 24.0.7

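Using Swarm

On a single host, docker compose up starts a service with Compose; in a cluster, docker service starts a service with Swarm.

Single-host service: one container on one node; with docker run, heavy traffic can overwhelm it

Cluster service: multiple containers across multiple nodes, sharing the traffic through load balancing

Single host: docker run. Cluster: docker service create. For help: docker service --help

A service started with docker service can be reached through any node in the cluster, not only through the nodes that run its containers.

By default, manager nodes also act as worker nodes and run service containers. Managers assign tasks to nodes according to the service's replica count; a node receives the tasks dispatched by a manager and executes each one by running it as a container, much like docker run.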

[root@node1 ~]# docker service create -p 8888:80 --name my-nginx nginx

zxs3o1cxotyiwv17imt11ts5g

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 1/1 nginx:latest *:8888->80/tcp

[root@node2 yum.repos.d]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

af8ea597a0ba nginx:latest "/docker-entrypoint.…" 41 seconds ago Up 39 seconds 80/tcp my-nginx.1.xtlvs76nohaqw46vjq03o5m64

View the service's details

[root@node1 ~]# docker service inspect --pretty my-nginx

ID: ssr3jqh13sbkhthqf1g2ek8zc

Name: my-nginx

# Replica count (Replicated mode)

Service Mode: Replicated

Replicas: 1

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: nginx:latest@sha256:10d1f5b58f74683ad34eb29287e07dab1e90f10af243f151bb50aa5dbb4d62ee

Init: false

Resources:

Endpoint Mode: vip

Ports:

PublishedPort = 8888

Protocol = tcp

TargetPort = 80

PublishMode = ingress

[root@node1 ~]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xtlvs76nohaq my-nginx.1 nginx:latest node2 Running Running 7 minutes ago

Create a service with multiple replicas

[root@node1 ~]# docker service create -p 7777:80 --replicas 3 --name my-nginx2 nginx

6vu7p9hsohzvg8kauj5oumssm

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

6vu7p9hsohzv my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

[root@node1 ~]# docker service ps my-nginx2

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xq3ho434gq71 my-nginx2.1 nginx:latest node1 Running Running 27 seconds ago

gyampfuf9m86 my-nginx2.2 nginx:latest node2 Running Running 26 seconds ago

xx2b6skk3yy0 my-nginx2.3 nginx:latest node3 Running Running 27 seconds ago

If a node fails, the containers it was running are started on other nodes

Simulate a failure of node2: [root@node2 ~]# systemctl stop docker

[root@node1 ~]# docker service ps my-nginx2

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xq3ho434gq71 my-nginx2.1 nginx:latest node1 Running Running 3 minutes ago

33ypg5qaflw0 my-nginx2.2 nginx:latest node4 Running Running 2 minutes ago

gyampfuf9m86 \_ my-nginx2.2 nginx:latest node2 Shutdown Running 3 minutes ago

xx2b6skk3yy0 my-nginx2.3 nginx:latest node3 Running Running 3 minutes ago
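In the docker service ps listing, rows whose NAME column starts with `\_` are the history of a task slot, and the row above them is the slot's current task. The filter below is a small sketch for pulling out only the current tasks and their nodes; `current_tasks` is a hypothetical helper and assumes the default column layout shown in this section.

```shell
# current_tasks (hypothetical helper): read `docker service ps` output on stdin
# and print "TASK NODE" for current tasks, skipping the header and \_ history rows.
current_tasks() {
  awk '$1 != "ID" && $2 != "\\_" { print $2, $4 }'
}

# Rows copied from the listing taken after node2 was stopped.
printf '%s\n' \
  'ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS' \
  'xq3ho434gq71 my-nginx2.1 nginx:latest node1 Running Running 3 minutes ago' \
  '33ypg5qaflw0 my-nginx2.2 nginx:latest node4 Running Running 2 minutes ago' \
  'gyampfuf9m86 \_ my-nginx2.2 nginx:latest node2 Shutdown Running 3 minutes ago' \
  'xx2b6skk3yy0 my-nginx2.3 nginx:latest node3 Running Running 3 minutes ago' | current_tasks
```

For the sample rows this lists my-nginx2.1 on node1, my-nginx2.2 on node4, and my-nginx2.3 on node3: slot 2 has moved from the stopped node2 to node4.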

Scale replicas up or down while the service is running: docker service update or docker service scale

[root@node1 ~]# docker service update --replicas 3 my-nginx

my-nginx

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 3/3 nginx:latest *:8888->80/tcp

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

[root@node1 ~]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xtlvs76nohaq my-nginx.1 nginx:latest node2 Running Running 18 minutes ago

bbgqmt6ajc8t my-nginx.2 nginx:latest node4 Running Running 34 seconds ago

c5bdd9g5igka my-nginx.3 nginx:latest node3 Running Running 34 seconds ago

[root@node1 ~]# docker service scale my-nginx=5

my-nginx scaled to 5

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 5/5 nginx:latest *:8888->80/tcp

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

[root@node1 ~]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xtlvs76nohaq my-nginx.1 nginx:latest node2 Running Running 30 minutes ago

xxlpyup8mo93 my-nginx.2 nginx:latest node3 Running Running 7 minutes ago

ss23ctr0zm3n my-nginx.3 nginx:latest node1 Running Running 6 minutes ago

y4i3nzwyl8ue my-nginx.4 nginx:latest node2 Running Running 54 seconds ago

e9bzbpas74kk my-nginx.5 nginx:latest node4 Running Running 55 seconds ago

Roll back to the previous state (repeated rollbacks toggle between the current and the previous configuration)

[root@node1 ~]# docker service rollback my-nginx

my-nginx

rollback: manually requested rollback

overall progress: rolling back update: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 3/3 nginx:latest *:8888->80/tcp

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

[root@node1 ~]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xtlvs76nohaq my-nginx.1 nginx:latest node2 Running Running 32 minutes ago

xxlpyup8mo93 my-nginx.2 nginx:latest node3 Running Running 9 minutes ago

ss23ctr0zm3n my-nginx.3 nginx:latest node1 Running Running 9 minutes ago

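Gray release (rolling update): update the service to a new container image in batches

# --image nginx:1.18.0-alpine: the new container image

# --update-parallelism 1: number of tasks updated at the same time

# --update-delay 10s: delay between update batches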

[root@node1 ~]# docker service update --image nginx:1.18.0-alpine --update-parallelism 1 --update-delay 10s my-nginx

my-nginx

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@node2 yum.repos.d]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 2/3 nginx:1.18.0-alpine *:8888->80/tcp

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

[root@node2 yum.repos.d]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xtlvs76nohaq my-nginx.1 nginx:latest node2 Running Running 35 minutes ago

n8zj8pk6qc00 my-nginx.2 nginx:1.18.0-alpine node3 Running Running 28 seconds ago

xxlpyup8mo93 \_ my-nginx.2 nginx:latest node3 Shutdown Shutdown 34 seconds ago

ppeabem9a2g8 my-nginx.3 nginx:1.18.0-alpine node1 Running Running 8 seconds ago

ss23ctr0zm3n \_ my-nginx.3 nginx:latest node1 Shutdown Shutdown 15 seconds ago

[root@node2 yum.repos.d]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

mujron7k6ek8 my-nginx.1 nginx:1.18.0-alpine node2 Running Running 9 seconds ago

xtlvs76nohaq \_ my-nginx.1 nginx:latest node2 Shutdown Shutdown 15 seconds ago

n8zj8pk6qc00 my-nginx.2 nginx:1.18.0-alpine node3 Running Running 48 seconds ago

xxlpyup8mo93 \_ my-nginx.2 nginx:latest node3 Shutdown Shutdown 53 seconds ago

ppeabem9a2g8 my-nginx.3 nginx:1.18.0-alpine node1 Running Running 28 seconds ago

ss23ctr0zm3n \_ my-nginx.3 nginx:latest node1 Shutdown Shutdown 34 seconds ago

[root@node2 yum.repos.d]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ssr3jqh13sbk my-nginx replicated 3/3 nginx:1.18.0-alpine *:8888->80/tcp

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp
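The --update-parallelism and --update-delay options determine how many batches a rolling update needs and how much waiting time the delays add at minimum. A rough sketch of that arithmetic, with `update_batches` and `min_delay_seconds` as hypothetical helper names; the result is a lower bound only, since it ignores image pulls and container start time:

```shell
# update_batches REPLICAS PARALLELISM: number of batches, ceil(REPLICAS / PARALLELISM).
update_batches() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# min_delay_seconds REPLICAS PARALLELISM DELAY: the delay applies between
# batches, so the waiting time is at least (batches - 1) * DELAY seconds.
min_delay_seconds() {
  echo $(( ($(update_batches "$1" "$2") - 1) * $3 ))
}

# The my-nginx update above: 3 replicas, parallelism 1, delay 10s.
echo "batches=$(update_batches 3 1) min_delay=$(min_delay_seconds 3 1 10)s"
```

For the update in this section the sketch gives 3 batches and at least 20 seconds of delay, consistent with the roughly 20-second gaps between the task start times in the docker service ps listings.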

Remove the service: its containers are removed from all nodes

[root@node1 ~]# docker service rm my-nginx

my-nginx

[root@node1 ~]# docker service ps my-nginx

no such service: my-nginx

Create a tomcat service

Create a dedicated network for the service. For help: docker network create --help

[root@node1 ~]# docker network create -d overlay tomcat-net

6ny9tzkxr0l0ensmguvsulyed

[root@node1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

eb0252e056f5 bridge bridge local

724126650e2b docker_gwbridge bridge local

f802b86354b8 host host local

ij4fnv5g6mpb ingress overlay swarm

54a7dfe3d977 none null local

6ny9tzkxr0l0 tomcat-net overlay swarm

Create the tomcat service

docker service create --name my-tomcat \

--network tomcat-net \

-p 8000:8080 \

--replicas 3 \

tomcat

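Gray release (rolling update): update the service to a new container image in batches

# --image tomcat:8.5.46-jdk8-openjdk: the new container image

# --update-parallelism 1: number of tasks updated at the same time

# --update-delay 10s: delay between update batches

The newer tomcat image from the Alibaba Cloud mirror has no home page; switch to this older tomcat image, which has one: tomcat:8.5.46-jdk8-openjdk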

[root@node1 ~]# docker service update --image tomcat:8.5.46-jdk8-openjdk --update-parallelism 1 --update-delay 10s my-tomcat

my-tomcat

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

qkay5zggcn81 my-nginx2 replicated 3/3 nginx:latest *:7777->80/tcp

sqvjqu6icj2h my-tomcat replicated 3/3 tomcat:8.5.46-jdk8-openjdk *:8000->8080/tcp

[root@node1 ~]# docker service ps my-tomcat

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

k9kofy18huok my-tomcat.1 tomcat:8.5.46-jdk8-openjdk node3 Running Running 3 minutes ago

3hj0u6rredqh \_ my-tomcat.1 tomcat:latest node1 Shutdown Shutdown 7 minutes ago

lporfh2y59y6 my-tomcat.2 tomcat:8.5.46-jdk8-openjdk node2 Running Preparing 36 seconds ago

x3g7zsnkcsgk \_ my-tomcat.2 tomcat:latest node2 Shutdown Shutdown 3 minutes ago

qdhqml3vy69a my-tomcat.3 tomcat:8.5.46-jdk8-openjdk node1 Running Running 3 minutes ago

jvziz6snnrbj \_ my-tomcat.3 tomcat:latest node4 Shutdown Shutdown 5 minutes ago

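Remove the network. A network that is still attached to a service cannot be removed, so this assumes my-tomcat has already been removed (for example with docker service rm my-tomcat).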

[root@node1 ~]# docker network rm tomcat-net

tomcat-net

[root@node1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

eb0252e056f5 bridge bridge local

724126650e2b docker_gwbridge bridge local

f802b86354b8 host host local

ij4fnv5g6mpb ingress overlay swarm

54a7dfe3d977 none null local

Deploying a WordPress blog

1. Create the network

[root@node1 ~]# docker network create -d overlay wordpress-net

ms7lkpqdwtzukhccwd9bk172i

[root@node1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

eb0252e056f5 bridge bridge local

724126650e2b docker_gwbridge bridge local

f802b86354b8 host host local

ij4fnv5g6mpb ingress overlay swarm

54a7dfe3d977 none null local

ms7lkpqdwtzu wordpress-net overlay swarm

2. Create the mysql service

[root@node1 ~]# docker service create --name my-mysql --env MYSQL_ROOT_PASSWORD=123456 --env MYSQL_DATABASE=wordpress --network wordpress-net --mount type=volume,source=mysql-data,destination=/var/lib/mysql mysql:5.7.24

iw1aot8ca86jp16payccw0e9d

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

iw1aot8ca86j my-mysql replicated 1/1 mysql:5.7.24

[root@node1 ~]# docker service ps my-mysql

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

n24gs0184yf6 my-mysql.1 mysql:5.7.24 node3 Running Running 18 minutes ago

3. Create the wordpress service

[root@node1 ~]# docker service create --name my-wordpress -p 800:80 --env WORDPRESS_DB_USER=root --env WORDPRESS_DB_PASSWORD=123456 --env WORDPRESS_DB_HOST=my-mysql:3306 --env WORDPRESS_DB_NAME=wordpress --network wordpress-net wordpress

7n8uzmb6d5i5auhyiqdnv1a5y

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

iw1aot8ca86j my-mysql replicated 1/1 mysql:5.7.24

7n8uzmb6d5i5 my-wordpress replicated 1/1 wordpress:latest *:800->80/tcp

[root@node1 ~]# docker service ps my-wordpress

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ngl96fw92a5w my-wordpress.1 wordpress:latest node2 Running Running about a minute ago

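Swarm has two modes for publishing a service port: ingress and host. If no mode is specified, ingress is used.

1. In ingress mode (the routing mesh, built on the ingress overlay network), a service started with docker service create can be reached on its published port through any node in the swarm, not only through the nodes that run its containers.

2. In host mode, the port is bound directly on the nodes that run a task, so the service can be reached only through those nodes.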
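The two publish modes can be compared side by side with the long form of --publish. This is a minimal sketch, assuming a POSIX shell on a manager node. The function name create_published and the service names in the usage note are hypothetical:

```shell
# Create a service and publish one port in the given mode.
#   mode: "ingress" (routing mesh, the default) or
#         "host"    (bind the port only on nodes that run a task)
create_published() {
  name="$1"
  mode="$2"
  published="$3"
  target="$4"
  image="$5"
  docker service create \
    --name "$name" \
    --publish "mode=$mode,published=$published,target=$target" \
    "$image"
}
```

For example, create_published web-ingress ingress 8080 80 nginx answers on port 8080 of every node, while create_published web-host host 8081 80 nginx answers only on the node that runs the task. In host mode two tasks on the same node cannot both bind the same published port.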

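How nodes work: manager nodes hand out tasks, and tasks can run on both worker nodes and manager nodes.

How services run: a service is created on a manager node, which assigns its tasks to manager or worker nodes. Each task runs one container, much as if it executed its own docker run command.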

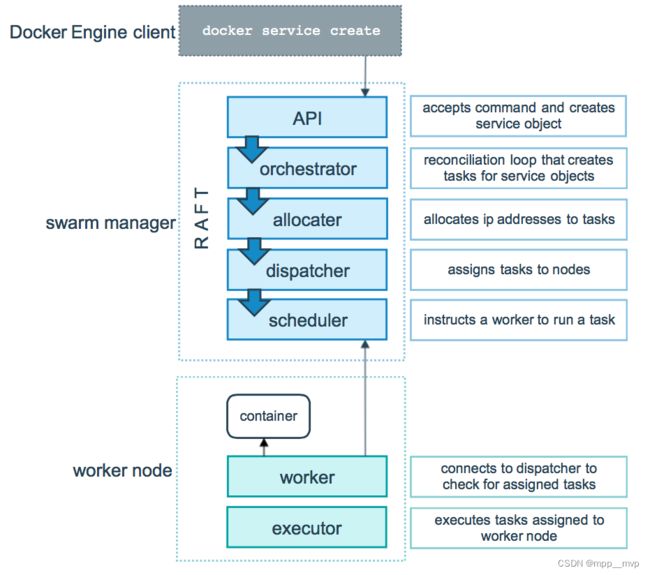

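Tasks and scheduling

swarm manager:

-- 1. API: the request is received by the swarm manager's API, which accepts the command and creates the service object.

-- 2. orchestrator: creates tasks for the service.

-- 3. allocator: allocates an IP address to each task.

-- 4. dispatcher: assigns tasks to nodes.

-- 5. scheduler: instructs a worker to run a task (the scheduler decides which node runs it).

worker node: receives tasks from the manager and runs them (each task becomes a running container, as with docker run).

-- 1. container: the container created for the task.

-- 2. worker: connects to the dispatcher to check for assigned tasks.

-- 3. executor: executes the tasks assigned to the worker node.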

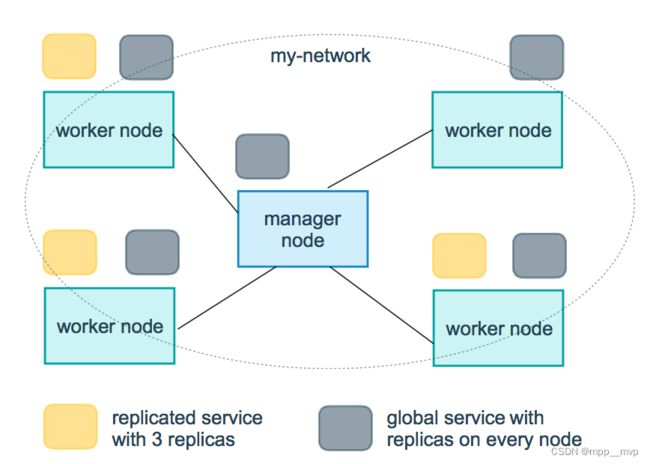
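The result of this pipeline, that is, which node each task ended up on, can be read back from a manager. This is a minimal sketch, assuming a POSIX shell with awk and sort available. The function name tasks_per_node is illustrative:

```shell
# Print "<node> <count>" for every node that currently has running tasks
# of the given service, sorted by node name.
tasks_per_node() {
  docker service ps --filter desired-state=running --format '{{.Node}}' "$1" |
    awk '{ n[$1]++ } END { for (k in n) print k, n[k] }' |
    sort
}
```

For example, tasks_per_node my-wordpress lists the nodes that the scheduler chose for that service's tasks.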

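A service deployed in Docker Swarm is one of two types: replicated or global (replicated mode and global mode).

1. replicated: the default mode; one replica unless --replicas says otherwise.

2. global: one container of the service runs on every node (typical use: per-node agents such as log collection and monitoring).

The figure below shows a replicated service with 3 replicas (yellow) and a global service (gray):

A service runs in replicated mode by default with a single replica; the number of replicas you specify is the number of tasks that run in the swarm.

docker service create --mode replicated --name mynginx nginx   # replicated is the default, so --mode replicated is optional

A global service is similar to a DaemonSet in Kubernetes: it runs one task on every node, so no replica count has to be specified in advance. When a new node joins the cluster, a new task is started on it automatically.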

[root@node1 ~]# docker service create --mode global --name test alpine ping baidu.com

lr916gtyy08f5xxog1cbiz38p

overall progress: 4 out of 4 tasks

ns95pn0tfc90: running [==================================================>]

rcfb45hukbi6: running [==================================================>]

v2vp8agnzi7x: running [==================================================>]

tdso5plqf2fc: running [==================================================>]

verify: Service converged

Every node now runs a container for this service; check with docker ps on each node.
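The same check can be made from a manager without logging in to each node. This is a minimal sketch, assuming a POSIX shell. The function name global_coverage is illustrative:

```shell
# Print "<running tasks>/<nodes>" for a service, for example "4/4".
# For a global service the two numbers are expected to match, as long as
# no node is drained or down (such nodes are counted but run no task).
global_coverage() {
  tasks=$(docker service ps -q --filter desired-state=running "$1" | wc -l | tr -d ' ')
  nodes=$(docker node ls -q | wc -l | tr -d ' ')
  echo "$tasks/$nodes"
}
```

For example, global_coverage test compares the task count of the test service created above with the number of nodes in the swarm.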

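By default the scheduler decides which nodes a service's containers run on. Labelling nodes makes it possible to run them on specific nodes:

1. Define a label on each node.

2. Constrain the service to nodes that carry a given label.

Label a node with docker node update --label-add env=test <node ID> (docker node ls shows the node IDs):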

[root@node1 ~]# docker node update --label-add env=test rcfb45hukbi6s9ow8iysdogat

rcfb45hukbi6s9ow8iysdogat (node3 now carries the label env=test)
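Before constraining a service it helps to confirm that the label is really set. This is a minimal sketch, assuming a POSIX shell on a manager node and a Docker version whose docker node ls supports the node.label filter. The function names are illustrative:

```shell
# Show the swarm labels set on a node (printed as a Go map).
node_labels() {
  docker node inspect --format '{{ .Spec.Labels }}' "$1"
}

# List the nodes that carry a given label, e.g. nodes_with_label env test.
nodes_with_label() {
  docker node ls --filter "node.label=$1=$2"
}

# Remove a label from a node again, e.g. unlabel_node node3 env.
unlabel_node() {
  docker node update --label-rm "$2" "$1"
}
```

For example, node_labels node3 shows the env label added above, and nodes_with_label env test lists every node a constraint on env==test could match.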

# Constrain the service's containers to nodes with the given label

docker service create \

--constraint node.labels.env==test \

--replicas 2 \

--name mynginx \

--publish 8080:80 \

nginx

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

hg4bk5npz55x mynginx replicated 2/2 nginx:latest *:8080->80/tcp

[root@node1 ~]# docker service ps mynginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

knftwy6elvfg mynginx.1 nginx:latest node3 Running Running 44 seconds ago

z8mldtcci8nh mynginx.2 nginx:latest node3 Running Running 44 seconds ago

Move the mynginx service to nodes with a different label

docker node update --label-add env=prod v2vp8agnzi7xuzt6hg09y1ag4   # label node4 as env=prod

docker service update --constraint-rm node.labels.env==test mynginx

docker service update --constraint-add node.labels.env==prod mynginx

[root@node1 ~]# docker service ps mynginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

m9diwv111m61 mynginx.1 nginx:latest node4 Running Running about a minute ago

hl7o034egajz \_ mynginx.1 nginx:latest node3 Shutdown Shutdown about a minute ago

5nr5kzm28izz mynginx.2 nginx:latest node4 Running Running about a minute ago

jv6mu30prpx7 \_ mynginx.2 nginx:latest node3 Shutdown Shutdown about a minute ago
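The two constraint updates can also be issued as one command, so the service spec changes only once. This is a minimal sketch, assuming a POSIX shell on a manager node. The function name move_service is illustrative:

```shell
# Swap a label constraint on a service in a single update:
# drop node.labels.<key>==<old> and add node.labels.<key>==<new>.
move_service() {
  svc="$1"
  key="$2"
  old="$3"
  new="$4"
  docker service update \
    --constraint-rm "node.labels.$key==$old" \
    --constraint-add "node.labels.$key==$new" \
    "$svc"
}
```

For example, move_service mynginx env test prod corresponds to the two docker service update commands above.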

Docker Swarm networking: https://docs.docker.com/engine/swarm/ingress/

# docker network ls

NETWORK ID NAME DRIVER SCOPE

5e37371ce45d bridge bridge local

17c1fa186281 docker_gwbridge bridge local

15b68b92218d host host local

ij4fnv5g6mpb ingress overlay swarm

6cf80a9ca11c none null local

ms7lkpqdwtzu wordpress-net overlay swarm

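1. Overlay networks manage communication among the Docker daemons that take part in the swarm. A service can be attached to one or more existing overlay networks so that services can talk to each other.

2. The ingress network is a special overlay network that load-balances among a service's nodes: when several replicas are running, each request is handed to one of them. When any swarm node receives a request on a published port, it passes the request to a module called IPVS. IPVS keeps track of all IP addresses that take part in the service, selects one of them, and routes the request to it over the ingress network. The ingress network is created automatically when a swarm is initialized or joined. Most users do not need to customize it, but Docker 17.05 and later allow it.

3. docker_gwbridge is a bridge network that connects the overlay networks (including the ingress network) to an individual Docker daemon's physical network. By default, every container a service runs is connected to the docker_gwbridge network of its local Docker daemon host. The docker_gwbridge network is created automatically when a swarm is initialized or joined. Most users do not need to customize it, but Docker allows it.

1. docker_gwbridge and ingress are created automatically by swarm once docker swarm init or docker swarm join has been run.

2. docker_gwbridge is a bridge network; it connects the containers on a host to the host itself.

3. ingress routes a service's traffic among containers on multiple hosts.

4. custom-network stands for an overlay network created by the user; usually you create your own network and attach your services to it. The ingress network load-balances requests that arrive on published ports. A user-defined overlay network publishes no ports by itself, but services attached to it can reach each other by service name, and swarm balances those internal requests across the service's tasks.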
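The internal side of this can be observed from the command line. This is a minimal sketch, assuming a POSIX shell on a manager node. The function names are illustrative, and resolve_service only works on an overlay network created with --attachable, which the networks created earlier are not:

```shell
# Print the virtual IPs swarm assigned to a service, one per attached network.
service_vips() {
  docker service inspect \
    --format '{{ range .Endpoint.VirtualIPs }}{{ println .Addr }}{{ end }}' "$1"
}

# Resolve a service name from a throwaway container on the same overlay
# network. Assumption: the network was created with
#   docker network create -d overlay --attachable <name>
resolve_service() {
  docker run --rm --network "$1" alpine nslookup "$2"
}
```

For example, service_vips my-mysql shows the address that my-wordpress reaches when it connects to my-mysql:3306.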

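6. Docker advanced, part 3: Docker Stack

Single host: docker run (one node, one container), docker compose (one node, multiple containers)

Cluster: docker service (multiple nodes, one service), docker stack (multiple nodes, multiple services)

On a single host, docker compose can orchestrate multiple services, whereas docker service only deploys one service at a time. docker stack orchestrates multiple services across a Docker cluster. It is part of the Docker CLI, so installing Docker also installs docker stack.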
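The basic stack life cycle looks like this. This is a minimal sketch, assuming a POSIX shell on a manager node. The function names are illustrative, and the file and stack names in the usage note are placeholders:

```shell
# Deploy (or update) a stack from a compose file, then list its services
# and the tasks behind them.
stack_up() {
  file="$1"
  stack="$2"
  docker stack deploy -c "$file" "$stack" &&
    docker stack services "$stack" &&
    docker stack ps "$stack"
}

# Remove the stack and the services and networks it created.
stack_down() {
  docker stack rm "$1"
}
```

For example, stack_up docker-compose.yml vote deploys everything the compose file describes as a stack named vote, and stack_down vote removes it again.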

Deploy a voting application: https://gitee.com/landylee007/voting-app

[root@node1 ~]# mkdir vote

[root@node1 ~]# cd vote

[root@node1 vote]# cat docker-compose.yml

version: "3"

services:

  redis:

    image: redis:alpine

    networks:

      - frontend

    deploy:

      replicas: 1

      update_config:

        parallelism: 2

        delay: 10s

      restart_policy:

        condition: on-failure

  db: