k8s Data Management: Dynamic Provisioning with StorageClass

1. Background

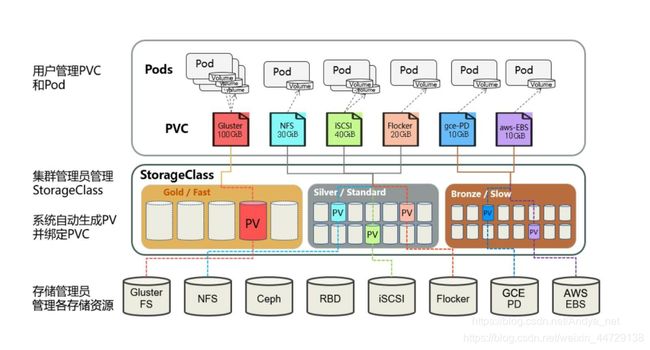

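PVs are created by operations staff, while developers work with PVCs. A large cluster may need a great many PVs, and creating every one of them by hand is tedious. Dynamic Provisioning was introduced to solve this. The PVs created by hand in the earlier examples use the other approach, Static Provisioning. The key to dynamic provisioning is the StorageClass, which acts as a template for creating PVs.

A StorageClass defines the properties of the PVs it produces, such as the storage type, and names the storage plugin that creates them; the size of each PV comes from the PVC's request. The end result: a user submits a PVC that names a storage class, and if it matches a StorageClass we have defined, a PV is created automatically and bound to that PVC.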

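Dynamic Provisioning is the mechanism that creates PVs automatically.

Static Provisioning is the practice of managing PVs by hand.

A StorageClass object is the template from which PVs are created.

A StorageClass definition usually contains a name, the type of backend storage plugin, and the parameters that plugin needs. Once defined, it behaves like one large pool of storage.

When the storageClassName in a PVC equals the name of a StorageClass, the plugin behind that StorageClass carves a PV of the requested storage size out of the pool, and the new PV is bound to the PVC.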

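A StorageClass object defines two things:

First, the properties of the PV, for example the storage type.

Second, the storage plugin needed to create such PVs, for example Ceph.

StorageClass supports two reclaimPolicy values, Delete and Retain; the default is Delete.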

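Kubernetes uses the storageClassName in the user's PVC to find the matching StorageClass, then calls the storage plugin declared by that StorageClass to create the required PV.

With Dynamic Provisioning, operations staff only need to create a limited number of StorageClass objects in the cluster.

When a developer submits a PVC that contains a storageClassName field, Kubernetes creates the corresponding PV from that StorageClass.

Without a StorageClass, operations staff must manually create a matching PV for every PVC a developer defines and bind the two, which greatly increases the work of creating PVs.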

2. Procedure

step1: Create a StorageClass resource (see step4 in section 3).

step2: Set spec.storageClassName in the PVC's YAML (see step5 in section 3).
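
The two steps can be sketched as follows. This is only an illustration, not the manifests used later in this article: the names `demo-sc` and `demo-claim`, the file name, and the provisioner string `example.com/nfs` are placeholders assumed for the example, and `reclaimPolicy: Retain` is included only to show where the default `Delete` can be overridden.

```shell
# Write a StorageClass and a PVC that refers to it by name.
# All names below (demo-sc, demo-claim, example.com/nfs) are placeholders.
cat > storageclass-demo.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: demo-sc                  # step1: the StorageClass name
provisioner: example.com/nfs     # must match a provisioner running in the cluster
reclaimPolicy: Retain            # optional; Delete is used when omitted
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  storageClassName: demo-sc      # step2: refer to the StorageClass by name
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi               # requested size of the PV
EOF

# Apply it against a cluster where the provisioner is running:
#   kubectl apply -f storageclass-demo.yaml
```

The only link between the two objects is the name: the PVC's `spec.storageClassName` has to equal the StorageClass's `metadata.name`.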

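3. Dynamic PV creation on NFS (worked example, based on the official documentation)

Kubernetes has no built-in dynamic provisioner for NFS, so an external plugin is needed: nfs-client.

step1: Download the nfs-client files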
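
The article does not say where the files come from. As an assumption, the sketch below fetches them from the kubernetes-sigs/nfs-subdir-external-provisioner repository, the current home of the nfs-client provisioner, whose `deploy` directory contains `rbac.yaml`, `deployment.yaml`, `class.yaml`, `test-claim.yaml` and `test-pod.yaml`. The helper name `fetch_nfs_client_manifests` and the target directory are made up for illustration, and the upstream manifests may differ from the copies shown in this article (image and provisioner name, for example).

```shell
# Hypothetical helper: shallow-clone the upstream repository and list its
# deploy/ directory. The repository URL is an assumption; the article does
# not name its download source.
fetch_nfs_client_manifests() {
  target_dir="${1:-nfs-client}"
  git clone --depth 1 \
    https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner.git \
    "$target_dir" || return 1
  ls "$target_dir/deploy"
}
```

A typical call would be `fetch_nfs_client_manifests nfs-client && cd nfs-client/deploy`, after which the file names used in the following steps resolve relative to the current directory.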

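step2: Set up permissions (RBAC)

    # Namespace of the current kubectl context; falls back to "default" when empty
    NS=$(kubectl config get-contexts | grep -e "^\*" | awk '{print $5}')
    NAMESPACE=${NS:-default}

    cat rbac.yaml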

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
      - apiGroups: [""]
        resources: ["services", "endpoints"]
        verbs: ["get", "create", "list", "watch", "update"]
      - apiGroups: ["extensions"]
        resources: ["podsecuritypolicies"]
        resourceNames: ["nfs-provisioner"]
        verbs: ["use"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

    # Point every "namespace:" field at the namespace the provisioner is deployed in
    sed -i'' "s/namespace:.*/namespace: $NAMESPACE/g" ./rbac.yaml ./deployment.yaml

    kubectl create -f rbac.yaml

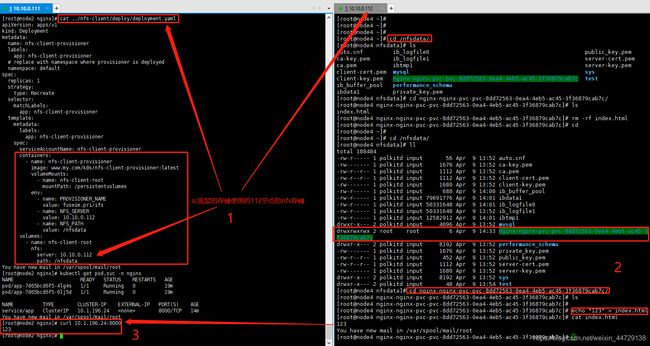

step3: Configure nfs-client

    cat deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: www.my.com/k8s/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME   # must match the provisioner field of the StorageClass
                  value: fuseim.pri/ifs
                - name: NFS_SERVER         # address of the NFS server
                  value: 10.10.0.112
                - name: NFS_PATH           # exported directory on the NFS server
                  value: /nfsdata
          volumes:
            - name: nfs-client-root
              nfs:
                server: 10.10.0.112
                path: /nfsdata

    kubectl create -f deployment.yaml

step4: Create the StorageClass

    cat class.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false" # When set to "false", your PVs will not be archived
                               # by the provisioner upon deletion of the PVC.

    kubectl create -f class.yaml
    kubectl get sc                            # list StorageClasses
    kubectl describe sc managed-nfs-storage   # show the details of this StorageClass
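
Since the StorageClass's provisioner string has to match the `PROVISIONER_NAME` environment variable of the Deployment, a quick comparison can catch a mismatch before any PVC is left pending. The helpers below are a sketch; their names are made up, and they assume the environment variable is set on the first container of the Deployment, as in the manifest shown in this article.

```shell
# Hypothetical helpers; the function names are made up for this sketch.

# Provisioner string declared by a StorageClass.
sc_provisioner() {
  kubectl get sc "$1" -o jsonpath='{.provisioner}'
}

# Value of the PROVISIONER_NAME env var in a Deployment's first container.
deployment_provisioner() {
  kubectl -n "${2:-default}" get deployment "$1" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}'
}

# Succeed only if both strings are non-empty and identical.
provisioner_matches() {
  sc_value=$(sc_provisioner "$1")
  deploy_value=$(deployment_provisioner "$2" "${3:-default}")
  [ -n "$sc_value" ] && [ "$sc_value" = "$deploy_value" ]
}
```

For the objects in this article the call would be `provisioner_matches managed-nfs-storage nfs-client-provisioner default`, which returns a non-zero status if the two strings differ.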

step5: Create the PVC

    cat test-claim.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
    spec:
      # replaces the deprecated volume.beta.kubernetes.io/storage-class annotation
      storageClassName: managed-nfs-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi

    cat test-pod.yaml

    kind: Pod
    apiVersion: v1
    metadata:
      name: test-pod
    spec:
      containers:
        - name: test-pod
          image: www.my.com/k8s/busybox:latest
          command:
            - "/bin/sh"
          args:
            - "-c"
            - "touch /mnt/SUCCESS && sleep 300"   # write a marker file to the volume
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
      restartPolicy: "Never"
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim

    kubectl create -f test-claim.yaml   # create the PVC
    kubectl create -f test-pod.yaml     # create the pod
    kubectl get pvc,pod                 # check the PVC and the pod

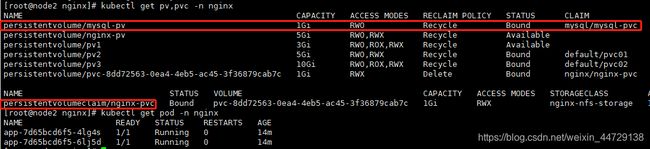
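
To confirm that provisioning worked, it helps to check that the claim is `Bound` and to find the PV created for it. The helpers below are a sketch with made-up function names; the claim name `test-claim` comes from the manifest above.

```shell
# Print the phase of a PVC (for example Pending or Bound).
pvc_phase() {
  kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}

# Print the name of the PV that was provisioned for a PVC.
pvc_volume() {
  kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.spec.volumeName}'
}
```

`pvc_phase test-claim` should report `Bound` once the provisioner has created the PV. Because the test pod runs `touch /mnt/SUCCESS`, a file named `SUCCESS` should then exist on the NFS server under `/nfsdata`, in the subdirectory the provisioner created for this claim (with nfs-client this is typically named after the namespace, the PVC and the PV).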

4. Persistent storage for a MySQL database (static PV)

step1: Create the mysql namespace, mysql-pv and mysql-pvc

    apiVersion: v1
    kind: Namespace          # create a namespace
    metadata:
      name: mysql            # the namespace is named mysql
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:                # do not set a namespace on a PV; PVs are cluster-scoped
      name: mysql-pv         # PV name
      labels:                # these labels are optional
        name: mysql-pv
        storetype: nfs
    spec:                    # the volume source is written the same way as under a Pod's volumes
      storageClassName: nfs
      accessModes:           # access modes
        - ReadWriteOnce
        # - ReadWriteMany
        # - ReadOnlyMany
      capacity:              # storage capacity
        storage: 1Gi
      persistentVolumeReclaimPolicy: Recycle   # reclaim policy
      nfs:
        path: /nfsdata
        server: 10.10.0.112
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-pvc        # PVC name
      namespace: mysql
      labels:                # these labels are optional
        name: mysql-pvc
        storetype: nfs
        capacity: 1Gi
    spec:
      storageClassName: nfs
      accessModes:           # must equal, or be a subset of, an existing PV's access modes; otherwise no PV matches
        - ReadWriteOnce
      resources:             # a PV must satisfy these requests to be matched
        requests:
          storage: 1Gi       # requested capacity

step2: Deploy MySQL

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: mysql
      name: mysql
      namespace: mysql
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          containers:
            - image: www.my.com/sys/mysql:5.7
              name: mysql
              env:
                - name: MYSQL_ROOT_PASSWORD
                  value: "123456"
              ports:
                - containerPort: 3306
                  name: mysql
              volumeMounts:
                - name: mysql-storage
                  mountPath: /var/lib/mysql
          volumes:
            - name: mysql-storage
              persistentVolumeClaim:
                claimName: mysql-pvc

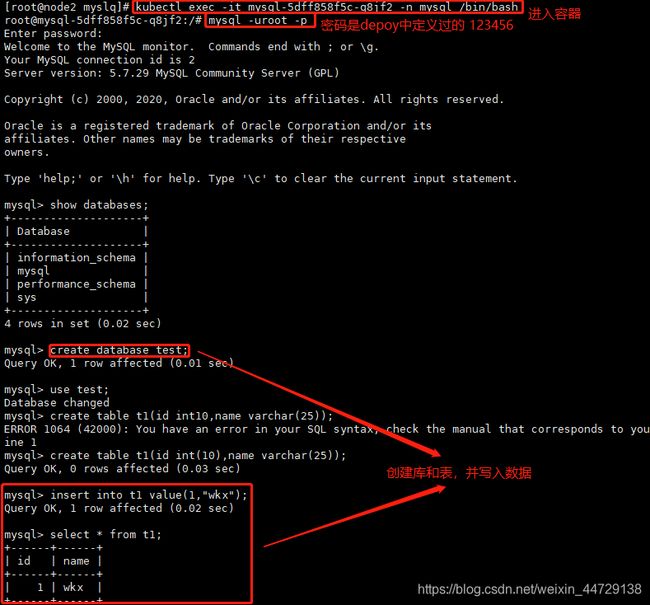

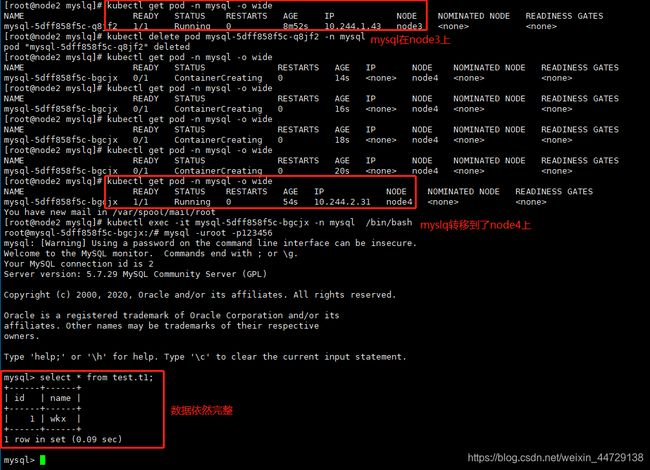

step3: Add data to MySQL

step4: Simulate a MySQL outage

Stopping the MySQL service is enough.

step5: Verify that the data is still consistent
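
The article gives no commands for steps 3 to 5. One way to walk through them is sketched below. The namespace, label and root password come from the manifests above; the function names and the database name `demo_db` are made up, and deleting the pod is used here as a stand-in for stopping the MySQL service, since the Deployment then starts a fresh pod on the same PVC.

```shell
# Hypothetical helpers for steps 3 to 5; demo_db is a made-up database name.

# Name of the first pod carrying the label app=mysql.
mysql_pod() {
  kubectl -n mysql get pod -l app=mysql -o jsonpath='{.items[0].metadata.name}'
}

# Run one SQL statement inside the MySQL pod.
mysql_exec() {
  kubectl -n mysql exec "$(mysql_pod)" -- mysql -uroot -p123456 -e "$1"
}

# step3: add data.
add_data() {
  mysql_exec "CREATE DATABASE IF NOT EXISTS demo_db;"
}

# step4: simulate an outage by deleting the pod; the Deployment recreates it.
simulate_outage() {
  kubectl -n mysql delete pod "$(mysql_pod)"
}

# step5: succeed only if the database created in step3 is still listed.
verify_data() {
  mysql_exec "SHOW DATABASES;" | grep -q demo_db
}
```

Run `add_data`, then `simulate_outage`, wait for the replacement pod (for example with `kubectl -n mysql rollout status deployment/mysql`), and finally `verify_data`. A zero exit status from the last call indicates that the data survived on the NFS-backed volume.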

5. Persistent storage for nginx (dynamic StorageClass)

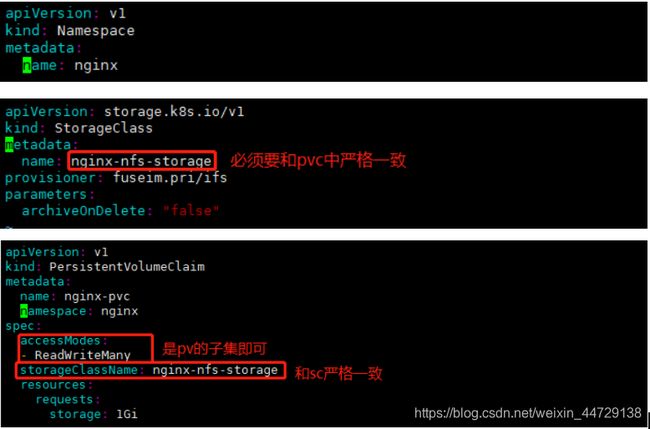

step1: Create the nginx namespace, nginx-sc and nginx-pvc

    apiVersion: v1
    kind: Namespace
    metadata:
      name: nginx
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nginx-nfs-storage
    provisioner: fuseim.pri/ifs
    parameters:
      archiveOnDelete: "false"
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
      namespace: nginx
    spec:
      accessModes:
        - ReadWriteMany
      storageClassName: nginx-nfs-storage
      resources:
        requests:
          storage: 1Gi

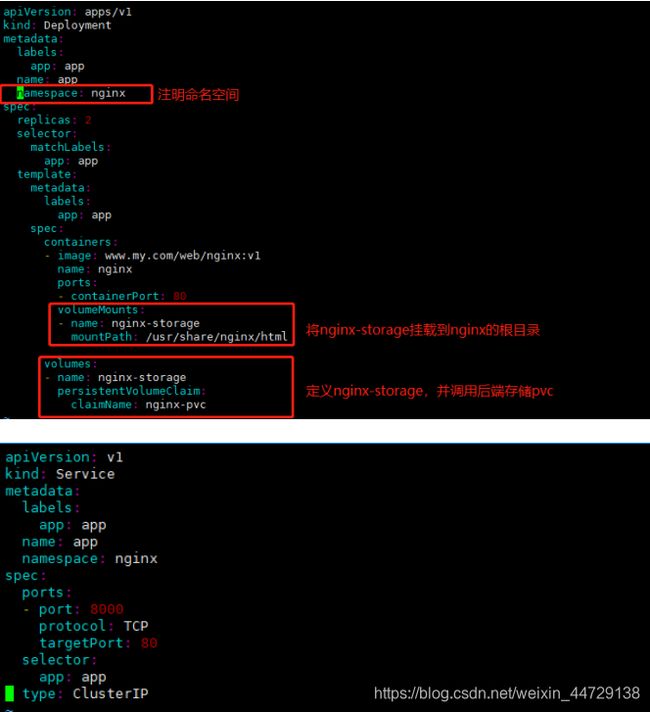

step2: Deploy nginx-deploy and nginx-svc

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: app
      name: app
      namespace: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: app
      template:
        metadata:
          labels:
            app: app
        spec:
          containers:
            - image: www.my.com/web/nginx:v1
              name: nginx
              ports:
                - containerPort: 80
              volumeMounts:
                - name: nginx-storage
                  mountPath: /usr/share/nginx/html
          volumes:
            - name: nginx-storage
              persistentVolumeClaim:
                claimName: nginx-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: app
      name: app
      namespace: nginx
    spec:
      ports:
        - port: 8000
          protocol: TCP
          targetPort: 80
      selector:
        app: app
      type: ClusterIP
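
A quick way to see that both replicas share the dynamically provisioned volume is to write a page through one pod and read it back through the Service. The helpers below are a sketch: the function names, the throwaway pod name `fetch-test` and the page content are made up, while the busybox image is the one used for the test pod in section 3.

```shell
# Hypothetical helpers; names and page content are made up for this sketch.

# Write an index page through one replica; it lands on the shared NFS volume.
write_index() {
  kubectl -n nginx exec deploy/app -- \
    sh -c 'printf "%s\n" "$1" > /usr/share/nginx/html/index.html' sh "$1"
}

# Fetch the page through the Service from a throwaway busybox pod.
fetch_index() {
  kubectl -n nginx run fetch-test --rm -i --restart=Never \
    --image=www.my.com/k8s/busybox:latest -- wget -qO- http://app:8000/
}
```

After `write_index "hello from nfs"`, repeated calls to `fetch_index` should return the same page whichever replica answers, because both pods mount `nginx-pvc`. A freshly provisioned volume is empty, so nginx will likely have nothing to serve until a page has been written.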