私有部署ELK,搭建自己的日志中心(三)-- Logstash的安装与使用

一、部署ELK

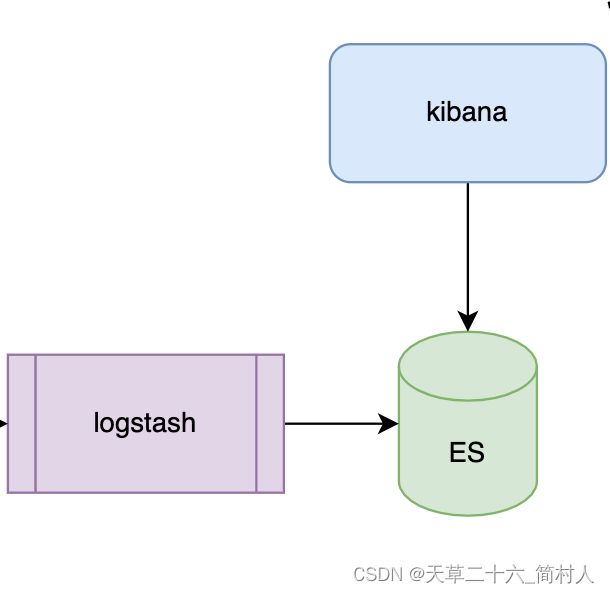

上文把采集端filebeat如何使用介绍完,现在随着数据的链路,继续~~

同样,使用docker-compose部署:

version: "3"

services:

elasticsearch:

container_name: elasticsearch

image: elastic/elasticsearch:7.9.3

restart: always

user: root

ports:

- 9200:9200

- 9300:9300

volumes:

- ./elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml

- ./elasticsearch/data:/usr/share/elasticsearch/data

- ./elasticsearch/logs:/usr/share/elasticsearch/logs

environment:

- "discovery.type=single-node"

- "TAKE_FILE_OWNERSHIP=true"

- "ES_JAVA_OPTS=-Xms1500m -Xmx1500m"

- "TZ=Asia/Shanghai"

kibana:

container_name: kibana

image: elastic/kibana:7.9.3

restart: always

ports:

- 5601:5601

volumes:

- ./kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml

environment:

- elasticsearch.hosts=elasticsearch:9200

- "TZ=Asia/Shanghai"

depends_on:

- elasticsearch

logstash:

image: elastic/logstash:7.9.3

restart: always

container_name: logstash

volumes:

- ./logstash/conf/logstash.conf:/usr/share/logstash/pipeline/logstash.conf

- ./logstash/template.json:/etc/logstash/template.json

ports:

- "5044:5044"

- "9600:9600"

environment:

- "LS_JAVA_OPTS=-Xms1024m -Xmx1024m"

- elasticsearch.hosts=elasticsearch:9200

- "TZ=Asia/Shanghai"

depends_on:

- elasticsearch

可以看到,logstash和kibana都依赖于ElasticSearch,填写es的地址使用容器名“elasticsearch:9200”,省去分配内网IP的过程。

es存储需要持久化,

volumes:

- ./elasticsearch/data:/usr/share/elasticsearch/data

三个组件的配置文件都开放,便于在宿主机上修改。

├── elasticsearch

│ ├── conf

│ │ └── elasticsearch.yml

│ ├── data

│ └── logs

│ ├── gc.log

│ ├── gc.log.00

│ ├── gc.log.01

│ ├── gc.log.02

│ ├── gc.log.03

│ ├── gc.log.04

│ ├── gc.log.05

│ └── gc.log.06

├── kibana

│ └── conf

│ └── kibana.yml

└── logstash

├── conf

│ └── logstash.conf

└── template.json

由于es和kibana在后文将另外讲述,所以本文只进一步介绍logstash的使用。

二、logstash的配置

1、template.json

定义索引的mapping信息:

{

"template": "jvm-*",

"settings": {

"number_of_shards": 1,

"number_of_replicas": 0

},

"mappings": {

"properties": {

"logclass": {

"type": "text"

},

"appname": {

"type": "keyword"

},

"traceid": {

"type": "keyword"

},

"spanid": {

"type": "keyword"

},

"export": {

"type": "boolean"

},

"logpid": {

"type": "keyword"

},

"logdate": {

"type": "date",

"format": "yyyy-MM-dd HH:mm:ss.SSS"

},

"loglevel": {

"type": "keyword"

},

"threadname": {

"type": "keyword"

},

"logmsg": {

"type": "text"

}

}

}

}

2、logstash.conf

input {

beats {

port => 5044

}

}

filter {

grok {

pattern_definitions => {

"QUALIFIED" => "[a-zA-Z0-9$_.]+"

}

match => {

"message" => "%{TIMESTAMP_ISO8601:logdate}%{SPACE}%{WORD:loglevel}%{SPACE}\[%{DATA:appname},%{DATA:traceid},%{DATA:spanid},%{DATA:export}\]%{SPACE}%{NUMBER:logpid} --- \[%{USERNAME:threadname}\] %{DATA:logclass} - %{GREEDYDATA:logmsg}"

}

}

}

output {

elasticsearch {

hosts =>["elasticsearch:9200"]

#索引的正则表达式,比如jvm-20231227

index => "jvm-%{+yyyy.MM.dd}"

template => "/etc/logstash/template.json"

template_name => "logstash"

}

}

三、注意事项

1、logstash.conf中的注释#开头,不能加空格

下面是错误的注释:

# 索引的正则表达式,比如jvm-20231227

正确的注释是:

#索引的正则表达式,比如jvm-20231227

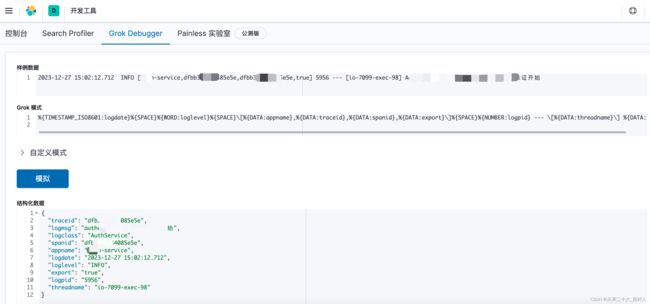

2、grok语法

已有在线的grok表达式,这里推荐一款kibana的开发工具:

具体的语法见其github官网:

https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/ecs-v1/grok-patterns