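Big Data Technology: Debezium

Debezium Overview

Debezium is an open-source distributed platform for change data capture (CDC). It captures every insert, update, and delete made to a database so that applications can respond to them. Debezium is built on top of Kafka, so ZooKeeper, Kafka, and Kafka Connect must be installed before Debezium.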

Kafka Connect

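Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. Its connectors make it easy to move large volumes of data into or out of Kafka.

Kafka Connect currently supports two modes: standalone and distributed.

Standalone mode is mainly used for getting started and testing, so this guide sets up distributed mode.

Official documentation: https://kafka.apache.org/documentation.html#connect

Distributed mode balances work automatically, lets you scale up or down dynamically, and provides fault tolerance for active tasks as well as for configuration and offset commit data. It is very similar to standalone mode; the main differences are the class that is started and the configuration parameters, which determine how Kafka Connect stores offsets and how it assigns work. In distributed mode, Kafka Connect stores offsets, configurations, and task status in Kafka topics. Creating these topics manually with an explicit partition count is recommended, although they can also be created automatically from the settings in the configuration file.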
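The manual topic creation recommended above could be sketched as follows. This small Python script only builds the kafka-topics.sh commands; it does not run them. The topic names are the ones this guide sets in connect-distributed.properties. The partition counts are assumptions: they mirror the values Kafka Connect uses when it creates these topics itself, except that the config topic must always have a single partition. All three topics are created as compacted topics.

```python
# Sketch: build kafka-topics.sh commands for the three Connect internal topics.
# BOOTSTRAP and the partition counts for offsets/status are assumptions.
BOOTSTRAP = "hadoop101:9092"
REPLICATION_FACTOR = 2

INTERNAL_TOPICS = {
    "connect-mysql-configs": 1,   # config topic: exactly one partition
    "connect-mysql-offsets": 25,  # assumed partition count
    "connect-mysql-status": 5,    # assumed partition count
}


def create_topic_command(topic, partitions):
    """Return the shell command that creates one compacted topic."""
    return (
        "bin/kafka-topics.sh --create"
        f" --bootstrap-server {BOOTSTRAP}"
        f" --topic {topic}"
        f" --partitions {partitions}"
        f" --replication-factor {REPLICATION_FACTOR}"
        " --config cleanup.policy=compact"
    )


commands = [create_topic_command(t, p) for t, p in INTERNAL_TOPICS.items()]
for command in commands:
    print(command)
```

The printed commands are meant to be run from the Kafka installation directory before the Connect workers are started.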

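Configuration parameters:

- group.id: defaults to connect-cluster. A unique name for the cluster, used to form the Connect cluster group; it must not be reused by another group.

- config.storage.topic: the topic that stores connector and task configurations.

- offset.storage.topic: the topic that stores offsets.

- status.storage.topic: the topic that stores status.

Configuration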

[root@hadoop101 ~]# cd /opt/module/kafka_2.11-2.4.0/config/

[root@hadoop101 config]# vim connect-distributed.properties

bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092

group.id=connect-mysql

key.converter=org.apache.kafka.connect.json.JsonConverter

value.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=true

value.converter.schemas.enable=true

offset.storage.topic=connect-mysql-offsets

offset.storage.replication.factor=2

config.storage.topic=connect-mysql-configs

config.storage.replication.factor=2

status.storage.topic=connect-mysql-status

status.storage.replication.factor=2

offset.flush.interval.ms=10000
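Before distributing the file, it can help to check that the settings this guide relies on are all present. The following is a minimal sketch, not part of Kafka: the function names and the REQUIRED_KEYS list are hypothetical, and the parser handles only simple key=value lines and # comments, not the full Java properties syntax.

```python
# Keys this guide sets for distributed mode (an assumed checklist).
REQUIRED_KEYS = (
    "bootstrap.servers",
    "group.id",
    "config.storage.topic",
    "offset.storage.topic",
    "status.storage.topic",
)


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_keys(props):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not props.get(key)]


sample = """
bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092
group.id=connect-mysql
offset.storage.topic=connect-mysql-offsets
config.storage.topic=connect-mysql-configs
status.storage.topic=connect-mysql-status
"""
props = parse_properties(sample)
print(missing_keys(props))
```

To check the real file, read connect-distributed.properties from disk and pass its contents to parse_properties instead of the sample string.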

Installing Debezium Support for MySQL

(1) Create the Kafka plugins directory

[root@hadoop101 module]# mkdir -p /usr/local/share/kafka/plugins/

(2) Upload debezium-connector-mysql-1.2.0.Final-plugin.tar.gz and extract it

[root@hadoop101 software]# tar -zxvf debezium-connector-mysql-1.2.0.Final-plugin.tar.gz -C /usr/local/share/kafka/plugins/

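(3) Edit the MySQL configuration. If MySQL was installed from RPM packages, there is no my.cnf file; in that case edit my-default.cnf and then copy it to /etc/. If /etc/my.cnf already exists, edit it directly. For installing MySQL, see the earlier document.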

[root@hadoop101 software]# vim /usr/share/mysql/my-default.cnf

[mysqld]

server_id=1

log-bin=mysql-bin

binlog-format=ROW

(4) Copy my-default.cnf to /etc/ as my.cnf

[root@hadoop101 software]# cp /usr/share/mysql/my-default.cnf /etc/my.cnf

(5) Set the MySQL binlog parameters and create the binlog user

[root@hadoop101 software]# mysql -uroot -p123456

mysql> set global binlog_format=ROW;

mysql> set global binlog_row_image=FULL;

mysql> CREATE USER 'testbinlog'@'%' IDENTIFIED BY '123456';

mysql> GRANT ALL PRIVILEGES ON *.* to "testbinlog"@"%" IDENTIFIED BY "123456";

mysql> use mysql;

mysql> delete from user where host='localhost';

mysql> FLUSH PRIVILEGES;

(6) Restart MySQL

[root@hadoop101 software]# service mysql restart

(7) Edit connect-distributed.properties in the Kafka config directory again and add the plugin path

[root@hadoop101 ~]# vim /opt/module/kafka_2.11-2.4.0/config/connect-distributed.properties

plugin.path=/usr/local/share/kafka/plugins/

(8) Distribute the plugins directory and connect-distributed.properties to the other nodes

[root@hadoop101 share]# scp -r /usr/local/share/kafka/ hadoop102:/usr/local/share/

[root@hadoop101 share]# scp -r /usr/local/share/kafka/ hadoop103:/usr/local/share/

[root@hadoop101 config]# scp connect-distributed.properties hadoop102:/opt/module/kafka_2.11-2.4.0/config/

[root@hadoop101 config]# scp connect-distributed.properties hadoop103:/opt/module/kafka_2.11-2.4.0/config/

(9) Start ZooKeeper and Kafka

(10) Start Kafka Connect on every node

[root@hadoop101 kafka_2.11-2.4.0]# bin/connect-distributed.sh -daemon config/connect-distributed.properties

[root@hadoop102 kafka_2.11-2.4.0]# bin/connect-distributed.sh -daemon config/connect-distributed.properties

[root@hadoop103 kafka_2.11-2.4.0]# bin/connect-distributed.sh -daemon config/connect-distributed.properties

(12) Start Debezium by sending an HTTP request that registers the connector

[root@hadoop101 ~]# curl -H "Content-Type: application/json" -X POST -d '{

"name" : "inventory-connector",

"config" : {

"connector.class" : "io.debezium.connector.mysql.MySqlConnector",

"database.hostname" : "hadoop101",

"database.port" : "3306",

"database.user" : "testbinlog",

"database.password" : "123456",

"database.server.id" : "184054",

"database.server.name" : "mysql",

"database.whitelist" : "test",

"database.history.kafka.bootstrap.servers":"hadoop101:9092,hadoop102:9092,hadoop103:9092",

"database.history.kafka.topic":"binlogtest",

"include.schema.changes":"true"

}

}' http://hadoop101:8083/connectors

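database.server.name: the logical name of the database server. It is used as the prefix of the topic names, as in mysql.test.wordcount.

database.whitelist: a whitelist of the databases to monitor, given as regular expressions. A blacklist is also available, but the two are mutually exclusive: if the whitelist is set, the blacklist cannot be used, and vice versa.

For the full list of configuration parameters, see the official documentation: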

https://debezium.io/documentation/reference/1.2/connectors/mysql.html
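The registration request, together with the whitelist and blacklist rule described above, could be sketched in Python as follows. This is an illustrative equivalent of the curl call, not a Debezium API: build_connector_request is a hypothetical helper, and the up-front check on database.whitelist and database.blacklist is an assumption about how one might catch the conflict before the request is sent.

```python
import json
import urllib.request

# Kafka Connect REST endpoint used in this guide.
CONNECT_URL = "http://hadoop101:8083/connectors"


def build_connector_request(name, config):
    """Build (but do not send) the POST request that registers a connector."""
    if "database.whitelist" in config and "database.blacklist" in config:
        raise ValueError(
            "database.whitelist and database.blacklist are mutually exclusive"
        )
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        CONNECT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


config = {
    "connector.class": "io.debezium.connector.mysql.MySqlConnector",
    "database.hostname": "hadoop101",
    "database.port": "3306",
    "database.user": "testbinlog",
    "database.password": "123456",
    "database.server.id": "184054",
    "database.server.name": "mysql",
    "database.whitelist": "test",
    "database.history.kafka.bootstrap.servers": "hadoop101:9092,hadoop102:9092,hadoop103:9092",
    "database.history.kafka.topic": "binlogtest",
}
request = build_connector_request("inventory-connector", config)
print(request.get_method(), request.full_url)

# To actually register the connector, send the request:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Building the request separately from sending it keeps the validation step usable without a running Connect cluster.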

(13) Once the request succeeds, the connector status can be viewed through an HTTP request

Make sure every state is RUNNING.

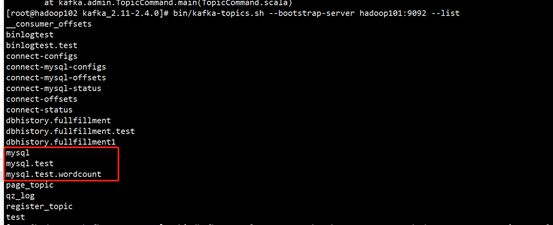
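The status check can also be scripted. The sketch below assumes the response shape of the Kafka Connect REST endpoint GET /connectors/{name}/status, which reports one state for the connector and one per task. The helper names and the sample worker_id values are illustrative, and fetch_status is defined but not called here because it needs a running cluster.

```python
import json
import urllib.request


def all_running(status):
    """Return True when the connector and every task report RUNNING."""
    states = [status["connector"]["state"]]
    states += [task["state"] for task in status.get("tasks", [])]
    return all(state == "RUNNING" for state in states)


def fetch_status(base_url, name):
    """Query the Connect REST API for a connector's status."""
    url = f"{base_url}/connectors/{name}/status"
    with urllib.request.urlopen(url) as response:
        return json.load(response)


# Illustrative response; the worker_id values are made up for the example.
sample_status = {
    "name": "inventory-connector",
    "connector": {"state": "RUNNING", "worker_id": "hadoop101:8083"},
    "tasks": [{"id": 0, "state": "RUNNING", "worker_id": "hadoop101:8083"}],
}
print(all_running(sample_status))
```

Against a live cluster, the call would be all_running(fetch_status("http://hadoop101:8083", "inventory-connector")).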

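(14) Once the connector is running, list the topics with the Kafka command-line tools; the topics for the monitored tables now appear

(16) View the messages in the corresponding topic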

[root@hadoop102 kafka_2.11-2.4.0]# bin/kafka-console-consumer.sh --bootstrap-server hadoop101:9092 --topic mysql.test.wordcount --from-beginning

{

"schema": {

"type": "struct",

"fields": [{

"type": "struct",

"fields": [{

"type": "int32",

"optional": false,

"field": "id"

}, {

"type": "string",

"optional": true,

"field": "appname"

}, {

"type": "int32",

"optional": true,

"field": "value"

}],

"optional": true,

"name": "mysql.test.wordcount.Value",

"field": "before"

}, {

"type": "struct",

"fields": [{

"type": "int32",

"optional": false,

"field": "id"

}, {

"type": "string",

"optional": true,

"field": "appname"

}, {

"type": "int32",

"optional": true,

"field": "value"

}],

"optional": true,

"name": "mysql.test.wordcount.Value",

"field": "after"

}, {

"type": "struct",

"fields": [{

"type": "string",

"optional": false,

"field": "version"

}, {

"type": "string",

"optional": false,

"field": "connector"

}, {

"type": "string",

"optional": false,

"field": "name"

}, {

"type": "int64",

"optional": false,

"field": "ts_ms"

}, {

"type": "string",

"optional": true,

"name": "io.debezium.data.Enum",

"version": 1,

"parameters": {

"allowed": "true,last,false"

},

"default": "false",

"field": "snapshot"

}, {

"type": "string",

"optional": false,

"field": "db"

}, {

"type": "string",

"optional": true,

"field": "table"

}, {

"type": "int64",

"optional": false,

"field": "server_id"

}, {

"type": "string",

"optional": true,

"field": "gtid"

}, {

"type": "string",

"optional": false,

"field": "file"

}, {

"type": "int64",

"optional": false,

"field": "pos"

}, {

"type": "int32",

"optional": false,

"field": "row"

}, {

"type": "int64",

"optional": true,

"field": "thread"

}, {

"type": "string",

"optional": true,

"field": "query"

}],

"optional": false,

"name": "io.debezium.connector.mysql.Source",

"field": "source"

}, {

"type": "string",

"optional": false,

"field": "op"

}, {

"type": "int64",

"optional": true,

"field": "ts_ms"

}, {

"type": "struct",

"fields": [{

"type": "string",

"optional": false,

"field": "id"

}, {

"type": "int64",

"optional": false,

"field": "total_order"

}, {

"type": "int64",

"optional": false,

"field": "data_collection_order"

}],

"optional": true,

"field": "transaction"

}],

"optional": false,

"name": "mysql.test.wordcount.Envelope"

},

"payload": {

"before": {

"id": 3,

"appname": "aa",

"value": 4

},

"after": {

"id": 3,

"appname": "aa",

"value": 6

},

"source": {

"version": "1.2.0.Final",

"connector": "mysql",

"name": "mysql",

"ts_ms": 1597740355000,

"snapshot": "false",

"db": "test",

"table": "wordcount",

"server_id": 1,

"gtid": null,

"file": "mysql-bin.000002",

"pos": 861,

"row": 0,

"thread": 8,

"query": null

},

"op": "u",

"ts_ms": 1597740355324,

"transaction": null

}

}

Each message contains not only the table's schema but also the row data before and after the change.

(17) To delete the connector, send a DELETE request
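A consumer usually needs only the payload part of such a message. The following minimal sketch shows one way to pull out the operation and the changed columns. The function name and the shape of the summary are illustrative choices, and the sample message is a trimmed copy of the payload shown above. The op codes are c for create, u for update, d for delete, and r for a snapshot read.

```python
import json

OPERATIONS = {"c": "create", "u": "update", "d": "delete", "r": "read (snapshot)"}


def summarize_change(message):
    """Extract table, operation and changed columns from one change event."""
    payload = json.loads(message)["payload"]
    before = payload.get("before") or {}  # null for inserts
    after = payload.get("after") or {}    # null for deletes
    changed = {
        column: (before.get(column), after.get(column))
        for column in sorted(set(before) | set(after))
        if before.get(column) != after.get(column)
    }
    source = payload["source"]
    return {
        "table": f'{source["db"]}.{source["table"]}',
        "operation": OPERATIONS.get(payload["op"], payload["op"]),
        "changed": changed,
    }


# Trimmed copy of the update event shown above.
sample = json.dumps({
    "payload": {
        "before": {"id": 3, "appname": "aa", "value": 4},
        "after": {"id": 3, "appname": "aa", "value": 6},
        "source": {"db": "test", "table": "wordcount"},
        "op": "u",
        "ts_ms": 1597740355324,
    }
})
print(summarize_change(sample))
```

The sketch does not handle tombstone messages, whose value is null, so a real consumer would need to skip those before parsing.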

curl -v -X DELETE http://hadoop101:8083/connectors/inventory-connector