E-commerce Data Warehouse Visualization 1: Data Import

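1. Data sources and data file download

1. Business data

Business data is produced by transactional processing, so it is usually stored in a relational database such as MySQL or Oracle.

Business data sources:

Basic user information, product category information, product information, shop information, order data, order payment information, promotion information, logistics information, and so on.

2. Event-tracking data

Unlike business data, event-tracking logs exist to serve data analysis and data mining. They are usually written to log files and then collected into distributed storage such as HDFS or HBase.

User behavior logs:

User browsing, user reviews, user follows, user searches, user complaints, user inquiries.

3. External data

Most companies today acquire customers through online advertising and cooperate with third-party companies to obtain additional data for profiling users and user groups in depth. Crawling publicly available data is another common approach in analytics and operations.

External data sources:

Ad placement data, crawler data, third-party business API data, WeChat mini programs.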

Data files: https://pan.baidu.com/s/1fZ1u7h86asr03Dw8NgiZKA?pwd=k9se

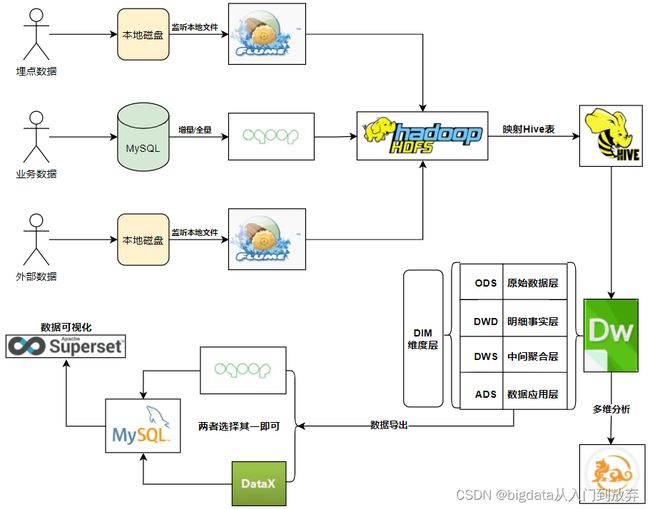

2. Data warehouse architecture diagram

Component versions and notes:

jdk: 1.8

hadoop: 2.7.3 (pseudo-distributed setup)

hive: 3.x

sqoop: 1.4.7

superset:

azkaban

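3. Data import

3.1 Importing business data

1. Data source: the nshop.sql file in the data files provided above. Create a database named nshop in Navicat, then drag the SQL file in and execute it.

2. Migrating the data to Hive: the import is done with Sqoop and scheduled through Azkaban.

Create an import script named sqoopJob.sh (remember to change the host name and the MySQL connection settings to match your environment):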

#!/bin/bash

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--query "select customer_id,customer_login,customer_nickname,customer_name,customer_pass,customer_mobile,customer_idcard,customer_gender,customer_birthday,customer_age,customer_age_range,customer_email,customer_natives,customer_ctime,customer_utime,customer_device_num from customer where \$CONDITIONS" \

--username root \

--password 123456 \

--target-dir /data/nshop/ods/ods_02_customer \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_customer \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--query "select customer_id,attention_id,attention_type,attention_status,attention_ctime from customer_attention where \$CONDITIONS" \

--username root \

--password 123456 \

--target-dir /data/nshop/ods/ods_02_customer_attention \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_customer_attention \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--query "select consignee_id,customer_id,consignee_name,consignee_mobile,consignee_zipcode,consignee_addr,consignee_tag,ctime from customer_consignee where \$CONDITIONS" \

--username root \

--password 123456 \

--target-dir /data/nshop/ods/ods_02_customer_consignee \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_customer_consignee \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select category_code,category_name,category_parent_id,category_status,category_utime from category where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_category \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_category \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select supplier_code,supplier_name,supplier_type,supplier_status,supplier_utime from supplier where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_supplier \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_supplier \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select order_id,customer_id,order_status,customer_ip,customer_longitude,customer_latitude,customer_areacode,consignee_name,consignee_mobile,consignee_zipcode,pay_type,pay_code,pay_nettype,district_money,shipping_money,payment_money,order_ctime,shipping_time,receive_time from orders where \$CONDITIONS" \

--target-dir /data/nshop/ods/ods_02_orders \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_orders \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select order_detail_id,order_id,product_id,product_name,product_remark,product_cnt,product_price,weighing_cost,district_money,is_activity,order_detail_ctime from order_detail where \$CONDITIONS" \

--target-dir /data/nshop/ods/ods_02_order_detail \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_order_detail \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select page_code,page_remark,page_type,page_target,page_ctime from page_dim where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_page \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_page \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select region_code,region_code_desc,region_city,region_city_desc,region_province,region_province_desc from area_dim where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_area \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_area \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select date_day,date_day_desc,date_day_month,date_day_year,date_day_en,date_week,date_week_desc,date_month,date_month_en,date_month_desc,date_quarter,date_quarter_en,date_quarter_desc,date_year from date_dim where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_date \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_date \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select pay_id,order_id,customer_id,pay_status,pay_type,pay_code,pay_nettype,pay_amount,pay_ctime from orders_pay_records where \$CONDITIONS" \

--target-dir /data/nshop/ods/ods_02_orders_pay_records \

--hive-import \

--hive-database ods_nshop \

--hive-table ods_02_orders_pay_records \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select product_code,product_name,product_remark,category_code,supplier_code,product_price,product_weighing_cost,product_publish_status,product_audit_status,product_bar_code,product_weight,product_length,product_height,product_width,product_colors,product_date,product_shelf_life,product_ctime,product_utime from product where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_product \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_product \

--delete-target-dir \

-m 1

sqoop import \

--connect jdbc:mysql://caiji:3306/nshop \

--username root \

--password 123456 \

--query "select dim_type,dim_code,dim_remark,dim_ext1,dim_ext2,dim_ext3,dim_ext4,ct from comm_dim where \$CONDITIONS" \

--target-dir /data/nshop/ods/dim_pub_common \

--hive-import \

--hive-database dim_nshop \

--hive-table dim_pub_common \

--delete-target-dir \

-m 1

Create the Azkaban flow file sqoop.flow (the script must sit in the location the command refers to):

nodes:

  - name: ods

    type: command

    config:

      command: sh sqoopJob.sh

Create the Azkaban project file sqoop.project:

azkaban-flow-version: 2.0
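The imports in sqoopJob.sh differ only in the query, the staging directory, and the Hive database and table. As a minimal sketch (not the script used in this post), the repeated flags could be generated from a small table list. The helper name `build_sqoop_import`, the constants, and the two-entry `TABLES` list are assumptions for illustration; the flags themselves are the ones used in the script above. Nothing is executed here, the commands are only printed.

```python
import shlex

# Assumed connection settings, copied from the script above; adjust to your host.
JDBC_URL = "jdbc:mysql://caiji:3306/nshop"
USERNAME = "root"
PASSWORD = "123456"


def build_sqoop_import(query, hive_db, hive_table):
    """Return the argv list for one `sqoop import` with the flags used in sqoopJob.sh."""
    return [
        "sqoop", "import",
        "--connect", JDBC_URL,
        "--username", USERNAME,
        "--password", PASSWORD,
        # Sqoop requires the literal token $CONDITIONS in a free-form query.
        "--query", f"{query} where $CONDITIONS",
        "--target-dir", f"/data/nshop/ods/{hive_table}",
        "--hive-import",
        "--hive-database", hive_db,
        "--hive-table", hive_table,
        "--delete-target-dir",
        "-m", "1",
    ]


# Hypothetical subset of the imports above: (query, Hive database, Hive table).
TABLES = [
    ("select customer_id,attention_id,attention_type,attention_status,attention_ctime "
     "from customer_attention", "ods_nshop", "ods_02_customer_attention"),
    ("select supplier_code,supplier_name,supplier_type,supplier_status,supplier_utime "
     "from supplier", "dim_nshop", "dim_pub_supplier"),
]

commands = [build_sqoop_import(q, db, t) for q, db, t in TABLES]

for argv in commands:
    # shlex.join quotes every argument, so $CONDITIONS is not expanded by the shell.
    print(shlex.join(argv))
```

To actually run an import from Python, each argument list could be passed to `subprocess.run(argv, check=True)`; keeping the commands as lists avoids shell quoting problems with the query string.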

3.2 Importing event-tracking log data into Hive

The event-tracking log data is in JSON format, for example:

{"action":"05","event_type":"01","customer_id":"20101000324999676","device_num":"586344","device_type":"9","os":"2","os_version":"2.2","manufacturer":"05","carrier":"2","network_type":"2","area_code":"41092","longitude":"116.35636","latitude":"40.06919","extinfo":"{\"target_type\":\"4\",\"target_keys\":\"20402\",\"target_order\":\"31\",\"target_ids\":\"[\\\"4320402595801\\\",\\\"4320402133801\\\",\\\"4320402919201\\\",\\\"4320402238501\\\"]\"}","ct":1567896035000}
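The sample record nests JSON inside JSON: `extinfo` is a JSON document stored as a string, and its `target_ids` field is again a JSON-encoded string. The sketch below decodes the sample record layer by layer. The variable names are illustrative, and treating `ct` as epoch milliseconds is an assumption based on its magnitude.

```python
import json
from datetime import datetime, timezone

# The sample record from above, as raw strings so the backslashes reach json.loads unchanged.
line = (
    r'{"action":"05","event_type":"01","customer_id":"20101000324999676",'
    r'"device_num":"586344","device_type":"9","os":"2","os_version":"2.2",'
    r'"manufacturer":"05","carrier":"2","network_type":"2","area_code":"41092",'
    r'"longitude":"116.35636","latitude":"40.06919",'
    r'"extinfo":"{\"target_type\":\"4\",\"target_keys\":\"20402\",\"target_order\":\"31\",'
    r'\"target_ids\":\"[\\\"4320402595801\\\",\\\"4320402133801\\\",'
    r'\\\"4320402919201\\\",\\\"4320402238501\\\"]\"}",'
    r'"ct":1567896035000}'
)

record = json.loads(line)                    # outer record
ext = json.loads(record["extinfo"])          # second layer: extinfo is a JSON string
target_ids = json.loads(ext["target_ids"])   # third layer: target_ids is a JSON string too

# Assumption: ct is epoch milliseconds.
created = datetime.fromtimestamp(record["ct"] / 1000, tz=timezone.utc)

print(record["action"], record["event_type"], record["customer_id"])
print(ext["target_type"], ext["target_keys"], target_ids)
print(created.isoformat())
# This sample has no "duration" key, so .get returns None.
print(record.get("duration"))
```

Because `extinfo` arrives as a plain string, the Hive table that follows stores it in a `string` column; the nested fields have to be extracted in a later step.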

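This data is in the log file 000000_0 in the data files. To import it, we create a matching table in Hive and load the file into it.

1. Create a directory to hold the data (mkdir datas) and copy the file into it.

2. Create the Hive table: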

create external table if not exists ods_nshop.ods_nshop_01_useractlog(

action string comment 'action type: install|launch|interactive|page_enter_h5 (page exposure)|page_enter_native (page entry)|exit',

event_type string comment 'event type: click|view|slide|input',

customer_id string comment 'user id',

device_num string comment 'device number',

device_type string comment 'device type',

os string comment 'mobile OS',

os_version string comment 'mobile OS version',

manufacturer string comment 'phone manufacturer',

carrier string comment 'telecom carrier',

network_type string comment 'network type',

area_code string comment 'area code',

longitude string comment 'longitude',

latitude string comment 'latitude',

extinfo string comment 'extended information (JSON format)',

duration string comment 'dwell time',

ct bigint comment 'creation time'

) partitioned by (bdp_day string)

ROW FORMAT SERDE 'org.apache.hive.hcatalog.data.JsonSerDe'

STORED AS TEXTFILE

location '/data/nshop/ods/user_action_log/';

3. Load the data

load data local inpath '/home/datas/000000_0' into table ods_nshop.ods_nshop_01_useractlog partition(bdp_day="20231227");

4. Check the loaded data in Hive

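3.3 Importing external data

This data is pulled from external interfaces every day, so new files arrive daily. They can be placed in a folder, and Flume can collect the files from that folder.

Flume components used: TAILDIR source + memory channel + HDFS sink

3.3.1 Write the Flume configuration file nshop_csv2hdfs.conf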

# Name the components

a1.sources = r1

a1.channels = c1

a1.sinks = s1

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.s1.channel = c1

# Source type and properties

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = g1

a1.sources.r1.filegroups.g1 = /home/datas/.*\.csv

# Where the read-position metadata is saved

a1.sources.r1.positionFile = /opt/flume/flume-log/taildir_position.json

# Channel type and properties

a1.channels.c1.type = memory

# Maximum number of events held in the channel

a1.channels.c1.capacity = 1000

# Maximum number of events per transaction

a1.channels.c1.transactionCapacity = 100

# Sink type and properties

a1.sinks.s1.type = hdfs

a1.sinks.s1.hdfs.path = /data/nshop/ods/release/%Y%m%d

a1.sinks.s1.hdfs.fileSuffix = .log

# Roll settings: setting any of the three parameters below to 0 disables that trigger

a1.sinks.s1.hdfs.rollInterval = 10

a1.sinks.s1.hdfs.rollSize = 0

a1.sinks.s1.hdfs.rollCount = 0

# Output file format; use DataStream when storing plain text files

a1.sinks.s1.hdfs.fileType = DataStream

a1.sinks.s1.hdfs.writeFormat = Text

# Use the local timestamp: the path above contains time escape sequences, so each event needs a timestamp

a1.sinks.s1.hdfs.useLocalTimeStamp = true
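The filegroup value is a regular expression, not a shell glob, so an unescaped `.` matches any character. The sketch below compares `.*.csv` with `.*\.csv` using Python's `re` module, which behaves the same as Java regular expressions for these simple constructs. The file names are hypothetical, and the sketch assumes the pattern has to match the whole file name.

```python
import re

# Hypothetical file names that might appear in /home/datas.
names = ["release_20231009.csv", "release_csv", "release.csv.tmp"]

unescaped = re.compile(r".*.csv")    # "." before "csv" matches any character
escaped = re.compile(r".*\.csv")     # "\." matches only a literal dot


def matching(pattern, candidates):
    """Return the candidates that the pattern matches in full."""
    return [n for n in candidates if pattern.fullmatch(n)]


unescaped_hits = matching(unescaped, names)
escaped_hits = matching(escaped, names)

print("unescaped:", unescaped_hits)
print("escaped:  ", escaped_hits)
```

With the unescaped form, a file such as `release_csv` is also picked up, which is rarely intended when only CSV files should be collected.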

3.3.2 Start Flume to begin collecting

flume-ng agent -n a1 -c /opt/flume/conf -f /opt/flume/conf/flumeconf/nshop_csv2hdfs.conf -Dflume.root.logger=INFO,console
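The agent name passed with `-n` (here `a1`) has to match the prefix used in the configuration file, and every source and sink has to be bound to a declared channel. As a rough sketch, the wiring can be sanity-checked before starting the agent. The functions `parse_properties` and `check_wiring` are hypothetical helpers, not part of Flume, and `CONF` contains only the naming and binding lines of nshop_csv2hdfs.conf.

```python
# Naming and binding lines copied from nshop_csv2hdfs.conf.
CONF = """
a1.sources = r1
a1.channels = c1
a1.sinks = s1
a1.sources.r1.channels = c1
a1.sinks.s1.channel = c1
"""


def parse_properties(text):
    """Parse 'key = value' lines, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_wiring(props, agent="a1"):
    """Return a list of problems; an empty list means the bindings are consistent."""
    channels = set(props.get(f"{agent}.channels", "").split())
    problems = []
    for src in props.get(f"{agent}.sources", "").split():
        bound = set(props.get(f"{agent}.sources.{src}.channels", "").split())
        if not bound or not bound <= channels:
            problems.append(f"source {src} is bound to {sorted(bound)}")
    for sink in props.get(f"{agent}.sinks", "").split():
        ch = props.get(f"{agent}.sinks.{sink}.channel", "")
        if ch not in channels:
            problems.append(f"sink {sink} is bound to {ch!r}")
    return problems


props = parse_properties(CONF)
problems = check_wiring(props)
print(problems or "wiring looks consistent")
```

Note that a source uses the plural key `channels` while a sink uses the singular `channel`; mixing the two up is a common reason for an agent that starts but moves no data.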

3.3.3 Create a Hive table over the external data

create external table if not exists ods_nshop.ods_nshop_01_releasedatas(

device_num string comment 'device number',

device_type string comment 'device type',

os string comment 'mobile OS',

os_version string comment 'mobile OS version',

manufacturer string comment 'phone manufacturer',

area_code string comment 'area code',

release_sid string comment 'ad placement request id',

release_session string comment 'ad placement session id',

release_sources string comment 'ad placement channel',

release_params string comment 'ad placement request parameters',

ct string comment 'creation time'

) partitioned by (bdp_day string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

stored as textfile

location '/data/nshop/ods/release/';

3.3.4 Map the data to its partition

load data inpath '/data/nshop/ods/release/20231009/*' into table ods_nshop.ods_nshop_01_releasedatas partition(bdp_day='20231009');
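Since Flume writes one directory per day, this statement has to be repeated for every date. A minimal sketch of generating it: the directory comes from the same `%Y%m%d` pattern as `hdfs.path` in the Flume configuration, and the partition value uses the same date string. The function name `load_statement` and the three-day range are assumptions for illustration; the statements are only printed, not executed.

```python
from datetime import date, timedelta

# hdfs.path from the Flume configuration; %Y%m%d resolves to the day's directory.
HDFS_PATH_PATTERN = "/data/nshop/ods/release/%Y%m%d"
TABLE = "ods_nshop.ods_nshop_01_releasedatas"


def load_statement(day):
    """Build the LOAD DATA statement that maps one day's directory to its partition."""
    src_dir = day.strftime(HDFS_PATH_PATTERN)
    bdp_day = day.strftime("%Y%m%d")
    return (f"load data inpath '{src_dir}/*' "
            f"into table {TABLE} partition(bdp_day='{bdp_day}');")


start = date(2023, 10, 9)
statements = [load_statement(start + timedelta(days=i)) for i in range(3)]

for s in statements:
    print(s)
```

The printed statements could be written to a file and run with `hive -f`, or the same logic could be scheduled daily as another Azkaban node.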