Cloud Native Basics - k8s Service

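Kubernetes Pods are mortal: they are created and they die, and once dead they are never resurrected. ReplicationControllers create and destroy Pods dynamically (for example when scaling up or down, or during a rolling update). Each Pod gets its own IP address, but these IPs change over time and cannot be relied on. This raises a problem: if some Pods (call them the backend) provide functionality to other Pods (call them the frontend), how can the frontend keep track of the backend inside a Kubernetes cluster?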

The answer: Services.

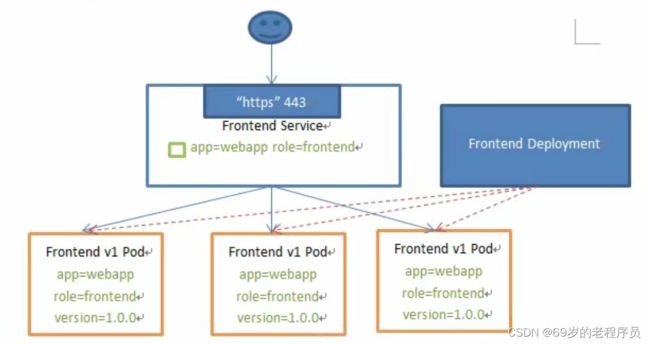

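As the figure shows, a controller named Frontend Deployment creates three Pods, each labeled app=webapp, role=frontend, version=1.0.0.

A Service then declares the selector app=webapp, role=frontend and keeps track of every Pod that carries those labels.

Users then access the Service, and their requests are load-balanced across those Pods. When Pods are replaced, the Service picks up the change automatically.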
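A Service's selector is equality-based: a Pod is selected when it carries every key/value pair in the selector, and extra labels such as version are ignored. The following minimal Python sketch models that rule using the labels from the figure. The Pod names and the extra `backend-1` Pod are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based match: every key/value pair in the selector must be present on the Pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Names of the Pods a Service with this selector would track (sorted for stable output)."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


# Hypothetical Pods: three created by the Frontend Deployment plus one unrelated Pod.
pods = {
    "frontend-1": {"app": "webapp", "role": "frontend", "version": "1.0.0"},
    "frontend-2": {"app": "webapp", "role": "frontend", "version": "1.0.0"},
    "frontend-3": {"app": "webapp", "role": "frontend", "version": "1.0.0"},
    "backend-1": {"app": "webapp", "role": "backend", "version": "1.0.0"},
}
service_selector = {"app": "webapp", "role": "frontend"}
print(select_pods(service_selector, pods))
```

A replacement Pod that comes up with the same labels is picked up the next time the match runs. That is the sense in which the Service "discovers" Pod changes.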

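Service types

Kubernetes has four Service types:

- ClusterIP: the default type. Kubernetes allocates a virtual IP that is reachable only from inside the cluster.

- NodePort: builds on ClusterIP and additionally binds a port on every node, so the Service can be reached at nodeIP:nodePort.

- LoadBalancer: builds on NodePort and uses the cloud provider to create an external load balancer that forwards requests to nodeIP:nodePort.

- ExternalName: brings a service that lives outside the cluster into the cluster. The Service name is mapped to an external DNS name (a CNAME record), so Pods inside the cluster can reach it directly by the Service name.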
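One way to summarize this layering is to ask which addresses reach the Service under each type. The Python sketch below takes that view. All IPs and ports are hypothetical, and the function only models addressing, not actual traffic forwarding.

```python
from typing import List, Optional

PROXIED_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")


def client_addresses(svc_type: str, cluster_ip: str, port: int,
                     node_ips: List[str], node_port: Optional[int] = None,
                     lb_ip: Optional[str] = None) -> List[str]:
    """List the ip:port addresses a client could use to reach a Service of the given type."""
    if svc_type == "ExternalName":
        # No VIP and no proxying: cluster DNS only answers with a CNAME.
        return []
    if svc_type not in PROXIED_TYPES:
        raise ValueError(f"unknown Service type: {svc_type}")
    # Every proxied type starts with a cluster-internal virtual IP.
    addrs = [f"{cluster_ip}:{port}"]
    if svc_type in ("NodePort", "LoadBalancer"):
        if node_port is None:
            raise ValueError(f"{svc_type} requires a node port")
        # NodePort: the same port is opened on every node.
        addrs += [f"{ip}:{node_port}" for ip in node_ips]
    if svc_type == "LoadBalancer" and lb_ip is not None:
        # LoadBalancer: the cloud LB listens on the Service port and forwards to nodeIP:nodePort.
        addrs.append(f"{lb_ip}:{port}")
    return addrs


print(client_addresses("NodePort", "10.96.120.15", 8080,
                       ["192.168.1.11", "192.168.1.12"], node_port=30080))
```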

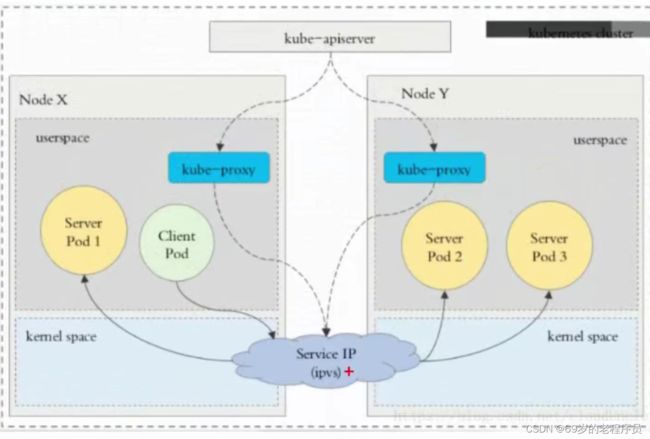

How kube-proxy implements Service proxying

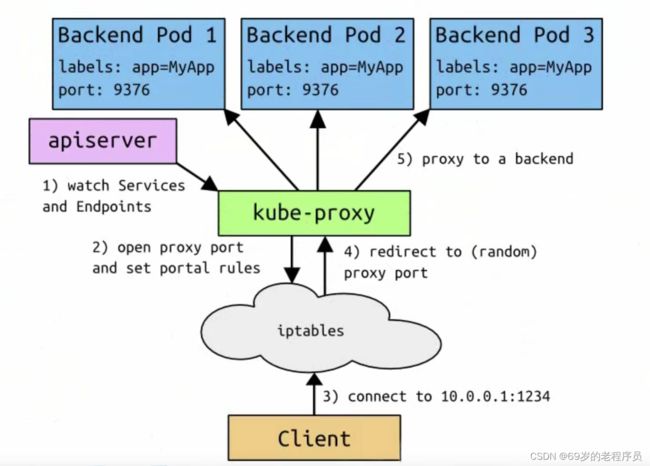

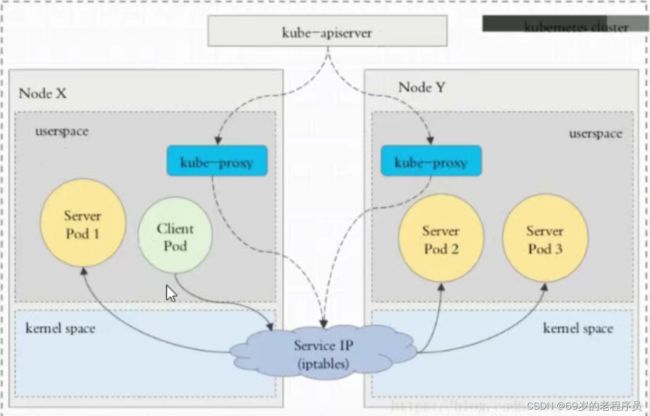

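Every node in a Kubernetes cluster runs a kube-proxy process. kube-proxy implements a virtual IP (VIP) for every Service type except ExternalName. kube-proxy originally used a userspace proxy, and iptables became the default proxy mode in v1.2. IPVS mode became generally available in v1.11, but it must be enabled explicitly (for example `mode: ipvs` in the kube-proxy configuration); iptables remains the default.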

Proxy modes

iptables

iptables is the packet filtering and forwarding module of the Linux firewall. kube-proxy forwards Service traffic by configuring DNAT rules in it.
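With several backend Pods, kube-proxy's iptables mode spreads new connections using a chain of rules, each with the iptables `statistic` match in random mode. With n endpoints, rule i (counting from 0) matches with probability 1/(n-i), and the last rule always matches, so every endpoint ends up with an equal 1/n share. The Python sketch below shows that arithmetic and walks the chain once per connection. The endpoint addresses are hypothetical.

```python
import random
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule in the chain, evaluated top to bottom."""
    # Rule i fires with 1/(n - i); for the last rule (i = n - 1) that is 1, i.e. unconditional.
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> List[Fraction]:
    """Overall probability that a new connection is DNATed to each endpoint."""
    not_matched_yet = Fraction(1)
    shares = []
    for p in rule_probabilities(n):
        shares.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return shares


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rule chain once, as happens for the first packet of a new connection."""
    n = len(endpoints)
    for i, endpoint in enumerate(endpoints):
        if i == n - 1 or rng.random() < 1 / (n - i):
            return endpoint
    raise ValueError("no endpoints")  # only reached when the list is empty


print(rule_probabilities(3))
print(effective_shares(3))
```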

ipvs
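IPVS is the Linux kernel's layer-4 load balancer (part of LVS). In IPVS mode, kube-proxy creates one IPVS virtual server per ClusterIP:port and adds the Pods as its real servers, and `ipvsadm -Ln` lists them. The scheduler defaults to round-robin (`rr`) and can be changed with kube-proxy's `--ipvs-scheduler` flag. The following is a simplified Python model of one virtual server under `rr`. Addresses are hypothetical. Real IPVS schedules per connection and also honours server weights, which this sketch ignores.

```python
from itertools import cycle
from typing import Dict, List


class RoundRobinVirtualServer:
    """One IPVS virtual server (ClusterIP:port) handing out new connections round-robin."""

    def __init__(self, real_servers: List[str]) -> None:
        if not real_servers:
            raise ValueError("a virtual server needs at least one real server")
        self.real_servers = list(real_servers)
        self._next = cycle(self.real_servers)

    def schedule(self) -> str:
        """Return the real server (podIP:targetPort) that gets the next new connection."""
        return next(self._next)


# Hypothetical IPVS table: ClusterIP:port -> virtual server with its Pod backends.
ipvs_table: Dict[str, RoundRobinVirtualServer] = {
    "10.96.120.15:8080": RoundRobinVirtualServer(["10.244.1.5:8080", "10.244.2.7:8080"]),
}

vs = ipvs_table["10.96.120.15:8080"]
print([vs.schedule() for _ in range(4)])
```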

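Service workflow

- apiServer: the user sends a request to create a Service to the apiServer with kubectl. The apiServer stores the object in etcd.

- kube-proxy: kube-proxy watches the apiServer (not etcd directly) for changes to Services and their Endpoints, and writes the corresponding rules into iptables or IPVS.

- iptables / IPVS: these use NAT and similar techniques to forward traffic sent to the VIP to the real backend Pods.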
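The kube-proxy step boils down to reconciliation: from the Services and Endpoints it has watched, kube-proxy computes a VIP-to-Pod table and writes it into iptables or IPVS. The Python sketch below covers only the "compute" half, with hypothetical names and IPs. The real kube-proxy also handles node ports, session affinity and much more.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ServicePort:
    cluster_ip: str   # VIP allocated when the Service is created
    port: int         # spec.ports[].port, exposed on the VIP
    target_port: int  # spec.ports[].targetPort, where the Pods listen


def desired_rules(services: Dict[str, ServicePort],
                  endpoints: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each VIP:port to the podIP:targetPort backends that traffic should be NATed to."""
    rules: Dict[str, List[str]] = {}
    for name, sp in services.items():
        pod_ips = endpoints.get(name, [])  # a Service with no ready Pods gets no backends
        rules[f"{sp.cluster_ip}:{sp.port}"] = [f"{ip}:{sp.target_port}" for ip in pod_ips]
    return rules


services = {"my-service": ServicePort("10.96.120.15", 8080, 8080)}
endpoints = {"my-service": ["10.244.1.5", "10.244.2.7"]}
print(desired_rules(services, endpoints))

# A Pod is replaced: the Endpoints change, so the rules are recomputed.
endpoints["my-service"] = ["10.244.1.5", "10.244.3.9"]
print(desired_rules(services, endpoints))
```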

service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: ClusterIP
  selector:
    app: nginx
  ports:
  - name: http
    port: 8080
    targetPort: 8080
```

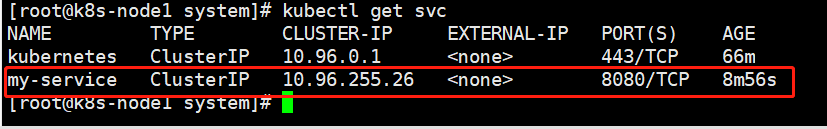

Create the Service:

```shell
kubectl apply -f service.yaml
```

The following Service is created:

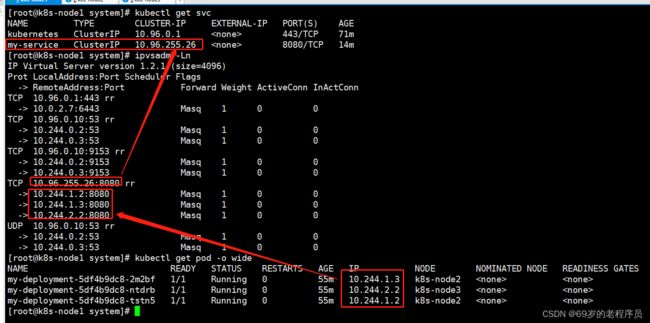

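Use `ipvsadm -Ln` to inspect the mapping between the ClusterIP and the Pod IPs.

Traffic sent to the ClusterIP is therefore load-balanced across the Pod IPs behind it.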

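If the ClusterIP cannot be reached:

==Kubernetes: fixing failed access to ClusterIP Services in IPVS proxy mode==

On nodes using flannel, turn off TX checksum offload on the flannel.1 (VXLAN) interface:

```shell
ethtool -K flannel.1 tx-checksum-ip-generic off
```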

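If the Service becomes reachable after running the command above, make the change permanent, because ethtool settings do not survive a reboot. Create a systemd unit on each node (k8s-node02 shown here):

```shell
[root@k8s-node02 ~]# vi /etc/systemd/system/k8s-flannel-tx-checksum-off.service
```

```ini
[Unit]
Description=Turn off checksum offload on flannel.1
After=sys-devices-virtual-net-flannel.1.device

[Install]
WantedBy=sys-devices-virtual-net-flannel.1.device

[Service]
Type=oneshot
ExecStart=/sbin/ethtool -K flannel.1 tx-checksum-ip-generic off
```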

Enable it at boot and start it now:

```shell
systemctl enable k8s-flannel-tx-checksum-off
systemctl start k8s-flannel-tx-checksum-off
```

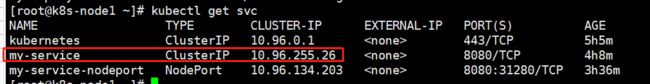

NodePort mode

All you need to change is `type`, setting it to `NodePort`:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service-nodeport
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - name: http
    port: 8080
    targetPort: 8080
```
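The manifest does not set `ports[].nodePort`, so Kubernetes allocates one from the node port range. The default range is 30000-32767 and can be changed with the kube-apiserver flag `--service-node-port-range`. You may also set `nodePort` explicitly, as long as it falls within that range. The Python sketch below models these allocation rules in simplified form. Picking a random free port is an assumption for illustration; the real allocator differs in details.

```python
import random
from typing import Optional, Set, Tuple

# Default value of kube-apiserver --service-node-port-range.
DEFAULT_RANGE: Tuple[int, int] = (30000, 32767)


def allocate_node_port(used: Set[int], requested: Optional[int] = None,
                       rng: Optional[random.Random] = None,
                       port_range: Tuple[int, int] = DEFAULT_RANGE) -> int:
    """Return the nodePort a new NodePort Service would get (simplified model)."""
    lo, hi = port_range
    if requested is not None:
        # An explicit spec.ports[].nodePort must be in range and not already taken.
        if not lo <= requested <= hi:
            raise ValueError(f"nodePort {requested} is outside {lo}-{hi}")
        if requested in used:
            raise ValueError(f"nodePort {requested} is already allocated")
        return requested
    free = [p for p in range(lo, hi + 1) if p not in used]
    if not free:
        raise RuntimeError("no free node ports left")
    return (rng or random).choice(free)


print(allocate_node_port(used={30080}, rng=random.Random(42)))
```

Once a port is allocated, the Service is reachable at `http://<any node IP>:<allocated port>`.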

ingress

A Service only proxies at layer 4 (TCP/UDP). Routing by domain name at layer 7 (HTTP) requires an Ingress.

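ingress-nginx

The manifest below deploys the ingress-nginx controller (image `nginx-ingress-controller:0.25.1`) together with its Namespace, ConfigMaps, ServiceAccount and RBAC rules. It targets older clusters: the `rbac.authorization.k8s.io/v1beta1` API used here, and the `extensions/v1beta1` Ingress API used in the examples below, were removed in Kubernetes 1.22.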

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.25.1
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
```

Then create a service-nodeport.yaml that exposes the controller through a NodePort Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
```

Now suppose there is a Service, for example my-service created above.

To expose it under a domain name through Ingress, first create an Ingress:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /
        backend:
          serviceName: my-service
          servicePort: 8080
```

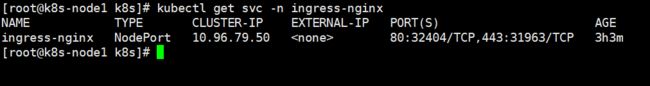

Create the Ingress with `kubectl apply -f ingress.yaml`.

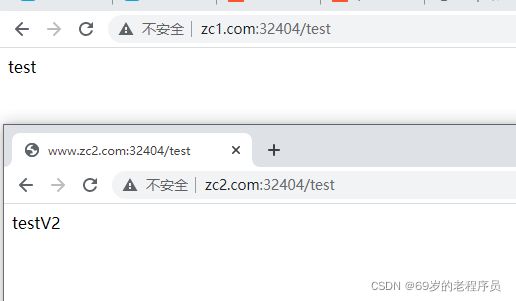

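Now consider a request to foo.bar.com:32404/test. Here 32404 is the NodePort that the ingress-nginx Service received in this example, and foo.bar.com must resolve to a node IP, for example through /etc/hosts. The Ingress rule matches the request and sends it to my-service on port 8080. By default, ingress-nginx does not go through the ClusterIP: it reads the Endpoints of my-service and proxies straight to one of its Pods, so IPVS is not on this path unless the `nginx.ingress.kubernetes.io/service-upstream` annotation is set.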

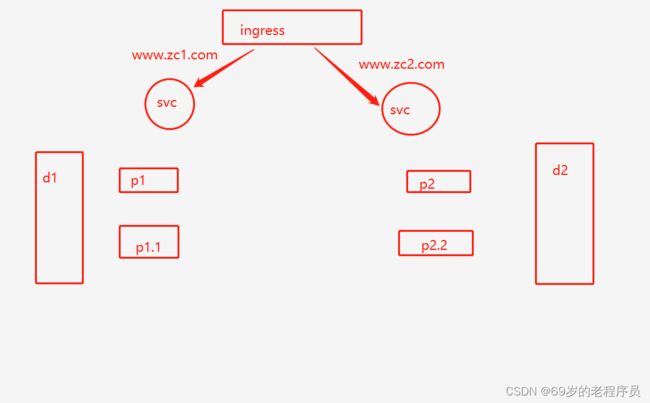

Using Ingress to implement virtual hosts

- Create two Deployments

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment-01
spec:
  replicas: 2
  selector:
    matchLabels:
      app: v1
  template:
    metadata:
      labels:
        app: v1
    spec:
      containers:
      - name: zc-deploy-01
        image: zhucheng1992/myboot:1.0
        ports:
        - containerPort: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment-02
spec:
  replicas: 2
  selector:
    matchLabels:
      app: v2
  template:
    metadata:
      labels:
        app: v2
    spec:
      containers:
      - name: zc-deploy-02
        image: zhucheng1992/myboot:2.0
        ports:
        - containerPort: 8080
```

- Create two Services

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service-01
spec:
  type: ClusterIP
  selector:
    app: v1
  ports:
  - name: http
    port: 8080
    targetPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: my-service-02
spec:
  type: ClusterIP
  selector:
    app: v2
  ports:
  - name: http
    port: 8080
    targetPort: 8080
```

- Create the Ingresses (one per host)

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: my-ingress-01
spec:
  rules:
  - host: www.zc1.com
    http:
      paths:
      - path: /
        backend:
          serviceName: my-service-01
          servicePort: 8080
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: my-ingress-02
spec:
  rules:
  - host: www.zc2.com
    http:
      paths:
      - path: /
        backend:
          serviceName: my-service-02
          servicePort: 8080
```
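The controller picks the backend from the request's Host header (and path), which is what makes name-based virtual hosting work. Assuming both names resolve to a node running the controller, www.zc1.com lands on my-service-01 and www.zc2.com on my-service-02 even though they share the same IP and port. The Python sketch below models that dispatch and mirrors the two Ingress objects above. The plain string-prefix path match and the longest-prefix tie-break are simplifications, not ingress-nginx's exact matching rules.

```python
from typing import List, NamedTuple, Optional, Tuple


class Rule(NamedTuple):
    host: str
    path_prefix: str
    service: str
    port: int


# Mirrors my-ingress-01 and my-ingress-02 above.
RULES: List[Rule] = [
    Rule("www.zc1.com", "/", "my-service-01", 8080),
    Rule("www.zc2.com", "/", "my-service-02", 8080),
]


def route(host_header: str, path: str, rules: List[Rule] = RULES) -> Optional[Tuple[str, int]]:
    """Return (service, port) for a request, or None if no rule matches."""
    # Drop an optional ":port" suffix, e.g. "www.zc1.com:32404" (assumes a DNS name, not IPv6).
    host = host_header.split(":", 1)[0].lower()
    candidates = [r for r in rules if r.host == host and path.startswith(r.path_prefix)]
    if not candidates:
        return None  # the controller's default backend would typically answer 404
    best = max(candidates, key=lambda r: len(r.path_prefix))  # most specific prefix wins
    return best.service, best.port


print(route("www.zc1.com:32404", "/"))
print(route("www.zc2.com:32404", "/hello"))
```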

Headless Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: headless-svc
spec:
  selector:
    app: myapp
  clusterIP: "None"
  ports:
  - port: 80
    targetPort: 80
```

使用

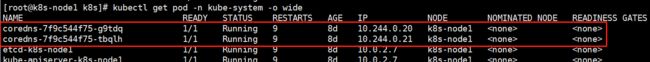

dig -t A headless-svc.default.svc.cluster.local. @10.244.0.20

dig -t A 无头服务名称.命名空间.集群名称. @coreDNSip
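The difference from a normal Service shows up in what the A query returns. The Python sketch below models the naming scheme and the two answer shapes. It assumes `cluster.local` as the cluster domain (the usual default), and the IPs are hypothetical.

```python
from typing import List


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified Service name: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}."


def a_records(cluster_ip: str, ready_pod_ips: List[str]) -> List[str]:
    """A records that cluster DNS returns for a Service name."""
    if cluster_ip == "None":
        # Headless: no VIP, so DNS answers with one record per ready Pod.
        return sorted(ready_pod_ips)  # sorted only for stable output; real answer order varies
    # Normal Service: a single record for the ClusterIP; kube-proxy balances behind it.
    return [cluster_ip]


name = service_fqdn("headless-svc", "default")
print(name)
print(a_records("None", ["10.244.2.3", "10.244.1.4"]))
print(a_records("10.96.45.7", ["10.244.2.3", "10.244.1.4"]))
```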