基于seatunnel实现mysql同步clickhouse验证

场景:

需求想要实现mysql同步到clickhouse,seatunnel部署见前面文档linux环境seatunnel安装运行-CSDN博客。

官方说明文档

Clickhouse | Apache SeaTunnel

mysql同步配置

server-id=1

log_bin=/var/lib/mysql/bin.log

binlog_format=ROW

#binlog-do-db 具体要同步的数据库

binlog-do-db=gisqbpm

gtid-mode=on

enforce-gtid-consistency=on

验证MYSQL同步clckhouse

mysql数据库测试表

CREATE TABLE `test` (

`id_` varchar(255) NOT NULL,

`name` varchar(255) DEFAULT NULL,

PRIMARY KEY (`id_`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

clikhouse库测试表

-- gisqbpm.test definition

CREATE TABLE gisqbpm.test

(`id_` String,

`name` Nullable(String),

`_sign` Int8 MATERIALIZED 1,

`_version` UInt64 MATERIALIZED 1,

INDEX _version _version TYPE minmax GRANULARITY 1

)

ENGINE = ReplacingMergeTree(_version)

ORDER BY tuple(id_)

SETTINGS index_granularity = 8192;

在config中创建mysqltoclickhouse.conf

touch mysqltoclickhouse.conf配置如下

env {

execution.parallelism = 1

job.mode = "STREAMING"

checkpoint.interval = 2000

}

source {

MySQL-CDC {

base-url = "jdbc:mysql://192.168.85.128:3307/gisqbpm"

username = "root"

password = "wxy123456"

table-names = ["gisqbpm.test"]

}

}sink {

Clickhouse {

host = "192.168.85.128:8123"

database = "gisqbpm"

table = "test"

username = "default"

password = "wxy123456“

}

}

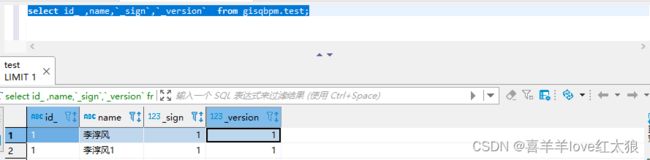

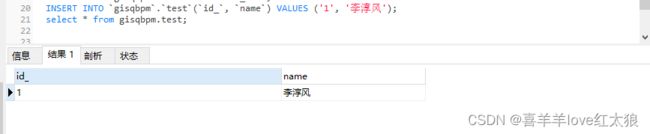

验证一:INSERT

mysql添加一条数据

INSERT INTO `gisqbpm`.`test`(`id_`, `name`) VALUES ('1', '李淳风');

select * from gisqbpm.test;

在clickhouse中也同步添加一条数据

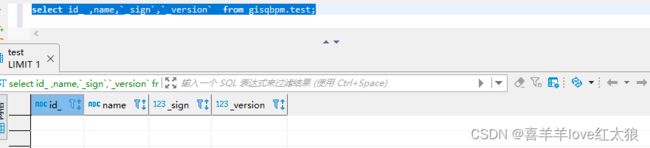

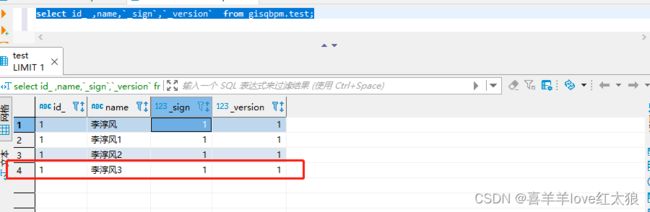

验证二:UPDATE

mysql修改一条数据

update test set name='李淳风1' where id_='1';

select * from gisqbpm.test;

clickhouse显示如下,而是新增一个数据

验证三:DELETE

mysql删除一条数据

clickhouse发现是添加了一个数据

结论一:

这个是网上常见的案列,但都是往往写了一个添加操作就没了,奶奶个腿啊,这么多的坑竟然不说,妈的这放到生产环境不要气死人?同步时修改和删除变成添加和实际逻辑不一样。咨询了社区大佬,给我提供了一些意见,好吧那我就再验证一下

经过大佬点播,又看了一下官方文档确实有写,要通过主键去删除和更新!!!!

二:修改配置再次验证

env {

execution.parallelism = 1

job.mode = "STREAMING"

checkpoint.interval = 2000

}

source {

MySQL-CDC {

base-url = "jdbc:mysql://192.168.85.128:3307/gisqbpm"

username = "root"

password = "wxy123456"

table-names = ["gisqbpm.test"]

}

}sink {

Clickhouse {

host = "192.168.85.128:8123"

database = "gisqbpm"

table = "test"

username = "default"

password = "wxy123456"

primary_key="id_"

support_upsert=true

}

}

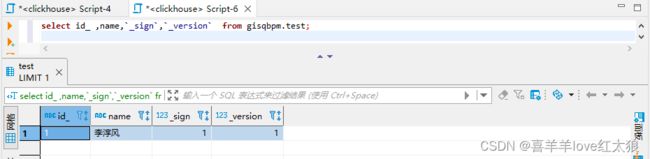

验证一:INSERT

mysql插入一条数据

clickhouse也同步显示添加一条数据

验证二:DELETE

mysql删除一条数据

clickhouse也同步删除一条数据

验证三:UPDATE

mysql修改一条数据

clickhouse没有变化,服务此时也挂了

错误一:

错误如下 Caused by: org.apache.seatunnel.connectors.seatunnel.clickhouse.exception.ClickhouseConnectorException: ErrorCode:[COMMON-10], ErrorDescription:[Flush data operation that in sink connector failed] - Clickhouse execute batch statement error

重启后再次执行修改操作

mysql修改一条数据

clickhouse显示是新增一条数据

结论二:

这种方式同样也是不能完美解决同步问题,修改变成了添加,而且中途服务还挂了,稳定性也有风险。

三:修改配置再次验证

env {

execution.parallelism = 1

job.mode = "STREAMING"

checkpoint.interval = 2000

}

source {

MySQL-CDC {

base-url = "jdbc:mysql://192.168.85.128:3307/gisqbpm"

username = "root"

password = "wxy123456"

table-names = ["gisqbpm.test"]

}

}sink {

Clickhouse {

host = "192.168.85.128:8123"

database = "gisqbpm"

table = "test"

username = "default"

password = "wxy123456"

primary_key="id_"

support_upsert=true

allow_experimental_lightweight_delete = true

}

}

这个是官方文档提供的配置测试一下

错误二:

Caused by: java.sql.BatchUpdateException: Code: 62. DB::Exception: Syntax error: failed at position 1 ('DELETE'): DELETE FROM "test" WHERE "id_" = '1' settings allow_experimental_lightweight_delete = true. Expected one of: Query, Query

结论三

经测试在mysql中添加一条数据,clickhouse也同步添加一条数据,但是mysql中通过主键修改一条数据,clickhouse变成添加一条数据,mysql删除一条数据,clickhosue直接报错且服务挂掉

总结:

1.seatunel同步mysql到clickhouse中存在很大的问题,生产环境如果有修改和删除操作同步到clickhouse时趁早换方案!!!

2.从操作来看seatunel对全库同步的场景也不是很友好,我100张表那就要创建100个sink对应的表非常麻烦!!

3.MaterializeMySQL的方式都比这个靠谱,至少服务不会挂!!!