Deploying an OpenStack_Rocky High-Availability Cluster on CentOS 7 (3-2)


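Table of Contents

- Deploying an OpenStack_Rocky High-Availability Cluster on CentOS 7 (3-2)
  - 8. Deploy Glance (Image Service)
    - 8.1 Configure the glance database, user, and privileges in MariaDB
    - 8.2 Create the glance API
    - 8.3 Install the Glance packages
    - 8.3 Configure glance-api.conf (on all three controller nodes: cont01, cont02, cont03)
    - 8.4 Configure glance-registry.conf (on all three controller nodes: cont01, cont02, cont03)
    - 8.5 Sync the glance database (on any one controller node)
    - 8.6 Start the Glance services
    - 8.7 Test the image service
    - 8.7 Create the PCS resources
  - 9. Nova Controller Node Cluster
    - 9.1 Create the Nova databases (on any one controller node)
    - 9.2 Create the nova/placement APIs (on any one controller node)
    - 9.3 Install the Nova services (on all controller nodes)
    - 9.4 Configure nova.conf (on all controller nodes)
    - 9.5 Configure 00-nova-placement-api.conf
    - 9.6 Sync the Nova databases (on any one controller node)
    - 9.6 Start the Nova services (on all controller nodes: cont01, cont02, cont03)
    - 9.7 Verification
    - 9.8 Deploy the Nova compute nodes (on comp01, comp02, comp03)
    - 9.10 Passwordless SSH authentication between compute nodes
    - 9.11 Configure nova.conf on the compute nodes
    - 9.12 Start the compute node services (run on comp01, comp02, comp03)
    - 9.13 Add the compute nodes to the cell database (on any one controller node)
  - 10. Neutron Controller/Network Node Cluster
    - 10.1 Create the neutron database (on any one controller node; the data replicates automatically)
    - 10.2 Create the neutron user, role assignment, and service entity
    - 10.3 Create the neutron API
    - 10.4 Deploy Neutron (install on all controller nodes)
      - 10.4.1 Install the Neutron packages
      - 10.4.2 Configure neutron.conf
      - 10.4.3 Configure ml2_conf.ini (on all controller nodes)
      - 10.4.4 Configure openvswitch (on all controller nodes)
      - 10.4.5 Configure l3_agent.ini (self-service networking) (on all controller nodes: cont01, cont02, cont03)
      - 10.4.6 Configure dhcp_agent.ini (on all controller nodes: cont01, cont02, cont03)
      - 10.4.7 Configure metadata_agent.ini (on all controller nodes: cont01, cont02, cont03)
      - 10.4.8 Configure nova.conf (on all controller nodes: cont01, cont02, cont03)
      - 10.4.9 Create the symbolic link (on all controller nodes: cont01, cont02, cont03)
      - 10.4.10 Sync and verify the neutron database (on any one controller node)
      - 10.4.11 Restart the Nova services and start the Neutron services (on all controller nodes: cont01, cont02, cont03)
      - 10.4.12 Create the PCS resources (on any one of cont01, cont02, cont03)
      - 10.4.13.1 OVS commands (three NICs)
      - 10.4.13 Verification
      - 10.4.14 Supplement: Open vSwitch networking concepts
      - 10.4.15 Supplement: network namespaces
      - 10.4.16 Supplement: how an instance obtains its DHCP IP address
    - 10.5 Deploy Neutron on the compute nodes
      - 10.5.1 Install openstack-neutron-openvswitch (on all compute nodes)
      - 10.5.2 Configure neutron.conf (on all compute nodes)
      - 10.5.3 Configure openvswitch_agent.ini (on all compute nodes)
      - 10.5.4 Configure nova.conf (on all compute nodes)
      - 10.5.5 Restart the Nova services and start the Neutron services (on all compute nodes: comp01, comp02, comp03)
      - 10.5.6 Verification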

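8. Deploy Glance (Image Service)

8.1 Configure the glance database, user, and privileges in MariaDB

Note: because the database is a cluster that replicates across nodes, you only need to create these on one controller node.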

[root@cont02:/root]# mysql -uroot -p"typora#2019"

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.009 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

Bye
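The same statements can also be generated and piped to mysql non-interactively, which helps when this step is repeated for other services. A minimal bash sketch, assuming (as noted above) that running it on one node is enough and that passwords contain no single quotes; `db_grant_sql` is a hypothetical helper name, and it uses `CREATE DATABASE IF NOT EXISTS` so that a re-run does not fail:

```shell
#!/usr/bin/env bash
# Print the SQL that creates one service database and grants a service
# user full access to it from localhost and from any host ('%').
# Assumption: passwords contain no single quotes (no SQL escaping is done).
db_grant_sql() {
  local db="$1" user="$2" pass="$3"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}

# Example, run on one controller only (the cluster replicates to the others):
#   db_grant_sql glance glance GLANCE_typora | mysql -uroot -p"typora#2019"
```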

8.2 Create the glance API

[root@cont02:/root]# source openrc

[root@cont02:/root]# openstack user list

+----------------------------------+--------+

| ID | Name |

+----------------------------------+--------+

| 02c1960ba4c44f46b7152c0a7e52fdba | admin |

| 61c06b9891a64e68b87d84dbcec5e9ac | myuser |

+----------------------------------+--------+

//Create the glance user

[root@cont02:/root]# openstack user create --domain default --password=glance_typora glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 34c34fe5d78e4f39bfd63f82ad989585 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

//Grant the glance user the admin role in the service project

[root@cont02:/root]# openstack role add --project service --user glance admin

//Create the glance service entity

[root@cont02:/root]# openstack service create --name glance --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | 369d083b4a094c1fb57e189d54305ea9 |

| name | glance |

| type | image |

+-------------+----------------------------------+

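//Create the glance API endpoints

Note: --region must match the region created when the admin user was initialized; all API URLs use the VIP (VirtualIP), and the service type is image.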

[root@cont02:/root]# openstack endpoint create --region RegionOne image public http://VirtualIP:9293

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3df1aef87c1a4f069e9742486f200c18 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 369d083b4a094c1fb57e189d54305ea9 |

| service_name | glance |

| service_type | image |

| url | http://VirtualIP:9293 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne image internal http://VirtualIP:9293

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b7b0084313744b8a91a142b1221e0443 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 369d083b4a094c1fb57e189d54305ea9 |

| service_name | glance |

| service_type | image |

| url | http://VirtualIP:9293 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne image admin http://VirtualIP:9293

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | e137861e214c46ed898a751db74cb70a |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 369d083b4a094c1fb57e189d54305ea9 |

| service_name | glance |

| service_type | image |

| url | http://VirtualIP:9293 |

+--------------+----------------------------------+

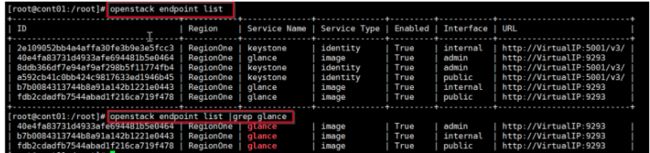

[root@cont01:/root]# openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+----

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+----

| 2e109052bb4a4affa30fe3b9e3e5fcc3 | RegionOne | keystone | identity | True | internal | http://VirtualIP:5001/v3/ |

| 40e4fa83731d4933afe694481b5e0464 | RegionOne | glance | image | True | admin | http://VirtualIP:9293 |

| 8ddb366df7e94af9af298b5f11774fb4 | RegionOne | keystone | identity | True | admin | http://VirtualIP:5001/v3/ |

| a592cb41c0bb424c9817633ed1946b45 | RegionOne | keystone | identity | True | public | http://VirtualIP:5001/v3/ |

| b7b0084313744b8a91a142b1221e0443 | RegionOne | glance | image | True | internal | http://VirtualIP:9293 |

| fdb2cdadfb7544abad1f216ca719f478 | RegionOne | glance | image | True | public | http://VirtualIP:9293 |

+----------------------------------+-----------+--------------+--------------+---------+----
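The three `openstack endpoint create` calls above differ only in the interface name, so they can be looped. A minimal sketch, assuming the admin credentials are already loaded with `source openrc`; `create_endpoints` and the `REGION` variable are hypothetical names used only for illustration:

```shell
#!/usr/bin/env bash
# Create the public, internal and admin endpoints of one service, all
# pointing at the same VIP-based URL, as done by hand above.
# REGION is a hypothetical override; it defaults to RegionOne.
create_endpoints() {
  local service_type="$1" url="$2" region="${REGION:-RegionOne}" iface
  for iface in public internal admin; do
    openstack endpoint create --region "$region" \
      "$service_type" "$iface" "$url" || return 1
  done
}

# Example (admin credentials loaded with `source openrc`):
#   create_endpoints image http://VirtualIP:9293
```

The same call with `compute http://VirtualIP:9774/v2.1` or `placement http://VirtualIP:9778` would cover the endpoints created in section 9.2.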

8.3 Install the Glance packages

[root@cont01:/root]# yum install openstack-glance -y

[root@cont02:/root]# yum install openstack-glance -y

[root@cont03:/root]# yum install openstack-glance -y

8.3 Configure glance-api.conf (on all three controller nodes: cont01, cont02, cont03)

[root@cont01:/etc/glance]# cp -p /etc/glance/glance-api.conf{,.bak}

[root@cont02:/root]# cp -p /etc/glance/glance-api.conf{,.bak}

[root@cont03:/root]# cp -p /etc/glance/glance-api.conf{,.bak}

[root@cont01:/etc/glance]# vim glance-api.conf

[DEFAULT]
enable_v1_api = false
bind_host = 192.168.10.21

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

//Note: /var/lib/glance/images is the default image storage directory

[root@cont02:/root]# vim /etc/glance/glance-api.conf

[DEFAULT]
enable_v1_api = false
bind_host = 192.168.10.22

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

[root@cont03:/root]# vim /etc/glance/glance-api.conf

[DEFAULT]
enable_v1_api = false
bind_host = 192.168.10.23

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

//To view only the active (uncommented, non-empty) lines on each node: [root@cont0$:/root]# egrep -v "^#|^$" /etc/glance/glance-api.conf
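The three copies of glance-api.conf above differ only in bind_host (glance-registry.conf in 8.4 follows the same pattern), so one way to avoid copy-paste mistakes is to edit one file and then patch bind_host per node. A sketch, assuming the host names cont01 to cont03 map to the management IPs used in this guide; `node_ip` and `set_bind_host` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Map each controller host name to its management IP (values from this guide).
node_ip() {
  case "$1" in
    cont01) echo 192.168.10.21 ;;
    cont02) echo 192.168.10.22 ;;
    cont03) echo 192.168.10.23 ;;
    *) echo "unknown node: $1" >&2; return 1 ;;
  esac
}

# Rewrite the uncommented bind_host line of a config file in place (GNU sed).
set_bind_host() {
  local file="$1" ip="$2"
  sed -i "s/^bind_host *=.*/bind_host = ${ip}/" "$file"
}

# Example on each controller (assumes `hostname -s` prints cont01/02/03):
#   set_bind_host /etc/glance/glance-api.conf "$(node_ip "$(hostname -s)")"
```

The sed expression only matches a line that starts with `bind_host`, so the commented-out defaults in the stock file are left untouched.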

Configuring Ceph as the Glance image backend (for later reference)

Edit /etc/glance/glance-api.conf:

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

To allow copy-on-write cloning of images, also add the following to the [DEFAULT] section:

show_image_direct_url = True

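It is also recommended to set the following properties. Note that, per the Ceph documentation, these are Glance image properties set on each image (for example with openstack image set --property), not glance-api.conf options:

hw_scsi_model=virtio-scsi #add a virtio-scsi controller for better performance and support for discard operations

hw_disk_bus=scsi #attach every cinder block device to that controller

hw_qemu_guest_agent=yes #enable the QEMU guest agent

os_require_quiesce=yes #send fs-freeze/thaw calls through the QEMU guest agent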
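According to the Ceph documentation these four settings are image properties, so one way to apply them is `openstack image set --property` on an image that has already been uploaded. A sketch; `set_ceph_image_props` is a hypothetical helper, and the example image name is the one used in the upload test that follows:

```shell
#!/usr/bin/env bash
# Apply the Ceph-recommended image properties to an existing Glance image.
set_ceph_image_props() {
  local image="$1"
  openstack image set \
    --property hw_scsi_model=virtio-scsi \
    --property hw_disk_bus=scsi \
    --property hw_qemu_guest_agent=yes \
    --property os_require_quiesce=yes \
    "$image"
}

# Example (admin credentials loaded): set_ceph_image_props imagetest
```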

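Test an image upload:

If the image cirros-0.3.5-x86_64-disk.img is in qcow2 format, convert it to raw first, because images should be stored in raw format when Ceph is the backend:

Check the format with qemu-img info cirros-0.3.5-x86_64-disk.img, then convert it from qcow2 to raw and save the result as a separate image file:

qemu-img convert -f qcow2 -O raw cirros-0.3.5-x86_64-disk.img image.img

Then upload the image: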

. admin-openrc.sh

glance image-create --name "imagetest" --file image.img --disk-format raw --container-format bare --visibility public --progress

Verify that the image was created:

openstack image list
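The qcow2-to-raw step can be made conditional so that an image that is already raw is uploaded as-is. A sketch, assuming `qemu-img info` prints a `file format: ...` line; `to_raw_if_needed` is a hypothetical helper that prints the path of the file to upload:

```shell
#!/usr/bin/env bash
# Convert SRC to raw (written to DST) unless qemu-img already reports raw.
# Prints the path that should be uploaded to Glance.
to_raw_if_needed() {
  local src="$1" dst="$2" fmt
  # `qemu-img info` output contains a line such as "file format: qcow2".
  fmt="$(qemu-img info "$src" | awk -F': ' '/^file format:/ { print $2; exit }')"
  if [ -z "$fmt" ]; then
    echo "cannot determine the format of $src" >&2
    return 1
  fi
  if [ "$fmt" = raw ]; then
    echo "$src"                       # already raw: upload unchanged
  else
    qemu-img convert -f "$fmt" -O raw "$src" "$dst" && echo "$dst"
  fi
}

# Example:
#   img="$(to_raw_if_needed cirros-0.3.5-x86_64-disk.img image.img)" &&
#   glance image-create --name "imagetest" --file "$img" --disk-format raw \
#     --container-format bare --visibility public --progress
```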

8.4 Configure glance-registry.conf (on all three controller nodes: cont01, cont02, cont03)

[root@cont01:/etc/glance]# cp -p /etc/glance/glance-registry.conf{,.bak}

[root@cont02:/root]# cp -p /etc/glance/glance-registry.conf{,.bak}

[root@cont03:/root]# cp -p /etc/glance/glance-registry.conf{,.bak}

[root@cont01:/etc/glance]# vim /etc/glance/glance-registry.conf

[DEFAULT]
bind_host = 192.168.10.21

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

[root@cont02:/root]# vim /etc/glance/glance-registry.conf

[DEFAULT]
bind_host = 192.168.10.22

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

[root@cont03:/root]# vim /etc/glance/glance-registry.conf

[DEFAULT]
bind_host = 192.168.10.23

[database]
connection = mysql+pymysql://glance:GLANCE_typora@VirtualIP:3307/glance

[keystone_authtoken]
www_authenticate_uri = http://VirtualIP:5001
auth_url = http://VirtualIP:5001
memcached_servers = cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = glance_typora

[paste_deploy]
flavor = keystone

8.5 Sync the glance database (on any one controller node)

[root@cont02:/root]# su -s /bin/sh -c "glance-manage db_sync" glance

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:1352: OsloDBDeprecationWarning: EngineFacade is deprecated; please use oslo_db.sqlalchemy.enginefacade

expire_on_commit=expire_on_commit, _conf=conf)

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1280, u"Name 'alembic_version_pkc' ignored for PRIMARY key.")

result = self._query(query)

INFO [alembic.runtime.migration] Running upgrade -> liberty, liberty initial

INFO [alembic.runtime.migration] Running upgrade liberty -> mitaka01, add index on created_at and updated_at columns of 'images' table

INFO [alembic.runtime.migration] Running upgrade mitaka01 -> mitaka02, update metadef os_nova_server

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_expand01, add visibility to images

INFO [alembic.runtime.migration] Running upgrade ocata_expand01 -> pike_expand01, empty expand for symmetry with pike_contract01

INFO [alembic.runtime.migration] Running upgrade pike_expand01 -> queens_expand01

INFO [alembic.runtime.migration] Running upgrade queens_expand01 -> rocky_expand01, add os_hidden column to images table

INFO [alembic.runtime.migration] Running upgrade rocky_expand01 -> rocky_expand02, add os_hash_algo and os_hash_value columns to images table

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_expand02, current revision(s): rocky_expand02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database migration is up to date. No migration needed.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_contract01, remove is_public from images

INFO [alembic.runtime.migration] Running upgrade ocata_contract01 -> pike_contract01, drop glare artifacts tables

INFO [alembic.runtime.migration] Running upgrade pike_contract01 -> queens_contract01

INFO [alembic.runtime.migration] Running upgrade queens_contract01 -> rocky_contract01

INFO [alembic.runtime.migration] Running upgrade rocky_contract01 -> rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_contract02, current revision(s): rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database is synced successfully.

[root@cont02:/root]# mysql -uroot -ptypora#2019

MariaDB [(none)]> use glance;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [glance]> show tables;

+----------------------------------+

| Tables_in_glance |

+----------------------------------+

| alembic_version |

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| metadef_namespace_resource_types |

| metadef_namespaces |

| metadef_objects |

| metadef_properties |

| metadef_resource_types |

| metadef_tags |

| migrate_version |

| task_info |

| tasks |

+----------------------------------+

15 rows in set (0.001 sec)

MariaDB [glance]> exit

Bye
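Instead of reading the `show tables` output by eye, the check can be scripted. A sketch that reads table names on stdin and reports any expected table that is missing; `missing_tables` is a hypothetical helper, and the table names in the example come from the output above:

```shell
#!/usr/bin/env bash
# Read table names (one per line) on stdin and print every expected table,
# given as arguments, that is not in the list. Non-zero exit if any is missing.
missing_tables() {
  local have rc=0 t
  have="$(cat)"
  for t in "$@"; do
    if ! grep -qx -- "$t" <<<"$have"; then
      echo "missing: $t"
      rc=1
    fi
  done
  return "$rc"
}

# Example (root password from this guide):
#   mysql -N -uroot -p"typora#2019" glance -e 'SHOW TABLES' \
#     | missing_tables alembic_version images image_locations image_members tasks
```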

8.6 Start the Glance services

(run on all controller nodes: cont01, cont02, cont03)

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl status openstack-glance-api.service openstack-glance-registry.service
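These commands have to be run on every controller. Assuming passwordless root SSH from the current node to cont01, cont02, and cont03 is already configured, a small loop can do it from one place; `for_each_controller` and `CONTROLLERS` are hypothetical names:

```shell
#!/usr/bin/env bash
# Run the same command on every controller over SSH, stopping at the first
# failure. CONTROLLERS is a hypothetical override of the node list.
for_each_controller() {
  local node
  # Left unquoted on purpose so the list is split into host names.
  for node in ${CONTROLLERS:-cont01 cont02 cont03}; do
    echo "== $node"
    ssh "root@${node}" "$@" || return 1
  done
}

# Example:
#   for_each_controller systemctl enable openstack-glance-api openstack-glance-registry
#   for_each_controller systemctl start openstack-glance-api openstack-glance-registry
```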

8.7 Test the image service

[root@cont02:/root]# cd /var/lib/glance/images/

[root@cont02:/var/lib/glance/images]# openstack image list

[root@cont02:/var/lib/glance/images]# ls

[root@cont02:/var/lib/glance/images]# glance image-list

+----+------+

| ID | Name |

+----+------+

+----+------+

[root@cont02:/var/lib/glance/images]# cd

[root@cont02:/root]# wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

[root@cont02:/root]# ls

admin-openrc anaconda-ks.cfg cirros-0.4.0-x86_64-disk.img demo-openrc get-pip.py openrc

[root@cont02:/root]# openstack image create "cirros" \
  --file /root/cirros-0.4.0-x86_64-disk.img \
  --disk-format qcow2 --container-format bare \
  --public

[root@cont02:/root]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| f18d54e0-cf78-4881-9348-f446958a4c4b | cirros | active |

+--------------------------------------+--------+--------+

[root@cont02:/root]# glance image-list

+--------------------------------------+--------+

| ID | Name |

+--------------------------------------+--------+

| f18d54e0-cf78-4881-9348-f446958a4c4b | cirros |

+--------------------------------------+--------+
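A large image can stay in `queued` or `saving` for a while before it becomes `active`, so a script that uses the image right after `openstack image create` may want to wait for it. A sketch that polls `openstack image show -f value -c status`; `wait_image_active` and `WAIT_INTERVAL` are hypothetical names:

```shell
#!/usr/bin/env bash
# Poll an image until Glance reports it as active.
# Args: IMAGE [TRIES]; WAIT_INTERVAL (seconds between polls) is a hypothetical knob.
wait_image_active() {
  local image="$1" tries="${2:-30}" status="" i
  for ((i = 0; i < tries; i++)); do
    status="$(openstack image show -f value -c status "$image")" || return 1
    case "$status" in
      active) return 0 ;;
      killed|deleted) echo "image $image is $status" >&2; return 1 ;;
    esac
    sleep "${WAIT_INTERVAL:-2}"
  done
  echo "timed out waiting for $image (last status: $status)" >&2
  return 1
}

# Example: wait_image_active cirros 60 && echo "cirros is ready"
```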

8.7 Create the PCS resources

[root@cont02:/root]# pcs resource create openstack-glance-api systemd:openstack-glance-api --clone interleave=true

[root@cont02:/root]# pcs resource create openstack-glance-registry systemd:openstack-glance-registry --clone interleave=true

[root@cont02:/root]# pcs resource

VirtualIP (ocf::heartbeat:IPaddr2): Started cont01

Clone Set: openstack-glance-api-clone [openstack-glance-api]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-glance-registry-clone [openstack-glance-registry]

Started: [ cont01 cont02 cont03 ]
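To check from a script that a clone is running on all three controllers, the `pcs resource` output can be parsed. A sketch, assuming the pcs 0.9 output layout shown above (`Clone Set: NAME-clone [NAME]` followed by a `Started: [ ... ]` line); other pcs versions print a different layout, and `clone_started_on_all` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Read `pcs resource` output on stdin and succeed only if the clone of
# RESOURCE is listed as Started on every node given as an argument.
clone_started_on_all() {
  local resource="$1" started node
  shift
  started="$(awk -v c="Clone Set: ${resource}-clone " '
    index($0, c)         { grab = 1; next }   # found our clone block
    grab && /Started:/   { sub(/.*Started: \[ */, ""); sub(/ *\].*/, ""); print; exit }
    grab && /Clone Set:/ { exit }             # next clone: ours has no Started line
  ')"
  for node in "$@"; do
    case " $started " in
      *" $node "*) ;;
      *) echo "$resource is not started on $node" >&2; return 1 ;;
    esac
  done
}

# Example: pcs resource | clone_started_on_all openstack-glance-api cont01 cont02 cont03
```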

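9. Nova Controller Node Cluster

9.1 Create the Nova databases (on any one controller node)

Note: Nova uses four databases. nova_api, nova, and nova_cell0 are granted to the nova user; placement is granted to the placement user.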

[root@cont02:/root]# mysql -uroot -p"typora#2019"

MariaDB [(none)]> CREATE DATABASE nova_api;

Query OK, 1 row affected (0.010 sec)

MariaDB [(none)]> CREATE DATABASE nova;

Query OK, 1 row affected (0.009 sec)

MariaDB [(none)]> CREATE DATABASE nova_cell0;

Query OK, 1 row affected (0.009 sec)

MariaDB [(none)]> CREATE DATABASE placement;

Query OK, 1 row affected (0.009 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.011 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.011 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.009 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_typora';

Query OK, 0 rows affected (0.024 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_typora';

Query OK, 0 rows affected (0.010 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.011 sec)

MariaDB [(none)]> exit

Bye
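The eight GRANT statements above follow one pattern: three databases for the nova user and one for the placement user. A sketch that prints the same SQL (with `IF NOT EXISTS` added so that a re-run does not fail), assuming passwords contain no single quotes; `nova_db_sql` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print CREATE DATABASE / GRANT statements for the four Nova-related
# databases: nova_api, nova and nova_cell0 go to the nova user,
# placement goes to the placement user.
nova_db_sql() {
  local nova_pass="$1" placement_pass="$2" db user pass host
  for db in nova_api nova nova_cell0 placement; do
    if [ "$db" = placement ]; then
      user=placement; pass="$placement_pass"
    else
      user=nova; pass="$nova_pass"
    fi
    echo "CREATE DATABASE IF NOT EXISTS ${db};"
    for host in localhost '%'; do
      echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
    done
  done
  echo "FLUSH PRIVILEGES;"
}

# Example: nova_db_sql NOVA_typora PLACEMENT_typora | mysql -uroot -p"typora#2019"
```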

9.2 Create the nova/placement APIs (on any one controller node)

[root@cont02:/root]# source openrc

[root@cont02:/root]# openstack user create --domain default --password=nova_typora nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | edf5d194c7454a3e81fe5f099cb743b1 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

//Password: nova_typora

[root@cont02:/root]# openstack user list

+----------------------------------+--------+

| ID | Name |

+----------------------------------+--------+

| 02c1960ba4c44f46b7152c0a7e52fdba | admin |

| 34c34fe5d78e4f39bfd63f82ad989585 | glance |

| 61c06b9891a64e68b87d84dbcec5e9ac | myuser |

| edf5d194c7454a3e81fe5f099cb743b1 | nova |

+----------------------------------+--------+

[root@cont02:/root]# openstack role add --project service --user nova admin

[root@cont02:/root]# openstack service list

+----------------------------------+----------+----------+

| ID | Name | Type |

+----------------------------------+----------+----------+

| 369d083b4a094c1fb57e189d54305ea9 | glance | image |

| 66fbd70e526f48828b5a18cb7aaf4d1b | keystone | identity |

+----------------------------------+----------+----------+

[root@cont02:/root]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 28ed51dbfde848f791e70a3be574c143 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

[root@cont02:/root]# openstack service list

+----------------------------------+----------+----------+

| ID | Name | Type |

+----------------------------------+----------+----------+

| 28ed51dbfde848f791e70a3be574c143 | nova | compute |

| 369d083b4a094c1fb57e189d54305ea9 | glance | image |

| 66fbd70e526f48828b5a18cb7aaf4d1b | keystone | identity |

+----------------------------------+----------+----------+

[root@cont02:/root]# openstack endpoint create --region RegionOne compute public http://VirtualIP:9774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3ae6c07e8c1844b3a21c3fc073cd3da9 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 28ed51dbfde848f791e70a3be574c143 |

| service_name | nova |

| service_type | compute |

| url | http://VirtualIP:9774/v2.1 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne compute internal http://VirtualIP:9774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b0f71d34aedf41a9a8fb9d56313efb00 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 28ed51dbfde848f791e70a3be574c143 |

| service_name | nova |

| service_type | compute |

| url | http://VirtualIP:9774/v2.1 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne compute admin http://VirtualIP:9774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 7bff1a44974a42a59e49eebffad550c0 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 28ed51dbfde848f791e70a3be574c143 |

| service_name | nova |

| service_type | compute |

| url | http://VirtualIP:9774/v2.1 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack catalog list

//Lists the endpoint URLs of all services

+----------+----------+----------------------------------------+

| Name | Type | Endpoints |

+----------+----------+----------------------------------------+

| nova | compute | RegionOne |

| | | public: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | admin: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | internal: http://VirtualIP:9774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | admin: http://VirtualIP:9293 |

| | | RegionOne |

| | | internal: http://VirtualIP:9293 |

| | | RegionOne |

| | | public: http://VirtualIP:9293 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | admin: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | public: http://VirtualIP:5001/v3/ |

| | | |

+----------+----------+----------------------------------------+

[root@cont02:/root]# openstack user create --domain default --password=placement_typora placement

//Password: placement_typora

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 66b6d87d0410419e8070817a9fa6493e |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@cont02:/root]# openstack role add --project service --user placement admin

[root@cont02:/root]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | dba3c60da5084dfca6b220fe666c2f9b |

| name | placement |

| type | placement |

+-------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne placement public http://VirtualIP:9778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | c2a1f308b3c04a448667967afb6016fe |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dba3c60da5084dfca6b220fe666c2f9b |

| service_name | placement |

| service_type | placement |

| url | http://VirtualIP:9778 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne placement internal http://VirtualIP:9778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 9035afba42be4b4387571d02b16c168c |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dba3c60da5084dfca6b220fe666c2f9b |

| service_name | placement |

| service_type | placement |

| url | http://VirtualIP:9778 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne placement admin http://VirtualIP:9778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 53be3d592dfa4060b46ca6a488067191 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dba3c60da5084dfca6b220fe666c2f9b |

| service_name | placement |

| service_type | placement |

| url | http://VirtualIP:9778 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack catalog list

+-----------+-----------+----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+----------------------------------------+

| nova | compute | RegionOne |

| | | public: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | admin: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | internal: http://VirtualIP:9774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | admin: http://VirtualIP:9293 |

| | | RegionOne |

| | | internal: http://VirtualIP:9293 |

| | | RegionOne |

| | | public: http://VirtualIP:9293 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | admin: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | public: http://VirtualIP:5001/v3/ |

| | | |

| placement | placement | RegionOne |

| | | admin: http://VirtualIP:9778 |

| | | RegionOne |

| | | internal: http://VirtualIP:9778 |

| | | RegionOne |

| | | public: http://VirtualIP:9778 |

| | | |

+-----------+-----------+----------------------------------------+
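A quick way to confirm that every service in the catalog has all three interfaces is to collect them from `openstack endpoint list`. A sketch; `check_endpoint_interfaces` is a hypothetical helper that reads `service interface` pairs on stdin and prints any incomplete service:

```shell
#!/usr/bin/env bash
# Read "service interface" pairs on stdin and report every service that is
# missing one of the public/internal/admin endpoints. Non-zero exit if any.
check_endpoint_interfaces() {
  awk '
    { seen[$1] = seen[$1] " " $2 " " }
    END {
      bad = 0
      for (svc in seen)
        if (seen[svc] !~ / public / || seen[svc] !~ / internal / || seen[svc] !~ / admin /) {
          print "incomplete: " svc
          bad = 1
        }
      exit bad
    }'
}

# Example:
#   openstack endpoint list -f value -c "Service Name" -c Interface | check_endpoint_interfaces
```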

9.3 Install the Nova services (on all controller nodes)

[root@cont0$:/root]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api -y

9.4 Configure nova.conf (on all controller nodes)

[root@cont01:/root]# cp -p /etc/nova/nova.conf{,.bak}

[root@cont02:/etc/nova]# cp -p /etc/nova/nova.conf{,.bak}

[root@cont03:/root]# cp -p /etc/nova/nova.conf{,.bak}

[root@cont02:/etc/nova]# vim /etc/nova/nova.conf

[DEFAULT]
enabled_apis = osapi_compute,metadata
my_ip = 192.168.10.22
use_neutron = true
firewall_driver = nova.virt.firewall.NoopFirewallDriver
transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672
osapi_compute_listen=$my_ip
osapi_compute_listen_port=8774
metadata_listen=$my_ip
metadata_listen_port=8775
# agent_down_time and dhcp_agents_per_network are neutron.conf options; nova does not use them
agent_down_time = 30
report_interval=15
dhcp_agents_per_network = 3

[api]
auth_strategy = keystone

[api_database]
connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova_api

[cache]
backend=oslo_cache.memcache_pool
enabled=True
memcached_servers=cont01:11211,cont02:11211,cont03:11211

[database]
connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova

[glance]
api_servers = http://VirtualIP:9293

[keystone_authtoken]
auth_url = http://VirtualIP:5001/v3
memcached_servers=cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = nova
password = nova_typora

[oslo_concurrency]
lock_path = /var/lib/nova/tmp

[placement]
region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://VirtualIP:5001/v3
username = placement
password = placement_typora

##[placement_database]
##connection = mysql+pymysql://placement:PLACEMENT_typora@VirtualIP:3307/placement

[scheduler]
discover_hosts_in_cells_interval = 60

[vnc]
enabled = true
server_listen = $my_ip
server_proxyclient_address = $my_ip
##novncproxy_base_url=http://$my_ip:6080/vnc_auto.html
##novncproxy_host=$my_ip
##novncproxy_port=6080

[root@cont01:/etc/nova]# vim /etc/nova/nova.conf

[DEFAULT]
enabled_apis = osapi_compute,metadata
my_ip = 192.168.10.21
use_neutron = true
firewall_driver = nova.virt.firewall.NoopFirewallDriver
transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672
osapi_compute_listen=$my_ip
osapi_compute_listen_port=8774
metadata_listen=$my_ip
metadata_listen_port=8775

[api]
auth_strategy = keystone

[api_database]
connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova_api

[cache]
backend=oslo_cache.memcache_pool
enabled=True
memcached_servers=cont01:11211,cont02:11211,cont03:11211

[database]
connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova

[glance]
api_servers = http://VirtualIP:9293

[keystone_authtoken]
auth_url = http://VirtualIP:5001/v3
memcached_servers=cont01:11211,cont02:11211,cont03:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = nova
password = nova_typora

[oslo_concurrency]
lock_path = /var/lib/nova/tmp

[placement]
region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://VirtualIP:5001/v3
username = placement
password = placement_typora

##[placement_database]
##connection = mysql+pymysql://placement:PLACEMENT_typora@VirtualIP:3307/placement

[scheduler]
discover_hosts_in_cells_interval = 60

[vnc]
enabled = true
server_listen = $my_ip
server_proxyclient_address = $my_ip
##novncproxy_base_url=http://$my_ip:6080/vnc_auto.html
##novncproxy_host=$my_ip
##novncproxy_port=6080

[root@cont03:/etc/nova]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

my_ip = 192.168.10.23

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

osapi_compute_listen=$my_ip

osapi_compute_listen_port=8774

metadata_listen=$my_ip

metadata_listen_port=8775

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova_api

[cache]

backend=oslo_cache.memcache_pool

enabled=True

memcached_servers=cont01:11211,cont02:11211,cont03:11211

[database]

connection = mysql+pymysql://nova:NOVA_typora@VirtualIP:3307/nova

[glance]

api_servers = http://VirtualIP:9293

[keystone_authtoken]

auth_url = http://VirtualIP:5001/v3

memcached_servers=cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova_typora

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://VirtualIP:5001/v3

username = placement

password = placement_typora

##[placement_database]

##connection = mysql+pymysql://placement:PLACEMENT_typora@VirtualIP:3307/placement

[scheduler]

discover_hosts_in_cells_interval = 60

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

##novncproxy_base_url=http://$my_ip:6080/vnc_auto.html

##novncproxy_host=$my_ip

##novncproxy_port=6080
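The controller copies of nova.conf differ only in `my_ip`, so it helps to read the value back on each node after editing. The sketch below is a hypothetical helper. `ini_get` is not an OpenStack tool, and `crudini --get` does the same job if that package is installed. It assumes plain `key = value` lines, skips lines that start with `#` or `;`, and does not handle multi-line values.

```shell
#!/bin/sh
# ini_get FILE SECTION KEY
# Print the value of KEY inside [SECTION] of an INI-style file such as
# /etc/nova/nova.conf. Hypothetical helper: handles "key = value" and
# "key=value" lines, ignores comment lines, no multi-line values.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*[#;]/ { next }                  # skip comment lines
        /^[ \t]*[[]/ {                          # section header, e.g. [vnc]
            s = $0
            gsub(/^[ \t]*[[]|[]][ \t]*$/, "", s)
            in_sec = (s == section)
            next
        }
        in_sec {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)      # trim key
            gsub(/^[ \t]+|[ \t]+$/, "", v)      # trim value
            if (k == key) { print v; exit }
        }
    ' "$1"
}
```

For example, `ini_get /etc/nova/nova.conf DEFAULT my_ip` should print 192.168.10.21 on cont01 and 192.168.10.23 on cont03.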

9.5 Configure 00-nova-placement-api.conf

[root@cont01:/root]# cp -p /etc/httpd/conf.d/00-nova-placement-api.conf{,.bak}

[root@cont02:/root]# cp -p /etc/httpd/conf.d/00-nova-placement-api.conf{,.bak}

[root@cont03:/root]# cp -p /etc/httpd/conf.d/00-nova-placement-api.conf{,.bak}

[root@cont02:/root]# vim /etc/httpd/conf.d/00-nova-placement-api.conf

// Append at the end of the file

#Placement API

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

[root@cont01:/root]# vim /etc/httpd/conf.d/00-nova-placement-api.conf

// Append at the end of the file

#Placement API

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

[root@cont03:/root]# vim /etc/httpd/conf.d/00-nova-placement-api.conf

// Append at the end of the file

#Placement API

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>
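The same block is appended by hand on all three controllers, so re-running the step can leave duplicate `<Directory /usr/bin>` sections. The sketch below appends the block only once. It assumes the file path shown above and treats a line starting with `<Directory /usr/bin>` as a good-enough marker. The function name is hypothetical.

```shell
#!/bin/sh
# append_placement_block FILE
# Append the <Directory /usr/bin> access block for the Placement API to FILE
# unless such a block is already present, so the step can be re-run safely.
append_placement_block() {
    conf="$1"
    if grep -q '^<Directory /usr/bin>' "$conf"; then
        return 0                      # already configured, nothing to do
    fi
    cat >> "$conf" <<'EOF'
#Placement API
<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
EOF
}
```

Usage on each controller: `append_placement_block /etc/httpd/conf.d/00-nova-placement-api.conf && systemctl restart httpd`.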

[root@cont01:/root]# systemctl restart httpd

[root@cont02:/root]# systemctl restart httpd

[root@cont03:/root]# systemctl restart httpd

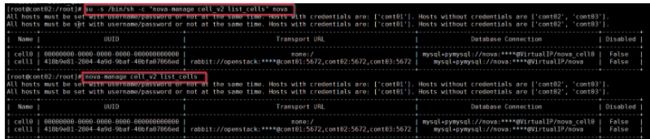

9.6 Synchronize the Nova databases (on any one controller node)

[root@cont02:/root]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@cont02:/root]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

[root@cont02:/root]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

All hosts must be set with username/password or not at the same time. Hosts with credentials are: ['cont01']. Hosts without credentials are ['cont02', 'cont03'].

All hosts must be set with username/password or not at the same time. Hosts with credentials are: ['cont01']. Hosts without credentials are ['cont02', 'cont03'].

418b9e81-2804-4a9d-9baf-40bfa07066ed

[root@cont02:/root]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

[root@cont02:/root]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+-----------------------------------

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+-----------------------------------

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@VirtualIP/nova_cell0 | False |

| cell1 | 418b9e81-2804-4a9d-9baf-40bfa07066ed | rabbit://openstack:****@cont01:5672,cont02:5672,cont03:5672 | mysql+pymysql://nova:****@VirtualIP/nova | False |

+-------+--------------------------------------+-----------------------------------

9.6 Start the Nova services (on all controller nodes: cont01, cont02, cont03)

[root@cont0$:/root]#

systemctl enable openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl status openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service | grep active
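`systemctl status ... | grep active` mixes the output of five units together, which makes a stopped unit easy to miss. The sketch below instead names each unit that is not active. It only wraps `systemctl is-active --quiet`, which exits 0 when a unit is active. The function and variable names are illustrative.

```shell
#!/bin/sh
# Controller-side Nova units started in this step.
NOVA_CONTROLLER_UNITS="openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy"

# check_units UNIT...
# Print every unit that is not active; return non-zero if any were found.
check_units() {
    failed=0
    for unit in "$@"; do
        if ! systemctl is-active --quiet "$unit"; then
            echo "NOT ACTIVE: $unit"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: `check_units $NOVA_CONTROLLER_UNITS`. The variable is left unquoted on purpose so that it splits into separate unit names.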

9.7 Verify

[root@cont02:/root]# . admin-openrc

[root@cont02:/root]# openstack compute service list

+----+------------------+--------+----------+---------+-------+------------

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+--------+----------+---------+-------+------------

| 1 | nova-scheduler | cont01 | internal | enabled | up | 2020-01-17T05:52:27.000000 |

| 13 | nova-conductor | cont01 | internal | enabled | up | 2020-01-17T05:52:32.000000 |

| 16 | nova-consoleauth | cont01 | internal | enabled | up | 2020-01-17T05:52:25.000000 |

| 28 | nova-scheduler | cont02 | internal | enabled | up | 2020-01-17T05:52:25.000000 |

| 43 | nova-conductor | cont02 | internal | enabled | up | 2020-01-17T05:52:32.000000 |

| 49 | nova-consoleauth | cont02 | internal | enabled | up | 2020-01-17T05:52:31.000000 |

| 67 | nova-conductor | cont03 | internal | enabled | up | 2020-01-17T05:52:27.000000 |

| 70 | nova-scheduler | cont03 | internal | enabled | up | 2020-01-17T05:52:26.000000 |

| 82 | nova-consoleauth | cont03 | internal | enabled | up | 2020-01-17T05:52:32.000000 |

+----+------------------+--------+----------+---------+-------+------------

[root@cont02:/root]# openstack catalog list

+-----------+-----------+----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+----------------------------------------+

| nova | compute | RegionOne |

| | | public: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | admin: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | internal: http://VirtualIP:9774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | admin: http://VirtualIP:9293 |

| | | RegionOne |

| | | internal: http://VirtualIP:9293 |

| | | RegionOne |

| | | public: http://VirtualIP:9293 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | admin: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | public: http://VirtualIP:5001/v3/ |

| | | |

| placement | placement | RegionOne |

| | | admin: http://VirtualIP:9778 |

| | | RegionOne |

| | | internal: http://VirtualIP:9778 |

| | | RegionOne |

| | | public: http://VirtualIP:9778 |

| | | |

+-----------+-----------+----------------------------------------+

[root@cont02:/root]# nova-status upgrade check

+--------------------------------------------------------------------+

| Upgrade Check Results |

+--------------------------------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: No host mappings or compute nodes were found. Remember to |

| run command 'nova-manage cell_v2 discover_hosts' when new |

| compute hosts are deployed. |

+--------------------------------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: There are no compute resource providers in the Placement |

| service nor are there compute nodes in the database. |

| Remember to configure new compute nodes to report into the |

| Placement service. See |

| https://docs.openstack.org/nova/latest/user/placement.html |

| for more details. |

+--------------------------------------------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: API Service Version |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Request Spec Migration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Console Auths |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+
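A small filter can list any check whose result is not Success, so you don't have to read the table by eye. This sketch assumes the box layout shown above, where each `| Check: ... |` row is followed by a `| Result: ... |` row. nova-status also reports problems through a non-zero exit code, so the filter is mainly a readability aid. The function name is hypothetical.

```shell
#!/bin/sh
# nova_status_failures
# Read the table printed by `nova-status upgrade check` on stdin and print
# "<check>: <result>" for every check whose Result is not "Success".
# Exit status: 0 if every check succeeded, 1 otherwise.
nova_status_failures() {
    awk '
        BEGIN { bad = 0 }
        /^[|] Check:/ {
            check = $0
            sub(/^[|] Check: */, "", check)
            sub(/ *[|] *$/, "", check)
        }
        /^[|] Result:/ {
            res = $0
            sub(/^[|] Result: */, "", res)
            sub(/ *[|] *$/, "", res)
            if (res != "Success") { print check ": " res; bad = 1 }
        }
        END { exit bad }
    '
}
```

Usage: `nova-status upgrade check | nova_status_failures && echo "all checks passed"`.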

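9.8 Configure PCS resources (on any one controller node)

// Add the resources openstack-nova-api, openstack-nova-consoleauth, openstack-nova-scheduler, openstack-nova-conductor and openstack-nova-novncproxy

// Based on testing, stateless services such as openstack-nova-api, openstack-nova-consoleauth, openstack-nova-conductor and openstack-nova-novncproxy are recommended to run in active/active mode;

// Based on testing, services such as openstack-nova-scheduler are recommended to run in active/passive mode. The command below nevertheless creates it as a clone (active/active); omit "--clone interleave=true" to run it as a single active/passive resource.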

[root@cont01:/root]# pcs resource create openstack-nova-api systemd:openstack-nova-api --clone interleave=true

[root@cont01:/root]# pcs resource create openstack-nova-consoleauth systemd:openstack-nova-consoleauth --clone interleave=true

[root@cont01:/root]# pcs resource create openstack-nova-scheduler systemd:openstack-nova-scheduler --clone interleave=true

[root@cont01:/root]# pcs resource create openstack-nova-conductor systemd:openstack-nova-conductor --clone interleave=true

[root@cont01:/root]# pcs resource create openstack-nova-novncproxy systemd:openstack-nova-novncproxy --clone interleave=true

[root@cont01:/root]# pcs resource

VirtualIP (ocf::heartbeat:IPaddr2): Started cont01

Clone Set: openstack-glance-api-clone [openstack-glance-api]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-glance-registry-clone [openstack-glance-registry]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-nova-api-clone [openstack-nova-api]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-nova-consoleauth-clone [openstack-nova-consoleauth]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-nova-scheduler-clone [openstack-nova-scheduler]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-nova-conductor-clone [openstack-nova-conductor]

Started: [ cont01 cont02 cont03 ]

Clone Set: openstack-nova-novncproxy-clone [openstack-nova-novncproxy]

Started: [ cont01 cont02 cont03 ]
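The five `pcs resource create` commands above follow a single pattern, so they can be generated in a loop. The sketch below is hypothetical. It reproduces the author's commands exactly, including the clone for the scheduler, and adds a `--dry-run` switch that prints the commands for review before running them.

```shell
#!/bin/sh
# create_nova_clones [--dry-run]
# Create one cloned (active/active) systemd resource for each controller-side
# Nova service, equivalent to the five pcs commands above.
# With --dry-run the pcs commands are printed instead of executed.
create_nova_clones() {
    run=""
    if [ "$1" = "--dry-run" ]; then
        run="echo"
    fi
    for svc in openstack-nova-api openstack-nova-consoleauth \
               openstack-nova-scheduler openstack-nova-conductor \
               openstack-nova-novncproxy; do
        $run pcs resource create "$svc" "systemd:$svc" --clone interleave=true
    done
}
```

For the active/passive scheduler layout recommended in the note above, create openstack-nova-scheduler separately without `--clone` and drop it from the loop.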

The Nova controller nodes are now fully deployed.

9.9 Deploy the Nova compute nodes (comp01, comp02, comp03)

Run on compute nodes comp01, comp02 and comp03: [root@comp0$:/root]

yum install centos-release-openstack-rocky -y

yum update

yum install openstack-nova-compute -y

9.10 Passwordless SSH authentication between the compute nodes (run on each compute node)

ssh-keygen

ssh-copy-id comp01

ssh-copy-id comp02

ssh-copy-id comp03

9.11 Configure nova.conf on the compute nodes

[root@comp01:/etc/nova]# cp -p /etc/nova/nova.conf{,.bak}

[root@comp02:/etc/nova]# cp -p /etc/nova/nova.conf{,.bak}

[root@comp03:/etc/nova]# cp -p /etc/nova/nova.conf{,.bak}

[root@comp02:/etc/nova]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

my_ip = 192.168.10.18

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[api]

auth_strategy = keystone

[glance]

api_servers = http://VirtualIP:9293

[keystone_authtoken]

auth_url = http://VirtualIP:5001/v3

memcached_servers=cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova_typora

[libvirt]

virt_type = qemu

// Note: run "egrep -c '(vmx|svm)' /proc/cpuinfo" to check whether the host supports hardware acceleration. A result of 1 or more means it is supported; 0 means it is not.

// Note: use the "kvm" type if hardware acceleration is supported, otherwise use "qemu".

// Note: virtual machines generally do not expose hardware acceleration unless nested virtualization is enabled.

// Note: qemu is used on all nodes here. In testing, the command returned 8, but after setting virt_type = kvm, instance creation reported a nova type error and the instances ended up in ERROR state.

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://VirtualIP:5001/v3

username = placement

password = placement_typora

[vnc]

enabled=true

vncserver_listen=0.0.0.0

vncserver_proxyclient_address=$my_ip

novncproxy_base_url=http://192.168.10.20:6081/vnc_auto.html

// Note: novncproxy_base_url uses the literal IP 192.168.10.20 rather than the hostname VirtualIP, because using VirtualIP caused errors. This URL is opened by the user's browser, which usually cannot resolve a hostname that exists only in the cluster's /etc/hosts.

#novncproxy_host=$my_ip

#novncproxy_port=6080

[root@comp01:/root]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

my_ip = 192.168.10.19

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[api]

auth_strategy = keystone

[glance]

api_servers = http://VirtualIP:9293

[keystone_authtoken]

auth_url = http://VirtualIP:5001/v3

memcached_servers=cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova_typora

[libvirt]

virt_type = qemu

// Note: run "egrep -c '(vmx|svm)' /proc/cpuinfo" to check whether the host supports hardware acceleration. A result of 1 or more means it is supported; 0 means it is not.

// Note: use the "kvm" type if hardware acceleration is supported, otherwise use "qemu".

// Note: virtual machines generally do not expose hardware acceleration unless nested virtualization is enabled.

// Note: qemu is used on all nodes here. In testing, the command returned 8, but after setting virt_type = kvm, instance creation reported a nova type error and the instances ended up in ERROR state.

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://VirtualIP:5001/v3

username = placement

password = placement_typora

[vnc]

enabled=true

vncserver_listen=0.0.0.0

vncserver_proxyclient_address=$my_ip

novncproxy_base_url=http://192.168.10.20:6081/vnc_auto.html

// Note: novncproxy_base_url uses the literal IP 192.168.10.20 rather than the hostname VirtualIP, because using VirtualIP caused errors.

[root@comp03:/root]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

my_ip = 192.168.10.17

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[api]

auth_strategy = keystone

[glance]

api_servers = http://VirtualIP:9293

[keystone_authtoken]

auth_url = http://VirtualIP:5001/v3

memcached_servers=cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova_typora

[libvirt]

virt_type = qemu

// Note: run "egrep -c '(vmx|svm)' /proc/cpuinfo" to check whether the host supports hardware acceleration. A result of 1 or more means it is supported; 0 means it is not.

// Note: use the "kvm" type if hardware acceleration is supported, otherwise use "qemu".

// Note: virtual machines generally do not expose hardware acceleration unless nested virtualization is enabled.

// Note: qemu is used on all nodes here. In testing, the command returned 8, but after setting virt_type = kvm, instance creation reported a nova type error and the instances ended up in ERROR state.

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://VirtualIP:5001/v3

username = placement

password = placement_typora

[vnc]

enabled=true

vncserver_listen=0.0.0.0

vncserver_proxyclient_address=$my_ip

novncproxy_base_url=http://192.168.10.20:6081/vnc_auto.html

// Note: novncproxy_base_url uses the literal IP 192.168.10.20 rather than the hostname VirtualIP, because using VirtualIP caused errors.
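The notes above describe the hardware-acceleration check as a manual step. The sketch below turns it into a suggested `virt_type` value. It also requires /dev/kvm to exist, because in the author's test the vmx/svm flag alone did not guarantee a working kvm setup. The function name and the /dev/kvm condition are assumptions added here. The author's choice of qemu on every node remains the safe default.

```shell
#!/bin/sh
# choose_virt_type [CPUINFO] [KVM_DEVICE]
# Suggest a value for [libvirt] virt_type: "kvm" when the CPU flags include
# vmx (Intel VT-x) or svm (AMD-V) AND the KVM device node exists,
# otherwise "qemu". Defaults: /proc/cpuinfo and /dev/kvm.
choose_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    kvm_dev="${2:-/dev/kvm}"
    # grep -c prints the number of matching lines; "|| true" keeps a zero
    # count (grep exit status 1) from aborting scripts run with "set -e".
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" || true)
    if [ "${count:-0}" -gt 0 ] && [ -e "$kvm_dev" ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

Usage on a compute node: `choose_virt_type`, then compare the result with the `virt_type` line in [libvirt].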

9.12 Start the compute node services (run on comp01, comp02 and comp03)

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl restart libvirtd.service openstack-nova-compute.service

systemctl status libvirtd.service openstack-nova-compute.service

9.13 Add the compute nodes to the cell database (on any one controller node)

[root@cont02:/root]# . admin-openrc

[root@cont02:/root]# openstack compute service list --service nova-compute

+----+--------------+--------+------+---------+-------+----------------------------

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+--------+------+---------+-------+----------------------------

| 91 | nova-compute | comp02 | nova | enabled | up | 2020-01-17T12:26:33.000000 |

| 94 | nova-compute | comp01 | nova | enabled | up | 2020-01-17T12:26:29.000000 |

| 97 | nova-compute | comp03 | nova | enabled | up | 2020-01-17T12:26:36.000000 |

+----+--------------+--------+------+---------+-------+----------------------------

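// Manually discover the compute hosts, i.e. add them to the cell database. The controllers set [scheduler] discover_hosts_in_cells_interval = 60, so the scheduler may already have mapped them; in that case the command reports 0 unmapped computes, as below.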

[root@cont02:/root]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

All hosts must be set with username/password or not at the same time. Hosts with credentials are: ['cont01']. Hosts without credentials are ['cont02', 'cont03'].

All hosts must be set with username/password or not at the same time. Hosts with credentials are: ['cont01']. Hosts without credentials are ['cont02', 'cont03'].

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 418b9e81-2804-4a9d-9baf-40bfa07066ed

Found 0 unmapped computes in cell: 418b9e81-2804-4a9d-9baf-40bfa07066ed

[root@cont02:/root]# openstack hypervisor list

+----+---------------------+-----------------+---------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP | State |

+----+---------------------+-----------------+---------------+-------+

| 1 | comp01 | QEMU | 192.168.10.19 | up |

| 4 | comp02 | QEMU | 192.168.10.18 | up |

| 7 | comp03 | QEMU | 192.168.10.17 | up |

+----+---------------------+-----------------+---------------+-------+
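The Host IP column is where a wrong `my_ip` in a compute node's nova.conf shows up, for example two nodes reporting the same address. The sketch below is a hypothetical checker. It reads "hostname ip" pairs on stdin and compares them with the expected mapping given as HOST=IP arguments. It assumes the OpenStack client's value formatter, which prints the selected columns separated by spaces.

```shell
#!/bin/sh
# check_hypervisor_ips HOST=IP...
# Read "hostname ip" pairs on stdin and report any host whose IP differs
# from the expected value given as HOST=IP arguments.
check_hypervisor_ips() {
    rc=0
    while read -r host ip _rest; do
        for pair in "$@"; do
            if [ "${pair%%=*}" = "$host" ] && [ "${pair#*=}" != "$ip" ]; then
                echo "$host reports $ip, expected ${pair#*=}"
                rc=1
            fi
        done
    done
    return "$rc"
}
```

Usage: `openstack hypervisor list -f value -c "Hypervisor Hostname" -c "Host IP" | check_hypervisor_ips comp01=192.168.10.19 comp02=192.168.10.18 comp03=192.168.10.17`.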

[root@cont02:/root]# openstack compute service list

// Note: this command lists the compute services. Status shows whether a service is enabled; State shows whether it is actually running (up).

+----+------------------+--------+----------+---------+-------+--------------------

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+--------+----------+---------+-------+--------------------

| 1 | nova-scheduler | cont01 | internal | enabled | up | 2020-01-17T12:28:17.000000 |

| 13 | nova-conductor | cont01 | internal | enabled | up | 2020-01-17T12:28:14.000000 |

| 16 | nova-consoleauth | cont01 | internal | enabled | up | 2020-01-17T12:28:18.000000 |

| 28 | nova-scheduler | cont02 | internal | enabled | up | 2020-01-17T12:28:20.000000 |

| 43 | nova-conductor | cont02 | internal | enabled | up | 2020-01-17T12:28:21.000000 |

| 49 | nova-consoleauth | cont02 | internal | enabled | up | 2020-01-17T12:28:19.000000 |

| 67 | nova-conductor | cont03 | internal | enabled | up | 2020-01-17T12:28:18.000000 |

| 70 | nova-scheduler | cont03 | internal | enabled | up | 2020-01-17T12:28:21.000000 |

| 82 | nova-consoleauth | cont03 | internal | enabled | up | 2020-01-17T12:28:14.000000 |

| 91 | nova-compute | comp02 | nova | enabled | up | 2020-01-17T12:28:23.000000 |

| 94 | nova-compute | comp01 | nova | enabled | up | 2020-01-17T12:28:19.000000 |

| 97 | nova-compute | comp03 | nova | enabled | up | 2020-01-17T12:28:16.000000 |

+----+------------------+--------+----------+---------+-------+--------------------
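With twelve services in the list, a service that is disabled or down is easy to overlook. The sketch below prints only the problem rows. It is a hypothetical filter and assumes the client's value formatter output in the column order Binary, Host, Status, State.

```shell
#!/bin/sh
# down_services
# Read "binary host status state" lines on stdin and print every service
# that is not both enabled and up. Exit status 1 if any such line exists.
down_services() {
    awk 'BEGIN { bad = 0 }
         $3 != "enabled" || $4 != "up" { print; bad = 1 }
         END { exit bad }'
}
```

Usage: `openstack compute service list -f value -c Binary -c Host -c Status -c State | down_services`.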

[root@cont02:/root]# nova-status upgrade check

// Note: the Cells v2, Placement API and Resource Providers checks must all succeed.

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: API Service Version |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Request Spec Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Console Auths |

| Result: Success |

| Details: None |

+--------------------------------+

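10. Neutron Controller/Network Node Cluster

neutron-server (API, port 9696): accepts and responds to external network management requests

neutron-linuxbridge-agent: creates the bridge interfaces (this deployment uses the Open vSwitch agent instead)

neutron-dhcp-agent: assigns IP addresses

neutron-metadata-agent: works with the Nova metadata API to customize instances

neutron-l3-agent: provides layer-3 (network layer) services such as routing and NAT for self-service networks, for example VXLAN tenant networks

**Neutron Server:** exposes the OpenStack Networking API, receives requests, and calls the plugin to process them.

**Plugin:** processes requests from the Neutron Server, maintains the state of the OpenStack logical networks, and calls the agents to carry out the requests.

**Agent:** handles plugin requests and actually implements the network functions on the network provider.

**Network provider:** the virtual or physical network device that provides network services, such as Linux Bridge, Open vSwitch, or any other physical switch that supports Neutron.

**Queue:** the Neutron Server, plugin and agents communicate with and invoke each other through the message queue.

**Database:** stores the OpenStack network state, including networks, subnets, ports, routers and so on.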

10.1 Create the neutron database (on any one controller node; the database cluster replicates it to the other nodes automatically)

[root@cont02:/root]# mysql -uroot -p"typora#2019"

MariaDB [(none)]> CREATE DATABASE neutron;

Query OK, 1 row affected (0.009 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_typora';

Query OK, 0 rows affected (0.009 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_typora';

Query OK, 0 rows affected (0.011 sec)

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

Bye
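The CREATE DATABASE / GRANT pattern in 10.1 is the same one used for the other service databases. The sketch below is a hypothetical helper that prints the statements for any service. It adds `IF NOT EXISTS`, which MariaDB supports, so a re-run does not fail. That clause is an addition to the author's statements. The password is inserted verbatim and must not contain a single quote.

```shell
#!/bin/sh
# service_db_sql DB USER PASSWORD
# Print the CREATE DATABASE / GRANT statements for one service database,
# granting access both from localhost and from any host ('%').
service_db_sql() {
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $1;
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'localhost' IDENTIFIED BY '$3';
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'%' IDENTIFIED BY '$3';
FLUSH PRIVILEGES;
EOF
}
```

Usage: `service_db_sql neutron neutron NEUTRON_typora | mysql -uroot -p"typora#2019"`.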

10.2 Create the neutron user, assign its role, and create the service entity

[root@cont02:/root]# source openrc

[root@cont02:/root]# openstack user create --domain default --password=neutron_typora neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | a80531c8a7534a30954246b1eefd74d1 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

// Grant the neutron user the admin role in the service project

[root@cont02:/root]# openstack role add --project service --user neutron admin

// Create the neutron service entity with type "network"

[root@cont02:/root]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | a0a29f234103480ebc93457753d59341 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

[root@cont02:/root]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 28ed51dbfde848f791e70a3be574c143 | nova | compute |

| 369d083b4a094c1fb57e189d54305ea9 | glance | image |

| 66fbd70e526f48828b5a18cb7aaf4d1b | keystone | identity |

| a0a29f234103480ebc93457753d59341 | neutron | network |

| dba3c60da5084dfca6b220fe666c2f9b | placement | placement |

+----------------------------------+-----------+-----------+

10.3 Create the neutron API endpoints

// The neutron API service type is network

[root@cont02:/root]# openstack endpoint create --region RegionOne network public http://VirtualIP:9997

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 670dfb6bb8ba4b0eb29cf5ce117fa7f7 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a0a29f234103480ebc93457753d59341 |

| service_name | neutron |

| service_type | network |

| url | http://VirtualIP:9997 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne network internal http://VirtualIP:9997

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2c6b3657b8bd431586934cc9dde33f84 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a0a29f234103480ebc93457753d59341 |

| service_name | neutron |

| service_type | network |

| url | http://VirtualIP:9997 |

+--------------+----------------------------------+

[root@cont02:/root]# openstack endpoint create --region RegionOne network admin http://VirtualIP:9997

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5ff90d7cff57495d80338ef7299319d3 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a0a29f234103480ebc93457753d59341 |

| service_name | neutron |

| service_type | network |

| url | http://VirtualIP:9997 |

+--------------+----------------------------------+
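The three `openstack endpoint create` calls differ only in the interface name. Generating them in a loop keeps the URL identical for public, internal and admin. The sketch below is a hypothetical wrapper with a `--dry-run` switch that prints the commands for review. It assumes the RegionOne region used throughout this guide.

```shell
#!/bin/sh
# create_endpoints SERVICE_TYPE URL [--dry-run]
# Create the public, internal and admin endpoints of one service in
# RegionOne, all with the same URL.
create_endpoints() {
    run=""
    if [ "$3" = "--dry-run" ]; then
        run="echo"
    fi
    for iface in public internal admin; do
        $run openstack endpoint create --region RegionOne "$1" "$iface" "$2"
    done
}
```

Usage: `create_endpoints network http://VirtualIP:9997`.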

[root@cont02:/root]# openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+----

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+----

| 2c6b3657b8bd431586934cc9dde33f84 | RegionOne | neutron | network | True | internal | http://VirtualIP:9997 |

| 2e109052bb4a4affa30fe3b9e3e5fcc3 | RegionOne | keystone | identity | True | internal | http://VirtualIP:5001/v3/ |

| 3ae6c07e8c1844b3a21c3fc073cd3da9 | RegionOne | nova | compute | True | public | http://VirtualIP:9774/v2.1 |

| 40e4fa83731d4933afe694481b5e0464 | RegionOne | glance | image | True | admin | http://VirtualIP:9293 |

| 53be3d592dfa4060b46ca6a488067191 | RegionOne | placement | placement | True | admin | http://VirtualIP:9778 |

| 5ff90d7cff57495d80338ef7299319d3 | RegionOne | neutron | network | True | admin | http://VirtualIP:9997 |

| 670dfb6bb8ba4b0eb29cf5ce117fa7f7 | RegionOne | neutron | network | True | public | http://VirtualIP:9997 |

| 7bff1a44974a42a59e49eebffad550c0 | RegionOne | nova | compute | True | admin | http://VirtualIP:9774/v2.1 |

| 8ddb366df7e94af9af298b5f11774fb4 | RegionOne | keystone | identity | True | admin | http://VirtualIP:5001/v3/ |

| 9035afba42be4b4387571d02b16c168c | RegionOne | placement | placement | True | internal | http://VirtualIP:9778 |

| a592cb41c0bb424c9817633ed1946b45 | RegionOne | keystone | identity | True | public | http://VirtualIP:5001/v3/ |

| b0f71d34aedf41a9a8fb9d56313efb00 | RegionOne | nova | compute | True | internal | http://VirtualIP:9774/v2.1 |

| b7b0084313744b8a91a142b1221e0443 | RegionOne | glance | image | True | internal | http://VirtualIP:9293 |

| c2a1f308b3c04a448667967afb6016fe | RegionOne | placement | placement | True | public | http://VirtualIP:9778 |

| fdb2cdadfb7544abad1f216ca719f478 | RegionOne | glance | image | True | public | http://VirtualIP:9293 |

+----------------------------------+-----------+--------------+--------------+---------+----

Note: if an endpoint was created by mistake, delete it with: # openstack endpoint delete ff76f2ea08914c98ad6e8fee3a789498
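A typo in one of the three URLs of a service is easy to miss in the long endpoint table. The sketch below reads the URLs of one service on stdin and succeeds only when they are all identical. The function name is hypothetical.

```shell
#!/bin/sh
# same_url
# Read endpoint URLs on stdin, one per line. Succeed if there is at least
# one URL and all of them are identical; otherwise print the distinct
# values and fail.
same_url() {
    urls=$(sort -u)
    # Count non-empty distinct lines; "|| true" keeps a count of 0 from
    # aborting under "set -e".
    n=$(printf '%s\n' "$urls" | grep -c . || true)
    if [ "$n" -ne 1 ]; then
        printf 'endpoints disagree or are missing:\n%s\n' "$urls"
        return 1
    fi
}
```

Usage: `openstack endpoint list --service network -f value -c URL | same_url`.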

[root@cont02:/root]# openstack catalog list

+-----------+-----------+----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+----------------------------------------+

| nova | compute | RegionOne |

| | | public: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | admin: http://VirtualIP:9774/v2.1 |

| | | RegionOne |

| | | internal: http://VirtualIP:9774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | admin: http://VirtualIP:9293 |

| | | RegionOne |

| | | internal: http://VirtualIP:9293 |

| | | RegionOne |

| | | public: http://VirtualIP:9293 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | admin: http://VirtualIP:5001/v3/ |

| | | RegionOne |

| | | public: http://VirtualIP:5001/v3/ |

| | | |

| neutron | network | RegionOne |

| | | internal: http://VirtualIP:9997 |

| | | RegionOne |

| | | admin: http://VirtualIP:9997 |

| | | RegionOne |

| | | public: http://VirtualIP:9997 |

| | | |

| placement | placement | RegionOne |

| | | admin: http://VirtualIP:9778 |

| | | RegionOne |

| | | internal: http://VirtualIP:9778 |

| | | RegionOne |

| | | public: http://VirtualIP:9778 |

| | | |

+-----------+-----------+----------------------------------------+

10.4 Deploy Neutron (install on all controller nodes)

We install Networking Option 2: Self-service networks.

On the controller nodes (note: here we install openvswitch instead of openstack-neutron-linuxbridge):

10.4.1 Install the Neutron packages

[root@cont01:/root]# yum install openstack-neutron openstack-neutron-ml2 openvswitch openstack-neutron-openvswitch ebtables -y

[root@cont02:/root]# yum install openstack-neutron openstack-neutron-ml2 openvswitch openstack-neutron-openvswitch ebtables -y

[root@cont03:/root]# yum install openstack-neutron openstack-neutron-ml2 openvswitch openstack-neutron-openvswitch ebtables -y

10.4.2 Configure neutron.conf

[root@cont01:/root]# cp -p /etc/neutron/neutron.conf{,.bak}

[root@cont02:/root]# cp -p /etc/neutron/neutron.conf{,.bak}

[root@cont03:/root]# cp -p /etc/neutron/neutron.conf{,.bak}

// Note the ownership of neutron.conf: it must remain root:neutron

// Configure neutron.conf

[root@cont02:/root]# vim /etc/neutron/neutron.conf

[DEFAULT]

bind_host = 192.168.10.22

auth_strategy = keystone

core_plugin = ml2

allow_overlapping_ips = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

service_plugins = router

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[database]

connection = mysql+pymysql://neutron:NEUTRON_typora@VirtualIP:3307/neutron

[keystone_authtoken]

www_authenticate_uri = http://VirtualIP:5001/v3

auth_url = http://VirtualIP:5001/v3

memcached_servers=cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = neutron_typora

[nova]

auth_url = http://VirtualIP:5001/v3

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova_typora

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[root@cont01:/root]# vim /etc/neutron/neutron.conf

[DEFAULT]

bind_host = 192.168.10.21

auth_strategy = keystone

core_plugin = ml2

allow_overlapping_ips = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

service_plugins = router

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[database]

connection = mysql+pymysql://neutron:NEUTRON_typora@VirtualIP:3307/neutron

[keystone_authtoken]

www_authenticate_uri = http://VirtualIP:5001/v3

auth_url = http://VirtualIP:5001/v3

memcache_servers = cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = neutron_typora

[nova]

auth_url = http://VirtualIP:5001/v3

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova_typora

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[root@cont03:/root]# vim /etc/neutron/neutron.conf

[DEFAULT]

bind_host = 192.168.10.23

auth_strategy = keystone

core_plugin = ml2

allow_overlapping_ips = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

service_plugins = router

transport_url = rabbit://openstack:adminopenstack@cont01:5672,openstack:adminopenstack@cont02:5672,openstack:adminopenstack@cont03:5672

[database]

connection = mysql+pymysql://neutron:NEUTRON_typora@VirtualIP:3307/neutron

[keystone_authtoken]

www_authenticate_uri = http://VirtualIP:5001/v3

auth_url = http://VirtualIP:5001/v3

memcache_servers = cont01:11211,cont02:11211,cont03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = neutron_typora

[nova]

auth_url = http://VirtualIP:5001/v3

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova_typora

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp
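All three neutron.conf files use the same transport_url. It lists every RabbitMQ node so that oslo.messaging can fail over to another broker. The following is a minimal sketch that builds that string from a host list. It assumes the openstack/adminopenstack credentials and the cont01 to cont03 host names used in this article, and it relies on port 5672, the RabbitMQ AMQP default. `build_transport_url` is a hypothetical helper, not an OpenStack tool. It does not percent-encode passwords, so a password containing characters such as `@` or `:` would need encoding first.

```bash
#!/usr/bin/env bash
# Sketch: build an oslo.messaging transport_url listing every RabbitMQ node.
# Assumption: credentials and host names are the example values from this article.
set -euo pipefail

# build_transport_url USER PASSWORD HOST [HOST...]  (hypothetical helper)
build_transport_url() {
    local user=$1 pass=$2 url="" host
    shift 2
    for host in "$@"; do
        # Append "user:password@host:5672", comma-separated after the first entry.
        url+="${url:+,}${user}:${pass}@${host}:5672"
    done
    printf 'rabbit://%s\n' "$url"
}

build_transport_url openstack adminopenstack cont01 cont02 cont03
```

The output matches the transport_url line in the listings above, so the same helper can be reused if a fourth controller is ever added.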

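// Check the effective settings on each controller (cont0$ stands for cont01, cont02 or cont03; blank and comment lines are filtered out):

[root@cont0$:/root]# egrep -v "^$|^#" /etc/neutron/neutron.conf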
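Because bind_host is the only per-node difference, it is easy to copy a file to the wrong controller. This rough sketch checks the value mechanically. It assumes the host-to-IP mapping used in this article: cont01 = 192.168.10.21, cont02 = 192.168.10.22 and cont03 = 192.168.10.23. `ini_get`, `expected_bind_host` and `check_bind_host` are hypothetical helpers written in plain awk, and `ini_get` only handles simple `key = value` lines.

```bash
#!/usr/bin/env bash
# Sketch: verify bind_host in neutron.conf against the node's expected IP.
# Assumption: the host->IP map below is the one used in this article.
set -euo pipefail

# ini_get FILE SECTION KEY -> prints the value of KEY inside [SECTION]
ini_get() {
    awk -v sec="[$2]" -v key="$3" '
        # A "[...]" line starts a new section; remember whether it is ours.
        /^[ \t]*\[/ { insec = ($1 == sec); next }
        # Uncommented "key = value" line inside the wanted section.
        insec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
            sub(/^[^=]*=[ \t]*/, "")   # drop the "key =" prefix
            sub(/[ \t]+$/, "")         # trim trailing blanks
            print
            exit
        }' "$1"
}

# expected_bind_host HOSTNAME -> management IP (hypothetical mapping)
expected_bind_host() {
    case "$1" in
        cont01) echo 192.168.10.21 ;;
        cont02) echo 192.168.10.22 ;;
        cont03) echo 192.168.10.23 ;;
        *) return 1 ;;
    esac
}

# check_bind_host FILE HOSTNAME -> exit status 0 if bind_host matches
check_bind_host() {
    local want got
    want=$(expected_bind_host "$2") || return 1
    got=$(ini_get "$1" DEFAULT bind_host)
    [ "$got" = "$want" ]
}

# Hypothetical usage on a controller:
#   check_bind_host /etc/neutron/neutron.conf "$(hostname -s)" && echo "bind_host OK"
```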

10.4.3 Configure ml2_conf.ini (on all controller nodes)

[root@cont01:/root]# cp -p /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

[root@cont02:/root]# cp -p /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

[root@cont03:/root]# cp -p /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

[root@cont02:/root]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

[root@cont01:/root]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

[root@cont03:/root]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true
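The ml2 settings above imply a few numbers that are useful when planning tenant networks. This small sketch works through the arithmetic and assumes an IPv4 underlay with a standard 1500-byte MTU. With vni_ranges = 1:1000, 1000 tenant VXLAN networks can be created out of a 24-bit VNI space. VXLAN encapsulation costs 50 bytes: outer IPv4 (20) + UDP (8) + VXLAN header (8) + inner Ethernet (14). Neutron subtracts this overhead from global_physnet_mtu, which defaults to 1500, so instances on VXLAN networks typically get an MTU of 1450. The function names are illustrative only.

```bash
#!/usr/bin/env bash
# Sketch: arithmetic behind type_drivers = flat,vxlan and vni_ranges = 1:1000.
# Assumption: IPv4 underlay with a 1500-byte physical MTU.
set -euo pipefail

# vni_count RANGE -> number of VNIs in a vni_ranges entry such as "1:1000"
vni_count() {
    local first=${1%%:*} last=${1##*:}
    echo $(( last - first + 1 ))
}

# max_vni -> largest VNI; the VXLAN header carries a 24-bit network identifier
max_vni() {
    echo $(( (1 << 24) - 1 ))
}

# vxlan_instance_mtu PHYS_MTU -> MTU left for instances over an IPv4 underlay
# Overhead: outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14) = 50
vxlan_instance_mtu() {
    echo $(( $1 - (20 + 8 + 8 + 14) ))
}

echo "tenant networks from vni_ranges 1:1000: $(vni_count 1:1000)"      # -> 1000
echo "largest possible VNI: $(max_vni)"                                 # -> 16777215
echo "instance MTU with a 1500-byte underlay: $(vxlan_instance_mtu 1500)" # -> 1450
```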

10.4.4 Configure Open vSwitch (on all controller nodes)

[root@cont01:/root]# cp -p /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

[root@cont02:/root]# cp -p /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

[root@cont03:/root]# cp -p /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

[root@cont02:/root]# vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[agent]

tunnel_types = vxlan

l2_population = true

##arp_responder = true

[ovs]

##integration_bridge = br-int

tunnel_bridge = br-tun

##int_peer_patch_port = patch-tun

##tun_peer_patch_port = patch-int

##// Note: local_ip must be the IP address of the second NIC (the tunnel network)

local_ip = 10.10.10.22

datapath_type = system

bridge_mappings = public:br-ex

[securitygroup]

firewall_driver = iptables_hybrid

enable_security_group = true

[root@cont01:/root]# vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[agent]

tunnel_types = vxlan

l2_population = true

##arp_responder = true

[ovs]

##integration_bridge = br-int

tunnel_bridge = br-tun

##int_peer_patch_port = patch-tun

##tun_peer_patch_port = patch-int

##// Note: local_ip must be the IP address of the second NIC (the tunnel network)

local_ip = 10.10.10.21

datapath_type = system

bridge_mappings = public:br-ex

[securitygroup]

firewall_driver = iptables_hybrid

enable_security_group = true

[root@cont03:/root]# vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[agent]

tunnel_types = vxlan

l2_population = true

##arp_responder = true

[ovs]

##integration_bridge = br-int

tunnel_bridge = br-tun

##int_peer_patch_port = patch-tun

##tun_peer_patch_port = patch-int

##// Note: local_ip must be the IP address of the second NIC (the tunnel network)

local_ip = 10.10.10.23

##enable_tunneling = True

datapath_type = system

bridge_mappings = public:br-ex

[securitygroup]

firewall_driver = iptables_hybrid

enable_security_group = true
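local_ip is this node's VXLAN tunnel endpoint, so it must be one of the node's addresses on the tunnel network (the second NIC). This sketch runs a subnet check in pure bash. It assumes the tunnel network is 10.10.10.0/24, a value inferred from the local_ip addresses 10.10.10.21 to 10.10.10.23 above rather than stated by the article. `ip_to_int` and `in_subnet` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: check that an IPv4 address lies inside a CIDR block.
# Assumption: the tunnel network is 10.10.10.0/24 (inferred, adjust as needed).
set -euo pipefail

# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"          # split the dotted quad into four octets
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# in_subnet IP CIDR -> exit status 0 if IP lies inside CIDR (e.g. 10.10.10.0/24)
in_subnet() {
    local ip net bits mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # Netmask with the top "bits" bits set, limited to 32 bits.
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}

# Hypothetical usage on a controller:
#   local_ip=$(awk -F' *= *' '$1 == "local_ip" {print $2}' /etc/neutron/plugins/ml2/openvswitch_agent.ini)
#   in_subnet "$local_ip" 10.10.10.0/24 && echo "local_ip is on the tunnel network"
```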

10.4.5 Configure l3_agent.ini (self-service networking) (on all controller nodes: cont01, cont02, cont03)

[root@cont0$:/root]# cp -p /etc/neutron/l3_agent.ini{,.bak}

[root@cont0$:/root]# vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = openvswitch

# Or, equivalently: interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

external_network_bridge = br-ex

To see which node hosts the master (active) L3 agent for a router, run `neutron l3-agent-list-hosting-router <router-id>`.

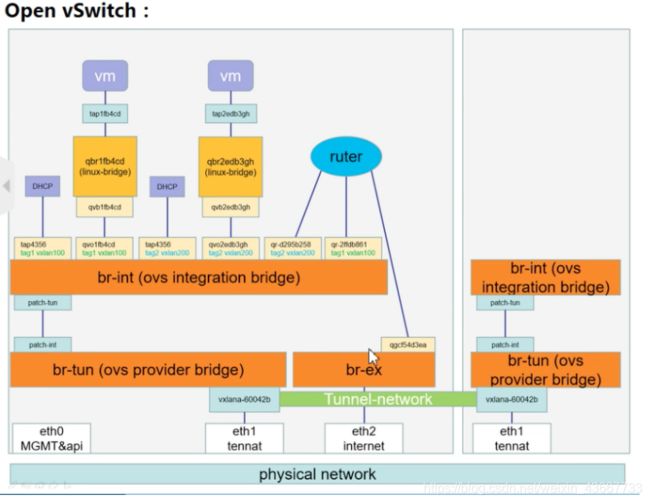

Open vSwitch architecture reference diagram

10.4.6 Configure dhcp_agent.ini (on all controller nodes: cont01, cont02, cont03)

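Note: the DHCP protocol itself allows several DHCP servers on one network. Deploying the DHCP agent on every network node and setting the related options is therefore enough to make DHCP for tenant networks highly available. One such option is dhcp_agents_per_network in neutron.conf, which sets how many DHCP agents serve each network.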

[root@cont0$:/root]# cp -p /etc/neutron/dhcp_agent.ini{,.bak}

[root@cont0$:/root]# vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

10.4.7 Configure metadata_agent.ini (on all controller nodes: cont01, cont02, cont03)

[root@cont0$:/root]# cp -p /etc/neutron/metadata_agent.ini{,.bak}

[root@cont0$:/root]# vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = VirtualIP

metadata_proxy_shared_secret = METADATA_SECRET

nova_metadata_port = 9775

# metadata_proxy_shared_secret: must match the metadata_proxy_shared_secret value in the [neutron] section of /etc/nova/nova.conf
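The Neutron metadata agent and Nova must use the same secret. This minimal sketch generates a random hex secret and checks that metadata_agent.ini and nova.conf carry the same value. It assumes `/dev/urandom`, `head` and `od` are available. `gen_secret`, `get_secret` and `secrets_match` are hypothetical helpers. `get_secret` takes the first uncommented `metadata_proxy_shared_secret` line and does not check which section that line is in.

```bash
#!/usr/bin/env bash
# Sketch: generate a shared secret and verify both config files agree.
# Assumption: the key appears once, uncommented, in each file.
set -euo pipefail

# gen_secret -> 32 hex characters from 16 random bytes
gen_secret() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# get_secret FILE -> value of the first uncommented metadata_proxy_shared_secret
get_secret() {
    awk '/^[ \t]*metadata_proxy_shared_secret[ \t]*=/ {
             sub(/^[^=]*=[ \t]*/, "")   # drop the "key =" prefix
             sub(/[ \t]+$/, "")         # trim trailing blanks
             print
             exit
         }' "$1"
}

# secrets_match FILE1 FILE2 -> exit status 0 if both hold the same non-empty secret
secrets_match() {
    local a b
    a=$(get_secret "$1")
    b=$(get_secret "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Hypothetical usage on a controller:
#   secrets_match /etc/neutron/metadata_agent.ini /etc/nova/nova.conf && echo "secrets match"
```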

10.4.8 Configure nova.conf (on all controller nodes: cont01, cont02, cont03)

[root@cont0$:/root]# vim /etc/nova/nova.conf

[neutron]

url = http://VirtualIP:9997

auth_url = http://VirtualIP:5001

auth_type = password