K8s (9): Cluster Communication with Calico Network Policy

Contents

Overview of k8s network communication

Flannel

calico

Introduction

Calico installation and deployment

Calico network plugin

Network policy

Overview of k8s network communication

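k8s plugs in third-party network plugins through the CNI (Container Network Interface). Popular plugins include flannel and calico.

Pods on the same node exchange packets through the CNI bridge (you can inspect it with brctl show).

Communication between pods on different nodes requires a network plugin, such as flannel or calico.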

Flannel

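Flannel was developed by CoreOS to provide an overlay network for cross-host communication in Docker clusters. An overlay is a virtual network layered on top of the underlying network. The hosts in it are connected by virtual links, much like VPN tunnels, so it is a logical network built on the physical one. The main idea is simple. Reserve one network range in advance, give each host a portion of it, and assign each container a distinct IP from its host's portion. All containers then behave as if they were on one directly connected network. Underneath, a backend such as UDP, VXLAN or host-gw encapsulates or routes the packets between hosts.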

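Its job is to give the Docker containers created on every node in the cluster a virtual IP address that is unique across the whole cluster.

Flannel is designed to re-plan IP address usage for all nodes in the cluster. As a result, containers on different nodes get non-overlapping addresses in the same internal network and can communicate directly using those internal IPs.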
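The "reserve one range, give each host a slice" idea can be sketched with Python's ipaddress module. This is a minimal illustration under two assumptions. It uses the 10.244.0.0/16 pod range seen later in this post and a /24 per node, which is flannel's default subnet length. In a real cluster the per-node subnet is chosen for you, so the in-order assignment here is illustrative only.

```python
import ipaddress

def assign_node_subnets(cluster_cidr, nodes, subnet_prefix=24):
    """Carve one non-overlapping subnet per node out of the cluster-wide range."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=subnet_prefix)  # lazily yields the /24s in order
    return {node: next(subnets) for node in nodes}

if __name__ == "__main__":
    # Node names are the ones used in this post; the assignment order is illustrative.
    plan = assign_node_subnets("10.244.0.0/16", ["k8s2", "k8s3", "k8s4"])
    for node, subnet in plan.items():
        print(node, subnet, subnet.num_addresses, "addresses")
```

Because every node draws from a disjoint slice, no two containers in the cluster can be given the same IP. That is the uniqueness property described above.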

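flannel supports several backends:

1. VXLAN: encapsulates packets. This is the default backend.

2. host-gw (host gateway): good performance, but it only works when all nodes share one layer-2 network and cannot route across networks. With thousands of Pods, the large layer-2 domain is prone to broadcast storms.

3. UDP: poor performance, not recommended.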
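One concrete cost of encapsulation is a smaller MTU inside the pod. Here is a sketch of the arithmetic, assuming an IPv4 underlay with a 1500-byte MTU and no VLAN tags. VXLAN adds an outer IPv4 header (20 bytes), a UDP header (8) and a VXLAN header (8). It also carries the pod's own Ethernet header (14) as payload. host-gw only routes, so it adds nothing.

```python
# Header sizes in bytes (IPv4 underlay, no VLAN tags, no IP options).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14  # the pod's Ethernet header travels inside the VXLAN payload

def pod_mtu(underlay_mtu, backend):
    """Largest IP packet a pod can send without fragmenting on the underlay."""
    if backend == "vxlan":
        overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    elif backend == "host-gw":
        overhead = 0  # plain routing via the peer node's IP, no encapsulation
    else:
        raise ValueError(f"backend not modeled here: {backend}")
    return underlay_mtu - overhead

print(pod_mtu(1500, "vxlan"))    # 1450
print(pod_mtu(1500, "host-gw"))  # 1500
```

The 50 bytes of per-packet overhead, plus the encapsulation work, are part of why host-gw performs better than VXLAN when its layer-2 requirement can be met.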

calico

Introduction

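flannel provides connectivity, whereas calico's distinguishing feature is isolation between pods. The network formed by calico endpoints is a pure layer-3 network. Packet flow is controlled entirely by routing rules, with no overlay overhead. This holds when IPIP/VXLAN encapsulation is disabled, as configured below.

The flannel component can only provide connectivity. It cannot enforce policies, so it cannot control access to services or pods (blacklists, whitelists, isolation between pods, and so on). That is why we replace the network component with calico, which supports both connectivity and policies.

BIRD: a standard routing daemon. It watches the kernel for changes to routes for IP addresses. It then advertises those routes to all the other hosts using the standard BGP routing protocol. Every host thus learns that an IP lives on this host and routes traffic for it here.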
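Once BGP has distributed the routes, forwarding a pod packet is ordinary longest-prefix matching against the node's routing table. The sketch below models that decision only; it is not BIRD's code. The pod prefixes match the pod IPs that appear later in this post. The next-hop node IPs are hypothetical.

```python
import ipaddress

# Hypothetical routing table on one node after BGP has spread each node's pod block.
# Prefixes match pod IPs shown later in this post; the next-hop IPs are made up.
ROUTES = {
    "10.244.219.0/26": "192.168.56.13",    # pods on k8s3
    "10.244.106.128/26": "192.168.56.14",  # pods on k8s4
    "0.0.0.0/0": "192.168.56.1",           # default gateway
}

def next_hop(dst, routes=ROUTES):
    """Pick the most specific route that contains dst (longest-prefix match)."""
    addr = ipaddress.ip_address(dst)
    candidates = [ipaddress.ip_network(p) for p in routes if addr in ipaddress.ip_network(p)]
    best = max(candidates, key=lambda net: net.prefixlen)  # default route guarantees a match
    return routes[str(best)]

print(next_hop("10.244.219.2"))  # 192.168.56.13
```

No encapsulation is involved. The packet keeps its pod source and destination addresses and is simply sent to the node that advertised the block.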

Calico installation and deployment

Official documentation: Install Calico networking and network policy for on-premises deployments | Calico Documentation

Calico network plugin

Create a calico project in Harbor

Download the manifest

[root@k8s2 calico]# curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml -O

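Modify the configuration

Disable IPIP encapsulation by setting CALICO_IPV4POOL_IPIP to "Never". Then set the IPv4 pool CIDR to 10.244.0.0/16 to match this cluster's pod network. In the stock manifest the CALICO_IPV4POOL_CIDR entry is commented out, so uncomment it.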

[root@k8s2 calico]# vim calico.yaml

- name: CALICO_IPV4POOL_IPIP
  value: "Never"
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
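CALICO_IPV4POOL_IPIP accepts Always, CrossSubnet and Never. Here is a sketch of what each mode means for traffic between two nodes. It simplifies by assuming all nodes share one subnet given as node_subnet. Calico itself compares each node's own address and prefix. With Never, packets cross the underlay with pod IPs unchanged. The network between nodes must therefore be able to deliver them.

```python
import ipaddress

def uses_ipip(mode, src_node_ip, dst_node_ip, node_subnet):
    """Model whether pod traffic between two nodes gets IP-in-IP encapsulated."""
    if mode == "Always":
        return True
    if mode == "Never":
        return False  # plain routing only, as configured in this post
    if mode == "CrossSubnet":
        # Simplification: one shared node subnet instead of per-node prefixes.
        net = ipaddress.ip_network(node_subnet)
        same_subnet = (ipaddress.ip_address(src_node_ip) in net
                       and ipaddress.ip_address(dst_node_ip) in net)
        return not same_subnet  # encapsulate only when crossing a subnet boundary
    raise ValueError(f"unknown IPIP mode: {mode}")

print(uses_ipip("CrossSubnet", "192.168.56.12", "192.168.57.13", "192.168.56.0/24"))  # True
```

CrossSubnet is a middle ground. It routes natively where the nodes are layer-2 neighbors and encapsulates only across routers.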

Change the image paths to point at your own Harbor registry:

image: calico/kube-controllers:v3.25.0

image: calico/cni:v3.25.0

image: calico/node:v3.25.0
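The image edit is a prefix substitution, so it can be scripted instead of done by hand in vim. The sketch below assumes the registry host reg.westos.org used in this post. It also assumes that the manifest references images either as docker.io/calico/... or as bare calico/.... Lines already pointing at the registry are left alone.

```python
import re

# Matches "image: [docker.io/]calico/<name>:<tag>" lines, keeping their indentation.
IMAGE_LINE = re.compile(r"^([ \t]*image:[ \t]*)(?:docker\.io/)?(calico/\S+)$", re.MULTILINE)

def point_images_at_registry(manifest_text, registry):
    """Prefix every calico image reference with the given private registry."""
    return IMAGE_LINE.sub(lambda m: f"{m.group(1)}{registry}/{m.group(2)}", manifest_text)

if __name__ == "__main__":
    sample = "          image: docker.io/calico/cni:v3.25.0\n          image: calico/node:v3.25.0\n"
    print(point_images_at_registry(sample, "reg.westos.org"))
```

To apply it, read calico.yaml, pass its text through the function, and write the result back.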

Push the images to Harbor

[root@k8s1 harbor]# docker images |grep reg.westos.org/calico

reg.westos.org/calico/kube-controllers v3.25.0 5e785d005ccc 2 days ago 71.6MB

reg.westos.org/calico/cni v3.25.0 d70a5947d57e 2 days ago 198MB

reg.westos.org/calico/node v3.25.0 08616d26b8e7 2 days ago 245MB

[root@k8s1 harbor]# docker images |grep reg.westos.org/calico | awk '{system("docker push "$1":"$2"")}'
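The awk one-liner takes column 1 (repository) and column 2 (tag) of each matching line and runs docker push repo:tag. Below is a Python sketch of the same logic. It returns the commands instead of running them, so they can be reviewed first. Unlike grep, it matches the prefix only at the start of the repository name. To actually push, pass each command to subprocess.run(cmd.split(), check=True).

```python
def push_commands(docker_images_output, prefix):
    """Build 'docker push repo:tag' for each image whose repository starts with prefix."""
    commands = []
    for line in docker_images_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0].startswith(prefix):
            repo, tag = fields[0], fields[1]  # same columns awk uses as $1 and $2
            commands.append(f"docker push {repo}:{tag}")
    return commands

if __name__ == "__main__":
    listing = "reg.westos.org/calico/cni v3.25.0 d70a5947d57e 2 days ago 198MB"
    print(push_commands(listing, "reg.westos.org/calico"))
```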

Delete the flannel network plugin; otherwise the two plugins will interfere with each other

[root@k8s2 ~]# kubectl delete -f kube-flannel.yml

namespace "kube-flannel" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds" deleted

Also delete the flannel CNI config file on every node to avoid conflicts with calico

[root@k8s2 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

[root@k8s3 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

[root@k8s4 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

Deploy calico

[root@k8s2 calico]# kubectl apply -f calico.yaml

Check: four calico pods have appeared, one calico-kube-controllers pod plus one calico-node pod per node

[root@k8s2 ~]# kubectl -n kube-system get pod |grep calico

calico-kube-controllers-6f469fff97-zlqw6 1/1 Running 0 17m

calico-node-cvt4w 1/1 Running 0 17m

calico-node-qh5l7 1/1 Running 0 17m

calico-node-skjf7 1/1 Running 0 17m

Recreate the pods and check how IPs are assigned

[root@k8s2 ~]# kubectl delete pod --all

pod "myapp-deployment-67984c8646-2f4l8" deleted

pod "myapp-deployment-67984c8646-qhf6r" deleted

pod "myapp-deployment-67984c8646-vppq7" deleted

pod "myapp-deployment-v2-74b4cdbf78-4gbm2" deleted

pod "myapp-deployment-v2-74b4cdbf78-fjgn5" deleted

pod "myapp-deployment-v2-74b4cdbf78-k79s5" deleted

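The pods get new addresses that are not sequential across the cluster. Within each node, though, they are grouped. The pods on k8s3 are in 10.244.219.x and those on k8s4 are in 10.244.106.128-130, because calico's IPAM gives each node its own address block.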

[root@k8s2 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-deployment-67984c8646-b6jqt 1/1 Running 0 9s 10.244.219.2 k8s3

myapp-deployment-67984c8646-b9s4z 1/1 Running 0 9s 10.244.106.129 k8s4

myapp-deployment-67984c8646-whzhd 1/1 Running 0 10s 10.244.106.128 k8s4

myapp-deployment-v2-74b4cdbf78-9v9jd 1/1 Running 0 9s 10.244.219.1 k8s3

myapp-deployment-v2-74b4cdbf78-c5jj9 1/1 Running 0 9s 10.244.106.130 k8s4

myapp-deployment-v2-74b4cdbf78-qxfhp 1/1 Running 0 10s 10.244.219.0 k8s3
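The grouping becomes obvious if the IPs above are bucketed by address block. This sketch assumes Calico's default IPv4 block size of /26 (64 addresses). The node and IP pairs are copied from the kubectl output above.

```python
import ipaddress
from collections import defaultdict

# (node, pod IP) pairs taken from the kubectl get pod -o wide output above.
PODS = [
    ("k8s3", "10.244.219.2"), ("k8s4", "10.244.106.129"), ("k8s4", "10.244.106.128"),
    ("k8s3", "10.244.219.1"), ("k8s4", "10.244.106.130"), ("k8s3", "10.244.219.0"),
]

def group_by_block(pod_ips, block_prefix=26):
    """Map (block, node) to the pod IPs that fall inside that block."""
    blocks = defaultdict(list)
    for node, ip in pod_ips:
        block = ipaddress.ip_network(f"{ip}/{block_prefix}", strict=False)
        blocks[(str(block), node)].append(ip)
    return dict(blocks)

if __name__ == "__main__":
    for (block, node), ips in group_by_block(PODS).items():
        print(node, block, sorted(ips, key=ipaddress.ip_address))
```

Each node ends up with exactly one block. That is also why the routing table only needs one route per node, not one route per pod.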

Test the network: access works and communication is normal

[root@k8s2 ~]# curl 10.102.0.141

Hello MyApp | Version: v1 | Pod Name

[root@k8s2 ~]# curl 10.108.173.0

Hello MyApp | Version: v2 | Pod Name

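Network policy

Restricting pod traffic

Allow access only from pods that carry the role=frontend label. Ingress (inbound) rules are the most commonly used form of control.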

[root@k8s2 calico]# vim networkpolicy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
    - Ingress
  ingress:
    - from:
        #- ipBlock:
        #    cidr: 192.168.0.0/16
        #    except:
        #      - 192.168.56.0/24
        #- namespaceSelector:
        #    matchLabels:
        #      project: myproject
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 80

Apply it

[root@k8s2 calico]# kubectl apply -f networkpolicy.yaml

The policy applies to pods labeled app=myapp.

Accessing the svc now fails.

Create a test pod: access still fails

[root@k8s2 calico]# kubectl run demo --image busyboxplus -it

/ # curl 10.102.0.141

After adding the required label to the test pod, access succeeds

[root@k8s2 ~]# kubectl label pod demo role=frontend

/ # curl 10.102.0.141

Hello MyApp | Version: v1 | Pod Name
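The experiment above shows the key rule of NetworkPolicy. Once an Ingress policy selects a pod, that pod accepts only the traffic the policy explicitly allows. Pods that no policy selects keep the default of allowing everything. Below is a minimal model of this one policy, not of Calico's implementation. kubectl run adds a run=<name> label, which is why the demo pod starts with run=demo. The port is the pod's port after Service translation.

```python
def matches(selector, labels):
    """matchLabels semantics: every key/value in the selector must be present."""
    return all(labels.get(k) == v for k, v in selector.items())

# The policy above, reduced to the fields this model needs.
POLICY = {
    "namespace": "default",
    "pod_selector": {"app": "myapp"},
    "from_pod_selector": {"role": "frontend"},
    "port": ("TCP", 80),
}

def ingress_allowed(dst_ns, dst_labels, src_ns, src_labels, proto, port, policy=POLICY):
    # Pods the policy does not select keep the Kubernetes default: allow everything.
    if dst_ns != policy["namespace"] or not matches(policy["pod_selector"], dst_labels):
        return True
    # Selected pods are isolated: only listed sources on listed ports get in.
    # A bare podSelector peer only matches pods in the policy's own namespace.
    from_ok = src_ns == policy["namespace"] and matches(policy["from_pod_selector"], src_labels)
    return from_ok and (proto, port) == policy["port"]

print(ingress_allowed("default", {"app": "myapp"}, "default", {"run": "demo"}, "TCP", 80))  # False
```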

Restricting namespace traffic

Allow pods in a specified namespace to access the service

[root@k8s2 calico]# vim networkpolicy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
    - Ingress
  ingress:
    - from:
        #- ipBlock:
        #    cidr: 192.168.0.0/16
        #    except:
        #      - 192.168.56.0/24
        - namespaceSelector:
            matchLabels:
              project: myproject
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 80

[root@k8s2 calico]# kubectl apply -f networkpolicy.yaml

Create a test namespace

[root@k8s2 ~]# kubectl create namespace test

Add the required label to the namespace

[root@k8s2 calico]# kubectl label ns test project=myproject

A pod in a namespace that carries the label can access the service successfully

[root@k8s2 ~]# kubectl run test --image busyboxplus -it -n test

/ # curl 10.102.0.141

Hello MyApp | Version: v1 | Pod Name
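The test pod above has no role=frontend label, yet it gets in. In this policy, namespaceSelector and podSelector are separate entries of from, and separate entries are alternatives: matching any one is enough. Below is a minimal model of just that OR rule (port checks omitted). The namespace and pod labels in the example call are the ones used in this post.

```python
def matches(selector, labels):
    """matchLabels: every listed key must be present with the same value."""
    return all(labels.get(k) == v for k, v in selector.items())

# The two separate `from:` entries of the policy above, as (kind, selector) pairs.
PEERS = [
    ("namespaceSelector", {"project": "myproject"}),
    ("podSelector", {"role": "frontend"}),
]

def source_allowed(src_ns, src_ns_labels, src_pod_labels, policy_ns="default", peers=PEERS):
    """Separate list entries are ORed: the first matching entry admits the source."""
    for kind, selector in peers:
        if kind == "namespaceSelector" and matches(selector, src_ns_labels):
            return True  # any pod in a namespace carrying the label
        if kind == "podSelector" and src_ns == policy_ns and matches(selector, src_pod_labels):
            return True  # a bare podSelector only looks at the policy's own namespace
    return False

print(source_allowed("test", {"project": "myproject"}, {"run": "test"}))  # True
```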

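Restricting namespace and pod together

Note the difference from the previous policy: there is one fewer "-". Without its own leading "-", podSelector belongs to the same from entry as namespaceSelector. A source must therefore match both (AND). In the previous policy they were two separate entries, and matching either one was enough (OR).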

[root@k8s2 calico]# vim networkpolicy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
    - Ingress
  ingress:
    - from:
        #- ipBlock:
        #    cidr: 192.168.0.0/16
        #    except:
        #      - 192.168.56.0/24
        - namespaceSelector:
            matchLabels:
              project: myproject
          podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 80

[root@k8s2 calico]# kubectl apply -f networkpolicy.yaml

Access is allowed only when both the namespace and the pod carry the required labels

[root@k8s2 calico]# kubectl -n test label pod test role=frontend

[root@k8s2 ~]# kubectl run test --image busyboxplus -it -n test

/ # curl 10.102.0.141

Hello MyApp | Version: v1 | Pod Name
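The "one fewer -" difference can be checked by modeling each from entry as a dict. Selectors inside one dict are ANDed; the dicts in the list are ORed. This is a minimal sketch, not the Kubernetes source. It handles only namespaceSelector and podSelector and treats a missing podSelector as "all pods".

```python
def matches(selector, labels):
    """matchLabels: every listed key must be present with the same value."""
    return all(labels.get(k) == v for k, v in selector.items())

def peer_matches(peer, policy_ns, src_ns, src_ns_labels, src_pod_labels):
    """Selectors inside ONE `from:` entry must all match (AND)."""
    if "namespaceSelector" in peer:
        ns_ok = matches(peer["namespaceSelector"], src_ns_labels)
    else:
        ns_ok = src_ns == policy_ns  # no namespaceSelector: policy's own namespace only
    pod_ok = matches(peer.get("podSelector", {}), src_pod_labels)  # {} matches every pod
    return ns_ok and pod_ok

def from_allows(peers, src_ns, src_ns_labels, src_pod_labels, policy_ns="default"):
    """Separate `from:` entries are alternatives (OR)."""
    return any(peer_matches(p, policy_ns, src_ns, src_ns_labels, src_pod_labels) for p in peers)

NS = {"project": "myproject"}
POD = {"role": "frontend"}
OR_POLICY = [{"namespaceSelector": NS}, {"podSelector": POD}]  # two "-" entries
AND_POLICY = [{"namespaceSelector": NS, "podSelector": POD}]   # one "-" entry

print(from_allows(OR_POLICY, "test", NS, {"run": "test"}))   # True
print(from_allows(AND_POLICY, "test", NS, {"run": "test"}))  # False until role=frontend is added
```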

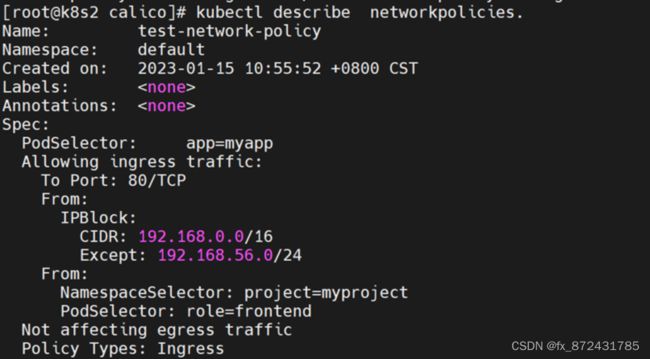

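Restricting traffic from outside the cluster

With the current policy, clients outside the cluster cannot reach the service.

Building on the policy above, add another rule to networkpolicy.yaml that lets a specific network range in: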

[root@k8s2 calico]# vim networkpolicy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 192.168.0.0/16     # allow the whole 192.168.0.0/16 range
            except:
              - 192.168.56.0/24      # but block the 192.168.56.0/24 subnet
        - namespaceSelector:
            matchLabels:
              project: myproject
          podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 80

Apply it

[root@k8s2 calico]# kubectl apply -f networkpolicy.yaml
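An ipBlock entry admits a source whose address is inside cidr and outside every except range. Below is a sketch of that check with Python's ipaddress module, using the ranges from the policy above. One caveat, stated as background rather than something tested in this post: for LoadBalancer/NodePort traffic, kube-proxy may SNAT the client to a node address unless externalTrafficPolicy is Local. The address the policy sees may then not be the real client IP.

```python
import ipaddress

def ip_block_allows(src_ip, cidr, excepts=()):
    """ipBlock peer: source must be inside cidr and outside every except range."""
    addr = ipaddress.ip_address(src_ip)
    if addr not in ipaddress.ip_network(cidr):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in excepts)

if __name__ == "__main__":
    for ip in ["192.168.10.5", "192.168.56.1", "10.0.0.1"]:
        print(ip, ip_block_allows(ip, "192.168.0.0/16", ["192.168.56.0/24"]))
```

Removing the except range from the rule is enough to let 192.168.56.x clients in.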

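Change the svc type to LoadBalancer

Access from outside the cluster fails, presumably because the client is in the excluded 192.168.56.0/24 subnet

Modify the policy again to allow the 56 subnet (for example, by removing the except entry); access then succeeds: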

[root@k8s1 ~]# curl 192.168.56.101

Hello MyApp | Version: v1 | Pod Name