Flink 1.15 Local Cluster Deployment in Standalone Mode (Standalone Cluster Mode)

I. Environment Preparation

1. Cluster plan (CentOS 7)

192.168.11.104 11.104 (DB test) centf11104

192.168.11.105 11.105 (DB test) centf11105

192.168.11.106 11.106 (DB test) centf11106

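JDK installation reference: the CSDN blog post "linux 环境java jdk12.0.2部署" (天一道长--玄彬的博客)

2. Install JDK 12, configure passwordless SSH login, and configure the hosts file on all three nodes so that each node can be reached by hostname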

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.11.104 centf11104

192.168.11.105 centf11105

192.168.11.106 centf11106
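
Before moving on, it can help to confirm that the hosts file on each node really contains all three hostnames. The following is a minimal sketch and not part of the original steps; the function name check_hosts_file is made up for illustration.

```shell
#!/usr/bin/env bash
# Return 0 only if every given hostname appears as a name or alias
# in the hosts file; print each hostname that is missing.
check_hosts_file() {
  local file="$1"; shift
  local host missing=0
  for host in "$@"; do
    # Ignore comment lines; compare against fields 2..NF (names and aliases).
    if ! awk -v h="$host" '
          !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
          END { exit !found }' "$file"; then
      echo "missing: $host"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a cluster node:
#   check_hosts_file /etc/hosts centf11104 centf11105 centf11106
```

Running the check on all three nodes catches a forgotten entry before start-cluster.sh tries to reach the workers over SSH.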

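3. Download the installation package

Official site: Apache Flink: Downloads

Version downloaded: flink-1.15.0-bin-scala_2.12.tgz

4. Upload to the server and configure

Upload directory: /data

Extract: tar -zxf flink-1.15.0-bin-scala_2.12.tgz -C /usr/local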

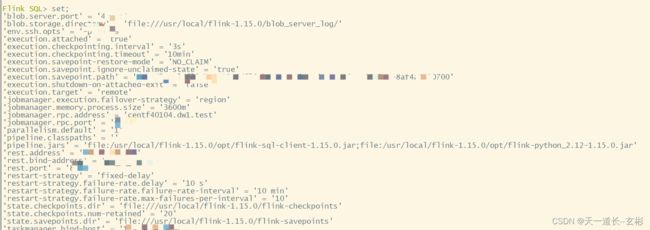

4.1 Edit the configuration file flink-conf.yaml

Change to the directory: /usr/local/flink-1.15.0/conf

For the Flink configuration options, see the official documentation: Configuration | Apache Flink

vi flink-conf.yaml

######## Modify ssh default from 22 to 22222 #########

env.ssh.opts: -p 22222 # SSH port used for passwordless login

blob.storage.directory: file:///usr/local/flink-1.15.0/blob_server_log/ # optional

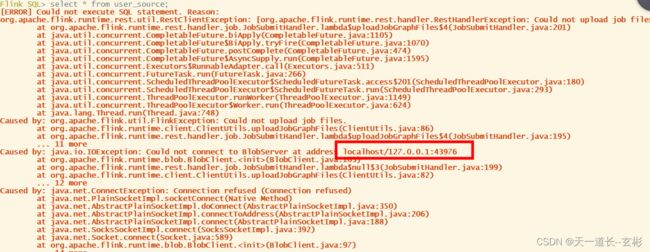

blob.server.port: 43976 # if unset, a random port is chosen on each start

jobmanager.rpc.address: 192.168.11.104 # JobManager node

jobmanager.rpc.port: 6123

#jobmanager.bind-host: localhost # JobManager bind address; best left unset, otherwise the blob server reports errors

jobmanager.memory.process.size: 3600m

taskmanager.bind-host: 192.168.11.104 # TaskManager bind address (a hostname also works); set it to the IP of the machine this file is on

#taskmanager.bind-host: 192.168.11.105 # use this on the 105 machine

#taskmanager.bind-host: 192.168.11.106 # use this on the 106 machine

taskmanager.host: 192.168.11.104

#taskmanager.host: 192.168.11.105

#taskmanager.host: 192.168.11.106

taskmanager.memory.process.size: 2728m

taskmanager.numberOfTaskSlots: 6

parallelism.default: 1

execution.checkpointing.interval: 3min

# execution.checkpointing.externalized-checkpoint-retention: [DELETE_ON_CANCELLATION, RETAIN_ON_CANCELLATION]

# execution.checkpointing.max-concurrent-checkpoints: 1

# execution.checkpointing.min-pause: 0

# execution.checkpointing.mode: [EXACTLY_ONCE, AT_LEAST_ONCE]

execution.checkpointing.timeout: 10min

# execution.checkpointing.tolerable-failed-checkpoints: 0

# execution.checkpointing.unaligned: false

state.checkpoints.dir: file:///usr/local/flink-1.15.0/flink-checkpoints

state.savepoints.dir: file:///usr/local/flink-1.15.0/flink-savepoints

state.checkpoints.num-retained: 20

jobmanager.execution.failover-strategy: region

rest.port: 8084

rest.address: 192.168.11.104

rest.bind-address: 192.168.11.104

taskmanager.memory.network.fraction: 0.1

taskmanager.memory.network.min: 64mb

taskmanager.memory.network.max: 1gb

taskmanager.memory.flink.size: 2280m

# Job restart strategy

restart-strategy: failure-rate

restart-strategy.failure-rate.max-failures-per-interval: 10

restart-strategy.failure-rate.failure-rate-interval: 10 min

restart-strategy.failure-rate.delay: 10 s

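# History Server

# Keep checkpoints when a job is cancelled; they must then be deleted manually

execution.checkpointing.externalized-checkpoint-retention: RETAIN_ON_CANCELLATION

# Directory to which completed jobs are archived

jobmanager.archive.fs.dir: file:///usr/local/flink-1.15.0/completed-jobs

# Comma-separated list of directories monitored for completed jobs

historyserver.archive.fs.dir: file:///usr/local/flink-1.15.0/completed-jobs

historyserver.archive.fs.refresh-interval: 10000 # refresh interval for the monitored directories, in milliseconds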
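
The configuration above sets both taskmanager.memory.process.size and taskmanager.memory.flink.size. Total process memory is total Flink memory plus JVM metaspace plus JVM overhead, so the two values only fit together if the remainder is a valid JVM overhead. The sketch below does that arithmetic. It assumes Flink's documented defaults for metaspace (256m) and for the overhead bounds (192m to 1g), none of which are set in this post, and the function names are illustrative only.

```shell
#!/usr/bin/env bash
# All values are in MiB.
# Assumed defaults (not configured in this post):
#   taskmanager.memory.jvm-metaspace.size = 256m
#   taskmanager.memory.jvm-overhead.min   = 192m
#   taskmanager.memory.jvm-overhead.max   = 1g (1024m)

# JVM overhead left over once Flink memory and metaspace are subtracted.
implied_jvm_overhead() {
  local process="$1" flink="$2" metaspace="${3:-256}"
  echo $(( process - flink - metaspace ))
}

# Succeeds when the overhead lies inside [min, max].
overhead_in_range() {
  local overhead="$1" min="${2:-192}" max="${3:-1024}"
  [ "$overhead" -ge "$min" ] && [ "$overhead" -le "$max" ]
}

overhead="$(implied_jvm_overhead 2728 2280)"
echo "implied JVM overhead: ${overhead}m"   # 2728 - 2280 - 256 = 192
overhead_in_range "$overhead" && echo "within default bounds"
```

With the values from this post the remainder is exactly the assumed 192m minimum, so lowering taskmanager.memory.process.size without also lowering taskmanager.memory.flink.size would likely fail Flink's memory validation at startup.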

4.2 Edit the workers file to add the other two node servers (the master/JobManager node can be included as well) as TaskManager nodes of this Flink cluster. The changes are as follows:

vim workers

centf11104

centf11105

centf11106

4.3 Edit the masters file to specify the JobManager host and the Flink web UI port:

vi masters

192.168.11.104:8084

4.4 Create the directories referenced in the configuration (under /usr/local/flink-1.15.0):

mkdir flink-checkpoints

mkdir flink-savepoints

mkdir blob_server_log
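
The three mkdir calls can also be wrapped in one repeatable step. This is a sketch only: the function name make_flink_dirs is hypothetical, and including completed-jobs is an assumption based on jobmanager.archive.fs.dir in the configuration, since the original steps do not create that directory.

```shell
#!/usr/bin/env bash
# Create every local directory that flink-conf.yaml points at.
# mkdir -p makes the call safe to repeat on a node that already has them.
make_flink_dirs() {
  local base="$1"
  mkdir -p "$base/flink-checkpoints" \
           "$base/flink-savepoints" \
           "$base/blob_server_log" \
           "$base/completed-jobs"   # assumption: referenced by the archive settings
}

# Usage:
#   make_flink_dirs /usr/local/flink-1.15.0
```

Because the installation directory is copied to the other nodes afterwards, running this once on the master before distributing is enough.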

4.5 Set the owner, group, and permissions of the directory

chown -R hdfs:hdfs /usr/local/flink-1.15.0/

chmod 777 /usr/local/flink-1.15.0/

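4.6 Distribute the installation directory

After the configuration changes are done, copy the Flink installation directory to the other two node servers.

scp -r ./flink-1.15.0 root@centf11105:/usr/local

scp -r ./flink-1.15.0 root@centf11106:/usr/local

On the two worker nodes, edit flink-conf.yaml (vi flink-conf.yaml) and set the following to each node's own IP (192.168.11.105 shown here):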

taskmanager.host: 192.168.11.105

taskmanager.bind-host: 192.168.11.105
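
Editing the two keys by hand on every worker is easy to get wrong, so the change can be scripted. The following is a minimal sketch under the assumption that both keys already exist uncommented in the copied file, as in the configuration above; set_taskmanager_host is an illustrative name, not a Flink tool.

```shell
#!/usr/bin/env bash
# Point taskmanager.host and taskmanager.bind-host at the given IP.
# Commented-out lines (starting with '#') and all other keys are left untouched.
# Note: any inline comment after the old value is dropped.
set_taskmanager_host() {
  local conf="$1" ip="$2" tmp
  tmp="$(mktemp)" || return 1
  # Write to a temp file first, then copy back so the original file's
  # owner and permissions are preserved.
  sed -E "s|^(taskmanager\.(bind-)?host):.*|\1: ${ip}|" "$conf" > "$tmp" \
    && cat "$tmp" > "$conf"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage on centf11105:
#   set_taskmanager_host /usr/local/flink-1.15.0/conf/flink-conf.yaml 192.168.11.105
```

On centf11106 the same call would take 192.168.11.106.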

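4.7 Configure environment variables on all three nodes

Strictly speaking, the master node does not need this, since the cluster can be started there using full paths. The worker nodes must have the environment variables set. Add the following to /etc/profile: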

export JAVA_HOME=/usr/local/jdk-12.0.2

export PATH=$PATH:$JAVA_HOME/bin:/usr/local/flink-1.15.0/bin

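Apply immediately: source /etc/profile

4.8 Start the cluster

(1) On centf11104 (the master node running the JobManager), run start-cluster.sh to start the Flink cluster (stop-cluster.sh stops it again):

./bin/start-cluster.sh

./bin/stop-cluster.sh

(2) Start the Flink SQL client:

./bin/sql-client.sh embedded

(3) In the Flink SQL client, inspect the configuration with the SET command, or check it in the web UI: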

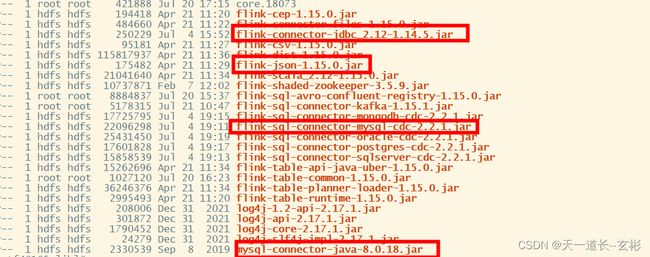

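Flink JAR download reference:

Central Repository: org/apache/flink

Deployment is complete. Other points to note:

1. JARs must be uploaded to the lib directory; use the ones matching your Flink version

2. Firewall ports: 8084, 6123, 43976. Ideally, open all ports between the three machines.

3. Configure passwordless SSH login between the three machines

4. jobmanager.bind-host: localhost is best left unchanged, otherwise errors occur

5. If the available resources are insufficient, adjust the memory settings: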

jobmanager.memory.process.size: 2600m

taskmanager.memory.process.size: 2728m

taskmanager.memory.flink.size: 2280m

6. Flink MySQL CDC reports an error when the MySQL user lacks the RELOAD privilege; grant it as follows:

grant reload on *.* to 'user_name'@'%';

GRANT SELECT,UPDATE,INSERT, RELOAD, SHOW DATABASES, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'bigdata' IDENTIFIED BY 'password';

FLUSH PRIVILEGES;
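
For the firewall ports listed in note 2, the commands can be generated rather than typed one by one. This sketch assumes firewalld is the active firewall, which is the CentOS 7 default but is not stated in this post; print_firewall_commands is a made-up helper that only prints the commands so they can be reviewed first.

```shell
#!/usr/bin/env bash
# Ports from this post's configuration:
# 8084 = REST/web UI, 6123 = JobManager RPC, 43976 = blob server.
FLINK_PORTS="8084 6123 43976"

# Print one firewall-cmd call per port, then a reload to apply them.
# Nothing is changed on the system by this function.
print_firewall_commands() {
  local port
  for port in $FLINK_PORTS; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  echo "firewall-cmd --reload"
}

print_firewall_commands
# To apply as root after reviewing the output:
#   print_firewall_commands | sh
```

The TaskManager RPC and data ports are not pinned in the configuration above and, as far as I know, are picked at random by default, which is probably why opening all ports between the three machines is the simpler option.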

Data flow test:

MySQL CDC stream test:

Create the source and sink tables in the MySQL database:

CREATE TABLE `user_source` (

`id` INT NOT NULL,

`user_name` VARCHAR(255) NOT NULL DEFAULT 'flink',

`address` VARCHAR(1024) DEFAULT NULL,

`phone_number` VARCHAR(512) DEFAULT NULL,

`email` VARCHAR(255) DEFAULT NULL,

`ct_date` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

);

CREATE TABLE `all_users_sink` (

`id` INT NOT NULL,

`user_name` VARCHAR(255) NOT NULL DEFAULT 'flink',

`address` VARCHAR(1024) DEFAULT NULL,

`phone_number` VARCHAR(512) DEFAULT NULL,

`email` VARCHAR(255) DEFAULT NULL,

`ct_date` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

);

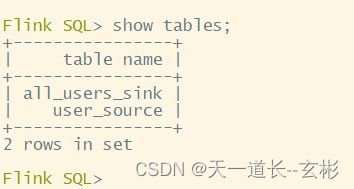

Create the tables in the Flink SQL client (MySQL -> MySQL):

CREATE TABLE user_source (

`id` INT NOT NULL,

user_name STRING,

address STRING,

phone_number STRING,

email STRING,

`ct_date` TIMESTAMP(3),

WATERMARK FOR ct_date AS ct_date - INTERVAL '5' SECOND,

PRIMARY KEY (`id`) NOT ENFORCED

) WITH (

'connector' = 'mysql-cdc',

'hostname' = '192.168.11.19',

'port' = '3306',

'username' = 'test1_u',

'password' = 'mysql_202207120901',

'database-name' = 'test1',

'table-name' = 'user_source'

);

CREATE TABLE all_users_sink (

id INT,

user_name STRING,

address STRING,

phone_number STRING,

email STRING,

`ct_date` TIMESTAMP(3),

PRIMARY KEY (`id`) NOT ENFORCED

) WITH (

'connector' = 'jdbc',

'url' = 'jdbc:mysql://192.168.11.19:3306/test1',

'table-name' = 'all_users_sink',

'username' = 'test1_u',

'password' = 'mysql_202207120901'

);

INSERT INTO all_users_sink SELECT * FROM user_source;

Or: INSERT INTO `all_users_sink`(id,user_name,address,phone_number,email,ct_date)

SELECT id,user_name,address,phone_number,email,ct_date FROM user_source;

Insert data into the source table in MySQL:

INSERT INTO `user_source`(id,user_name,address,phone_number,email) VALUES(126,'user_126','Shanghai','123567891234','[email protected]');

Restoring a Flink SQL job from a savepoint:

https://blog.csdn.net/weixin_40344051/article/details/125938116