Volumetric Light Rendering with Ray Marching

Ray-Marched Volumetric Light (Directional Light)

Preface

The core code of this demo comes from GitHub: https://github.com/AsehesL/VolumetricLight

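This demo simplifies part of that code: the code for customizing models is removed and a Cube is used everywhere in place of a model. It also adds a lighting calculation and a volumetric fog effect. The volumetric light in this demo is computed for a directional light.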


I. What Is Volumetric Light?

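In games, when an occluding object is lit by a light source, light appears to leak out radially around it; this is called volumetric light. For example, when sunlight hits a tree, it shines through the gaps between the leaves and forms light shafts. It is called volumetric light because, compared with the lighting in earlier games, it gives the viewer a sense of space and makes the game feel more realistic to players. The term sometimes also refers to the technique used to produce the effect.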

In-game result

II. Implementation Steps

The overall process is roughly as follows:

1. First, let's implement the first two steps

Scene setup

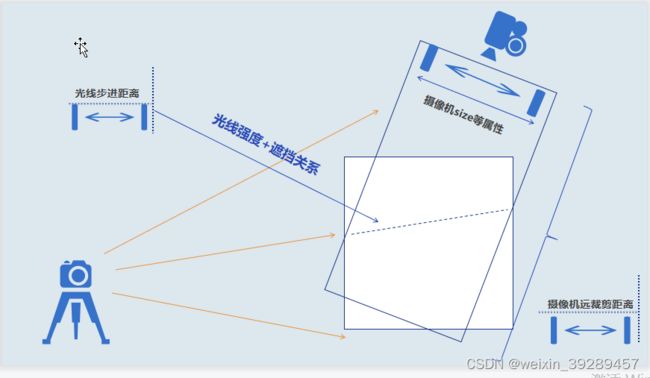

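The figure above shows the rough placement of the scene elements. First, define the light volume, which can be represented by a cube: the white area. Next, we need two cameras. One is the main camera (the one on the left), which renders the scene. The other is the light-volume camera (top right of the figure), which renders depth information inside the light volume. Since we use a directional light, the depth camera uses orthographic projection. To make sure what this camera sees lies inside the light volume, we set its near and far clip distances, its orthographic size and other properties. This camera then gives us a depth map of the volume.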

Depth map implementation

```csharp
using UnityEngine;

public class VolumetricLightDepthCamera
{
    public Camera depthRenderCamera { get { return m_DepthRenderCamera; } }

    private Camera m_DepthRenderCamera;
    private RenderTexture m_ShadowMap;
    private Shader m_ShadowRenderShader;

    // Path of the depth (shadow map) shader inside a Resources folder.
    private const string shadowMapShaderPath = "Shaders/VolumetricLight/ShadowMapRenderer";

    private int m_InternalShadowMapID;
    private bool m_IsSupport = false;

    // Loads the replacement shader and checks that the current platform supports it.
    public bool CheckSupport()
    {
        m_ShadowRenderShader = Resources.Load<Shader>(shadowMapShaderPath);
        if (m_ShadowRenderShader == null || !m_ShadowRenderShader.isSupported)
            return false;
        m_IsSupport = true;
        return m_IsSupport;
    }

    public void InitCamera(VolumetricLight light)
    {
        if (!m_IsSupport)
            return;

        m_InternalShadowMapID = Shader.PropertyToID("internalShadowMap");

        if (m_DepthRenderCamera == null)
        {
            // Reuse an existing Camera on the light object, or add one.
            m_DepthRenderCamera = light.gameObject.GetComponent<Camera>();
            if (m_DepthRenderCamera == null)
                m_DepthRenderCamera = light.gameObject.AddComponent<Camera>();

            m_DepthRenderCamera.aspect = light.aspect;
            m_DepthRenderCamera.backgroundColor = new Color(0, 0, 0, 0);
            m_DepthRenderCamera.clearFlags = CameraClearFlags.SolidColor;
            m_DepthRenderCamera.depth = 0;
            // near = 0.01 and far = range are reused later to linearize depth.
            m_DepthRenderCamera.farClipPlane = light.range;
            m_DepthRenderCamera.nearClipPlane = 0.01f;
            m_DepthRenderCamera.fieldOfView = light.angle;
            // Directional light: orthographic projection.
            m_DepthRenderCamera.orthographic = light.directional;
            m_DepthRenderCamera.orthographicSize = light.size;
            m_DepthRenderCamera.cullingMask = light.cullingMask;
            // Redraw objects with the depth shader's SubShader whose RenderType matches theirs.
            m_DepthRenderCamera.SetReplacementShader(m_ShadowRenderShader, "RenderType");
        }

        if (m_ShadowMap == null)
        {
            int size = 0;
            switch (light.quality)
            {
                case VolumetricLight.Quality.High:
                case VolumetricLight.Quality.Middle:
                    size = 1024;
                    break;
                case VolumetricLight.Quality.Low:
                    size = 512;
                    break;
            }
            // 16-bit depth buffer; the depth value itself is packed into the RGBA color.
            m_ShadowMap = new RenderTexture(size, size, 16);
            m_DepthRenderCamera.targetTexture = m_ShadowMap;
            Shader.SetGlobalTexture(m_InternalShadowMapID, m_ShadowMap);
        }
    }

    public void Destroy()
    {
        if (m_ShadowMap)
            Object.Destroy(m_ShadowMap);
        m_ShadowMap = null;
        if (m_ShadowRenderShader)
            Resources.UnloadAsset(m_ShadowRenderShader);
        m_ShadowRenderShader = null;
    }
}
```

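Here we get the Camera component on the light object (adding one if it is missing) and set its parameters, such as the near and far clip distances. The near clip distance is 0.01 and the far clip distance is range, the height range of our light volume. These two values matter because we later use them to compute linear depth in this camera's space. Finally comes the key API, Camera.SetReplacementShader.

Unity's documentation says that after this function is called, the camera renders its view with the replacement shader. It takes two parameters: the replacement shader and a tag name. More precisely, for each object the camera reads that tag's value (here RenderType) from the object's own shader and renders the object with the replacement shader's SubShader that has the same tag value; objects with no matching SubShader are not rendered.

Here we use the depth-rendering shader ShadowMapRenderer, with RenderType as the tag. Let's look at how this shader is implemented.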

```hlsl
Shader "Hidden/ShadowMapRenderer"
{
    Properties
    {
        _MainTex("", 2D) = "white" {}
        _Cutoff("", Float) = 0.5
        _Color("", Color) = (1,1,1,1)
    }
    SubShader
    {
        // Only objects whose own shader has RenderType = Opaque are drawn.
        Tags{ "RenderType" = "Opaque" }
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 vertex : SV_POSITION;
                float depth : TEXCOORD0;
            };

            v2f vert(appdata_base v)
            {
                v2f o;
                UNITY_INITIALIZE_OUTPUT(v2f, o);
                o.vertex = UnityObjectToClipPos(v.vertex);
                // Linear 0-1 depth: -viewZ * (1 / far).
                o.depth = COMPUTE_DEPTH_01;
                return o;
            }

            fixed4 frag(v2f i) : SV_Target
            {
                // Pack the float depth into an 8-bit-per-channel RGBA color.
                return EncodeFloatRGBA(i.depth);
            }
            ENDCG
        }
    }
}
```

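The code is simple: the SubShader is tagged RenderType = Opaque, so it replaces the shaders of opaque objects. In the vertex shader we get the object's depth with the COMPUTE_DEPTH_01 macro.

An object's depth comes from its z coordinate in camera space, with the camera at the origin. Unity's camera space is right-handed while its world space is left-handed (think of the left- and right-hand rules), so points in front of the camera end up with a negative z. The macro therefore multiplies the camera-space z by _ProjectionParams.w, a built-in shader variable that stores 1/far (one over the far clip distance). Visible camera-space depth goes up to far, so scaling by 1/far gives a value in the 0-1 range, and negating it makes it positive. In the fragment shader we simply call EncodeFloatRGBA, which packs the depth value into the four 8-bit channels of an RGBA color. In the game view this produces the encoded depth image of the light volume.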
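To make the depth path concrete, here is a small CPU-side sketch in C++ (used for all the sketches added to this article because its syntax is close to HLSL and it runs outside Unity). It mirrors COMPUTE_DEPTH_01 and my reading of UnityCG's EncodeFloatRGBA/DecodeFloatRGBA; the function names, and quantize_rgba8, which imitates writing to an 8-bit RGBA render target, are illustrative assumptions rather than Unity APIs.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Sketch of COMPUTE_DEPTH_01 for one vertex:
// viewZ is the camera-space z (negative in front of a Unity camera),
// invFar plays the role of _ProjectionParams.w (= 1 / far).
double compute_depth_01(double viewZ, double invFar) {
    return -(viewZ * invFar);
}

// HLSL frac(): fractional part, in [0, 1).
double frac(double x) { return x - std::floor(x); }

// Port of EncodeFloatRGBA: spreads v in [0, 1) over four channels.
std::array<double, 4> encode_float_rgba(double v) {
    const double mul[4] = {1.0, 255.0, 65025.0, 16581375.0};
    std::array<double, 4> enc;
    for (int i = 0; i < 4; ++i) enc[i] = frac(mul[i] * v);
    // enc -= enc.yzww / 255: remove the part already carried by the next channel.
    // Each line reads a channel that has not been modified yet.
    const double bit = 1.0 / 255.0;
    enc[0] -= enc[1] * bit;
    enc[1] -= enc[2] * bit;
    enc[2] -= enc[3] * bit;
    enc[3] -= enc[3] * bit;
    return enc;
}

// Port of DecodeFloatRGBA.
double decode_float_rgba(const std::array<double, 4>& enc) {
    return enc[0] + enc[1] / 255.0 + enc[2] / 65025.0 + enc[3] / 16581375.0;
}

// Hypothetical helper: simulates storing the color in an 8-bit-per-channel target.
std::array<double, 4> quantize_rgba8(std::array<double, 4> c) {
    for (double& x : c) x = std::round(x * 255.0) / 255.0;
    return c;
}
```

The first three channels of the encoding already land on multiples of 1/255, so only the last channel loses precision when written to an 8-bit target and the round trip in this double-precision sketch stays well below 1e-7. A value of exactly 1.0 wraps around to 0, which is one reason to keep stored depth strictly below the far plane.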

2. Next, let's implement the lighting calculation (steps 3, 4, 5 and 6)

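Next we start ray marching through the light volume, the white area in the scene figure. We march in the depth camera's clip space, which makes sampling the depth map convenient later. The march direction is the current pixel's position minus the camera position, and we take a fixed number of steps (the complete shader below uses 64). At each step we compute the light intensity and the occlusion. Volumetric lighting combines two effects: light scattering and light absorption.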
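A minimal sketch of where the samples land, assuming both points have already been transformed into the depth camera's clip space and divided by w (as beginPjPos and pjCamPos are in the shader), and assuming clip-space z spans [-1, 1] so that steps * delta covers a length of 2. Vec3, normalize and march_positions are hypothetical helpers for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;

Vec3 normalize(const Vec3& v) {
    double len = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    return {v[0] / len, v[1] / len, v[2] / len};
}

// Sample positions along the view ray.
// begin: surface point of the light volume being shaded,
// cam:   main camera position, both in the depth camera's clip space.
std::vector<Vec3> march_positions(const Vec3& begin, const Vec3& cam, int steps) {
    Vec3 dir = normalize({begin[0] - cam[0], begin[1] - cam[1], begin[2] - cam[2]});
    double delta = 2.0 / steps;  // same step size as delta = 2.0 / 64 in the shader
    std::vector<Vec3> out;
    for (int k = 0; k < steps; ++k) {
        out.push_back({begin[0] + dir[0] * k * delta,
                       begin[1] + dir[1] * k * delta,
                       begin[2] + dir[2] * k * delta});
    }
    return out;
}
```

Each sample is recomputed from the start point (curpos = beginPjPos + dir * k * delta), as in the shader, rather than accumulated, which avoids drift from repeated additions.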

First, light scattering.

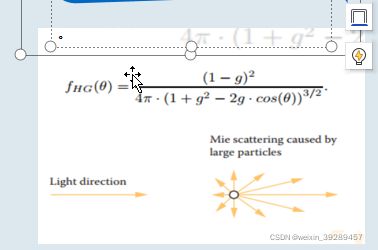

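When light from the source passes through a medium, usually dust and similar particles, it is reflected in all directions, and only part of it reaches the eye as the light we see. We simply call this light scattering. Here we use the Henyey-Greenstein phase function to compute the scattered intensity.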

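Its inputs are the dot product of the view-ray direction and the light direction, which in practice makes scattering strongest when looking into the light, and a tunable parameter g. g is the phase function's anisotropy (asymmetry) parameter, in the range (-1, 1): g = 0 scatters light equally in all directions, while a larger positive g concentrates more of the scattered light into a bright lobe around the forward direction and leaves less elsewhere. We won't derive the formula here.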
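For reference, a C++ sketch of the Henyey-Greenstein function with the same formula as hg() in the shader, p = (1 - g^2) / (4π(1 + g^2 - 2g·cosθ)^1.5), but with a full-precision π instead of 3.1415. The helper integrate_over_sphere is an illustrative addition that checks the function integrates to 1 over the sphere, and comparing the two directions shows that a positive g favors forward scattering.

```cpp
#include <cassert>
#include <cmath>

// Henyey-Greenstein phase function.
// cosTheta: cosine of the angle between the two directions,
// g: anisotropy in (-1, 1); g > 0 favors forward scattering.
double henyey_greenstein(double cosTheta, double g) {
    const double pi = std::acos(-1.0);
    double g2 = g * g;
    return (1.0 - g2) / (4.0 * pi * std::pow(1.0 + g2 - 2.0 * g * cosTheta, 1.5));
}

// Integrates the phase function over the unit sphere with the midpoint
// rule in cosTheta. A normalized phase function integrates to 1.
double integrate_over_sphere(double g, int n) {
    const double pi = std::acos(-1.0);
    double sum = 0.0, h = 2.0 / n;
    for (int i = 0; i < n; ++i) {
        double mu = -1.0 + (i + 0.5) * h;
        sum += henyey_greenstein(mu, g) * h;
    }
    return 2.0 * pi * sum;
}
```

Because the function is normalized, it only redistributes light by direction; in the shader the overall brightness is controlled separately by multiplying with _phaseParams.w.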

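The corresponding implementation is the hg() and phase() functions in the complete shader at the end of the article.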

Second, light absorption.

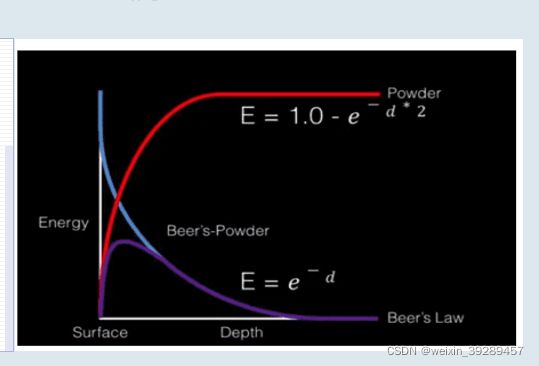

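For light absorption we use the Beer-Lambert law (Lambert-Beer Law). It is the basic law of light absorption and applies to all electromagnetic radiation and all absorbing substances, including gases, solids, liquids, molecules, atoms and ions. For volumetric light, it lets us compute transmittance reliably from the density of the medium.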

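• T = exp(-c*d): the transmitted intensity falls off exponentially as the medium density and the distance travelled by the light increase, where c is the density of the medium and d is the distance.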
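A minimal C++ sketch of the law. The second function illustrates why the attenuation can be applied step by step while marching: multiplying the transmittances of consecutive segments equals exp of the summed c*d terms. Both function names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Beer-Lambert transmittance: fraction of light left after travelling
// distance d through a medium of density c.
double transmittance(double c, double d) {
    return std::exp(-c * d);
}

// Path split into n segments with their own density c[i] and length d[i]:
// the product of per-segment factors equals exp(-sum of c[i] * d[i]).
double transmittance_segments(const double* c, const double* d, int n) {
    double t = 1.0;
    for (int i = 0; i < n; ++i) t *= transmittance(c[i], d[i]);
    return t;
}
```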

On top of this, we can add a volumetric fog effect.

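To create volumetric fog, we simulate the absorption of light by uneven media in the air, again applying the Beer-Lambert law. It needs two inputs: the depth and the medium density. For the density, we take the current marching position in the depth camera's space (in the shader, the result of ComputeScreenPos), offset it by a time-scaled value, and use it to sample a 3D noise texture, which gives a varying density. For the distance d in the formula we use the depth; the shader uses the normalized depth cdep * internalProjectionParams.w, while the shader uniform that is also named d is a density multiplier applied to the noise. Plugging both in gives the volumetric fog effect.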
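A sketch of the two pieces of the fog term as they appear in the shader: the scrolling noise coordinate and the per-step attenuation factor. The 3D noise texture itself is not modeled; noiseSample stands in for the red channel read from _noise3d (assumed to lie in [0, 1]), and all function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<double, 4>;

// Noise lookup coordinate as built in the shader:
// uvwShape = curpos + float4(s, s * 0.2, 0, 0), with s = time * m_noise_speed.
Vec4 scroll_uvw(const Vec4& curpos, double time, double noiseSpeed) {
    double s = time * noiseSpeed;
    return {curpos[0] + s, curpos[1] + s * 0.2, curpos[2], curpos[3]};
}

// Per-step fog factor, mirroring col *= exp(-noise * cdep * internalProjectionParams.w)
// with noise = shapeNoise.r * d.
// densityScale: the shader uniform d; depth01: cdep * internalProjectionParams.w.
double fog_step_factor(double noiseSample, double densityScale, double depth01) {
    return std::exp(-noiseSample * densityScale * depth01);
}
```

Scrolling x five times faster than y makes the fog drift mostly in one direction; the factor is applied to the accumulated color at every step, so denser noise and greater depth both darken the result.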

Next, handling occlusion.

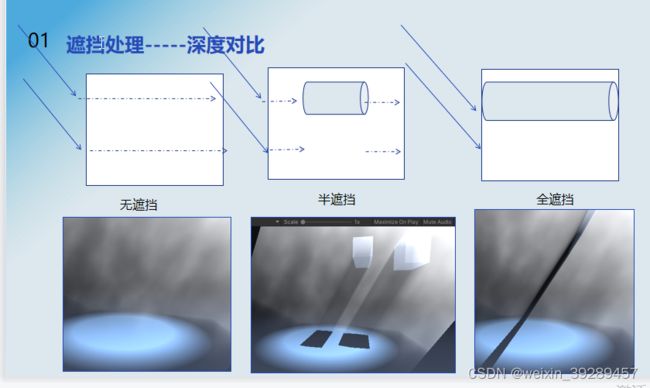

Next we compare depths to find the occluded regions.

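There are three occlusion cases: unoccluded, partially occluded and fully occluded, and as the figure shows they look different. Each pixel accumulates only the light from the samples along its view ray that are not occluded. To decide this, we compare the depth of the current marching sample with the depth recorded by the depth camera.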

Getting depth: the depth of the current step

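The depth of the current marching position is its z coordinate, but that z is in the depth camera's clip space (remapped to [-1, 1] after the divide by w), not in camera space, so we have to invert the projection to recover camera-space depth. For an orthographic projection this mapping is affine (linear) in z, unlike the non-linear depth of a perspective projection, so inverting it only takes a simple rearrangement. Let's first look at the orthographic projection matrix.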

Only the z row matters. Rearranging it to solve for depth gives the following expression.

Far and Near are the far and near clip distances.

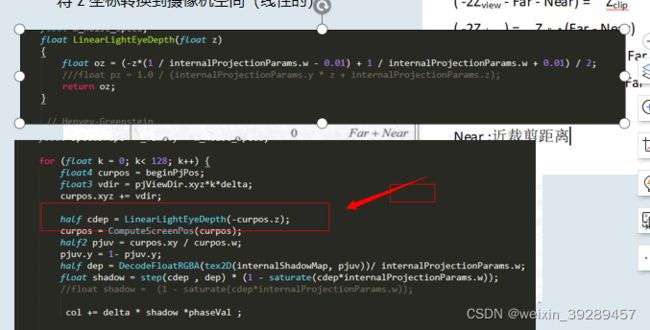

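In the shader, 1/w (w being internalProjectionParams.w, which stores 1/far) and the constant 0.01 are the far and near clip distances we set on the depth camera. The shader calls LinearLightEyeDepth(-curpos.z) and the function negates its argument again, so the net result is d = (z*(Far - Near) + Far + Near) / 2, a positive value: the camera-space depth of the current marching position.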
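Assuming the OpenGL-style convention the shader relies on (NDC z in [-1, 1], Near mapped to -1 and Far to +1), the orthographic mapping from eye depth d is z_ndc = (2d - (Far + Near)) / (Far - Near), and solving for d gives d = (z_ndc*(Far - Near) + Far + Near) / 2, the same expression as LinearLightEyeDepth. A C++ sketch with a round-trip check; the function names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Forward mapping: eye depth d (Near <= d <= Far) to NDC z in [-1, 1]
// for an OpenGL-style orthographic projection.
double ortho_ndc_z(double d, double n, double f) {
    return (2.0 * d - (f + n)) / (f - n);
}

// Inverse mapping, equivalent to LinearLightEyeDepth(-z) in the shader
// with f = 1 / internalProjectionParams.w and n = 0.01.
double ortho_eye_depth(double ndcZ, double n, double f) {
    return (ndcZ * (f - n) + f + n) / 2.0;
}
```

Because the mapping is affine, equal steps in clip-space z correspond to equal steps in eye depth, which is what lets the shader march in clip space with a constant delta.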

Camera-space depth from the depth map

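We pass the depth map rendered by the depth camera (the lower-left corner of the screenshot) into the shader and sample it. We call ComputeScreenPos() with the clip-space position to get a screen position, divide by w to get texture coordinates, and decode the stored depth, which lies in the 0-1 range; multiplying it by far brings it back to a camera-space depth between 0 and far.

The code divides by internalProjectionParams.w instead of multiplying by far because that variable stores 1/far, so the two are equivalent.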
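A sketch of the UV and depth arithmetic, assuming the position has already been divided by w and that _ProjectionParams.x is 1 (no projection flip), in which case ComputeScreenPos(p).xy / w reduces to p.xy * 0.5 + 0.5. On platforms where _ProjectionParams.x is -1, ComputeScreenPos already flips y and the manual flip may need revisiting. The names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<double, 2>;

// Clip-space xy (already divided by w) -> depth-map UV,
// including the shader's manual flip pjuv.y = 1 - pjuv.y.
Vec2 ndc_to_shadow_uv(double x, double y) {
    Vec2 uv = {x * 0.5 + 0.5, y * 0.5 + 0.5};
    uv[1] = 1.0 - uv[1];
    return uv;
}

// The depth map stores depth / far; dividing by internalProjectionParams.w
// (= 1 / far) turns it back into camera-space depth.
double depth_map_to_eye_depth(double depth01, double invFar) {
    return depth01 / invFar;
}
```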

Comparing against the depth map

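With the marching depth cdep and the depth-map depth dep known, we call step(cdep, dep). It returns 1 when the sample is no farther from the light than the nearest occluder recorded by the depth camera (lit) and 0 when it lies behind that occluder (occluded). The lit value is further scaled by 1 - saturate(cdep / far), so the light fades with distance from the light. An unoccluded, partially occluded or fully occluded ray therefore sums all, some or none of these per-step values. Finally we multiply by the phase value to get the volumetric light.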
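A sketch of the shadow term and its accumulation along one ray, using made-up sample depths to reproduce the three occlusion cases described earlier. step_fn and saturate re-implement the HLSL intrinsics; the sample values are illustrative only, and the fog factor is left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// HLSL step(a, b): 1 if b >= a, else 0.
double step_fn(double a, double b) { return b >= a ? 1.0 : 0.0; }
double saturate(double x) { return x < 0.0 ? 0.0 : (x > 1.0 ? 1.0 : x); }

// shadow = step(cdep, dep) * (1 - saturate(cdep * invFar))
// cdep: eye depth of the marching sample, dep: eye depth stored in the depth map.
double shadow_term(double cdep, double dep, double invFar) {
    return step_fn(cdep, dep) * (1.0 - saturate(cdep * invFar));
}

// col += delta * shadow * phase over all samples of one ray (no fog factor).
double accumulate(const std::vector<double>& cdeps, const std::vector<double>& deps,
                  double invFar, double phase) {
    double delta = 2.0 / cdeps.size();
    double col = 0.0;
    for (std::size_t k = 0; k < cdeps.size(); ++k)
        col += delta * shadow_term(cdeps[k], deps[k], invFar) * phase;
    return col;
}
```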

Code (excerpt):

```hlsl
half cdep = LinearLightEyeDepth(-curpos.z);
curpos = ComputeScreenPos(curpos);
half2 pjuv = curpos.xy / curpos.w;
pjuv.y = 1 - pjuv.y;
half dep = DecodeFloatRGBA(tex2D(internalShadowMap, pjuv)) / internalProjectionParams.w;
float shadow = step(cdep, dep) * (1 - saturate(cdep * internalProjectionParams.w));
//float shadow = (1 - saturate(cdep*internalProjectionParams.w));
col += delta * shadow * phaseVal;
```

The complete code is as follows.

Volumetric light shader

```hlsl
Shader "Unit/VolumetricLight"
{
    Properties
    {
    }
    SubShader
    {
        Tags { "RenderType" = "Transparent" "Queue" = "Transparent" "IgnoreProjector"="true" }
        LOD 100

        Pass
        {
            zwrite off
            blend srcalpha one
            colormask rgb

            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #pragma multi_compile_fog
            #pragma multi_compile __ USE_COOKIE
            #pragma multi_compile VOLUMETRIC_LIGHT_QUALITY_LOW VOLUMETRIC_LIGHT_QUALITY_MIDDLE VOLUMETRIC_LIGHT_QUALITY_HIGH
            #include "UnityCG.cginc"

            #if VOLUMETRIC_LIGHT_QUALITY_LOW
            #define RAY_STEP 16
            #elif VOLUMETRIC_LIGHT_QUALITY_MIDDLE
            #define RAY_STEP 32
            #elif VOLUMETRIC_LIGHT_QUALITY_HIGH
            #define RAY_STEP 64
            #endif

            struct appdata
            {
                float4 vertex : POSITION;
                float3 color : COLOR;
            };

            struct v2f
            {
                UNITY_FOG_COORDS(0)
                float4 vertex : SV_POSITION;
                float4 viewPos : TEXCOORD1;     // world-space position of the volume surface
                float4 viewCamPos : TEXCOORD2;  // world-space position of the rendering camera
                float3 vcol : COLOR;
            };

            uniform float4 internalWorldLightColor;
            uniform float4 internalWorldLightPos;
            sampler2D internalShadowMap;
            #ifdef USE_COOKIE
            sampler2D internalCookie;
            #endif
            float4x4 internalWorldLightVP;
            float4 internalProjectionParams;   // .w = 1 / far of the depth camera
            float4x4 internalWorldLightMV;
            float4 m_InternalLightPosID;
            float4 _phaseParams;               // .x = g, .w = phase intensity
            float d;                           // fog density multiplier
            sampler3D _noise3d;
            float m_noise_speed;

            // Orthographic NDC z -> eye depth of the depth camera,
            // with near = 0.01 and far = 1 / internalProjectionParams.w.
            float LinearLightEyeDepth(float z)
            {
                float oz = (-z * (1 / internalProjectionParams.w - 0.01) + 1 / internalProjectionParams.w + 0.01) / 2;
                ///float pz = 1.0 / (internalProjectionParams.y * z + internalProjectionParams.z);
                return oz;
            }

            // Henyey-Greenstein
            float hg(float a, float g) {
                float g2 = g * g;
                return (1 - g2) / (4 * 3.1415 * pow(1 + g2 - 2 * g * (a), 1.5));
            }

            float phase(float a) {
                float hgBlend = hg(a, _phaseParams.x);
                return hgBlend * _phaseParams.w;
            }

            v2f vert (appdata v)
            {
                v2f o;
                o.vertex = UnityObjectToClipPos(v.vertex);
                UNITY_TRANSFER_FOG(o, o.vertex);
                //o.viewPos = float4(v.vertex.xyz, 1);
                o.viewPos = mul(unity_ObjectToWorld, float4(v.vertex.xyz, 1));
                o.viewCamPos = float4(_WorldSpaceCameraPos.xyz, 1);
                o.vcol = v.color;
                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                // Note: RAY_STEP is defined above, but the march hard-codes 64 steps.
                // Assuming clip-space z spans [-1, 1], 64 steps of 2/64 cover the whole range.
                float delta = 2.0 / 64;
                float col = 0;

                // Surface point -> depth camera clip space.
                float4 beginPjPos = mul(internalWorldLightMV, i.viewPos);
                beginPjPos = mul(internalWorldLightVP, beginPjPos);
                beginPjPos /= beginPjPos.w;

                // Camera position -> depth camera clip space.
                float4 pjCamPos = mul(internalWorldLightMV, i.viewCamPos);
                pjCamPos = mul(internalWorldLightVP, pjCamPos);
                pjCamPos /= pjCamPos.w;

                // March direction: from the camera through the current pixel.
                float3 pjViewDir = normalize(beginPjPos.xyz - pjCamPos.xyz);

                float4 pjLightPos = mul(internalWorldLightMV, internalWorldLightPos);
                pjLightPos = mul(internalWorldLightVP, pjLightPos);
                // float4 pjLightPos = mul(internalWorldLightVP, internalWorldLightPos);

                // Phase-function input (pjLightPos.xyz is not normalized here).
                float cosAngle = dot(pjViewDir, pjLightPos.xyz);
                float phaseVal = phase(cosAngle);
                float speedShape = _Time.y * m_noise_speed;

                for (float k = 0; k < 64; k++) {
                    float4 curpos = beginPjPos;
                    float3 vdir = pjViewDir.xyz * k * delta;
                    curpos.xyz += vdir;

                    // Occlusion test against the depth map.
                    half cdep = LinearLightEyeDepth(-curpos.z);
                    curpos = ComputeScreenPos(curpos);
                    half2 pjuv = curpos.xy / curpos.w;
                    pjuv.y = 1 - pjuv.y;
                    half dep = DecodeFloatRGBA(tex2D(internalShadowMap, pjuv)) / internalProjectionParams.w;
                    float shadow = step(cdep, dep) * (1 - saturate(cdep * internalProjectionParams.w));
                    //float shadow = (1 - saturate(cdep*internalProjectionParams.w));
                    col += delta * shadow * phaseVal;

                    // Volumetric fog: Beer-Lambert attenuation with 3D-noise density.
                    float4 uvwShape = curpos + float4(speedShape, speedShape * 0.2, 0, 0);
                    float4 shapeNoise = tex3Dlod(_noise3d, uvwShape);
                    float noise = shapeNoise.r * d;
                    col *= exp(-noise * cdep * internalProjectionParams.w);
                }

                //col = col *2 ;
                return fixed4(col, col, col, 1);
                // return shapeNoise;
            }
            ENDCG
        }
    }
}
```

Volumetric light control script (C#)

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
```


Summary


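That's all for this share on volumetric light; feedback and discussion are welcome. I plan to start a series on basic computer graphics concepts, so stay tuned for future updates.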