Simulating Real-Time Data Transfer with Spring Boot, WebSocket, and Kafka

Contents

- Simulating Real-Time Data Transfer with Spring Boot, WebSocket, and Kafka

- Environment Setup

- Reading the Data Source

- WebSocket Server

- Web Page

- Startup


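Environment Setup

Environment:

- Local Spark version: 3.0.0

- Scala version: 2.12.10

- Kafka version: kafka_2.12-2.4.0

- sbt version: 1.8.2

Make sure the required environment variables are set.

A local file, goods-input.csv, serves as the data source. The file is GBK-encoded.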

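Reading the Data Source

The first step is to read the file and send its contents to Kafka.

Set up the project structure under the Spark installation directory: ./mycode/producer/src/main/scala/

Note: copy the jar files from the libs folder of the Kafka installation directory into the jars folder of the Spark installation directory.

The reader is written in Scala. Create SalesProducer.scala in the scala directory: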

import org.apache.hadoop.io.{LongWritable, Text}

import org.apache.hadoop.mapred.TextInputFormat

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import java.util.Properties

import java.util.concurrent.TimeUnit

object SalesProducer {

private val KAFKA_TOPIC = "sales"

def main(args: Array[String]): Unit = {

val props = new Properties()

props.put("bootstrap.servers", "localhost:9092")

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer")

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer")

val sparkConf = new SparkConf().setAppName("Sales").setMaster("local[2]")

val sc = new SparkContext(sparkConf)

val producer = new KafkaProducer[String, String](props)

val filePath = "file:///...../goods-input.csv"

//read the local file with hadoopFile

val fileRDD: RDD[String] = sc

.hadoopFile(filePath, classOf[TextInputFormat], classOf[LongWritable], classOf[Text])

.map(p => {

//decode as GBK; only the first getLength bytes of the Text buffer are valid

new String(p._2.getBytes, 0, p._2.getLength, "GBK")

})

//send each line to Kafka

fileRDD.collect.foreach(line => {

System.out.println("send: " + line);

producer.send(new ProducerRecord[String, String](KAFKA_TOPIC, line))

//wait 100 ms between sends

TimeUnit.MILLISECONDS.sleep(100)

})

producer.close()

}

}
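The GBK decoding step is easy to get wrong, so here is a small standalone sketch of it in plain Java (JDK only, no Spark or Hadoop). It assumes the JDK build includes the GBK charset, which standard builds do. The class name GbkDecodeSketch and the padded buffer are made up for illustration; the padding imitates a reused backing array that is longer than the current line, which is why the decode is limited to the valid length.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class GbkDecodeSketch {
    // Assumption: the running JDK provides the GBK charset.
    static final Charset GBK = Charset.forName("GBK");

    /** Decodes only the first `length` bytes of `buffer` as GBK. */
    static String decode(byte[] buffer, int length) {
        return new String(buffer, 0, length, GBK);
    }

    public static void main(String[] args) {
        // Two CJK characters (U+5546, U+54C1) followed by ",100"; made-up sample data.
        String original = "\u5546\u54c1,100";
        byte[] line = original.getBytes(GBK);

        // Imitate a reused buffer that is longer than the current line.
        byte[] padded = Arrays.copyOf(line, line.length + 4);

        System.out.println(decode(padded, line.length).equals(original));
        // Reading the same bytes as UTF-8 does not give back the original text.
        System.out.println(new String(line, StandardCharsets.UTF_8).equals(original));
    }
}
```

Decoding the whole padded array instead of the first line.length bytes would append stray characters to the line, which is the problem the length argument avoids.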

Next, write a consumer to check that the producer sends data correctly:

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.rdd.RDD

import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe

import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent

import org.apache.spark.streaming.kafka010.{HasOffsetRanges, KafkaUtils}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, SparkContext}

object SalesConsumer {

private val KAFKA_TOPIC = "sales"

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf().setAppName("Sales").setMaster("local[2]")

val sc = new SparkContext(sparkConf)

sc.setLogLevel("ERROR")

val ssc = new StreamingContext(sc, Seconds(10))

ssc.checkpoint("file:///...../checkpoint")

val kafkaParams = Map[String, Object](

"bootstrap.servers" -> "localhost:9092",

"key.deserializer" -> classOf[StringDeserializer],

"value.deserializer" -> classOf[StringDeserializer],

"group.id" -> "sales",

"auto.offset.reset" -> "latest",

"enable.auto.commit" -> (true: java.lang.Boolean)

)

val topics = Array(KAFKA_TOPIC)

val stream = KafkaUtils.createDirectStream[String, String](

ssc,

PreferConsistent,

Subscribe[String, String](topics, kafkaParams)

)

stream.foreachRDD(rdd => {

val offsetRange = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

val mapped: RDD[(String, String)] = rdd.map(record => (record.key, record.value))

val lines = mapped.map(_._2)

//retain the second element but ignore the first element

lines.foreach(println)

})

ssc.start

ssc.awaitTermination

}

}

Then create build.sbt in the producer directory.

It lists the dependencies the source files use. The versions must match the packages installed locally.

name := "Sales"

version := "1.0"

scalaVersion := "2.12.10"

libraryDependencies += "org.apache.spark" %% "spark-core" % "3.0.0"

libraryDependencies += "org.apache.spark" %% "spark-streaming" % "3.0.0" % "provided"

libraryDependencies += "org.apache.spark" %% "spark-streaming-kafka-0-10" % "3.0.0"

libraryDependencies += "org.apache.kafka" % "kafka-clients" % "2.6.0"

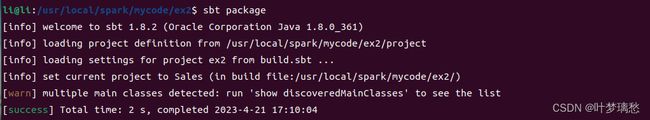

In the directory that contains build.sbt, run:

sbt package

Once packaging succeeds, submit the jar to Spark.

The packaged jar is in the

./target/scala-xxx/ directory.

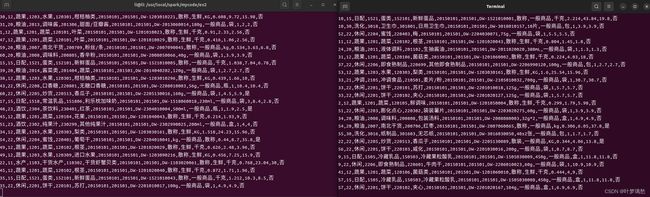

gnome-terminal -- bash -c "spark-submit --class SalesConsumer ./target/scala-2.12/sales_2.12-1.0.jar localhost:9092"

spark-submit --class SalesProducer ./target/scala-2.12/sales_2.12-1.0.jar localhost:9092

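If both the original window (producer) and the newly opened window (consumer) keep printing data, the test succeeded.

If the original window prints a record only after long pauses and the new window prints nothing, check whether Kafka is running properly.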
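One quick way to check whether anything is listening on the broker address is a plain TCP connect. The sketch below is an illustration using only the JDK; the class name BrokerPortCheck and the one-second timeout are assumptions, and localhost:9092 is the bootstrap address used in this article. A successful connect only shows that the port is open, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class BrokerPortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host: treat all as unreachable.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("Kafka port reachable: " + isReachable("localhost", 9092, 1000));
    }
}
```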

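WebSocket Server

Implement a simple server with Spring Boot and WebSocket.

Dependencies in pom.xml:

Adjust the versions to match your own setup. Note that the classes below import javax.websocket, which corresponds to Spring Boot 2.x; Spring Boot 3.x uses the jakarta.websocket namespace instead.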

<dependencies>

    <dependency>
        <groupId>javax.websocket</groupId>
        <artifactId>javax.websocket-api</artifactId>
        <version>1.1</version>
    </dependency>

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-websocket</artifactId>
        <version>3.0.0</version>
    </dependency>

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming_2.12</artifactId>
        <version>3.0.0</version>
    </dependency>

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.4.0</version>
    </dependency>

    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming-kafka-0-10_2.12</artifactId>
        <version>3.0.0</version>
    </dependency>

</dependencies>

First, implement a WebSocket endpoint that acts as the server:

package org.example;

import org.springframework.stereotype.Component;

import javax.websocket.*;

import javax.websocket.server.ServerEndpoint;

import java.io.IOException;

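import java.util.List;

import java.util.concurrent.CopyOnWriteArrayList;

@ServerEndpoint("/sales")

@Component

public class WebSocket {

private Session session;

//shared with the Kafka consumer thread, so use a thread-safe list

public static List<WebSocket> list = new CopyOnWriteArrayList<>();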

@OnOpen

public void onOpen(Session session){

System.out.println("Session " + session.getId() + " has opened a connection");

try {

this.session = session;

//keep the instance so it can be reached quickly when broadcasting

list.add(this);

sendMessage("Connection Established");

} catch (IOException ex) {

ex.printStackTrace();

}

}

@OnMessage

public void onMessage(String message, Session session){

System.out.println("Message from " + session.getId() + ": " + message);

}

@OnClose

public void onClose(Session session){

System.out.println("Session " + session.getId() +" has closed!");

list.remove(this); //remove this instance

}

@OnError

public void onError(Session session, Throwable t) {

t.printStackTrace();

}

public void sendMessage(String msg) throws IOException {

try {

this.session.getBasicRemote().sendText(msg);

} catch (IllegalStateException e) {

e.printStackTrace();

}

}

}
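The static list of endpoint instances is modified by the WebSocket container threads (open and close) and read by whichever thread broadcasts Kafka records. A minimal sketch of a registry that tolerates this, using only the JDK: BroadcastRegistry and the Consumer<String> stand-ins for connected clients are hypothetical names for illustration, not part of the project code.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

final class BroadcastRegistry {
    // Iteration runs over a snapshot, so register/unregister calls from other
    // threads cannot cause ConcurrentModificationException during a broadcast.
    private final List<Consumer<String>> clients = new CopyOnWriteArrayList<>();

    void register(Consumer<String> client) {
        clients.add(client);
    }

    void unregister(Consumer<String> client) {
        clients.remove(client);
    }

    /** Sends msg to every client and returns the number of successful deliveries. */
    int broadcast(String msg) {
        int delivered = 0;
        for (Consumer<String> client : clients) {
            try {
                client.accept(msg);
                delivered++;
            } catch (RuntimeException e) {
                // One failing client should not stop delivery to the others.
                System.err.println("delivery failed: " + e.getMessage());
            }
        }
        return delivered;
    }
}
```

CopyOnWriteArrayList copies the backing array on every write, which suits this case: connections open and close rarely, while broadcasts iterate often.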

Implement a consumer that continuously reads data from Kafka. It extends Thread so that it can read in the background.

package org.example;

import org.apache.kafka.clients.consumer.*;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.io.IOException;

import java.net.InetAddress;

import java.net.UnknownHostException;

import java.time.Duration;

import java.util.Collections;

import java.util.Properties;

public class SaleConsumer extends Thread {

private KafkaConsumer<String, String> kafkaConsumer;

@Override

public void run() {

Properties properties = new Properties();

try {

properties.put("client.id", InetAddress.getLocalHost().getHostName());

} catch (UnknownHostException e) {

e.printStackTrace();

}

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "sales");

kafkaConsumer = new KafkaConsumer<>(properties);

kafkaConsumer.subscribe(Collections.singletonList("sales"));// subscribe message from producer via kafka

while (true) {

ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : records) {

System.out.println("receive from kafka: " + record.value());

//send to every WebSocket currently connected to the server

for (WebSocket webSocket : WebSocket.list) {

try {

webSocket.sendMessage(record.value());

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

}

}
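The loop above runs forever, which is fine for a demo but leaves no clean way to stop the thread. The sketch below shows one possible shape for a stoppable polling loop using only the JDK. It is not Kafka code: a BlockingQueue stands in for the Kafka consumer and a Consumer<String> stands in for the WebSocket fan-out, and the class name StoppablePoller is hypothetical. With a real KafkaConsumer, wakeup() is the method intended for interrupting a blocked poll from another thread.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class StoppablePoller implements Runnable {
    private final BlockingQueue<String> source; // stand-in for the Kafka consumer
    private final Consumer<String> sink;        // stand-in for the WebSocket fan-out
    private volatile boolean running = true;

    StoppablePoller(BlockingQueue<String> source, Consumer<String> sink) {
        this.source = source;
        this.sink = sink;
    }

    /** Asks the loop to exit after its current poll returns. */
    void stop() {
        running = false;
    }

    @Override
    public void run() {
        while (running) {
            try {
                // Bounded wait, comparable to poll(Duration.ofMillis(100)).
                String record = source.poll(100, TimeUnit.MILLISECONDS);
                if (record != null) {
                    sink.accept(record);
                }
            } catch (InterruptedException e) {
                // Restore the interrupt flag and leave the loop.
                Thread.currentThread().interrupt();
                return;
            }
        }
    }
}
```

Because each poll waits at most 100 ms, the thread notices the stop request within roughly that time.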

Write the configuration class WebSocketConfig:

package org.example;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.web.socket.config.annotation.EnableWebSocket;

import org.springframework.web.socket.server.standard.ServerEndpointExporter;

@Configuration

@EnableWebSocket

public class WebSocketConfig {

//registers classes annotated with @ServerEndpoint

@Bean

public ServerEndpointExporter serverEndpoint() {

return new ServerEndpointExporter();

}

}

Finally, write the application class SaleApplication:

package org.example;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.web.socket.config.annotation.EnableWebSocket;

@SpringBootApplication(exclude = {

org.springframework.boot.autoconfigure.gson.GsonAutoConfiguration.class,

})

@EnableWebSocket

public class SaleApplication {

public static void main(String[] args) {

SaleConsumer consumer = new SaleConsumer();

consumer.start();

SpringApplication.run(SaleApplication.class, args);

}

}

Web Page

Write a web page that receives the data sent by the server, processes it, and displays it with Highcharts.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>Realtime Sales Data</title>

<script src="./static/js/jquery-3.1.1.min.js"></script>

<script src="https://code.highcharts.com/highcharts.js"></script>

<script src="./static/js/exporting.js"></script>

<style>

.container {

width: 1200px;

height: 600px;

}

#container2 {

margin-top: 20px;

}

</style>

</head>

<body>

<div>

<b>SalesCount: </b><b id="sale_count" style="color: cornflowerblue;">0</b><br/>

<b>SalesAmount: </b><b id="sale_amount" style="color: cornflowerblue;">0</b><br/>

<b>SalesDate: </b><b id="sale_date" style="color: cornflowerblue;"></b><br/>

</div>

<div class="container" id="container1"></div>

<hr style="color: aliceblue">

<div class="container" id="container2"></div>

<script type="text/javascript" charset="utf-8">

$(document).ready(function () {

Highcharts.setOptions({

global: {

useUTC: false

}

});

let temp = undefined, timestamp = 0;

const saleChart = Highcharts.chart('container1', {

chart: {

type: 'line',

animation: Highcharts.svg, // use SVG animate

events: {

load: function() {

let series = this.series[0]

setInterval(function () {

let saleAmount = parseFloat($("#sale_amount").text());

let saleDate = $("#sale_date").text();

if (temp !== saleDate) {

temp = saleDate;

timestamp = 0;

} else {

timestamp += 10

}

let date = new Date(saleDate.replace(/^(\d{4})(\d{2})(\d{2})$/, "$1-$2-$3")).getTime() + timestamp

if (series.points.length > 15) series.addPoint([date, saleAmount], true, true);

else series.addPoint([date, saleAmount], true);

}, 666)

}

}

},

title: {

text: 'Shopping Sales'

},

xAxis: {

type: 'datetime',

labels: {

formatter: function() {

return Highcharts.dateFormat('%Y-%m-%d', this.value);

}

}

},

yAxis: {

title: {

text: 'Amount'

},

plotLines: [{

value: 0,

width: 1,

color: '#808080'

}]

},

tooltip: {

formatter: function () {

return 'Date: ' + Highcharts.dateFormat('%Y-%m-%d', this.x) + '<br/>' +

'Amount: ' + Highcharts.numberFormat(this.y, 2);

}

},

legend: {

enabled: true

},

exporting: {

enabled: true

},

series: [{

name: 'Amount',

}]

});

let categories = []

let cateData = []

const cateChart = Highcharts.chart('container2', {

chart: {

type: 'column',

animation: Highcharts.svg, // use SVG animate

events: {

load: function() {

let series = this.series[0];

setInterval(() => {

cateData.forEach((value, index) => {

series.points[index].update(value);

})

}, 1500)

}

}

},

title: {

text: 'Sales By Category'

},

xAxis: {

categories: categories,

title: {

text: 'Category Name'

}

},

yAxis: {

data: cateData,

title: {

text: 'Amount'

},

plotLines: [{

value: 0,

width: 1,

color: '#808080'

}]

},

tooltip: {

formatter: function () {

return 'Category: ' + this.x + '<br/>' +

'Amount: ' + Highcharts.numberFormat(this.y, 2);

}

},

legend: {

enabled: true

},

exporting: {

enabled: true

},

series: [{

name: 'Amount',

}]

});

var websocket = null;

var saleCount = 0;

var saleAmount = 0;

// check whether the browser supports WebSocket

if ('WebSocket' in window) {

//connect to the Spring Boot server

websocket = new WebSocket("ws://localhost:8080/sales");

} else {

alert('WebSocket is not supported')

}

if (websocket != null) {

//call it when websocket occurred error

websocket.onerror = (error) => {

console.log("error: ", error);

};

//call it when websocket connected successfully

websocket.onopen = (event) => {

console.log("client opened");

}

//call it when websocket receive message

websocket.onmessage = (event) => {

setMessageInnerHTML(event.data);

}

//call it when websocket close

websocket.onclose = () => {

console.log("client closed");

}

window.onbeforeunload = () => {

closeWebSocket()

}

function setMessageInnerHTML(data) {

//pre-handle the primitive string

const arr = data.split(',');

const d = {

"customerCode": arr[0],

"categoryCode": arr[1],

"categoryName": arr[2],

"subCategoryCode": arr[3],

"subCategoryName": arr[4],

"productCode": arr[5],

"productName": arr[6],

"saleDate": arr[7],

"saleMonth": arr[8],

"goodsCode": arr[9],

"specification": arr[10],

"productType": arr[11],

"unit": arr[12],

"saleQuantity": arr[13],

"saleAmount": arr[14],

"unitPrice": arr[15],

"isPromotion": arr[16]

};

//update the displayed data

if (d.saleAmount !== undefined) {

saleCount += 1;

$("#sale_count").html(saleCount)

saleAmount += parseFloat(d.saleAmount)

$("#sale_amount").html(Math.round(saleAmount * 100) / 100)

$("#sale_date").html(d.saleDate)

let index = categories.indexOf(d.categoryName)

// the category has not been seen yet

if (index === -1) {

categories.push(d.categoryName);

cateData.push(parseFloat(d.saleAmount));

cateChart.series[0].addPoint({name: d.categoryName, y: parseFloat(d.saleAmount)}, true);

} else {

//update amount of the existed category

cateData[index] += Math.round(parseFloat(d.saleAmount) * 100) / 100;

}

}

}

//do something when close websocket

function closeWebSocket() {

websocket.close();

}

}

});

</script>

</body>

</html>
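The page's message handler splits each record on commas, reads the category name from column 2 and the sale amount from column 14, and keeps a running count, a running total, and a per-category total. The same bookkeeping can be sketched in plain Java, for example to test the aggregation away from the browser. SalesAggregator is a hypothetical class name, the column positions are taken from the field mapping in the script, and, unlike the page, this sketch skips lines whose amount is not numeric (such as a header row, if the file has one).

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class SalesAggregator {
    // Column positions follow the field mapping used by the page script.
    private static final int CATEGORY_NAME = 2;
    private static final int SALE_AMOUNT = 14;

    private int saleCount = 0;
    private double saleAmount = 0.0;
    // Insertion order is kept so categories appear in first-seen order.
    private final Map<String, Double> amountByCategory = new LinkedHashMap<>();

    /** Folds one comma-separated line into the totals; returns false if the line is skipped. */
    boolean accept(String line) {
        String[] fields = line.split(",", -1);
        if (fields.length <= SALE_AMOUNT) {
            return false; // too few columns
        }
        double amount;
        try {
            amount = Double.parseDouble(fields[SALE_AMOUNT].trim());
        } catch (NumberFormatException e) {
            return false; // non-numeric amount
        }
        saleCount++;
        saleAmount += amount;
        amountByCategory.merge(fields[CATEGORY_NAME], amount, Double::sum);
        return true;
    }

    int saleCount() { return saleCount; }

    double saleAmount() { return saleAmount; }

    Map<String, Double> amountByCategory() { return amountByCategory; }
}
```

Feeding it each record.value() received from Kafka would give the same three figures the page shows: count, total amount, and amount per category.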

Startup

The implementation works.
