[GAM] "Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions"

arXiv-2021

Contents

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

- 5 Experiments


  - 5.1 Datasets and Metrics

  - 5.2 Classification on CIFAR-100 and ImageNet datasets

  - 5.3 Ablation studies

- 6 Conclusion (own)

1 Background and Motivation

Attention is a very effective way to improve accuracy, but the prior methods overlooked the significance of retaining information on both the channel and spatial aspects to enhance cross-dimension interactions.

The authors propose the Global Attention Mechanism (GAM), an attention module that strengthens channel-spatial interactions.

2 Related Work

- SENet

- CBAM

- BAM

- TAM: Triplet Attention Module (attends over channel, height, and width)

GAM likewise aims at capturing significant features across all three dimensions.

3 Advantages / Contributions

- Proposes the GAM attention module, which further enhances channel-spatial interactions

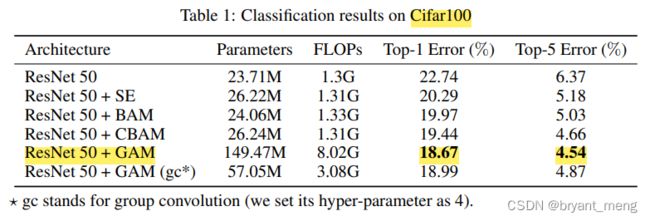

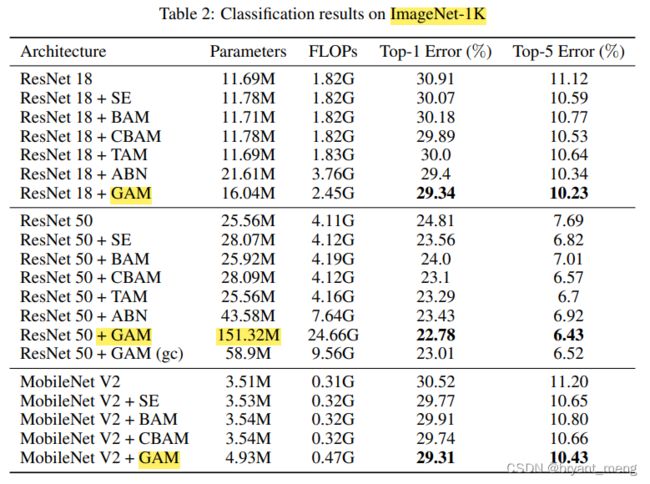

- Validates its effectiveness on CIFAR-100 and ImageNet-1K

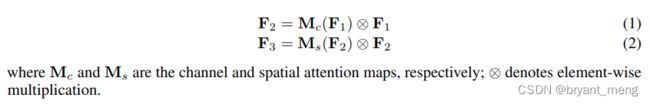

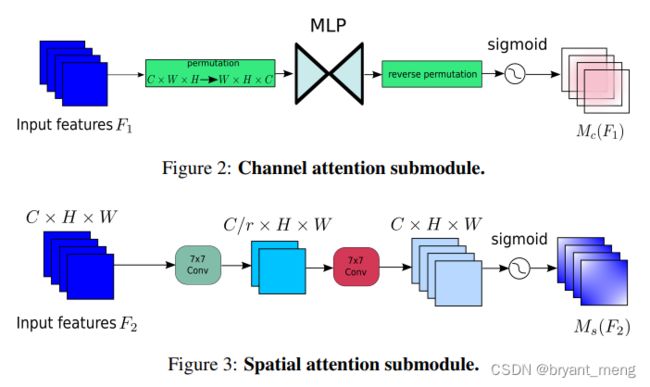

4 Method

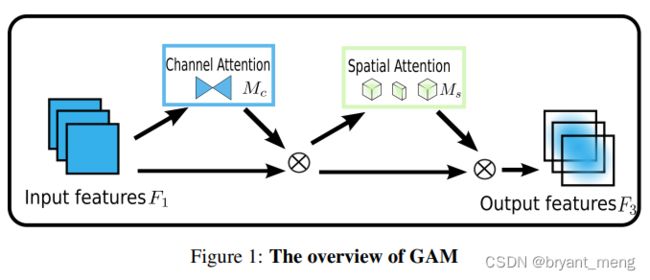

Overall pipeline

Simple and clear: at a glance the overall pipeline looks the same as CBAM ([Attention] "CBAM: Convolutional Block Attention Module"), and so do the formulas.
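For reference, a sketch of that shared sequential formulation, written with the usual CBAM-style symbols: input feature map $F_1 \in \mathbb{R}^{C \times H \times W}$, intermediate state $F_2$, output $F_3$, channel attention map $M_c$, spatial attention map $M_s$, and $\otimes$ for element-wise multiplication (channel attention first, then spatial attention):

$$
F_2 = M_c(F_1) \otimes F_1, \qquad F_3 = M_s(F_2) \otimes F_2
$$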

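The difference is inside the two submodules:

- The channel attention retains H and W: instead of pooling over the spatial dimensions as CBAM does, it permutes the feature map and applies a two-layer MLP to the channel vector at every spatial position.

- The spatial attention retains C: instead of pooling over channels as CBAM does, it applies two 7×7 convolutions to the full feature map.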
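A minimal shape-only sketch of where each design collapses information, assuming toy sizes (B=2, C=64, H=W=8, rate 4) and random, untrained layers. The CBAM side follows CBAM's average/max pooling; the GAM side is simplified (no BatchNorm, no grouping). All variable names are illustrative.

```python
import torch
from torch import nn

torch.manual_seed(0)
B, C, H, W, rate = 2, 64, 8, 8, 4  # toy sizes (assumed)
x = torch.randn(B, C, H, W)
mlp = nn.Sequential(nn.Linear(C, C // rate), nn.ReLU(), nn.Linear(C // rate, C))

# CBAM-style channel attention: pool away H and W, one weight per channel
cbam_channel = torch.sigmoid(
    mlp(x.mean(dim=(2, 3))) + mlp(x.amax(dim=(2, 3)))
).view(B, C, 1, 1)

# GAM-style channel attention: the MLP runs on the channel vector at every (h, w)
gam_channel = mlp(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

# CBAM-style spatial attention input: pool away C (mean and max over channels)
cbam_spatial_in = torch.cat(
    [x.mean(dim=1, keepdim=True), x.amax(dim=1, keepdim=True)], dim=1
)

# GAM-style spatial attention (simplified): 7x7 convs over all C channels
gam_spatial = nn.Sequential(
    nn.Conv2d(C, C // rate, kernel_size=7, padding=3),
    nn.ReLU(),
    nn.Conv2d(C // rate, C, kernel_size=7, padding=3),
)(x).sigmoid()

print(tuple(cbam_channel.shape), tuple(gam_channel.shape))     # (2, 64, 1, 1) (2, 64, 8, 8)
print(tuple(cbam_spatial_in.shape), tuple(gam_spatial.shape))  # (2, 2, 8, 8) (2, 64, 8, 8)
```

The point is only where information is collapsed: the CBAM tensors have a size-1 or size-2 axis, while the GAM maps keep the full C×H×W shape.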

Because the spatial attention adds a large number of parameters, it introduces channel groups (group convolution) and channel shuffle.
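A small sketch of why the grouping helps and what the shuffle does, assuming c1 = 64 and rate 4 as toy values; `n_params` is a hypothetical helper. The `channel_shuffle` here uses the same reshape-permute trick as the one in the implementation below.

```python
import torch
from torch import nn


def n_params(module):
    # Hypothetical helper: total number of learnable parameters
    return sum(p.numel() for p in module.parameters())


c1, rate = 64, 4  # toy values (assumed)
# First 7x7 conv of the spatial branch, without and with channel groups
dense = nn.Conv2d(c1, c1 // rate, kernel_size=7, padding=3)
grouped = nn.Conv2d(c1, c1 // rate, kernel_size=7, padding=3, groups=rate)
print(n_params(dense), n_params(grouped))  # 50192 12560


def channel_shuffle(x, groups):
    # (B, g, C/g, H, W) -> swap the group axis and the per-group axis -> flatten
    B, C, H, W = x.size()
    out = x.view(B, groups, C // groups, H, W).permute(0, 2, 1, 3, 4).contiguous()
    return out.view(B, C, H, W)


# Label 8 channels with their index and shuffle them with 2 groups
x = torch.arange(8.0).view(1, 8, 1, 1)
shuffled = channel_shuffle(x, 2).flatten().tolist()
print(shuffled)  # [0.0, 4.0, 1.0, 5.0, 2.0, 6.0, 3.0, 7.0]
```

With `groups=rate` each filter only sees `c1 / rate` input channels, so the weight count drops by a factor of `rate`; the shuffle then interleaves channels from different groups so information can cross group boundaries.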

```python
import torch
from torch import nn


def channel_shuffle(x, groups=2):
    # Interleave channels across groups: (B, g, C/g, H, W) -> (B, C/g, g, H, W) -> (B, C, H, W)
    B, C, H, W = x.size()
    out = x.view(B, groups, C // groups, H, W).permute(0, 2, 1, 3, 4).contiguous()
    return out.view(B, C, H, W)


class GAMAttention(nn.Module):
    # https://paperswithcode.com/paper/global-attention-mechanism-retain-information
    # c2 should equal c1, because the output multiplies x element-wise.
    # With group=True, c1, c1 // rate and c2 must all be divisible by rate.
    def __init__(self, c1, c2, group=True, rate=4):
        super().__init__()
        self.group = group
        self.rate = rate

        # Channel attention: two-layer MLP applied to the channel vector of
        # every pixel, so H and W are retained (no pooling)
        self.channel_attention = nn.Sequential(
            nn.Linear(c1, c1 // rate),
            nn.ReLU(inplace=True),
            nn.Linear(c1 // rate, c1),
        )

        # Spatial attention: two 7x7 convolutions over all channels, so C is
        # retained; optional group convolution reduces the parameter count
        groups = rate if group else 1
        self.spatial_attention = nn.Sequential(
            nn.Conv2d(c1, c1 // rate, kernel_size=7, padding=3, groups=groups),
            nn.BatchNorm2d(c1 // rate),
            nn.ReLU(inplace=True),
            nn.Conv2d(c1 // rate, c2, kernel_size=7, padding=3, groups=groups),
            nn.BatchNorm2d(c2),
        )

    def forward(self, x):
        b, c, h, w = x.shape
        # (B, C, H, W) -> (B, H*W, C): the MLP acts on each pixel's channel vector
        x_permute = x.permute(0, 2, 3, 1).reshape(b, -1, c)
        x_att_permute = self.channel_attention(x_permute).view(b, h, w, c)
        x_channel_att = x_att_permute.permute(0, 3, 1, 2)  # back to (B, C, H, W)
        x = x * x_channel_att  # no sigmoid on the channel attention

        x_spatial_att = self.spatial_attention(x).sigmoid()
        if self.group:
            # last shuffle: mix channels coming from different conv groups
            x_spatial_att = channel_shuffle(x_spatial_att, self.rate)
        return x * x_spatial_att  # no residual connection
```

Note that in this code the channel attention applies no sigmoid, and GAM as a whole has no residual connection.
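To make the missing sigmoid concrete, a minimal sketch assuming a randomly initialized MLP shaped like `channel_attention` (C = 64, rate 4) and a random input already permuted to (B, H, W, C). Without a sigmoid the "attention" is an unbounded per-element multiplier that can be negative or exceed 1; with a sigmoid it becomes a gate in (0, 1).

```python
import torch
from torch import nn

torch.manual_seed(0)
c, rate = 64, 4  # assumed toy sizes
mlp = nn.Sequential(nn.Linear(c, c // rate), nn.ReLU(inplace=True), nn.Linear(c // rate, c))
x = torch.randn(2, 8, 8, c)  # (B, H, W, C), as after the permute in forward()

raw = mlp(x)                # multiplier used by the code above (no sigmoid)
gated = torch.sigmoid(raw)  # multiplier a sigmoid-gated variant would use

print("raw range:", raw.min().item(), raw.max().item())
print("gated range:", gated.min().item(), gated.max().item())
```

Whether the unbounded version helps or hurts is an empirical question; the sketch only shows the difference in range.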

5 Experiments

5.1 Datasets and Metrics

- CIFAR-100

- ImageNet-1K

Metrics: top-1 and top-5 error
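Top-k error is the fraction of samples whose true label is not among the k highest-scoring classes. A minimal PyTorch sketch; `topk_error` is a hypothetical helper and the logits are made-up toy values:

```python
import torch


def topk_error(logits, targets, k):
    # Indices of the k highest-scoring classes for each sample: (N, k)
    topk = logits.topk(k, dim=1).indices
    # A sample is a hit if its true label appears among those k indices
    hit = (topk == targets.unsqueeze(1)).any(dim=1)
    return 1.0 - hit.float().mean().item()


logits = torch.tensor([
    [0.1, 0.7, 0.2],  # ranking: 1, 2, 0
    [0.5, 0.3, 0.2],  # ranking: 0, 1, 2
    [0.2, 0.3, 0.5],  # ranking: 2, 1, 0
    [0.6, 0.1, 0.3],  # ranking: 0, 2, 1
])
targets = torch.tensor([1, 2, 1, 1])
print(topk_error(logits, targets, 1), topk_error(logits, targets, 2))  # 0.75 0.5
```

With ImageNet's 1000 classes the same function is called with k = 1 and k = 5.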

5.2 Classification on CIFAR-100 and ImageNet datasets

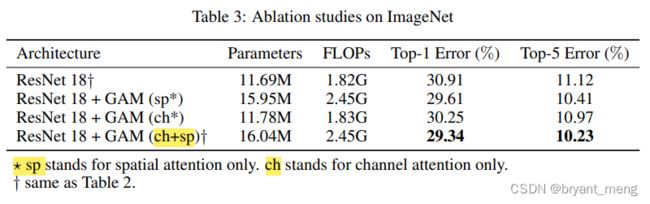

5.3 Ablation studies

The ablation studies the channel attention and spatial attention submodules separately.

Using both together works best.

The authors' view: it is possible for max-pooling to contribute negatively in spatial attention, depending on the neural architecture.

6 Conclusion (own)

GAM enhances channel-spatial interactions: the channel attention retains the spatial dimensions, and the spatial attention retains the channel dimension.