PyTorch Self-Study Notes & Some Errors I Ran Into

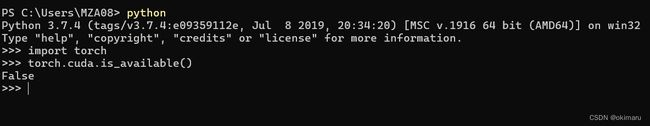

Why is it False?

① Check whether the GPU supports CUDA.

It does.
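The hardware check only rules out one cause. Below is a minimal diagnostic sketch (the helper name `cuda_report` is hypothetical). If `built_with_cuda` prints `None`, the installed PyTorch is a CPU-only build. In that case `torch.cuda.is_available()` returns `False` even on a CUDA-capable GPU.

```python
import torch


def cuda_report():
    """Hypothetical helper: gather facts that explain is_available() == False."""
    return {
        "torch_version": torch.__version__,
        # None for a CPU-only build of PyTorch
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        # 0 when no usable GPU/driver is visible to PyTorch
        "device_count": torch.cuda.device_count(),
    }


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```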

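Understanding the package structure and the two handy built-in functions

PyTorch is like a toolbox:

dir(): "opens" the box so you can see what is inside ----> dir(torch)

help(): the instruction manual for a tool ----> help(torch.cuda.is_available) (pass the function itself, without the trailing ())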
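A short sketch of both functions on `torch.cuda`. Filtering out the dunder names is only a convenience and is not required.

```python
import torch

# dir() lists the names inside a package/module; skip the __dunder__ entries
public_names = [name for name in dir(torch.cuda) if not name.startswith("__")]
print("is_available" in public_names)  # True

# help() prints the docstring; pass the function object, not a call to it
help(torch.cuda.is_available)
```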

`from torch.utils.data import Dataset`

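How does PyTorch load data?

① Dataset

Provides a way to get the data and their labels, namely:

how to get each sample and its label (`__getitem__`)

how many samples there are in total (`__len__`)

② DataLoader

Packs the data into the form the network needs (for example, batches)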
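The two Dataset duties above correspond to `__getitem__` and `__len__`. Here is a minimal sketch with a hypothetical in-memory `PairDataset`. A real dataset would usually read files from disk inside `__getitem__`; this one builds tensors in memory so that it runs without any files.

```python
import torch
from torch.utils.data import Dataset


class PairDataset(Dataset):
    """Hypothetical in-memory dataset: each item is (sample, label)."""

    def __init__(self, samples, labels):
        assert len(samples) == len(labels), "need exactly one label per sample"
        self.samples = samples
        self.labels = labels

    def __getitem__(self, idx):
        # "how to get each sample and its label"
        return self.samples[idx], self.labels[idx]

    def __len__(self):
        # "how many samples there are in total"
        return len(self.samples)


samples = [torch.full((3, 4, 4), float(i)) for i in range(5)]  # fake 3x4x4 images
labels = [i % 2 for i in range(5)]                             # fake labels 0/1
dataset = PairDataset(samples, labels)

img, label = dataset[3]  # calls __getitem__(3)
print(len(dataset), img.shape, label)  # 5 torch.Size([3, 4, 4]) 1
```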

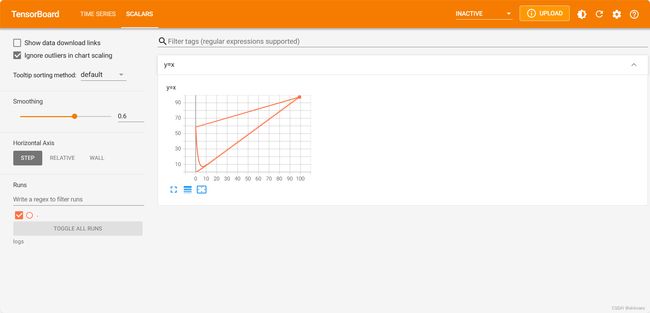

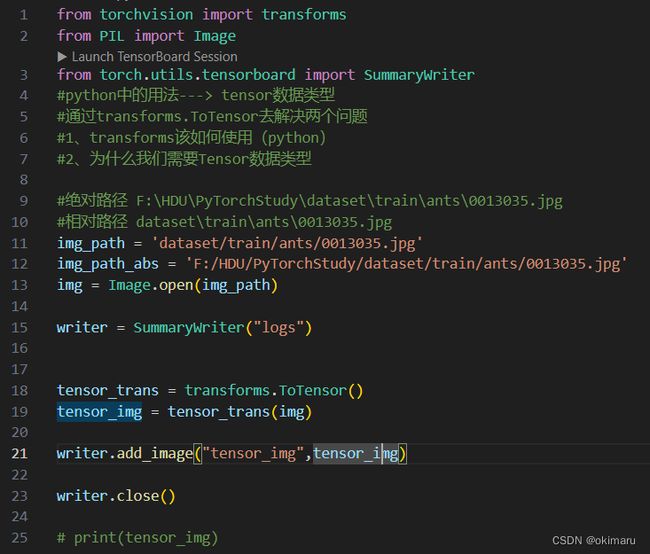

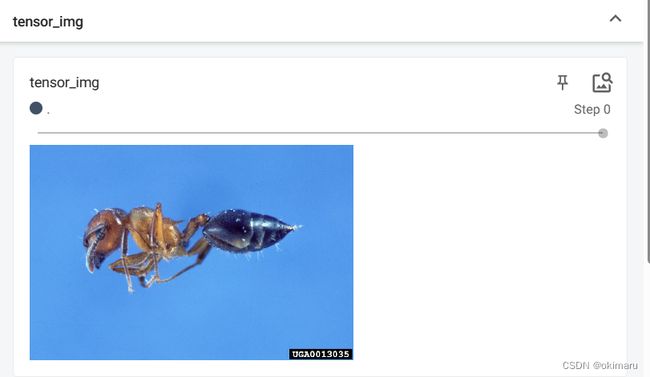

Using TensorBoard

In `SummaryWriter.add_scalar(tag, scalar_value, global_step)`:

`global_step`: x-axis

`scalar_value`: y-axis
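A minimal sketch that plots y = 2x. It assumes the `tensorboard` package is installed, since `torch.utils.tensorboard` needs it. The tag string `"y=2x"` is an arbitrary label. The event files land in `./logs`, which is the directory passed to `--logdir` in the commands that follow.

```python
import os

from torch.utils.tensorboard import SummaryWriter

LOG_DIR = "logs"
writer = SummaryWriter(LOG_DIR)  # creates ./logs and an event file inside it

for i in range(100):
    # add_scalar(tag, scalar_value, global_step): y-value 2*i at x-position i
    writer.add_scalar("y=2x", 2 * i, i)

writer.close()  # flush everything to disk
print(sorted(os.listdir(LOG_DIR)))  # the event file(s) TensorBoard will read
```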

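How to view the files in logs: run TensorBoard from the project directory

```
PS F:\HDU\PyTorchStudy> tensorboard --logdir=logs
```

Specify a port (the default is 6006):

```
tensorboard --logdir=logs --port=6007
```

Result: the plots are displayed in the browser at the URL TensorBoard prints.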

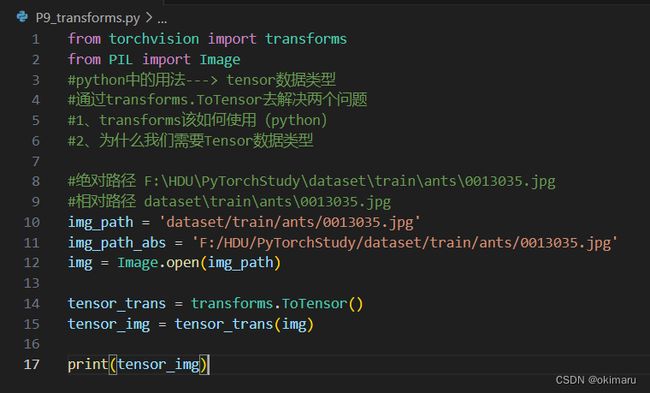

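Image transforms: using transforms

Use numpy.array() to convert a PIL image into an ndarray

transforms in torchvision: apply a transformation to an image

transforms.py is the toolbox

ToTensor / Resize / ...

image ---> tool ---> result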

Result:

```
tensor([[[0.3137, 0.3137, 0.3137, ..., 0.3176, 0.3098, 0.2980],
         [0.3176, 0.3176, 0.3176, ..., 0.3176, 0.3098, 0.2980],
         [0.3216, 0.3216, 0.3216, ..., 0.3137, 0.3098, 0.3020],
         ...,
         [0.3412, 0.3412, 0.3373, ..., 0.1725, 0.3725, 0.3529],
         [0.3412, 0.3412, 0.3373, ..., 0.3294, 0.3529, 0.3294],
         [0.3412, 0.3412, 0.3373, ..., 0.3098, 0.3059, 0.3294]],

        [[0.5922, 0.5922, 0.5922, ..., 0.5961, 0.5882, 0.5765],
         [0.5961, 0.5961, 0.5961, ..., 0.5961, 0.5882, 0.5765],
         [0.6000, 0.6000, 0.6000, ..., 0.5922, 0.5882, 0.5804],
         ...,
         [0.6275, 0.6275, 0.6235, ..., 0.3608, 0.6196, 0.6157],
         [0.6275, 0.6275, 0.6235, ..., 0.5765, 0.6275, 0.5961],
         [0.6275, 0.6275, 0.6235, ..., 0.6275, 0.6235, 0.6314]],

        [[0.9137, 0.9137, 0.9137, ..., 0.9176, 0.9098, 0.8980],
         [0.9176, 0.9176, 0.9176, ..., 0.9176, 0.9098, 0.8980],
         [0.9216, 0.9216, 0.9216, ..., 0.9137, 0.9098, 0.9020],
         ...,
         [0.9294, 0.9294, 0.9255, ..., 0.5529, 0.9216, 0.8941],
         [0.9294, 0.9294, 0.9255, ..., 0.8863, 1.0000, 0.9137],
         [0.9294, 0.9294, 0.9255, ..., 0.9490, 0.9804, 0.9137]]])
```

Common transforms

| Focus | Image type | Obtained with |
| --- | --- | --- |
| Input | PIL Image | `Image.open()` |
| Output | tensor | `ToTensor()` |
| Purpose | ndarray | `cv2.imread()` |

Using `__call__`

Output:

```
__call__Hello zhangsan
Hellolisi
```
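A hypothetical `Greeter` class that reproduces the two output lines above. It is a sketch, not the code from the screenshot. Defining `__call__` lets you call the instance like a function, while an ordinary method has to be called by name. This is why `tensor_trans(img)` works. Transform classes such as `ToTensor` and `Compose` define `__call__` directly, and transforms that subclass `torch.nn.Module` are called through `nn.Module.__call__`.

```python
class Greeter:
    """Hypothetical class: __call__ lets an instance be used like a function."""

    def __call__(self, name):
        return "__call__" + "Hello " + name

    def hello(self, name):
        return "Hello" + name


greeter = Greeter()
print(greeter("zhangsan"))    # __call__Hello zhangsan  (same as greeter.__call__("zhangsan"))
print(greeter.hello("lisi"))  # Hellolisi  (an ordinary method is called by name)
```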

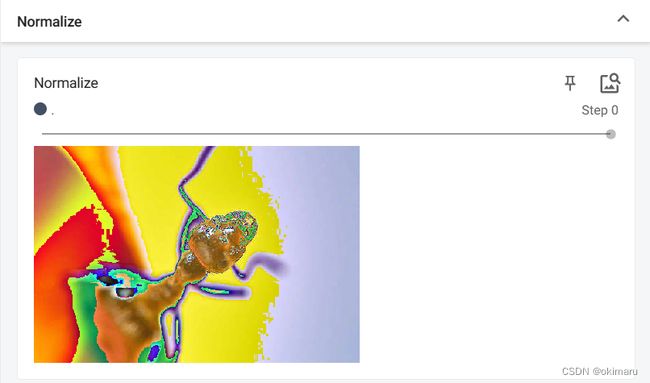

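Normalization: Normalize

`output[channel] = (input[channel] - mean[channel]) / std[channel]`

Printed values, apparently one pixel before and after Normalize (consistent with mean = std = 0.5, which maps 0.8863 to roughly 0.7725):

```
tensor(0.8863)
tensor(0.7725)
```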
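A plain-Python sketch of the per-channel formula, with no torchvision involved. The helper name `normalize` is hypothetical. The sketch assumes mean = std = 0.5, which maps the [0, 1] range onto [-1, 1]. In torchvision, `transforms.Normalize(mean, std)` applies the same formula to a (C, H, W) tensor, so it goes after `ToTensor()` and not on a PIL image.

```python
def normalize(image, mean, std):
    """output[c] = (input[c] - mean[c]) / std[c] for every value in channel c.

    image: nested lists shaped [C][H][W] with values already in [0, 1].
    """
    return [
        [[(v - m) / s for v in row] for row in channel]
        for channel, m, s in zip(image, mean, std)
    ]


pixel = 226 / 255  # 0.8863 when rounded to 4 decimals
out = normalize([[[pixel]]], mean=[0.5], std=[0.5])
print(round(pixel, 4), round(out[0][0][0], 4))  # 0.8863 0.7725

# With mean = std = 0.5 the endpoints 0 and 1 become -1 and 1
print(normalize([[[0.0, 1.0]]], mean=[0.5], std=[0.5]))  # [[[-1.0, 1.0]]]
```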

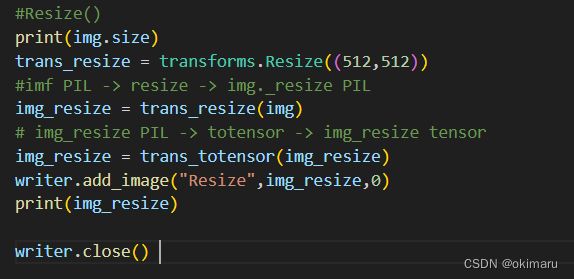

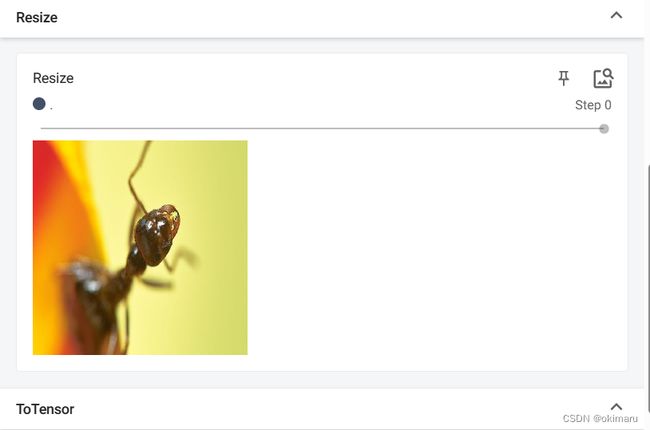

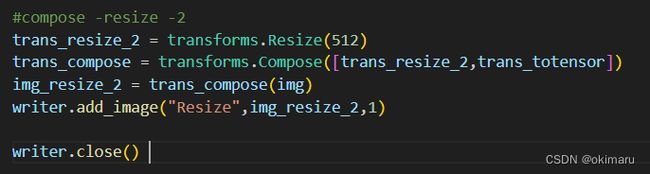

Using Resize()

Printed size: (500, 333). A PIL image's .size is (width, height), while Resize takes a size sequence as (height, width).

Output:

```
tensor([[[0.8863, 0.8824, 0.8745, ..., 0.8392, 0.8392, 0.8392],
         [0.8824, 0.8784, 0.8706, ..., 0.8392, 0.8392, 0.8392],
         [0.8784, 0.8745, 0.8667, ..., 0.8353, 0.8353, 0.8353],
         ...,
         [1.0000, 1.0000, 1.0000, ..., 0.8314, 0.8314, 0.8314],
         [1.0000, 1.0000, 1.0000, ..., 0.8314, 0.8314, 0.8314],
         [1.0000, 1.0000, 1.0000, ..., 0.8314, 0.8314, 0.8314]],

        [[0.1451, 0.1412, 0.1333, ..., 0.8471, 0.8471, 0.8471],
         [0.1451, 0.1412, 0.1333, ..., 0.8471, 0.8471, 0.8471],
         [0.1490, 0.1451, 0.1373, ..., 0.8431, 0.8431, 0.8431],
         ...,
         [0.8275, 0.8275, 0.8275, ..., 0.8549, 0.8549, 0.8549],
         [0.8275, 0.8275, 0.8275, ..., 0.8549, 0.8549, 0.8549],
         [0.8275, 0.8275, 0.8275, ..., 0.8549, 0.8549, 0.8549]],

        [[0.1686, 0.1647, 0.1569, ..., 0.4275, 0.4275, 0.4275],
         [0.1686, 0.1647, 0.1569, ..., 0.4275, 0.4275, 0.4275],
         [0.1686, 0.1647, 0.1569, ..., 0.4235, 0.4235, 0.4235],
         ...,
         [0.0078, 0.0078, 0.0078, ..., 0.4235, 0.4235, 0.4235],
         [0.0078, 0.0078, 0.0078, ..., 0.4235, 0.4235, 0.4235],
         [0.0078, 0.0078, 0.0078, ..., 0.4235, 0.4235, 0.4235]]])
```

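Using Compose()

Its argument must be a list of transform objects: Compose([transform1, transform2, ...]). The transforms are applied in order, and the output of each one becomes the input of the next.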

RandomCrop

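* Pay attention to input and output types.

* Read the official documentation often.

* Check which parameters a method needs.

* When you don't know what something returns:

    * print(type(...))

    * use the debugger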

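Using DataLoader

Example: with batch_size=4 (and the default shuffle=False), DataLoader fetches four samples and packs them into one batch:

```
DataLoader(dataset, batch_size=4)

img0, target0 = dataset[0]
img1, target1 = dataset[1]
img2, target2 = dataset[2]
img3, target3 = dataset[3]

# each dataset[i] goes through Dataset.__getitem__(), which ends with:
#     return img, target
# the four imgs are then stacked into imgs, and the four targets into targets
```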
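A runnable version of the batching above, using torch only. `ToyDataset` is hypothetical and has 10 fake 3x32x32 images with integer targets. With 10 samples and `batch_size=4`, the loader yields batches of 4, 4 and 2. `drop_last=True` would discard the final short batch.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyDataset(Dataset):
    """Hypothetical dataset of 10 fake 3x32x32 images with integer targets."""

    def __len__(self):
        return 10

    def __getitem__(self, idx):
        img = torch.full((3, 32, 32), float(idx))  # fake image: every pixel equals idx
        target = idx % 3                            # fake class label
        return img, target


loader = DataLoader(ToyDataset(), batch_size=4, shuffle=False, drop_last=False)

for imgs, targets in loader:
    # four __getitem__ results are stacked: imgs is [4, 3, 32, 32], targets is [4]
    print(imgs.shape, targets)
```

With `shuffle=False` the first batch holds samples 0 to 3, so its targets are `tensor([0, 1, 2, 0])`. With `shuffle=True` the order is reshuffled every epoch.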

Building a neural network: nn.Module