Author: Liuhaoao. Original source: https://tidb.net/blog/170d6d47

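We recently planned a DR Auto-Sync (dr-autosync) cluster for a production system. I had no prior production experience with this kind of deployment, so I worked it out step by step. The cluster was built successfully in the end, and I am sharing the process here for reference.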

1. Cluster architecture

2. Topology planning

Plan the topology file according to the cluster architecture:

global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb/tidb-deploy"
  data_dir: "/tidb/tidb-data"
  arch: "arm64"
monitored:
  node_exporter_port: 19100
  blackbox_exporter_port: 19115
server_configs:
  tidb:
  tikv:
  pd:
    dashboard.enable-telemetry: false
    log.file.max-backups: 100
    log.file.max-days: 90
    replication.isolation-level: logic
    replication.location-labels:
      - dc
      - logic
      - rack
      - host
    replication.max-replicas: 5
    schedule.max-store-down-time: 30m
pd_servers:
  - host: 10.3.65.1
    client_port: 12379
    peer_port: 12380
  - host: 10.3.65.2
    client_port: 12379
    peer_port: 12380
  - host: 10.3.65.3
    client_port: 12379
    peer_port: 12380
  - host: 10.3.65.1
    client_port: 12379
    peer_port: 12380
  - host: 10.3.65.2
    client_port: 12379
    peer_port: 12380
tidb_servers:
  - host: 10.3.65.1
    port: 24000
    status_port: 20080
  - host: 10.3.65.2
    port: 24000
    status_port: 20080
  - host: 10.3.65.3
    port: 24000
    status_port: 20080
  - host: 10.3.65.1
    port: 24000
    status_port: 20080
  - host: 10.3.65.2
    port: 24000
    status_port: 20080
  - host: 10.3.65.3
    port: 24000
    status_port: 20080
tikv_servers:
  - host: 10.3.65.1
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc1
        logic: logic1
        rack: rack1
        host: host1
  - host: 10.3.65.2
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc1
        logic: logic2
        rack: rack1
        host: host1
  - host: 10.3.65.3
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc1
        logic: logic3
        rack: rack1
        host: host1
  - host: 10.3.65.1
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc2
        logic: logic4
        rack: rack1
        host: host1
  - host: 10.3.65.2
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc2
        logic: logic5
        rack: rack1
        host: host1
  - host: 10.3.65.3
    port: 20160
    status_port: 20180
    config:
      server.labels:
        dc: dc2
        logic: logic6
        rack: rack1
        host: host1
monitoring_servers:
  - host: 10.3.65.3
    port: 29090
    ng_port: 22020
grafana_servers:
  - host: 10.3.65.3
    port: 23000
alertmanager_servers:
  - host: 10.3.65.3
    web_port: 29093
    cluster_port: 29094
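PD uses the TiKV labels to tell the two data centers apart, so it can be worth checking the labels before deploying. The following is only a minimal sketch: `TIKV_LABELS` is copied by hand from the `server.labels` entries in the topology, and `missing_labels` and `stores_per_label` are hypothetical helpers written for this illustration, not part of TiUP or PD.

```python
from collections import Counter

# Hand-copied from the server.labels entries of the six TiKV nodes.
TIKV_LABELS = [
    {"dc": "dc1", "logic": "logic1", "rack": "rack1", "host": "host1"},
    {"dc": "dc1", "logic": "logic2", "rack": "rack1", "host": "host1"},
    {"dc": "dc1", "logic": "logic3", "rack": "rack1", "host": "host1"},
    {"dc": "dc2", "logic": "logic4", "rack": "rack1", "host": "host1"},
    {"dc": "dc2", "logic": "logic5", "rack": "rack1", "host": "host1"},
    {"dc": "dc2", "logic": "logic6", "rack": "rack1", "host": "host1"},
]

# Must match replication.location-labels in the pd section of the topology.
LOCATION_LABELS = ["dc", "logic", "rack", "host"]


def missing_labels(stores, keys):
    """Return (store index, missing key) pairs for stores lacking a label."""
    return [(i, k) for i, s in enumerate(stores) for k in keys if k not in s]


def stores_per_label(stores, key):
    """Count how many stores carry each value of the given label key."""
    return Counter(s[key] for s in stores)


if __name__ == "__main__":
    print("missing:", missing_labels(TIKV_LABELS, LOCATION_LABELS))
    print("per dc:", dict(stores_per_label(TIKV_LABELS, "dc")))
    print("per logic:", dict(stores_per_label(TIKV_LABELS, "logic")))
```

With the labels above, each data center holds three stores and every store has its own `logic` value, which is the level named by `replication.isolation-level`.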

3. Cluster deployment

1) Deploy the cluster

tiup cluster deploy dr-auto-sync v6.5.4 dr-auto-sync.yaml --user tidb -p

2) Write the placement rule JSON file for the dr-auto-sync cluster

vim rule.json

[
  {
    "group_id": "pd",
    "group_index": 0,
    "group_override": false,
    "rules": [
      {
        "group_id": "pd",
        "id": "dc1",
        "start_key": "",
        "end_key": "",
        "role": "voter",
        "count": 3,
        "location_labels": ["dc", "logic", "rack", "host"],
        "label_constraints": [{"key": "dc", "op": "in", "values": ["dc1"]}]
      },
      {
        "group_id": "pd",
        "id": "dc2",
        "start_key": "",
        "end_key": "",
        "role": "follower",
        "count": 2,
        "location_labels": ["dc", "logic", "rack", "host"],
        "label_constraints": [{"key": "dc", "op": "in", "values": ["dc2"]}]
      },
      {
        "group_id": "pd",
        "id": "dc2-1",
        "start_key": "",
        "end_key": "",
        "role": "learner",
        "count": 1,
        "location_labels": ["dc", "logic", "rack", "host"],
        "label_constraints": [{"key": "dc", "op": "in", "values": ["dc2"]}]
      }
    ]
  }
]
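To see at a glance what this rule bundle asks PD to do, the replica counts can be tallied per data center and role. The sketch below is illustrative only: `RULE_JSON` is a trimmed copy of rule.json that keeps just the fields the check reads, and `replicas_by_dc` and `voting_replicas` are hypothetical helper names, not PD APIs. It assumes each rule pins itself to exactly one `dc` value, as the three rules here do.

```python
import json

# Trimmed copy of rule.json: only the fields this check reads are kept.
RULE_JSON = """
[{"group_id": "pd", "group_index": 0, "group_override": false, "rules": [
  {"id": "dc1", "role": "voter", "count": 3,
   "label_constraints": [{"key": "dc", "op": "in", "values": ["dc1"]}]},
  {"id": "dc2", "role": "follower", "count": 2,
   "label_constraints": [{"key": "dc", "op": "in", "values": ["dc2"]}]},
  {"id": "dc2-1", "role": "learner", "count": 1,
   "label_constraints": [{"key": "dc", "op": "in", "values": ["dc2"]}]}
]}]
"""


def replicas_by_dc(bundles, label_key="dc"):
    """Return {dc: {role: count}} for all rules in all bundles.

    Assumes every rule uses an "in" constraint with a single value for
    label_key; only the first value of each constraint is considered.
    """
    summary = {}
    for bundle in bundles:
        for rule in bundle["rules"]:
            for constraint in rule["label_constraints"]:
                if constraint["key"] == label_key and constraint["op"] == "in":
                    dc = constraint["values"][0]
                    roles = summary.setdefault(dc, {})
                    roles[rule["role"]] = roles.get(rule["role"], 0) + rule["count"]
    return summary


def voting_replicas(summary):
    """Sum the replicas that vote in Raft, i.e. every role except learner."""
    return sum(
        count
        for roles in summary.values()
        for role, count in roles.items()
        if role != "learner"
    )


if __name__ == "__main__":
    summary = replicas_by_dc(json.loads(RULE_JSON))
    print(summary)
    print("voting replicas:", voting_replicas(summary))
```

For this file the tally is three voters in dc1 plus two followers and one learner in dc2, so five voting replicas in total, the same number as `replication.max-replicas: 5` in the topology.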

3) Apply the placement rule JSON file so that it takes effect

[tidb@tidb141 ~]$ tiup ctl:v6.5.4 pd -u 10.3.65.141:22379 -i

» config placement-rules rule-bundle save --in="/home/tidb/rule.json"

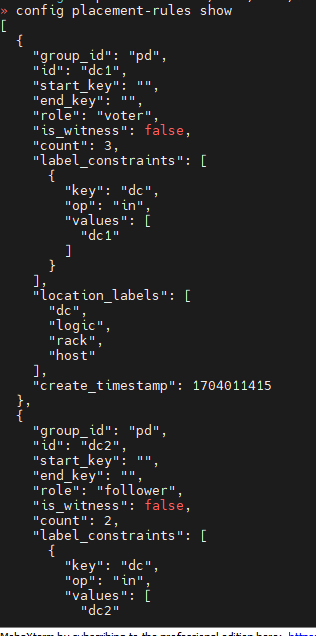

Check that the configuration has taken effect:

» config placement-rules show

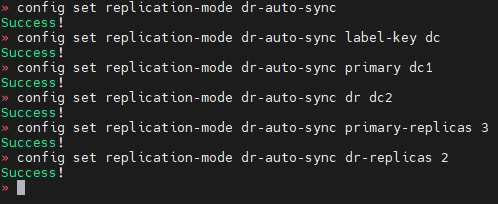

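4) Switch the replication mode to dr-auto-sync

config set replication-mode dr-auto-sync

5) Configure the data center label key for dr-auto-sync

config set replication-mode dr-auto-sync label-key dc

6) Configure the primary data center

config set replication-mode dr-auto-sync primary dc1

7) Configure the DR data center

config set replication-mode dr-auto-sync dr dc2

8) Configure the number of replicas in the primary data center

config set replication-mode dr-auto-sync primary-replicas 3

9) Configure the number of replicas in the DR data center

config set replication-mode dr-auto-sync dr-replicas 2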

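10) If the dr-auto-sync cluster is deployed across data centers, the PD leader should always stay in the primary data center. To achieve this, give the PD members in the primary data center a higher priority than those in the DR data center. The larger the value, the higher the priority and the more likely the member is to become PD leader.

tiup ctl:v6.5.3 pd -u 192.168.113.1:12379 -i

member leader_priority pd-192.168.113.1-12379 100

member leader_priority pd-192.168.113.2-12379 100

member leader_priority pd-192.168.113.3-12379 100

member leader_priority pd-192.168.113.4-12379 50

member leader_priority pd-192.168.113.5-12379 50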
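With more PD members, typing these lines by hand gets error-prone, so they could be generated from a small mapping instead. This is a sketch under stated assumptions: `leader_priority_commands` is a hypothetical helper, and the grouping of the five members into dc1 and dc2 is inferred from the priorities above (100 for the first three, 50 for the last two). It only builds the pd-ctl command strings and does not run them.

```python
def leader_priority_commands(members_by_dc, primary_dc,
                             primary_priority=100, dr_priority=50):
    """Build one `member leader_priority` pd-ctl command per PD member.

    Members in primary_dc get primary_priority; all others get dr_priority.
    """
    commands = []
    for dc, members in members_by_dc.items():
        priority = primary_priority if dc == primary_dc else dr_priority
        for name in members:
            commands.append(f"member leader_priority {name} {priority}")
    return commands


# Assumed grouping, inferred from the priorities used in step 10.
PD_MEMBERS = {
    "dc1": [
        "pd-192.168.113.1-12379",
        "pd-192.168.113.2-12379",
        "pd-192.168.113.3-12379",
    ],
    "dc2": [
        "pd-192.168.113.4-12379",
        "pd-192.168.113.5-12379",
    ],
}

if __name__ == "__main__":
    for command in leader_priority_commands(PD_MEMBERS, primary_dc="dc1"):
        print(command)
```

The printed lines can then be pasted into the interactive pd-ctl session opened with `-i`.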

11) Check the cluster replication status

[tidb@tidb141 ~]$ curl http://10.3.65.141:22379/pd/api/v1/replication_mode/status
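The same check can be scripted. The sketch below assumes the endpoint returns JSON of the form `{"mode": "dr-auto-sync", "dr-auto-sync": {"label-key": "dc", "state": "sync"}}`; that shape is an assumption here and should be compared against the actual curl output before relying on it. `fetch_status` and `sync_state` are hypothetical helper names.

```python
import json
import urllib.request


def fetch_status(pd_addr, timeout=5.0):
    """GET the replication status from PD and return the parsed JSON."""
    url = f"http://{pd_addr}/pd/api/v1/replication_mode/status"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def sync_state(status):
    """Return the dr-auto-sync state string, or None if it is not present.

    Assumes the response layout described in the text above.
    """
    if status.get("mode") != "dr-auto-sync":
        return None
    return status.get("dr-auto-sync", {}).get("state")


if __name__ == "__main__":
    # PD address taken from the curl command in step 11.
    print(sync_state(fetch_status("10.3.65.141:22379")))
```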

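4. Testing

1) Manually stop the TiKV nodes in the DR data center, wait about one minute, and check whether the replication state is automatically downgraded to async

[tidb@tidb141 ~]$ tiup cluster stop dr-auto-sync -N 10.3.65.142:10160,10.3.65.142:40160,10.3.65.142:50160

The replication state is automatically downgraded to async (asynchronous).

2) Start the stopped TiKV nodes again, wait about one minute, and check whether the replication state is automatically upgraded back to sync

[tidb@tidb141 ~]$ tiup cluster start dr-auto-sync -N 10.3.65.142:10160,10.3.65.142:40160,10.3.65.142:50160

The replication state is automatically upgraded to sync, as expected.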
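Rather than waiting a minute and checking by hand, both tests could poll until the expected state shows up. The following is a minimal sketch, not a definitive tool: `wait_for_state` is a hypothetical helper, and `get_state` is any caller-supplied function that returns the current state string, for example one that wraps the status query from step 11. The timeout and interval defaults are arbitrary choices.

```python
import time


def wait_for_state(get_state, target, timeout_s=180.0, interval_s=5.0,
                   sleep=time.sleep, clock=time.monotonic):
    """Poll get_state() until it returns target or timeout_s has elapsed.

    Returns True if the target state was observed, False on timeout.
    sleep and clock are injectable so the loop can be tested without waiting.
    """
    deadline = clock() + timeout_s
    while True:
        if get_state() == target:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


if __name__ == "__main__":
    # Stand-in for a real status query: replays a fixed sequence of states.
    replay = iter(["sync", "sync", "async"])
    reached = wait_for_state(lambda: next(replay), "async",
                             timeout_s=10, interval_s=0)
    print("reached async:", reached)
```

After stopping the DR TiKV nodes the target would be `async`, and after starting them again it would be `sync`.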

At this point, the dr-auto-sync cluster has been deployed successfully.

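5. Summary

A dr-auto-sync cluster does not differ much from an ordinary cluster. As long as the cluster topology, labels, and JSON file are planned as required, there should be few problems, and it can be deployed like an ordinary cluster. A few points need attention, though:

1) For a cross-data-center deployment, configure PD leader priorities so that the PD leader does not move to the DR data center and degrade overall performance.

2) In version 6.5.4, which I used, dr-auto-sync has a bug: after the configuration is complete, the TiKV nodes must be reloaded to trigger a new Region leader election before the replication link is upgraded to the sync state. Otherwise it stays stuck in the sync_recover phase.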