[RT-DETR Effective Improvements] ShapeIoU and InnerShapeIoU: IoU Losses That Focus on the Bounding Box Itself (Including a Secondary Innovation)

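Preface

Hello everyone, I'm Snu77, and this is the RT-DETR Effective Gains column.

This column improves the ultralytics version of RT-DETR. The content is updated continuously, with 3 to 10 new articles each week.

The column uses ResNet18 and ResNet50 as its base versions, and the modifications also work with ResNet34, ResNet101 and PPHGNet. The ResNet backbones are ported 1:1 from the official RT-DETR release, so their parameter counts match the official ones almost exactly; they are not the ResNet found in the ultralytics repository. Some ultralytics parameters also conflict with RT-DETR, so I show how to set them correctly, so that running RT-DETR in ultralytics is genuinely equivalent to the official version.

You are welcome to subscribe to this column and learn RT-DETR together!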

I. Introduction

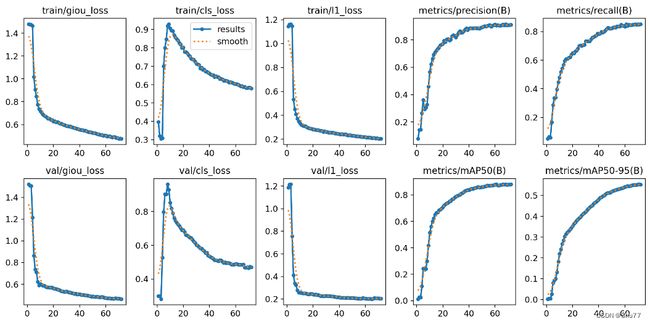

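The improvement in this article is ShapeIoU, a bounding-box regression loss from the IoU family that takes the shape and scale of the bounding box itself into account. The code in this article also implements a range of other IoU losses (GIoU, DIoU, CIoU, EIoU, SIoU, WIoU, MPDIoU and Inner-IoU), and switching between them is very simple. At the end of the article I also explain when to add a loss function while improving a model to get the best effect. The figure above shows the training results obtained with my tuned settings.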

Column link: RT-DETR paper-oriented column, continuously reproducing work from top conferences (Paper Harvester RT-DETR)

Contents

I. Introduction

II. ShapeIoU

III. Core Code of ShapeIoU

IV. How to Use ShapeIoU

4.1 Modification 1

4.2 Modification 2

V. Summary

II. ShapeIoU

Official code: official code repository link

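This figure compares bounding-box regression in object detection under different situations.

GT (Ground Truth): shown as the peach-colored box, it is the object's actual position and shape in the image. In object detection, the model tries to predict this box as accurately as possible.

Anchor: shown as the blue box, it is a predefined box, one of a set of boxes preset by the algorithm that are matched against the GT box to find the best candidate.

The figure shows four cases (A, B, C and D); each compares an anchor with the GT and reports their IoU (Intersection over Union). IoU is the standard metric for how much a predicted bounding box overlaps the ground-truth box: the area of their intersection divided by the area of their union.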
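To make the idea concrete, here is a small pure-Python sketch (not taken from the paper; the helper name `iou_cxcywh` and the box values are illustrative assumptions) showing that the same center offset can cost very different IoU depending on the GT box's shape. Shifting a box by 0.5 along the long side of a wide GT barely hurts, while the same shift across the short side of a tall GT cuts IoU to a third.

```python
def iou_cxcywh(a, b):
    """Plain IoU of two boxes given as (cx, cy, w, h) tuples (illustrative helper)."""
    # Convert centers and sizes to corner coordinates
    ax1, ax2 = a[0] - a[2] / 2, a[0] + a[2] / 2
    ay1, ay2 = a[1] - a[3] / 2, a[1] + a[3] / 2
    bx1, bx2 = b[0] - b[2] / 2, b[0] + b[2] / 2
    by1, by2 = b[1] - b[3] / 2, b[1] + b[3] / 2
    # Overlap along each axis, clamped at zero
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union


# Each anchor has the same shape as its GT and the same horizontal offset (0.5),
# but one GT is wide and the other is tall.
wide_gt, wide_anchor = (0, 0, 4, 1), (0.5, 0, 4, 1)
tall_gt, tall_anchor = (0, 0, 1, 4), (0.5, 0, 1, 4)

print(round(iou_cxcywh(wide_gt, wide_anchor), 3))  # 0.778
print(round(iou_cxcywh(tall_gt, tall_anchor), 3))  # 0.333
```

This shape sensitivity is what the `ww`/`hh` weights in the ShapeIoU branch of the Section III code are meant to account for: the offset along a box's short side is weighted more heavily.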

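The paper derives the method with a number of formulas that I don't fully follow myself; interested readers can consult the paper directly.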
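For reference, these are the quantities the ShapeIoU branch of the Section III code computes. The notation below is read off the code rather than copied from the paper, so it may differ from the paper's symbols: \(w, h\) and \(w^{gt}, h^{gt}\) are the predicted and GT box sizes, \((x_c, y_c)\) and \((x_c^{gt}, y_c^{gt})\) their centers, \(c\) the diagonal of the smallest box enclosing both, and \(scale\) the `scale` argument of `bbox_iou`.

$$
\begin{aligned}
ww &= \frac{2\,(w^{gt})^{scale}}{(w^{gt})^{scale} + (h^{gt})^{scale}}, \qquad
hh = \frac{2\,(h^{gt})^{scale}}{(w^{gt})^{scale} + (h^{gt})^{scale}} \\
\text{distance}^{shape} &= \frac{hh\,(x_c - x_c^{gt})^2 + ww\,(y_c - y_c^{gt})^2}{c^2} \\
\omega_w &= hh\,\frac{|w - w^{gt}|}{\max(w, w^{gt})}, \qquad
\omega_h = ww\,\frac{|h - h^{gt}|}{\max(h, h^{gt})} \\
\Omega^{shape} &= \sum_{t \in \{w, h\}} \left(1 - e^{-\omega_t}\right)^{4} \\
L_{Shape\text{-}IoU} &= 1 - IoU + \text{distance}^{shape} + 0.5\,\Omega^{shape}
\end{aligned}
$$

With \(scale = 0\), both weights equal 1, so the distance term becomes the DIoU-style normalized center distance and the shape term becomes the SIoU-style shape cost.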

III. Core Code of ShapeIoU

The original version of this code called `ops.xyxy2xywh` from an `ops` module that you had to import yourself. The version below does that xyxy-to-xywh conversion inline in the `Inner` branch, so it only needs `math` and `torch`. It also accepts `hw` for MPDIoU either as a plain number or as a per-box tensor.

```python
import math

import torch


class WIoU_Scale:
    """Running-mean state for Wise-IoU.

    monotonous:
        None:  origin v1
        True:  monotonic focusing mechanism (FM), v2
        False: non-monotonic FM, v3
    _momentum: the momentum of the running mean.
    """

    iou_mean = 1.
    monotonous = False
    _momentum = 1 - 0.5 ** (1 / 7000)
    _is_train = True

    def __init__(self, iou):
        self.iou = iou
        self._update(self)

    @classmethod
    def _update(cls, self):
        # Exponential moving average of the mean IoU loss, updated only while training
        if cls._is_train:
            cls.iou_mean = (1 - cls._momentum) * cls.iou_mean + \
                           cls._momentum * self.iou.detach().mean().item()

    @classmethod
    def _scaled_loss(cls, self, gamma=1.9, delta=3):
        if isinstance(self.monotonous, bool):
            if self.monotonous:
                return (self.iou.detach() / self.iou_mean).sqrt()
            else:
                beta = self.iou.detach() / self.iou_mean
                alpha = delta * torch.pow(gamma, beta - delta)
                return beta / alpha
        return 1


def bbox_iou(box1, box2, xywh=True, GIoU=False, DIoU=False, CIoU=False, EIoU=False, SIoU=False, WIoU=False,
             ShapeIoU=False, hw=1, mpdiou=False, Inner=False, alpha=1, ratio=0.7, eps=1e-7, scale=0.0):
    """
    Calculate Intersection over Union (IoU) of box1(1, 4) to box2(n, 4).

    Args:
        box1 (torch.Tensor): A tensor representing a single bounding box with shape (1, 4).
        box2 (torch.Tensor): A tensor representing n bounding boxes with shape (n, 4).
        xywh (bool, optional): If True, input boxes are in (x, y, w, h) format. If False, input boxes are in
                               (x1, y1, x2, y2) format. Defaults to True.
        GIoU (bool, optional): If True, calculate Generalized IoU. Defaults to False.
        DIoU (bool, optional): If True, calculate Distance IoU. Defaults to False.
        CIoU (bool, optional): If True, calculate Complete IoU. Defaults to False.
        EIoU (bool, optional): If True, calculate Efficient IoU. Defaults to False.
        SIoU (bool, optional): If True, calculate Scylla IoU. Defaults to False.
        WIoU (bool, optional): If True, calculate Wise IoU (see WIoU_Scale). Defaults to False.
        ShapeIoU (bool, optional): If True, calculate Shape IoU. Defaults to False.
        hw (float | torch.Tensor, optional): MPDIoU normalizer (squared image diagonal). Defaults to 1.
        mpdiou (bool, optional): If True, calculate MPDIoU. Defaults to False.
        Inner (bool, optional): If True, compute IoU on auxiliary boxes scaled by `ratio` (Inner-IoU).
                                Defaults to False.
        alpha (float, optional): Unused. Defaults to 1.
        ratio (float, optional): Scale factor of the Inner-IoU auxiliary boxes. Defaults to 0.7.
        eps (float, optional): A small value to avoid division by zero. Defaults to 1e-7.
        scale (float, optional): Exponent of the ShapeIoU shape weights; 0 gives equal weights. Defaults to 0.0.

    Returns:
        (torch.Tensor): IoU or the selected IoU variant.
    """
    if Inner:
        if xywh:
            (x1, y1, w1, h1), (x2, y2, w2, h2) = box1.chunk(4, -1), box2.chunk(4, -1)
        else:
            # Convert (x1, y1, x2, y2) to (cx, cy, w, h) inline instead of calling ops.xyxy2xywh
            (b1_x1, b1_y1, b1_x2, b1_y2), (b2_x1, b2_y1, b2_x2, b2_y2) = box1.chunk(4, -1), box2.chunk(4, -1)
            x1, y1, w1, h1 = (b1_x1 + b1_x2) / 2, (b1_y1 + b1_y2) / 2, b1_x2 - b1_x1, b1_y2 - b1_y1
            x2, y2, w2, h2 = (b2_x1 + b2_x2) / 2, (b2_y1 + b2_y2) / 2, b2_x2 - b2_x1, b2_y2 - b2_y1
        # Auxiliary (inner) boxes: same centers, width and height scaled by `ratio`
        b1_x1, b1_x2, b1_y1, b1_y2 = x1 - w1 * ratio / 2, x1 + w1 * ratio / 2, y1 - h1 * ratio / 2, y1 + h1 * ratio / 2
        b2_x1, b2_x2, b2_y1, b2_y2 = x2 - w2 * ratio / 2, x2 + w2 * ratio / 2, y2 - h2 * ratio / 2, y2 + h2 * ratio / 2
        # Intersection area
        inter = (b1_x2.minimum(b2_x2) - b1_x1.maximum(b2_x1)).clamp_(0) * \
                (b1_y2.minimum(b2_y2) - b1_y1.maximum(b2_y1)).clamp_(0)
        # Union area
        union = w1 * h1 * ratio * ratio + w2 * h2 * ratio * ratio - inter + eps
        iou = inter / union
    else:
        # Get the coordinates of bounding boxes
        if xywh:  # transform from xywh to xyxy
            (x1, y1, w1, h1), (x2, y2, w2, h2) = box1.chunk(4, -1), box2.chunk(4, -1)
            w1_, h1_, w2_, h2_ = w1 / 2, h1 / 2, w2 / 2, h2 / 2
            b1_x1, b1_x2, b1_y1, b1_y2 = x1 - w1_, x1 + w1_, y1 - h1_, y1 + h1_
            b2_x1, b2_x2, b2_y1, b2_y2 = x2 - w2_, x2 + w2_, y2 - h2_, y2 + h2_
        else:  # x1, y1, x2, y2 = box1
            b1_x1, b1_y1, b1_x2, b1_y2 = box1.chunk(4, -1)
            b2_x1, b2_y1, b2_x2, b2_y2 = box2.chunk(4, -1)
            w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1 + eps
            w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1 + eps
        # Intersection area
        inter = (b1_x2.minimum(b2_x2) - b1_x1.maximum(b2_x1)).clamp_(0) * \
                (b1_y2.minimum(b2_y2) - b1_y1.maximum(b2_y1)).clamp_(0)
        # Union area
        union = w1 * h1 + w2 * h2 - inter + eps
        # IoU
        iou = inter / union

    if CIoU or DIoU or GIoU or EIoU or SIoU or ShapeIoU or mpdiou or WIoU:
        cw = b1_x2.maximum(b2_x2) - b1_x1.minimum(b2_x1)  # convex (smallest enclosing box) width
        ch = b1_y2.maximum(b2_y2) - b1_y1.minimum(b2_y1)  # convex height
        if CIoU or DIoU or EIoU or SIoU or mpdiou or WIoU or ShapeIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
            c2 = cw ** 2 + ch ** 2 + eps  # convex diagonal squared
            rho2 = ((b2_x1 + b2_x2 - b1_x1 - b1_x2) ** 2 + (b2_y1 + b2_y2 - b1_y1 - b1_y2) ** 2) / 4  # center dist ** 2
            if CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
                v = (4 / math.pi ** 2) * (torch.atan(w2 / h2) - torch.atan(w1 / h1)).pow(2)
                with torch.no_grad():
                    alpha = v / (v - iou + (1 + eps))
                return iou - (rho2 / c2 + v * alpha)  # CIoU
            elif EIoU:
                rho_w2 = ((b2_x2 - b2_x1) - (b1_x2 - b1_x1)) ** 2
                rho_h2 = ((b2_y2 - b2_y1) - (b1_y2 - b1_y1)) ** 2
                cw2 = cw ** 2 + eps
                ch2 = ch ** 2 + eps
                return iou - (rho2 / c2 + rho_w2 / cw2 + rho_h2 / ch2)  # EIoU
            elif SIoU:
                # SIoU Loss https://arxiv.org/pdf/2205.12740.pdf
                s_cw = (b2_x1 + b2_x2 - b1_x1 - b1_x2) * 0.5 + eps
                s_ch = (b2_y1 + b2_y2 - b1_y1 - b1_y2) * 0.5 + eps
                sigma = torch.pow(s_cw ** 2 + s_ch ** 2, 0.5)
                sin_alpha_1 = torch.abs(s_cw) / sigma
                sin_alpha_2 = torch.abs(s_ch) / sigma
                threshold = pow(2, 0.5) / 2
                sin_alpha = torch.where(sin_alpha_1 > threshold, sin_alpha_2, sin_alpha_1)
                angle_cost = torch.cos(torch.arcsin(sin_alpha) * 2 - math.pi / 2)
                rho_x = (s_cw / cw) ** 2
                rho_y = (s_ch / ch) ** 2
                gamma = angle_cost - 2
                distance_cost = 2 - torch.exp(gamma * rho_x) - torch.exp(gamma * rho_y)
                omiga_w = torch.abs(w1 - w2) / torch.max(w1, w2)
                omiga_h = torch.abs(h1 - h2) / torch.max(h1, h2)
                shape_cost = torch.pow(1 - torch.exp(-1 * omiga_w), 4) + torch.pow(1 - torch.exp(-1 * omiga_h), 4)
                return iou - 0.5 * (distance_cost + shape_cost) + eps  # SIoU
            elif ShapeIoU:
                # Shape-Distance: weights come from the GT box (box2); scale=0 gives ww = hh = 1
                ww = 2 * torch.pow(w2, scale) / (torch.pow(w2, scale) + torch.pow(h2, scale))
                hh = 2 * torch.pow(h2, scale) / (torch.pow(w2, scale) + torch.pow(h2, scale))
                # cw, ch and c2 (enclosing box and its squared diagonal) were computed above
                center_distance_x = ((b2_x1 + b2_x2 - b1_x1 - b1_x2) ** 2) / 4
                center_distance_y = ((b2_y1 + b2_y2 - b1_y1 - b1_y2) ** 2) / 4
                center_distance = hh * center_distance_x + ww * center_distance_y
                distance = center_distance / c2
                # Shape-Shape: width/height mismatch, weighted the same way
                omiga_w = hh * torch.abs(w1 - w2) / torch.max(w1, w2)
                omiga_h = ww * torch.abs(h1 - h2) / torch.max(h1, h2)
                shape_cost = torch.pow(1 - torch.exp(-1 * omiga_w), 4) + torch.pow(1 - torch.exp(-1 * omiga_h), 4)
                return iou - distance - 0.5 * shape_cost  # ShapeIoU
            elif mpdiou:
                d1 = (b2_x1 - b1_x1) ** 2 + (b2_y1 - b1_y1) ** 2
                d2 = (b2_x2 - b1_x2) ** 2 + (b2_y2 - b1_y2) ** 2
                # hw may be a plain number or a per-box tensor of shape (n,)
                if isinstance(hw, torch.Tensor) and hw.dim() == 1:
                    hw = hw.unsqueeze(1)
                return iou - d1 / hw - d2 / hw  # MPDIoU
            elif WIoU:
                wiou = WIoU_Scale(1 - iou)
                dist = WIoU_Scale._scaled_loss(wiou)
                return iou * dist  # WIoU https://arxiv.org/abs/2301.10051
            return iou - rho2 / c2  # DIoU
        c_area = cw * ch + eps  # convex area
        return iou - (c_area - union) / c_area  # GIoU https://arxiv.org/pdf/1902.09630.pdf
    return iou  # IoU
```
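To check the ShapeIoU branch by hand, here is a scalar, pure-Python restatement of it. It is a sketch for illustration only: `shape_iou_loss` is a hypothetical helper, not part of ultralytics. Boxes are (cx, cy, w, h), the second argument is the ground truth (as `gt_bboxes` is the second argument in the RT-DETR loss), and the return value is the loss `1 - ShapeIoU`.

```python
import math


def shape_iou_loss(pred, gt, scale=0.0, eps=1e-7):
    """1 - ShapeIoU for one predicted and one GT box, both (cx, cy, w, h). Illustrative sketch."""
    (x1, y1, w1, h1), (x2, y2, w2, h2) = pred, gt
    # Corner coordinates of both boxes
    b1_x1, b1_x2, b1_y1, b1_y2 = x1 - w1 / 2, x1 + w1 / 2, y1 - h1 / 2, y1 + h1 / 2
    b2_x1, b2_x2, b2_y1, b2_y2 = x2 - w2 / 2, x2 + w2 / 2, y2 - h2 / 2, y2 + h2 / 2
    # Plain IoU
    inter = max(0.0, min(b1_x2, b2_x2) - max(b1_x1, b2_x1)) * \
            max(0.0, min(b1_y2, b2_y2) - max(b1_y1, b2_y1))
    iou = inter / (w1 * h1 + w2 * h2 - inter + eps)
    # Shape weights depend only on the GT box
    ww = 2 * w2 ** scale / (w2 ** scale + h2 ** scale)
    hh = 2 * h2 ** scale / (w2 ** scale + h2 ** scale)
    # Shape-weighted center distance, normalized by the enclosing-box diagonal
    cw = max(b1_x2, b2_x2) - min(b1_x1, b2_x1)
    ch = max(b1_y2, b2_y2) - min(b1_y1, b2_y1)
    c2 = cw ** 2 + ch ** 2 + eps
    distance = (hh * (x2 - x1) ** 2 + ww * (y2 - y1) ** 2) / c2
    # Shape cost on width/height mismatch
    omega_w = hh * abs(w1 - w2) / max(w1, w2)
    omega_h = ww * abs(h1 - h2) / max(h1, h2)
    shape_cost = (1 - math.exp(-omega_w)) ** 4 + (1 - math.exp(-omega_h)) ** 4
    return 1 - (iou - distance - 0.5 * shape_cost)


gt = (0.5, 0.5, 0.4, 0.1)  # a wide GT box in normalized (cx, cy, w, h)
print(shape_iou_loss(gt, gt))                                # close to 0
print(shape_iou_loss((0.55, 0.5, 0.4, 0.1), gt, scale=1.0))  # offset along the long side
print(shape_iou_loss((0.5, 0.55, 0.4, 0.1), gt, scale=1.0))  # same offset across the short side: larger loss
```

With `scale=1.0` this wide GT gets `ww = 1.6` and `hh = 0.4`, so a vertical offset (across the short side) is penalized more in the distance term as well as in IoU; with the default `scale=0.0` both weights are 1.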

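IV. How to Use ShapeIoU

4.1 Modification 1

First, open `ultralytics/utils/metrics.py` and find the `bbox_iou` function. The screenshot below shows part of the original code as it normally looks (only part of the function is visible). Replace that entire function with the whole code block from Section III.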


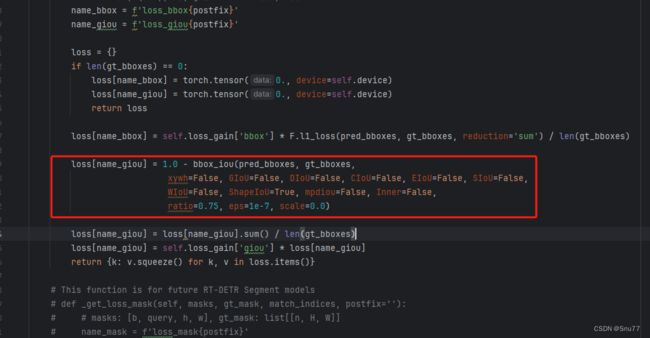

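4.2 Modification 2

Second, open another file, `ultralytics/models/utils/loss.py` (note: this is a different file from the one modified for YOLOv8!). Find the code block shown below in its original form, and replace the code inside the red box with the code I give below.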

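```python
# RT-DETR's predicted and GT boxes are normalized (cx, cy, w, h), so xywh=True,
# matching the original GIoU call; xywh=False would misread them as (x1, y1, x2, y2).
# hw is only used when mpdiou=True.
loss[name_giou] = 1.0 - bbox_iou(pred_bboxes, gt_bboxes,
                                 xywh=True, GIoU=False, DIoU=False, CIoU=False, EIoU=False, SIoU=False,
                                 WIoU=False, ShapeIoU=True, hw=2, mpdiou=False, Inner=False,
                                 ratio=0.75, eps=1e-7, scale=0.0)
```

The code after the replacement looks like this: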

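At this point the loss is ready to configure. As you can see, I have set `ShapeIoU=True`; if you additionally set `Inner=True` to apply the Inner-IoU idea, the loss becomes InnerShapeIoU.

If `Inner=False` and `ShapeIoU=True`, the loss is plain ShapeIoU.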
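The Inner idea, as implemented in the `Inner=True` branch of `bbox_iou`, computes IoU on auxiliary boxes that keep the original centers but have their width and height multiplied by `ratio`. Below is a pure-Python sketch of just that step; `inner_iou` is a hypothetical helper written for illustration, not an ultralytics function. For two offset boxes, a ratio below 1 lowers the IoU for the same offset and a ratio above 1 raises it.

```python
def inner_iou(box1, box2, ratio=0.75):
    """IoU of auxiliary boxes: (cx, cy, w, h) boxes scaled by `ratio` about their centers. Illustrative sketch."""
    (x1, y1, w1, h1), (x2, y2, w2, h2) = box1, box2
    # Auxiliary (inner) box edges, same centers as the original boxes
    b1_x1, b1_x2 = x1 - w1 * ratio / 2, x1 + w1 * ratio / 2
    b1_y1, b1_y2 = y1 - h1 * ratio / 2, y1 + h1 * ratio / 2
    b2_x1, b2_x2 = x2 - w2 * ratio / 2, x2 + w2 * ratio / 2
    b2_y1, b2_y2 = y2 - h2 * ratio / 2, y2 + h2 * ratio / 2
    # Intersection and union of the auxiliary boxes
    inter = max(0.0, min(b1_x2, b2_x2) - max(b1_x1, b2_x1)) * \
            max(0.0, min(b1_y2, b2_y2) - max(b1_y1, b2_y1))
    union = w1 * h1 * ratio ** 2 + w2 * h2 * ratio ** 2 - inter
    return inter / union


a, b = (0.0, 0.0, 2.0, 2.0), (0.5, 0.0, 2.0, 2.0)
print(inner_iou(a, b, ratio=1.0))  # 0.6, same as plain IoU
print(inner_iou(a, b, ratio=0.5))  # about 0.333, smaller auxiliary boxes
```

In the modified `bbox_iou`, this auxiliary-box IoU replaces the plain IoU when `Inner=True`, and the ShapeIoU distance and shape penalties are then subtracted from it, which is what this article calls InnerShapeIoU.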

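V. Summary

That concludes the main content of this article. I'd like to recommend my RT-DETR Effective Gains improvement column. It is newly launched and currently has an average quality score of 98. Going forward I will reproduce papers from the latest top conferences and also supplement some older improvement mechanisms. If this article helped you, please subscribe to the column and follow for more updates.

RT-DETR improvement column: RT-DETR column, continuously reproducing work from top conferences (Paper Harvester)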