数仓项目6.0配置大全(hadoop/Flume/zk/kafka/mysql配置)

配置背景

我使用的root用户,懒得加sudo

所有文件夹在/opt/module

所有安装包在/opt/software

所有脚本文件在/root/bin

三台虚拟机:hadoop102-103-104

分发脚本 fenfa,放在~/bin下,chmod 777 fenfa给权限

#!/bin/bash

#1. 判断参数个数

if [ $# -lt 1 ]

then

echo XXXXXXXXX No Arguement XXXXXXXXX!

exit;

fi

#2. 遍历集群所有机器

for host in hadoop103 hadoop104

do

echo ==================== $host ====================

#3. 遍历所有目录,挨个发送

for file in $@

do

#4. 判断文件是否存在

if [ -e $file ]

then

#5. 获取父目录

pdir=$(cd -P $(dirname $file); pwd)

#6. 获取当前文件的名称

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exists!

fi

done

doneHadoop3.3.4

集群规划

注意:NameNode和SecondaryNameNode不要安装在同一台服务器

注意:ResourceManager也很消耗内存,不要和NameNode、SecondaryNameNode配置

| hadoop102 |

hadoop103 |

hadoop104 |

|

| HDFS |

NameNode DataNode |

DataNode |

SecondaryNameNode DataNode |

| YARN |

NodeManager |

ResourceManager NodeManager |

NodeManager |

集群安装步骤

下载https://archive.apache.org/dist/hadoop/common/hadoop-3.3.4/hadoop-3.3.4.tar.gz

用xftp工具把安装包传到/opt/software

解压安装包

cd /opt/software/

tar -zxvf hadoop-3.3.4.tar.gz -C /opt/module/

改名、软连接(为了之后使用方便)

cd /opt/module

mv hadoop-3.3.4XXX hadoop-334

ln -s hadoop-334 hadoop

环境变量

vim /etc/profile.d/my_env.sh

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

分发hadoop和环境变量

fenfa /opt/module/hadoop-334

fenfa /opt/module/hadoop

fenfa /etc/profile.d/my_env.sh

配置文件

配置core-site.xml

cd $HADOOP_HOME/etc/hadoop

fs.defaultFS

hdfs://hadoop102:8020

hadoop.tmp.dir

/opt/module/hadoop/data

hadoop.http.staticuser.user

root

hadoop.proxyuser.atguigu.hosts

*

hadoop.proxyuser.atguigu.groups

*

hadoop.proxyuser.atguigu.users

*

-->

配置hdfs-site.xml

dfs.namenode.http-address

hadoop102:9870

dfs.namenode.secondary.http-address

hadoop104:9868

dfs.replication

1

配置yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.resourcemanager.hostname

hadoop103

yarn.nodemanager.env-whitelist

JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME

yarn.scheduler.minimum-allocation-mb

512

yarn.scheduler.maximum-allocation-mb

4096

yarn.nodemanager.resource.memory-mb

4096

yarn.nodemanager.pmem-check-enabled

true

yarn.nodemanager.vmem-check-enabled

false

配置mapred-site.xml

mapreduce.framework.name

yarn

配置workers

hadoop102

hadoop103

hadoop104

配置历史服务器mapred-site.xml

mapreduce.jobhistory.address

hadoop102:10020

mapreduce.jobhistory.webapp.address

hadoop102:19888

开启日志聚集功能,应用运行完成以后,将程序运行日志信息上传到HDFS系统上

yarn-site.xml

yarn.log-aggregation-enable

true

yarn.log.server.url

http://hadoop102:19888/jobhistory/logs

yarn.log-aggregation.retain-seconds

604800

fenfa配置文件夹$HADOOP_HOME/etc/hadoop

启动

如果集群是第一次启动,需要在hadoop102节点格式化NameNode(注意格式化之前,一定要先停止上次启动的所有namenode和datanode进程,然后再删除data和log数据)

hdfs namenode -format

start-dfs.sh

start-yarn.shWeb端查看HDFS的Web页面:http://hadoop102:9870/

启停脚本

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit ;

fi

case $1 in

"start")

echo " =================== 启动 hadoop集群 ==================="

echo " --------------- 启动 hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop/sbin/start-dfs.sh"

echo " --------------- 启动 yarn ---------------"

ssh hadoop103 "/opt/module/hadoop/sbin/start-yarn.sh"

echo " --------------- 启动 historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== 关闭 hadoop集群 ==================="

echo " --------------- 关闭 historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop/bin/mapred --daemon stop historyserver"

echo " --------------- 关闭 yarn ---------------"

ssh hadoop103 "/opt/module/hadoop/sbin/stop-yarn.sh"

echo " --------------- 关闭 hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac

给权限!!!!

Zookeeper

步骤

Index of /zookeeper

tar -zxvf apache-zookeeper-3.7.1-bin.tar.gz -C /opt/module/

mv apache-zookeeper-3.7.1-bin/ zookeeper

在/opt/module/zookeeper/目录下创建zkData

在/opt/module/zookeeper/zkData目录下创建一个myid的文件

在文件中添加与server对应的编号,hadoop102写2,103写3,104写4

2

配置zoo.cfg文件

重命名/opt/module/zookeeper/conf目录下的zoo_sample.cfg为zoo.cfg

修改数据存储路径配置

dataDir=/opt/module/zookeeper/zkData

#######################cluster##########################

server.2=hadoop102:2888:3888

server.3=hadoop103:2888:3888

server.4=hadoop104:2888:3888

fenfa整个zookeeper文件夹

记得修改myid文件

启动

#!/bin/bash

case $1 in

"start"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i 启动 ------------

ssh $i "/opt/module/zookeeper/bin/zkServer.sh start"

done

};;

"stop"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i 停止 ------------

ssh $i "/opt/module/zookeeper/bin/zkServer.sh stop"

done

};;

"status"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i 状态 ------------

ssh $i "/opt/module/zookeeper/bin/zkServer.sh status"

done

};;

esac

Kafka

步骤

Apache Kafka

tar -zxvf kafka_2.12-3.3.1.tgz -C /opt/module/

mv kafka_2.12-3.3.1/ kafka

进入到/opt/module/kafka

vim config/server.properties

#broker的全局唯一编号,不能重复,只能是数字。

broker.id=0

#broker对外暴露的IP和端口 (每个节点单独配置)

advertised.listeners=PLAINTEXT://hadoop102:9092

#kafka运行日志(数据)存放的路径,路径不需要提前创建,kafka自动帮你创建,可以配置多个磁盘路径,路径与路径之间可以用","分隔

log.dirs=/opt/module/kafka/datas

#配置连接Zookeeper集群地址(在zk根目录下创建/kafka,方便管理)

zookeeper.connect=hadoop102:2181,hadoop103:2181,hadoop104:2181/kafka

fenfa整个kafka文件夹

分别在hadoop103和hadoop104上修改配置文件/opt/module/kafka/config/server.properties中的broker.id(三个虚拟机分别是1/2/3)及advertised.listeners

在/etc/profile.d/my_env.sh文件中增加kafka环境变量配置

vim /etc/profile.d/my_env.sh

#KAFKA_HOME

export KAFKA_HOME=/opt/module/kafka

export PATH=$PATH:$KAFKA_HOME/bin

fenfa环境变量

启动

#! /bin/bash

case $1 in

"start"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------启动 $i Kafka-------"

ssh $i "/opt/module/kafka/bin/kafka-server-start.sh -daemon /opt/module/kafka/config/server.properties"

done

};;

"stop"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------停止 $i Kafka-------"

ssh $i "/opt/module/kafka/bin/kafka-server-stop.sh "

done

};;

esac

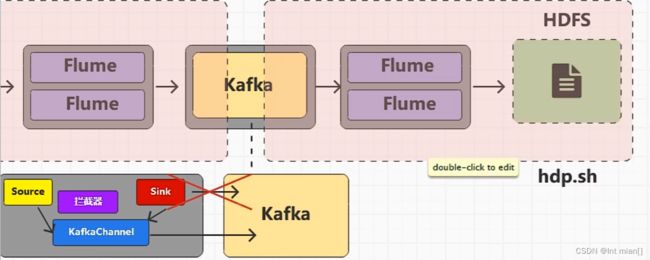

Flume

步骤

Index of /dist/flume

(1)将apache-flume-1.10.1-bin.tar.gz上传到linux的/opt/software目录下

(2)解压apache-flume-1.10.1-bin.tar.gz到/opt/module/目录下

mv /opt/module/apache-flume-1.10.1-bin /opt/module/flume

改vim conf/log4j2.xml

/opt/module/flume/log

# 引入控制台输出,方便学习查看日志

不用分发

配置采集文件

创建Flume配置文件

在hadoop102节点的Flume的job目录下创建file_to_kafka.conf。

#定义组件

a1.sources = r1

a1.channels = c1

#配置source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /opt/module/applog/log/app.*

a1.sources.r1.positionFile = /opt/module/flume/taildir_position.json

这里真泥马坑,不知道尚硅谷怎么顺利运行的,

这里如果taildir_position.json的上级目录存在,是无法运行的,需要多加一个不存在的目录

#配置channel

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092

a1.channels.c1.kafka.topic = topic_log

a1.channels.c1.parseAsFlumeEvent = false

#组装

a1.sources.r1.channels = c1

测试

#!/bin/bash

case $1 in

"start"){

echo " --------启动 hadoop102 采集flume-------"

ssh hadoop102 "nohup /opt/module/flume/bin/flume-ng agent -n a1 -c /opt/module/flume/conf/ -f /opt/module/flume/job/file_to_kafka.conf >/dev/null 2>&1 &"

};;

"stop"){

echo " --------停止 hadoop102 采集flume-------"

ssh hadoop102 "ps -ef | grep file_to_kafka | grep -v grep |awk '{print \$2}' | xargs -n1 kill -9 "

};;

esac