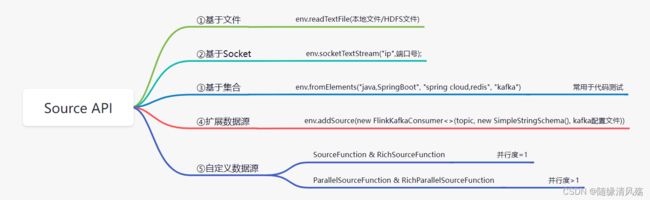

Chapter 4: Flink API Source Basics (Exercises)

1. Element collections

- Use case: testing code

env.fromElements

env.fromCollection

env.fromSequence(start,end)

- Code walkthrough

```java
package com.hxjy.app;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class flink02 {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment: the entry point that builds and launches the job and holds global settings
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Create a stream from elements of the same type
        DataStream<String> stringDS = env.fromElements("java,SpringBoot", "spring cloud,redis", "kafka");
        // Alternatives (fromCollection also needs: import java.util.Arrays;)
        // DataStream<String> stringDS1 = env.fromCollection(Arrays.asList("java,SpringBoot", "spring cloud,redis", "kafka"));
        // DataStream<Long> stringDS2 = env.fromSequence(1, 10);
        stringDS.print("before");

        // 3. Apply flatMap
        DataStream<String> flatmapStream = stringDS.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> collector) throws Exception {
                // 3.1 Split the element on commas
                String[] arr = value.split(",");
                // 3.2 Emit each piece as its own element
                for (String str : arr) {
                    collector.collect(str);
                }
            }
        });

        // 4. Print the result
        flatmapStream.print("after");

        // 5. A DataStream job only runs once execute() is called; the job name is optional
        env.execute("flink");
    }
}
```
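To check the splitting logic without starting a Flink job, the flatMap step can be mirrored in plain Java. This is only a sketch using `java.util.stream`, not the Flink API; the class name `SplitSketch` and the method `splitAll` are hypothetical names chosen for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Flink: mirrors the flatMap step with java.util.stream.
public class SplitSketch {

    // Every input element is split on "," and each piece becomes its own output element.
    public static List<String> splitAll(List<String> input) {
        return input.stream()
                .flatMap(value -> Arrays.stream(value.split(",")))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("java,SpringBoot", "spring cloud,redis", "kafka");
        System.out.println(splitAll(input));
        // prints [java, SpringBoot, spring cloud, redis, kafka]
    }
}
```

The three input elements become five output elements, which is the one-to-many behavior that distinguishes flatMap from map.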

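- Run result

2. Files / file systems

- Use case: reading data that has already been written to a file

env.readTextFile(<local file path or HDFS path>)

- Code walkthrough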

```java
package com.hxjy.app;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class flink02 {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment: the entry point that builds and launches the job and holds global settings
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Get the source: read a text file, one element per line
        DataStream<String> stringDS = env.readTextFile("C:/Users/PROTH/Desktop/shenme/test.txt");
        // HDFS variant. Check the port: it must be the NameNode RPC port from fs.defaultFS (often 8020);
        // 9870 is the default NameNode web UI port in Hadoop 3.x.
        // DataStream<String> stringDS = env.readTextFile("hdfs://hadoop102:9870/user/demo/wd.txt");
        stringDS.print("before");

        // 3. Apply flatMap
        DataStream<String> flatmapStream = stringDS.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> collector) throws Exception {
                // 3.1 Split the line on commas
                String[] arr = value.split(",");
                // 3.2 Emit each piece as its own element
                for (String str : arr) {
                    collector.collect(str);
                }
            }
        });

        // 4. Print the result
        flatmapStream.print("after");

        // 5. A DataStream job only runs once execute() is called; the job name is optional
        env.execute("flink");
    }
}
```
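The example above expects an input file to exist already. A small plain-Java sketch for generating one is shown here; the class name `SampleFile`, the temp-file prefix, and the sample lines are assumptions for illustration and are not part of the original exercise.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of Flink: creates a small comma-separated input file.
public class SampleFile {

    // Writes the given lines to a new temporary file and returns its path.
    public static Path write(List<String> lines) throws IOException {
        Path file = Files.createTempFile("flink-source-", ".txt");
        Files.write(file, lines, StandardCharsets.UTF_8);
        return file;
    }

    public static void main(String[] args) throws IOException {
        Path file = write(Arrays.asList("java,SpringBoot", "spring cloud,redis", "kafka"));
        // This absolute path can be passed to env.readTextFile(...).
        System.out.println(file.toAbsolutePath());
        // Reading the file back line by line shows the records a line-based reader starts from.
        for (String line : Files.readAllLines(file, StandardCharsets.UTF_8)) {
            System.out.println(line);
        }
    }
}
```

Each line of the file corresponds to one input element, so the file above yields three elements before the flatMap step and five after it.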

- Run result

3. Reading data from a socket port

- Use case: socket source, reading text sent to a port on a Linux host

env.socketTextStream("<host>", <port>);

- Code walkthrough

```java
package com.hxjy.app;

import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class flink02 {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment: the entry point that builds and launches the job and holds global settings
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Use a single parallel task so the printed order matches the input order
        env.setParallelism(1);

        // 2. Get the socket source: read text lines from a port on the Linux host
        DataStream<String> stringDataStream = env.socketTextStream("192.168.6.103", 8888);
        stringDataStream.print("before");

        // 3. Print the result (no transformation is applied here, so both prints show the same records)
        stringDataStream.print("after");

        // 4. A DataStream job only runs once execute() is called; the job name is optional
        env.execute("flink01");
    }
}
```

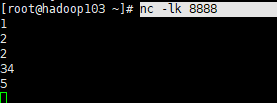

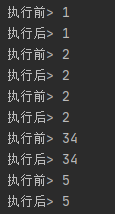

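- Run result

(1) On the Linux host, run nc -lk 8888. Start it before the Flink job, because socketTextStream connects to the port as a client.

(2) Run result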
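Where nc is not available (for example when testing on Windows), the listener in step (1) can be approximated with a few lines of plain Java. This is a sketch under assumptions: `LineServer` and `serveOnce` are hypothetical names, the server handles a single client, and it closes the connection after sending a fixed list of lines rather than reading from the keyboard as nc does.

```java
import java.io.IOException;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of Flink: a plain-Java stand-in for `nc -lk 8888`.
public class LineServer {

    // Waits for one client, sends each line terminated by '\n', then closes the connection.
    public static void serveOnce(ServerSocket server, List<String> lines) throws IOException {
        try (Socket client = server.accept();
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8))) {
            for (String line : lines) {
                out.print(line);
                out.print('\n'); // explicit '\n' so the delimiter does not depend on the platform
            }
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        // Port 8888 matches the socketTextStream call in the example above.
        try (ServerSocket server = new ServerSocket(8888)) {
            System.out.println("Waiting for a client on port " + server.getLocalPort());
            serveOnce(server, Arrays.asList("java,SpringBoot", "spring cloud,redis", "kafka"));
        }
    }
}
```

When the helper runs on the same machine as the job, the host passed to socketTextStream would be "localhost" instead of the Linux host's IP address.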

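4. Custom data sources

- Use case: implementing one of the source interfaces to define your own data source

Parallelism of 1: SourceFunction & RichSourceFunction

Parallelism greater than 1: ParallelSourceFunction & RichParallelSourceFunction

- Code walkthrough

(1) Define the record type emitted by the source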

```java
package com.lihaiwei.text1.model;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.Date;

// Order record emitted by the custom source.
// Lombok generates the getters/setters, toString, and both constructors.
@Data
@AllArgsConstructor
@NoArgsConstructor
public class VideoOrder {

    private String tradeNo;
    private String title;
    private int money;
    private int userId;
    private Date createTime;
}
```

(2) Implement the source that generates random orders

```java
package com.lihaiwei.text1.source;

import com.lihaiwei.text1.model.VideoOrder;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

import java.util.*;

public class VideoOrderSource extends RichParallelSourceFunction<VideoOrder> {

    // volatile: cancel() is called from a different thread than run()
    private volatile boolean flag = true;

    private Random random = new Random();

    private static List<String> list = new ArrayList<>();

    static {
        list.add("Spark course");
        list.add("Oracle course");
        list.add("RabbitMQ message queue");
        list.add("Kafka course");
        list.add("Hadoop course");
        list.add("Flink stream processing course");
        list.add("Industrial-grade microservices project bootcamp");
        list.add("Linux course");
    }

    /**
     * Called before run(); use it to initialize connections.
     * @param parameters
     * @throws Exception
     */
    @Override
    public void open(Configuration parameters) throws Exception {
        System.out.println("-----open-----");
    }

    /**
     * Called when the source shuts down; use it to release resources.
     * @throws Exception
     */
    @Override
    public void close() throws Exception {
        System.out.println("-----close-----");
    }

    /**
     * Produces the data: one random order per second until cancel() is called.
     * @param ctx
     * @throws Exception
     */
    @Override
    public void run(SourceContext<VideoOrder> ctx) throws Exception {
        while (flag) {
            Thread.sleep(1000);
            String id = UUID.randomUUID().toString();
            int userId = random.nextInt(10);
            int money = random.nextInt(100);
            int videoNum = random.nextInt(list.size());
            String title = list.get(videoNum);
            VideoOrder videoOrder = new VideoOrder(id, title, money, userId, new Date());
            ctx.collect(videoOrder);
        }
    }

    /**
     * Handles job cancellation by stopping the loop in run().
     */
    @Override
    public void cancel() {
        flag = false;
    }
}
```
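The `flag` field in VideoOrderSource follows a common run/cancel pattern: run() loops while the flag is set, and cancel(), invoked from another thread, clears it. The plain-Java sketch below isolates that pattern so it can be tried without Flink; `CancellableLoop`, `emittedCount`, and the 10 ms pause are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in, not part of Flink: isolates the run()/cancel() flag pattern.
public class CancellableLoop {

    // volatile: cancel() runs on a different thread, and its write must be visible to the loop.
    private volatile boolean running = true;
    private final AtomicInteger emitted = new AtomicInteger();

    // Plays the role of run(): keeps producing until cancel() is called.
    public void run() throws InterruptedException {
        while (running) {
            emitted.incrementAndGet(); // a real source would call ctx.collect(...) here
            Thread.sleep(10);
        }
    }

    // Plays the role of cancel(): only flips the flag; run() exits on its next check.
    public void cancel() {
        running = false;
    }

    public int emittedCount() {
        return emitted.get();
    }

    public static void main(String[] args) throws Exception {
        CancellableLoop loop = new CancellableLoop();
        Thread worker = new Thread(() -> {
            try {
                loop.run();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        Thread.sleep(100);
        loop.cancel();
        worker.join();
        System.out.println("emitted " + loop.emittedCount() + " records before cancel");
    }
}
```

Without `volatile`, the loop thread is not guaranteed to observe the update made by cancel(), which is why the flag in the source class is declared that way.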

(3) Create the main method

```java
package com.lihaiwei.text1.app;

import com.lihaiwei.text1.model.VideoOrder;
import com.lihaiwei.text1.source.VideoOrderSource;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class Flink01App {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment: the entry point that builds and launches the job and holds global settings
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Get the source: attach the custom VideoOrderSource
        DataStreamSource<VideoOrder> orderDS = env.addSource(new VideoOrderSource());

        // 3. Print the result
        orderDS.print("after");

        // 4. A DataStream job only runs once execute() is called; the job name is optional
        env.execute("flink");
    }
}
```

- Run result