爬虫安居客新房

一、首先看网址

后面有全部代码

https://hf.fang.anjuke.com/loupan/baohequ/p3

这种形式很好分析,https://hf.fang.anjuke.com/loupan/+行政区域+页码

xinfang_area = ["feixixian", "baohequ", "shushanqu", "luyangqu", "yaohaiqu", "gaoxinqu","feidongxian", "zhengwuqu", "jingjikaifaqu"] # 行政区域

url = "https://hf.fang.anjuke.com/loupan" # 新房

new_url = f"{url}/{area}/p{n}" # 网页

我们用requests库获取页面内容,再用bs解析,获得bs对象,代码:

for area in xinfang_area:

n = 1

while True:

headers = make_headers()

if n == 1:

new_url = f"{url}/{area}"

else:

new_url = f"{url}/{area}/p{n}"

print(new_url)

res = requests.get(new_url, headers=headers).text

content = BeautifulSoup(res, "html.parser")

if content is None: # 重试

n = 1

continue二、看内容

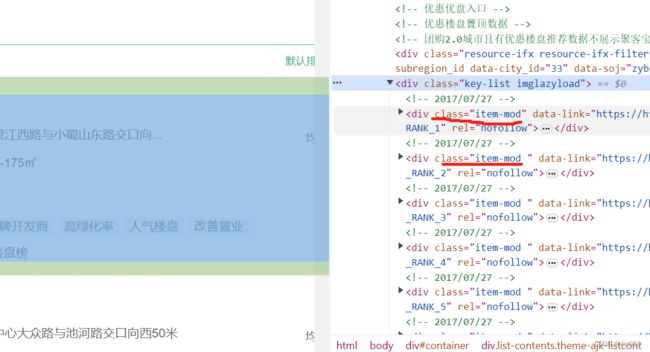

每一块的内容都是在

标签下面

根据刚获取的页面内容(页面包含当页所有楼盘的内容),用bs的find_all根据class:item-mod获得所有块的列表,我们看看每一块的网页是什么:

根据每一块的,内容代码基本完成了:

data = content.find_all('div', attrs={'class': 'item-mod'})

for d in data:

lp_name = d.find_next("a", attrs={"class": "lp-name"}).text

address = d.find_next("a", attrs={"class": "address"}).text

huxing = d.find_next("a", attrs={"class": "huxing"}).text

tags = d.find_next("a", attrs={"class": "tags-wrap"}).text

prices = d.find_next("a", attrs={"class": "favor-pos"}).text

price = re.findall(r'\d+', prices)[0] # 具体价格

# 写入数据

row_data = [area, lp_name, address, huxing, tags, prices, price]

with open(file_name, 'a', encoding='utf-8') as f:

writer = csv.writer(f)

writer.writerow(row_data)

m += 1

print(area, f"第{n}页第{m}条数据")三、换区域逻辑

不废话,直接分析

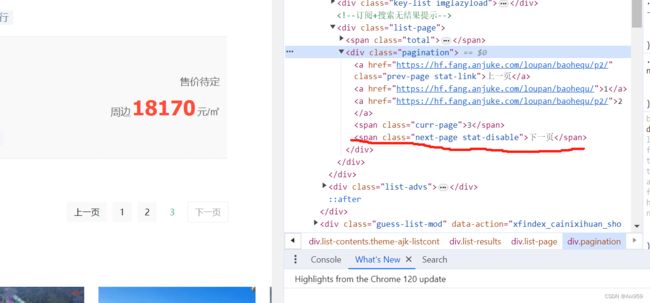

我们看到页面有下一页标签,我们对比有下一页与尾页的下一页标签的不同

这是有下一页的

这是尾页的

我们发现,如果尾页的下一页标签

此时我们的网页可以到下一个区域爬取了

next_page = content.find('span', attrs={'class': 'next-page stat-disable'})

if next_page is not None: # 没有下一页

break

四、全部代码

注意,如果没有数据可能是网页需要验证!

其他城市自己分析网页试试吧,我就不解释了

import requests

import csv

import time

import re

from bs4 import BeautifulSoup

from user_agent import make_headers

xinfang_area = ["feixixian", "baohequ", "shushanqu", "luyangqu", "yaohaiqu", "gaoxinqu",

"feidongxian", "zhengwuqu", "jingjikaifaqu"]

url = "https://hf.fang.anjuke.com/loupan" # 新房

file_name = 'anjuke/xinfang.csv'

headers = {"User-Agent":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0"}

with open(file_name, 'w', encoding='utf-8') as f:

writer = csv.writer(f)

# 2:写表头

writer.writerow(['区域', '楼盘', '地址', '户型', "其他", '价格', '单价'])

for area in xinfang_area:

n = 1

while True:

# headers = make_headers()

if n == 1:

new_url = f"{url}/{area}"

else:

new_url = f"{url}/{area}/p{n}"

print(new_url)

res = requests.get(new_url, headers=headers).text

content = BeautifulSoup(res, "html.parser")

if content is None: # 重试

n = 1

print("正在重试")

continue

# 当前页和尾页判断

next_page = content.find('span', attrs={'class': 'next-page stat-disable'})

# 解析数据

print(area, f"第{n}页数据")

m = 0

data = content.find_all('div', attrs={'class': 'item-mod'})

for d in data:

lp_name = d.find_next("a", attrs={"class": "lp-name"}).text

address = d.find_next("a", attrs={"class": "address"}).text

huxing = d.find_next("a", attrs={"class": "huxing"}).text

tags = d.find_next("a", attrs={"class": "tags-wrap"}).text

prices = d.find_next("a", attrs={"class": "favor-pos"}).text

price = re.findall(r'\d+', prices) # 具体价格

if len(price) > 0:

price = price[0]

# 写入数据

row_data = [area, lp_name, address, huxing, tags, prices, price]

with open(file_name, 'a', encoding='utf-8') as f:

writer = csv.writer(f)

writer.writerow(row_data)

m += 1

print(area, f"第{n}页第{m}条数据")

if next_page is not None: # 没有下一页

break

n += 1

time.sleep(2)

new_url = None

代码更新:

import os

import requests

import csv

import time

import re

from bs4 import BeautifulSoup

from user_agent import make_headers

class CrawlAnJuKeXinFang:

def __init__(self, areas, url, path, file_name):

self.areas = areas

self.url = url

self.path = path

self.file_name = file_name

self.save_path = self.path + "/" + self.file_name

self.__headers = make_headers()

self.__create_file()

self.__nums = 0

self.__crawl()

print(f"完成!总计条{self.__nums}数据")

# 创建文件

def __create_file(self):

if not os.path.exists(self.path):

os.makedirs(self.path)

with open(self.save_path, 'w', encoding='utf-8', newline="") as f: # newline=""防止空行

writer = csv.writer(f)

writer.writerow(['区域', '楼盘', '地址', '户型', "其他", '价格', '单价', '在售'])

# 保存数据

def __save_data(self, row_data):

with open(self.save_path, 'a', encoding='utf-8', newline="") as f:

writer = csv.writer(f)

writer.writerow(row_data)

# 处理数据

def __handle_data(self, data, area):

m = 0

for d in data:

try:

lp_name = d.find_next("a", attrs={"class": "lp-name"}).text

address = d.find_next("a", attrs={"class": "address"}).text

huxing = d.find_next("a", attrs={"class": "huxing"}).text

tags = d.find_next("a", attrs={"class": "tags-wrap"}).text.replace("\n", ";")

tags = tags[2:]

onsale = tags.split(';')[0]

prices = d.find_next("a", attrs={"class": "favor-pos"}).text

price = re.findall(r'\d+', prices) # 具体价格

price = price[0] if len(price) > 0 else "待定"

row_data = [area, lp_name, address, huxing, tags, prices, price, onsale]

self.__save_data(row_data)

except Exception as err:

print(err)

print("数据获取有误!")

# 写入数据

m += 1

print(area, f"第{m}条数据")

self.__nums += m

def __crawl(self):

for area in self.areas:

n = 1

while True:

if n == 1:

new_url = f"{self.url}/{area}"

else:

new_url = f"{self.url}/{area}/p{n}"

print(new_url)

print(f"{area}第{n}页数据——————————————————————————————————")

res = requests.get(new_url, headers=self.__headers).text

content = BeautifulSoup(res, "html.parser")

# 当前页和尾页判断

next_page = content.find('span', attrs={'class': 'next-page stat-disable'})

# 解析数据

data = content.find_all('div', attrs={'class': 'item-mod'})

if data is None: # 重试

n = 1

print("正在重试!")

continue

# 处理数据

self.__handle_data(data, area)

footer = content.find('div', attrs={"class": "pagination"}) # 是否有换页的控件没有就换一个区县

# print(footer)

if next_page is not None or footer is None: # 没有下一页

break

n += 1

time.sleep(2)

if __name__ == '__main__':

# 江宁 浦口 栖霞 六合 溧水 雨花台 建邺 秦淮 鼓楼 高淳 玄武 南京周边

# xinfang_area = ["jiangning", "pukou", "xixia", "liuhe", "yuhuatai", "jianye",

# "nanjingzhoubian","xuanwu", "gaochun", "qinhuai"]

# url = "https://nj.fang.anjuke.com/loupan" # 安庆新房

# file_name = 'anjuke/anqing_xinfang.csv'

xinfang_area = ["feixixian", "baohequ", "shushanqu", "luyangqu", "yaohaiqu", "gaoxinqu",

"feidongxian", "zhengwuqu", "jingjikaifaqu"]

url = "https://hf.fang.anjuke.com/loupan" # 新房

path = "anjuke/"

file_name = '合肥2024-1-24.csv'

Crawler = CrawlAnJuKeXinFang(xinfang_area, url, path, file_name)