Deploying Kubernetes 1.28.2 with kubeadm on Ubuntu 22.04

Reference source for this article:

Author: Pokeya

Link: https://juejin.cn/post/7300419978486169641

Source: Juejin (稀土掘金)

1.安装前准备

说明:操作系统版本:Ubuntu 22.04 LTS

1.1安装docker

apt install docker.io可以看到这里会把containerd一起安装了

docker版本

containerd版本

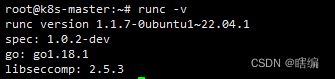

runc版本

修改docker的相关配置

cat > /etc/docker/daemon.json << EOF

{

"data-root": "/data2/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"http://hub-mirror.c.163.com"

],

"insecure-registries": ["harbor.local","harbor.localdomain:20004"],

"max-concurrent-downloads": 10,

"live-restore": true,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "50m",

"max-file": "1"

},

"storage-driver": "overlay2"

}

EOF

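# data-root: changes the Docker data directory

# registry-mirrors: registry mirrors for faster image pulls

# insecure-registries: internal image registries to trust

# log-opts: log rotation settings that keep Docker logs from filling the disk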
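A syntax error in /etc/docker/daemon.json (a trailing comma, for example) keeps dockerd from starting, so it can be worth checking the file before the restart. The following is a minimal sketch, not part of the original guide; the function name validate_json is illustrative, and it assumes python3 is available, as it is on a default Ubuntu 22.04 system.

```shell
# Return 0 if the file parses as JSON, non-zero otherwise.
# Assumption: python3 is installed (default on Ubuntu 22.04).
validate_json() {
  python3 -m json.tool "$1" > /dev/null 2>&1
}

# Example usage (run as root on the Docker host):
#   validate_json /etc/docker/daemon.json && systemctl restart docker
```

Chaining the restart behind the check means a broken file never reaches the daemon.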

Restart Docker after the change

systemctl restart docker

The service should now show as running.

1.2 Set the hostname and add it to /etc/hosts

hostnamectl set-hostname "k8s-master"

# Add the following line to /etc/hosts

10.0.20.7 k8s-master

1.3 Configure the apt sources

cat > /etc/apt/sources.list << EOF

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

EOF

# 清除apt的软件包缓存

apt clean

# 清除apt的旧版本软件包

apt autoclean

# 刷新软件包列表

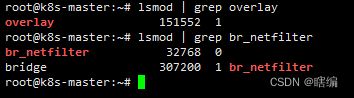

apt update1.4 检查是否加载了br_netfilt模块

lsmod | grep br_netfilt

如果没有则配置

# 创建一个名为 kubernetes.conf 的内核配置文件,并写入以下配置内容

cat > /etc/sysctl.d/kubernetes.conf << EOF

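# Pass bridged IPv6 traffic to ip6tables for processing (has no effect if the firewall is disabled or iptables is not in use)

net.bridge.bridge-nf-call-ip6tables = 1

# Pass bridged IPv4 traffic to iptables for processing (has no effect if the firewall is disabled or iptables is not in use)

net.bridge.bridge-nf-call-iptables = 1

# Enable forwarding of IPv4 packets

net.ipv4.ip_forward = 1

# Disable sending ICMP redirect messages

# net.ipv4.conf.all.send_redirects = 0

# net.ipv4.conf.default.send_redirects = 0

# Raise the maximum number of tracked connections

# net.netfilter.nf_conntrack_max = 1000000

# Raise the timeout for established connections in the conntrack table

# net.netfilter.nf_conntrack_tcp_timeout_established = 86400

# Increase the listen queue size

# net.core.somaxconn = 1024

# Mitigate SYN flood attacks

# net.ipv4.tcp_syncookies = 1

# net.ipv4.tcp_max_syn_backlog = 2048

# net.ipv4.tcp_synack_retries = 2

# File descriptor limit (check the current value with "sysctl fs.file-max" first; on recent systems the default may already be higher than this)

fs.file-max = 65536

# Set swappiness to 0 so the kernel avoids swapping, reducing frequent disk reads and writes

vm.swappiness = 0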

EOF

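# The first three settings are the ones recommended by the official documentation; add the others as needed. Then run:

sysctl -p /etc/sysctl.d/kubernetes.conf

Note: some of the kernel parameters above are for reference only and do not have to be enabled. If sysctl reports that the net.bridge.* keys do not exist, the br_netfilter module is not loaded yet; load it with the modprobe commands that follow and run the sysctl command again.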

# Load the br_netfilter kernel module, which provides the netfilter hooks needed for bridged traffic

modprobe br_netfilter

modprobe overlay

# Check again whether the modules are loaded

lsmod | grep br_netfilter

lsmod | grep overlay
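Once the modules are loaded and the sysctl file has been applied, the three required parameters can be read back from /proc/sys. The helper below is a small sketch, not taken from the original guide; check_sysctl is an illustrative name, and the optional third argument exists only so the function can be pointed at a different directory for testing.

```shell
# Compare one kernel parameter with the value expected by kubernetes.conf.
# $1 = sysctl key, $2 = expected value, $3 = sysctl root (defaults to /proc/sys).
check_sysctl() {
  cs_path="${3:-/proc/sys}/$(printf '%s' "$1" | tr '.' '/')"
  if [ ! -r "$cs_path" ]; then
    # For net.bridge.* keys this usually means br_netfilter is not loaded.
    echo "MISSING $1"
    return 1
  fi
  cs_actual="$(cat "$cs_path")"
  if [ "$cs_actual" = "$2" ]; then
    echo "OK $1=$cs_actual"
  else
    echo "MISMATCH $1=$cs_actual (expected $2)"
    return 1
  fi
}

# Example usage:
#   check_sysctl net.bridge.bridge-nf-call-iptables 1
#   check_sysctl net.bridge.bridge-nf-call-ip6tables 1
#   check_sysctl net.ipv4.ip_forward 1
```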

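1.5 Install ipvsadm and ipset

ipset is mainly used to support Service load balancing and network policies. It enables high-performance packet filtering and forwarding, and fast matching of IP addresses and ports.

ipvsadm is mainly used to configure and manage the IPVS load balancer that implements Service load balancing.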

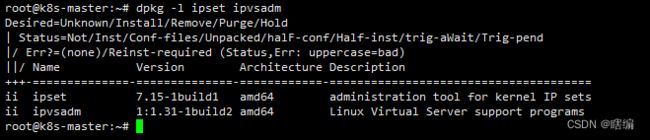

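# Check whether they are already installed

dpkg -l ipset ipvsadm

# Install with apt

apt install -y ipset ipvsadm

After installation the version information is displayed.

1.6 Add the kernel module configuration

# List the kernel modules to load automatically at boot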

cat > /etc/modules-load.d/kubernetes.conf << EOF

# /etc/modules-load.d/kubernetes.conf

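# Linux bridge support

br_netfilter

# IPVS load balancer

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

# Connection tracking (nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19, so on Ubuntu 22.04 the module is nf_conntrack)

nf_conntrack

# iptables rules

ip_tables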

EOF

# Add execute permission (optional: systemd-modules-load only needs to read the file)

chmod a+x /etc/modules-load.d/kubernetes.conf
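Files under /etc/modules-load.d are read at boot, so the modules listed above are not loaded until the next reboot. To load them right away, the names can be extracted from the file and passed to modprobe. This is a sketch under that assumption; list_modules is an illustrative helper, not something the original guide defines.

```shell
# Print the module names listed in a modules-load.d file,
# skipping comment lines and blank lines.
list_modules() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Example usage (run as root): load every listed module immediately,
# then confirm that they appear in lsmod.
#   list_modules /etc/modules-load.d/kubernetes.conf | xargs -r -n1 modprobe
#   lsmod | grep -E 'ip_vs|br_netfilter|nf_conntrack'
```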

As shown in the figure:

1.7 Disable swap

# Show the swap areas currently in use

swapon --show

# Turn off all active swap areas

swapoff -a
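swapoff -a only lasts until the next reboot; to keep swap off, the swap entry in /etc/fstab has to be commented out as well. The guide does not give a command for that step, so the following is one possible sketch. The function name comment_out_swap is illustrative, and the pattern assumes a standard whitespace-separated fstab in which the filesystem type field is the word swap.

```shell
# Comment out every active swap entry in an fstab-style file.
# $1 = path to the file (normally /etc/fstab). A .bak copy is kept.
comment_out_swap() {
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Example usage (run as root):
#   comment_out_swap /etc/fstab
#   grep swap /etc/fstab
```

Lines that are already commented out are left alone, so running the function twice does not add a second #.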

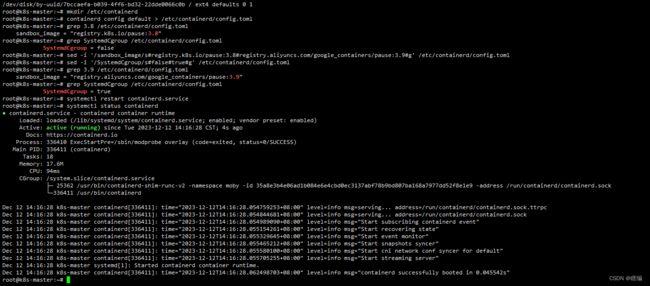

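# Comment out the swap entry in /etc/fstab so that swap stays off after a reboot

1.8 Modify the default containerd configuration

# Create the directory that holds the containerd configuration file

mkdir -p /etc/containerd

# Generate a default containerd configuration file

containerd config default > /etc/containerd/config.toml

# The sandbox image in the generated file is registry.k8s.io/pause:3.8; change it to pause:3.9 from the Aliyun mirror

sed -i '/sandbox_image/s#registry.k8s.io/pause:3.8#registry.aliyuncs.com/google_containers/pause:3.9#g' /etc/containerd/config.toml

# Make the container runtime (containerd + CRI) use the systemd cgroup driver when creating containers

sed -i '/SystemdCgroup/s/false/true/' /etc/containerd/config.toml

# Restart containerd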

systemctl restart containerd
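Both sed commands above exit silently when their pattern does not match, for example when the packaged containerd ships a default pause tag other than 3.8. A quick check of the resulting file can catch that. The helper below is a sketch, not part of the original guide; check_containerd_config is an illustrative name, and it assumes the "key = value" layout that containerd config default produces.

```shell
# Report whether a containerd config.toml carries the two edits made above.
# $1 = path to config.toml, $2 = expected sandbox image.
check_containerd_config() {
  grep -Fq "sandbox_image = \"$2\"" "$1" \
    || { echo "sandbox_image is not $2"; return 1; }
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1" \
    || { echo "SystemdCgroup is not true"; return 1; }
  echo "config looks as expected"
}

# Example usage:
#   check_containerd_config /etc/containerd/config.toml \
#     registry.aliyuncs.com/google_containers/pause:3.9
```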

1.9 Install crictl

Download: GitHub - kubernetes-sigs/cri-tools: CLI and validation tools for Kubelet Container Runtime Interface (CRI).

# Download

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.28.0/crictl-v1.28.0-linux-amd64.tar.gz

tar xf crictl-v1.28.0-linux-amd64.tar.gz

mv crictl /usr/local/bin/

Running crictl reports errors:

WARN[0000] runtime connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.

ERRO[0000] validate service connection: validate CRI v1 runtime API for endpoint "unix:///var/run/dockershim.sock": rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial unix /var/run/dockershim.sock: connect: no such file or directory"

WARN[0000] image connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.

ERRO[0000] validate service connection: validate CRI v1 image API for endpoint "unix:///var/run/dockershim.sock": rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial unix /var/run/dockershim.sock: connect: no such file or directory"

Fix: write the endpoints to /etc/crictl.yaml

cat > /etc/crictl.yaml << EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: true

pull-image-on-create: false

EOF

# 可以将debug改为false,不然执行命令会出现一些debug信息

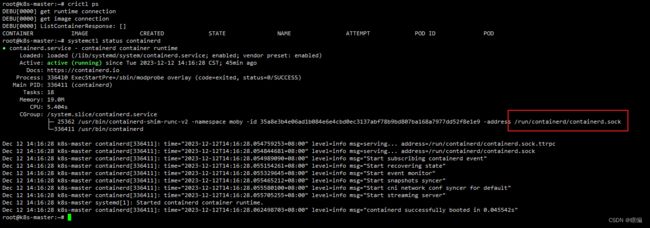

# 注意:runtime-endpoint和image-endpoint需要看containerd的sock文件是在哪里,可以systemctl status containerd看看2.开始安装

2.1 添加kubernetes源

# 更新 apt 包索引并安装使用 Kubernetes apt 仓库所需要的包

apt-get install -y gnupg gnupg2 curl software-properties-common

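# Download the Google Cloud GPG key (this may be blocked and unreachable from mainland China; note also that the legacy apt.kubernetes.io repository has since been frozen in favor of pkgs.k8s.io)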

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | gpg --dearmour -o /etc/apt/trusted.gpg.d/cgoogle.gpg

# Add the official Kubernetes repository to the system's apt sources list

apt-add-repository "deb http://apt.kubernetes.io/ kubernetes-xenial main"

# Alternatively, use a mirror in mainland China

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

# Add the Aliyun Kubernetes mirror to the system's apt sources list

cat > /etc/apt/sources.list.d/kubernetes.list << EOF

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

# Update the apt package index

apt-get update

Start the installation

root@k8s-master:~# apt-get install -y kubelet kubeadm kubectl

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

conntrack cri-tools ebtables kubernetes-cni socat

The following NEW packages will be installed:

conntrack cri-tools ebtables kubeadm kubectl kubelet kubernetes-cni socat

0 upgraded, 8 newly installed, 0 to remove and 186 not upgraded.

Need to get 87.1 MB of archives.

After this operation, 336 MB of additional disk space will be used.

Get:1 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 cri-tools amd64 1.26.0-00 [18.9 MB]

Get:2 http://mirrors.aliyun.com/ubuntu jammy/main amd64 conntrack amd64 1:1.4.6-2build2 [33.5 kB]

Get:3 http://mirrors.aliyun.com/ubuntu jammy/main amd64 ebtables amd64 2.0.11-4build2 [84.9 kB]

Get:4 http://mirrors.aliyun.com/ubuntu jammy/main amd64 socat amd64 1.7.4.1-3ubuntu4 [349 kB]

Get:5 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubernetes-cni amd64 1.2.0-00 [27.6 MB]

Get:6 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubelet amd64 1.28.2-00 [19.5 MB]

Get:7 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubectl amd64 1.28.2-00 [10.3 MB]

Get:8 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 kubeadm amd64 1.28.2-00 [10.3 MB]

Fetched 87.1 MB in 3min 3s (476 kB/s)

Selecting previously unselected package conntrack.

(Reading database ... 89064 files and directories currently installed.)

Preparing to unpack .../0-conntrack_1%3a1.4.6-2build2_amd64.deb ...

Unpacking conntrack (1:1.4.6-2build2) ...

Selecting previously unselected package cri-tools.

Preparing to unpack .../1-cri-tools_1.26.0-00_amd64.deb ...

Unpacking cri-tools (1.26.0-00) ...

Selecting previously unselected package ebtables.

Preparing to unpack .../2-ebtables_2.0.11-4build2_amd64.deb ...

Unpacking ebtables (2.0.11-4build2) ...

Selecting previously unselected package kubernetes-cni.

Preparing to unpack .../3-kubernetes-cni_1.2.0-00_amd64.deb ...

Unpacking kubernetes-cni (1.2.0-00) ...

Selecting previously unselected package socat.

Preparing to unpack .../4-socat_1.7.4.1-3ubuntu4_amd64.deb ...

Unpacking socat (1.7.4.1-3ubuntu4) ...

Selecting previously unselected package kubelet.

Preparing to unpack .../5-kubelet_1.28.2-00_amd64.deb ...

Unpacking kubelet (1.28.2-00) ...

Selecting previously unselected package kubectl.

Preparing to unpack .../6-kubectl_1.28.2-00_amd64.deb ...

Unpacking kubectl (1.28.2-00) ...

Selecting previously unselected package kubeadm.

Preparing to unpack .../7-kubeadm_1.28.2-00_amd64.deb ...

Unpacking kubeadm (1.28.2-00) ...

Setting up conntrack (1:1.4.6-2build2) ...

Setting up kubectl (1.28.2-00) ...

Setting up ebtables (2.0.11-4build2) ...

Setting up socat (1.7.4.1-3ubuntu4) ...

Setting up cri-tools (1.26.0-00) ...

Setting up kubernetes-cni (1.2.0-00) ...

Setting up kubelet (1.28.2-00) ...

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.

Setting up kubeadm (1.28.2-00) ...

Processing triggers for man-db (2.10.2-1) ...

Scanning processes...

Scanning linux images...

Running kernel seems to be up-to-date.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

# Hold the package versions so they are not upgraded automatically

apt-mark hold kubelet kubeadm kubectl

2.2 Configure kubelet

# Use the /etc/default/kubelet file to pass extra arguments to kubelet

cat > /etc/default/kubelet << EOF

# This flag makes kubelet use systemd as its cgroup driver, matching the container runtime

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

EOF

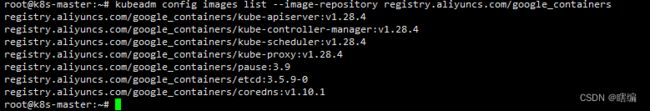

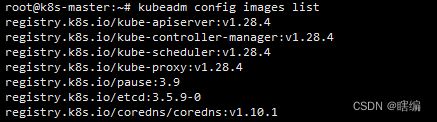

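2.3 List the required images (using the Aliyun image repository)

kubeadm config images list \

--image-repository registry.aliyuncs.com/google_containers

Without this flag kubeadm pulls from its default registry (registry.k8s.io), which may not be reachable.

2.3 Initialize with kubeadm

# Choose a pod network CIDR for the cluster;

# 10.244.0.0/16 is a commonly used default.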

kubeadm init --control-plane-endpoint=k8s-master \

--image-repository=registry.aliyuncs.com/google_containers \

--kubernetes-version=v1.28.2 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=10.0.20.7 \

--cri-socket unix:///var/run/containerd/containerd.sock

Output after initialization completes:

root@k8s-master:~# kubeadm init --control-plane-endpoint=k8s-master \

> --image-repository=registry.aliyuncs.com/google_containers \

> --kubernetes-version=v1.28.2 \

> --pod-network-cidr=10.244.0.0/16 \

> --apiserver-advertise-address=10.0.20.7 \

> --cri-socket unix://var/run/containerd/containerd.sock

[init] Using Kubernetes version: v1.28.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.20.7]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [10.0.20.7 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [10.0.20.7 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.502828 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: ttsz42.fwrlnl6iz65nlija

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

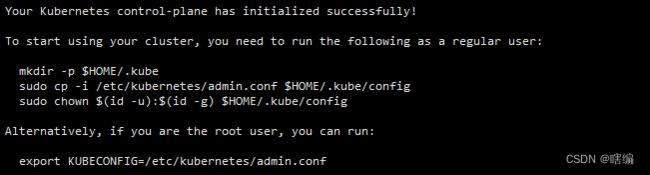

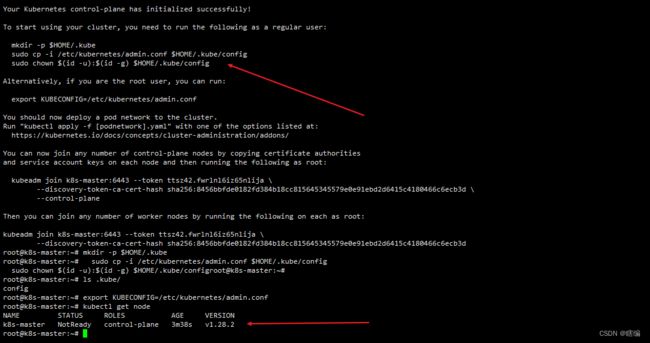

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join k8s-master:6443 --token ttsz42.fwrlnl6iz65nlija \

--discovery-token-ca-cert-hash sha256:8456bbfde0182fd384b18cc815645345579e0e91ebd2d6415c4180466c6ecb3d \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-master:6443 --token ttsz42.fwrlnl6iz65nlija \

--discovery-token-ca-cert-hash sha256:8456bbfde0182fd384b18cc815645345579e0e91ebd2d6415c4180466c6ecb3d

What the initialization did:

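- Generated the certificates and keys used by the API server, etcd, and other components.

- Created the kubeconfig files that hold the configuration needed to access the cluster.

- Created the static Pod manifest for etcd.

- Created the static Pod manifests for kube-apiserver, kube-controller-manager, and kube-scheduler.

- Started kubelet.

- Marked the node k8s-master as a control-plane node by adding the corresponding labels and the NoSchedule taint.

- Configured the bootstrap token, RBAC roles, the cluster-info ConfigMap, and so on.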
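The flags passed to kubeadm init in this section can also be captured in a configuration file, which is easier to keep under version control and to reuse. The sketch below writes such a file with the same values used in this guide; the function name write_kubeadm_config and the output path in the usage note are illustrative, and the field names are those of the kubeadm.k8s.io/v1beta3 configuration API.

```shell
# Write a kubeadm configuration file equivalent to the flags used in this guide.
# $1 = output path. All values are copied from the kubeadm init command above.
write_kubeadm_config() {
  cat > "$1" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.20.7
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.2
controlPlaneEndpoint: k8s-master
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# Example usage (on a fresh node, instead of the flag form):
#   write_kubeadm_config /root/kubeadm-config.yaml
#   kubeadm init --config /root/kubeadm-config.yaml
```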

After it finishes, remember to run the commands shown in the output above.

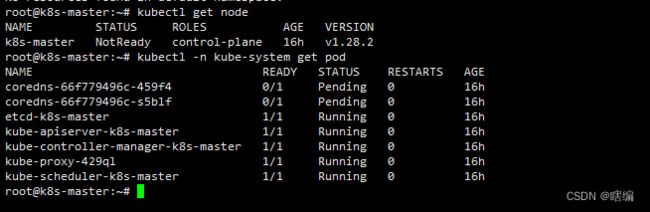

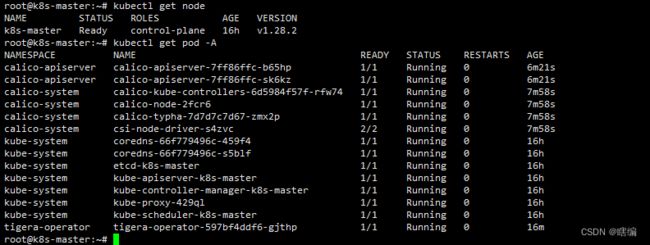

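Running kubectl get node shows the node as NotReady

Use crictl to view the images in use

Commands for joining additional nodes (only one node is used this time, so they are not run)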
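The join commands printed by kubeadm init, reproduced just below, embed a bootstrap token that expires after 24 hours by default. If nodes are added later, a fresh command can be generated with kubeadm token create, and the CA hash can be recomputed from the cluster CA certificate. The following sketch wraps the openssl pipeline for the hash; ca_cert_hash is an illustrative name, and the pipeline assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Print the SHA-256 hash of the public key in a CA certificate,
# in the form expected after "sha256:" in --discovery-token-ca-cert-hash.
# $1 = path to the CA certificate (on a kubeadm control plane: /etc/kubernetes/pki/ca.crt).
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Example usage (run as root on the control-plane node):
#   kubeadm token create --print-join-command
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```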

# You can now join any number of control-plane nodes by copying certificate authorities

# and service account keys on each node and then running the following as root:

kubeadm join k8s-master:6443 --token ttsz42.fwrlnl6iz65nlija \

--discovery-token-ca-cert-hash sha256:8456bbfde0182fd384b18cc815645345579e0e91ebd2d6415c4180466c6ecb3d \

--control-plane

# Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-master:6443 --token ttsz42.fwrlnl6iz65nlija \

--discovery-token-ca-cert-hash sha256:8456bbfde0182fd384b18cc815645345579e0e91ebd2d6415c4180466c6ecb3d

At this point the cluster is still not ready. Why? Because the pod network has not been set up yet.

2.4安装calico

Calico是 目前开源的最成熟的纯三层网络框架之一, 是一种广泛采用、久经考验的开源网络和网络安全解决方案,适用于 Kubernetes、虚拟机和裸机工作负载。 Calico 为云原生应用提供两大服务:工作负载之间的网络连接和工作负载之间的网络安全策略。

# calico官网地址

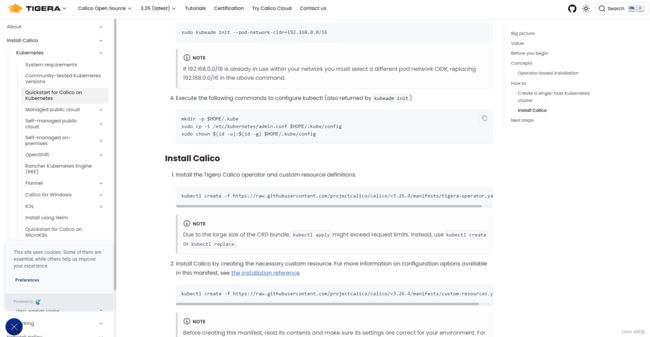

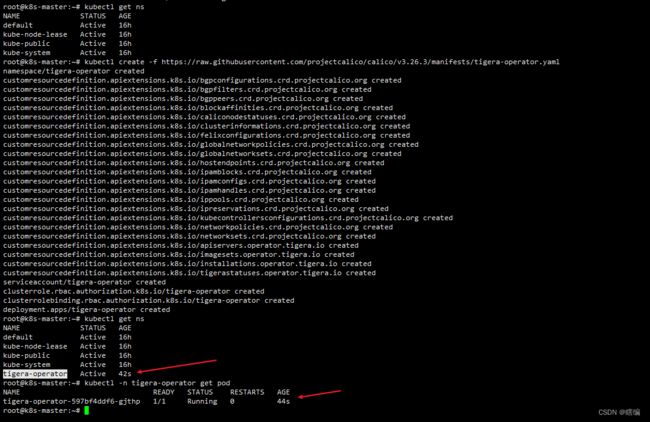

https://docs.tigera.io/calico/latest/about安装 Tigera Calico operator

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.3/manifests/tigera-operator.yaml

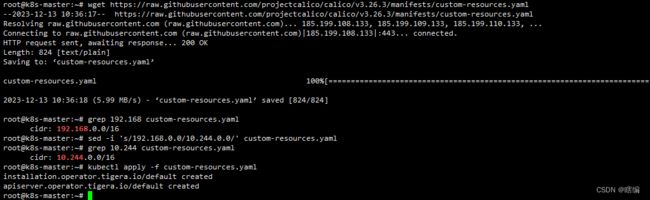

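Install Calico by creating the necessary custom resources

# Download

wget https://raw.githubusercontent.com/projectcalico/calico/v3.26.3/manifests/custom-resources.yaml

# Calico uses 192.168.0.0/16 as the default pod network range; the cluster was initialized with 10.244.0.0/16, so it must be changed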

sed -i 's/192.168.0.0/10.244.0.0/' custom-resources.yaml
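The sed command above treats each dot as "any character" and replaces the first match on every line, which works for this file but is looser than necessary. A slightly stricter variant that escapes the dots and only touches the cidr field is sketched below; set_calico_cidr is an illustrative name, and the pattern assumes the manifest contains a line of the form "cidr: 192.168.0.0/16".

```shell
# Replace the default pod CIDR in a Calico custom-resources.yaml.
# $1 = file, $2 = new CIDR. Dots are escaped so they match literally.
set_calico_cidr() {
  sed -i -E "s#(cidr:[[:space:]]*)192\.168\.0\.0/16#\1$2#" "$1"
}

# Example usage: apply the change, then confirm it before installing.
#   set_calico_cidr custom-resources.yaml 10.244.0.0/16
#   grep -n 'cidr:' custom-resources.yaml
```

The CIDR shown by grep should match the --pod-network-cidr value given to kubeadm init.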

# 安装

kubectl create -f custom-resources.yaml启动成功

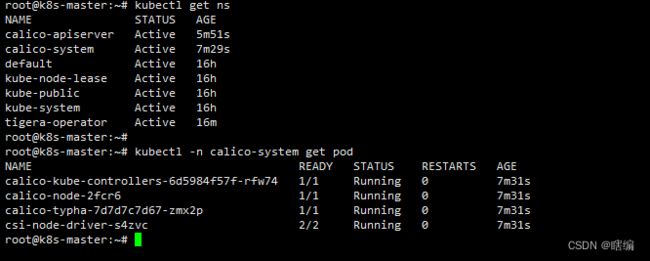

查看集群状态

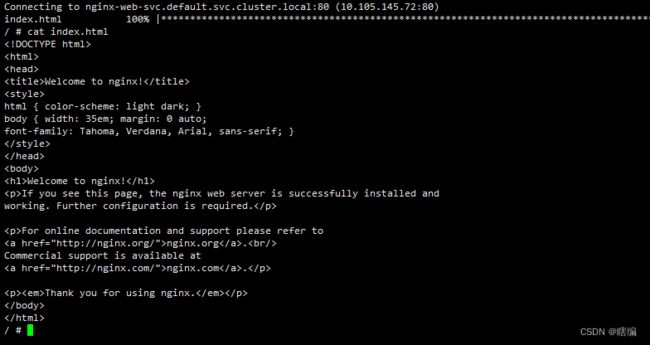

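2.5 Test whether the network works

Because this is a single-node cluster, the control-plane taint has to be removed before pods can be scheduled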

kubectl taint nodes k8s-master node-role.kubernetes.io/control-plane:NoSchedule-

Deploy two pods for testing

# Deploy nginx

kubectl create deployment nginx-app --image=nginx

# Create a NodePort Service for it

kubectl expose deployment nginx-app --name=nginx-web-svc --type NodePort --port 80 --target-port 80

# Deploy busybox

kubectl create deployment bs --image=busybox:1.28.2 -- sleep 3600

# Open a shell in the bs pod to check (the generated pod name will differ in your cluster)

kubectl exec -it bs-55fdf9474-gssxv -- sh
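The pod name in the command above is generated, so it has to be looked up on each cluster. Since kubectl create deployment labels its pods with app=<deployment name>, the lookup can be scripted. This is a small sketch; first_pod is an illustrative helper name.

```shell
# Print the name of the first pod carrying the label app=<name>.
# $1 = deployment name as passed to "kubectl create deployment".
first_pod() {
  kubectl get pods -l "app=$1" -o jsonpath='{.items[0].metadata.name}'
}

# Example usage: open a shell in the busybox pod, or fetch the nginx
# welcome page through the Service created above.
#   kubectl exec -it "$(first_pod bs)" -- sh
#   kubectl exec "$(first_pod bs)" -- wget -qO- http://nginx-web-svc
```

If the wget call returns the nginx welcome page, both pod networking and cluster DNS are working.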

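2.6 Install ingress-nginx

GitHub: https://github.com/kubernetes/ingress-nginx

Check version compatibility before installing (this guide uses Kubernetes 1.28.2 with ingress-nginx 1.9.4)

# Download the release archive (-O sets the file name that the tar command below expects)

wget -O ingress-nginx-controller-v1.9.4.tar.gz https://github.com/kubernetes/ingress-nginx/archive/refs/tags/controller-v1.9.4.tar.gz

# Extract it

tar xf ingress-nginx-controller-v1.9.4.tar.gz

cd ingress-nginx-controller-v1.9.4/deploy/static/provider/baremetal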

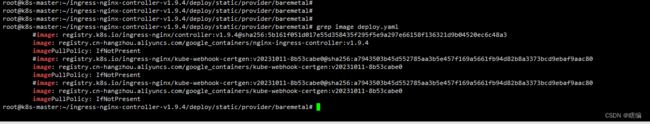

# In deploy.yaml, change the image sources to a mirror in mainland China; make sure the versions match

#image: registry.k8s.io/ingress-nginx/controller:v1.9.4@sha256:5b161f051d017e55d358435f295f5e9a297e66158f136321d9b04520ec6c48a3

>>

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.9.4

#image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0@sha256:a7943503b45d552785aa3b5e457f169a5661fb94d82b8a3373bcd9ebaf9aac80

>>

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20231011-8b53cabe0

#image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0@sha256:a7943503b45d552785aa3b5e457f169a5661fb94d82b8a3373bcd9ebaf9aac80

>>

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20231011-8b53cabe0
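The three image substitutions listed above can be applied in one pass with sed instead of editing deploy.yaml by hand. The sketch below assumes the mirror paths shown in this guide; use_mirror_images is an illustrative name, and the optional @sha256 digest is dropped because the digest of a mirrored image is not guaranteed to match the upstream one.

```shell
# Rewrite the ingress-nginx images in a manifest to the Aliyun mirror
# used in this guide, dropping the @sha256 digest suffix.
# $1 = path to deploy.yaml.
use_mirror_images() {
  um_mirror="registry.cn-hangzhou.aliyuncs.com/google_containers"
  sed -i -E \
    -e "s#registry\.k8s\.io/ingress-nginx/controller:([^@[:space:]]+)(@sha256:[0-9a-f]+)?#${um_mirror}/nginx-ingress-controller:\1#" \
    -e "s#registry\.k8s\.io/ingress-nginx/kube-webhook-certgen:([^@[:space:]]+)(@sha256:[0-9a-f]+)?#${um_mirror}/kube-webhook-certgen:\1#" \
    "$1"
}

# Example usage (inside deploy/static/provider/baremetal):
#   use_mirror_images deploy.yaml
#   grep -n 'image:' deploy.yaml
```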

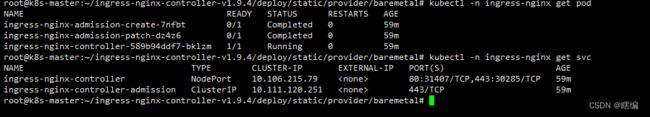

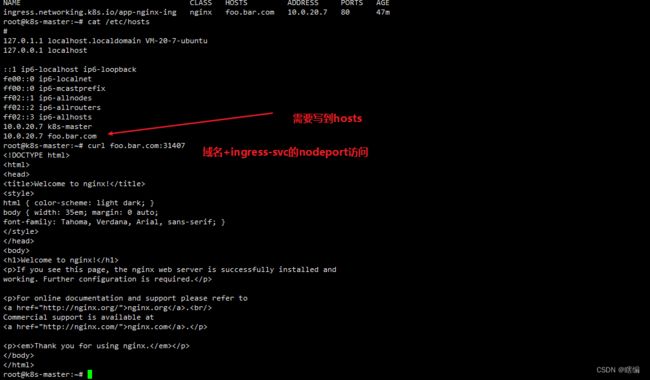

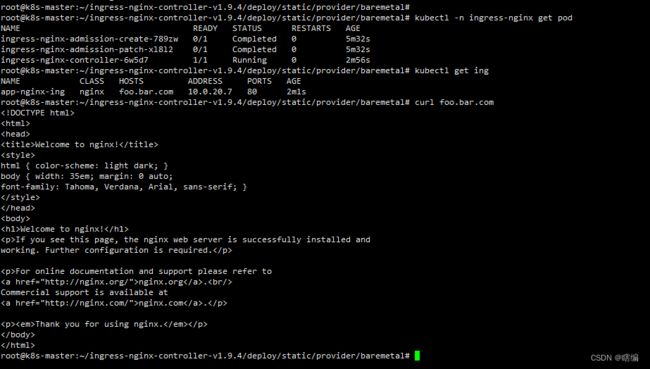

完成部署后,测试网络是否正常

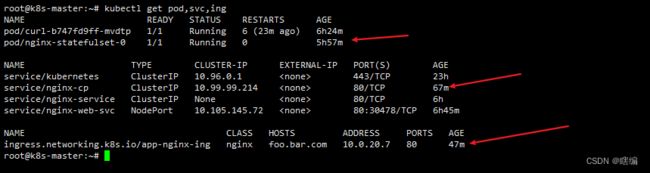

创建nginx-pod,svc,ingreess

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-statefulset
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-app
  name: nginx-cp
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-nginx-ing
  #annotations:
  #  kubernetes.io/ingress.class: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: "foo.bar.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            #name: nginx-web-svc
            name: nginx-cp
            port:
              number: 80

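With this setup the service can only be reached by domain name plus NodePort, which is inconvenient, and with multiple nodes the node IP behind the domain is not fixed either. So ingress-nginx is deployed in a different way here.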
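To make the "domain plus port" requirement concrete: with the bare-metal manifest the controller sits behind a NodePort Service, so a request has to target a node IP on that port and carry the Ingress host name in the Host header. The following is a sketch only; probe_ingress is an illustrative name, and the NodePort has to be looked up on the cluster because it is assigned at deploy time.

```shell
# Send a request through the ingress controller and print the HTTP status code.
# $1 = host name from the Ingress rule, $2 = node IP, $3 = controller NodePort.
probe_ingress() {
  curl -s -o /dev/null -w '%{http_code}\n' -H "Host: $1" "http://$2:$3/"
}

# Example usage: look up the HTTP NodePort of the controller Service, then probe.
#   kubectl -n ingress-nginx get svc ingress-nginx-controller
#   probe_ingress foo.bar.com 10.0.20.7 <nodeport>
```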

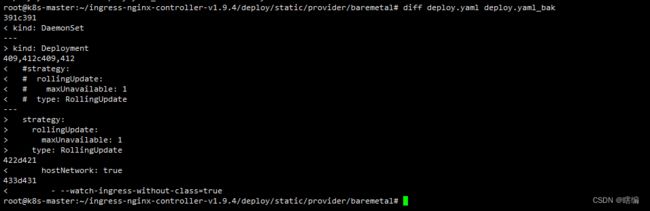

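# Modify ingress-nginx-controller-v1.9.4/deploy/static/provider/baremetal/deploy.yaml

Change kind: Deployment to kind: DaemonSet so that every node runs an

ingress-nginx-controller pod.

Set hostNetwork: true. By default ingress-nginx is exposed on a random NodePort;

with hostNetwork enabled it listens on ports 80 and 443 of the host.

If you do not care about ingressClass, or have many Ingress objects without an ingressClass,

add the argument --watch-ingress-without-class=true

First delete the resources created from the previous deploy.yaml, then apply the modified file.

Done.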