Hive实战 —— 电商数据分析(全流程详解 真实数据)

目录

- 前言

- 需求概述

- 数据清洗

- 数据分析

- 一、前期准备

- 二、项目

-

-

- 1. 数据准备和了解

- 2.确定数据粒度和有效列

- 3.HDFS创建用于上传数据的目录

- 4.建库

-

-

-

- 数仓分层

-

-

- 5.建表

-

- 5.1近源层建表

- 5.2. 明细层建表

-

-

- 为什么要构建时间维度表?

- 如何构建时间维度表?

-

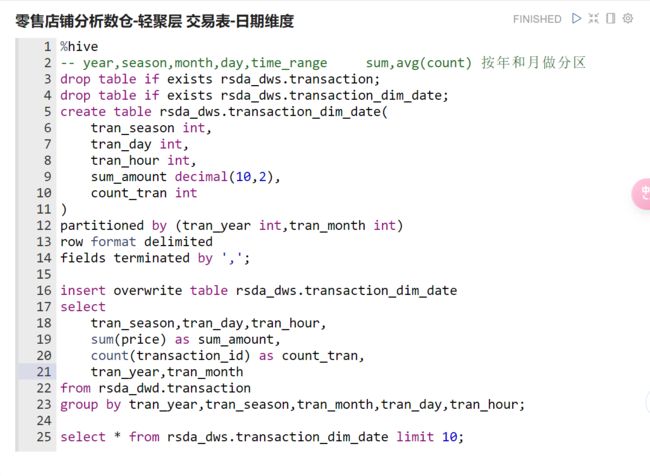

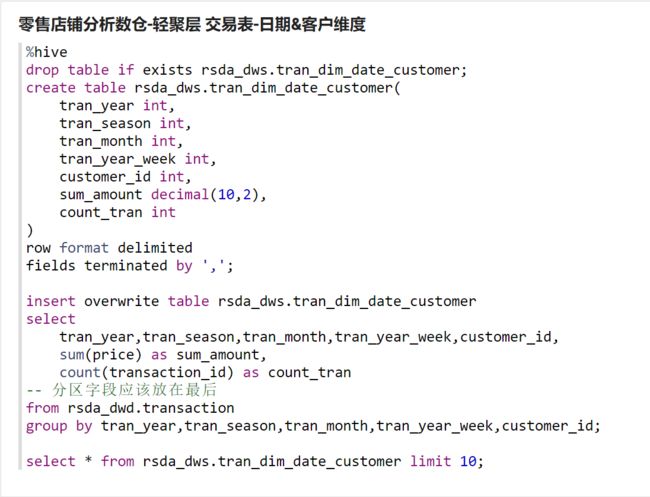

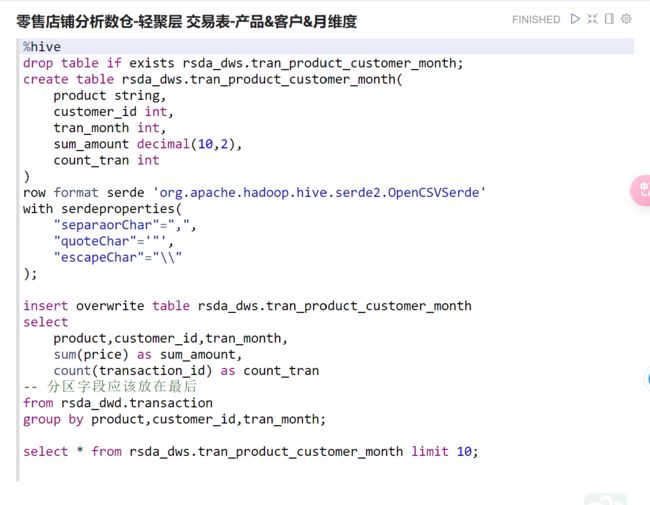

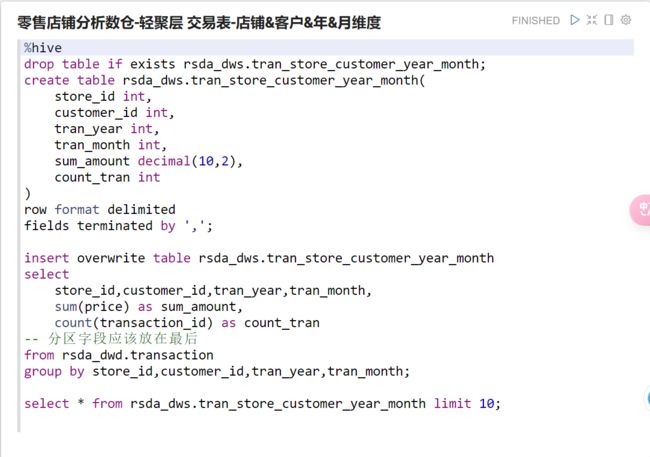

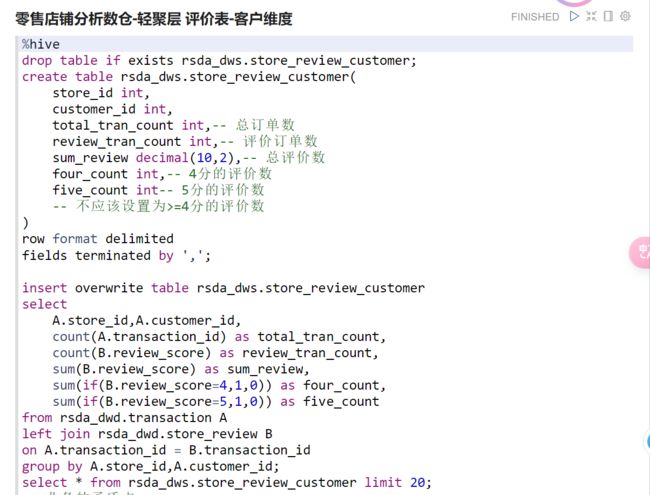

- 5.3 轻聚层建表

- 6. 指标数据分析

-

-

- 7.1 计算每月总收入

- 7.2 计算每个季度的总收入

- 7.3 按年计算总收入

- 7.4 按工作日计算总收入

- 7.5 按时间段计算总收入

- 7.6 按时间段计算平均消费

- 7.7 按工作日计算平均消费

- 7.8 计算年、月、日的交易总数

- 7.9 找出交易量最大的10个客户

- 7.10找出消费最多的前10位顾客

- 7.11 统计该期间交易数量最少的用户

- 7.12 计算每个季度的独立客户总数

- 7.13 计算每周的独立客户总数

- 7.14 计算整个活动客户平均花费的最大值

- 7.15 统计每月花费最多的客户

- 7.16 统计每月访问次数最多的客户

- 7.17 按总价找出最受欢迎的5种产品

- 7.18 根据购买频率找出最畅销的5种产品

- 7.19 根据客户数量找出最受欢迎的5种产品

- 8.1 按客流量找出最受欢迎的商店

- 8.2 根据顾客消费价格找出最受欢迎的商店

- 8.3 根据顾客交易情况找出最受欢迎的商店

- 8.4 根据商店和唯一的顾客id获取最受欢迎的产品

- 8.5缺失指标,不写

- 8.6 按年和月计算每家店的收入

- 8.7 按店铺制作总收益饼图

- 8.8 找出每个商店最繁忙的时间段(3小时)

- 8.9 找出每家店的忠实顾客

- 9.1 在ext_store_review中找出存在冲突的交易映射关系

- 9.2 了解客户评价的覆盖率

- 9.3 根据评分了解客户的分布情况(均分)

- 9.4 根据交易了解客户的分布情况

- 9.5 客户给出的最佳评价是否总是同一家门店

-

-

前言

该实战项目的目的在于通过基于小型数据的Hive数仓构建进行的业务分析来做到以小见大,熟悉实际生产情况下构建Hive数仓解决实际问题的场景。本文内容较多,包含了从前期准备到数据分析的方案,代码,问题,解决方法等等,分析的数据文件 和 Zeppelin中的源文件 都已放在文章顶部,请先行下载,并配置好Zeppelin Hive相关环境后再进行阅读。相信认真读完并参与你一定会有收获!

需求概述

- 对某零售企业最近1年门店收集的数据进行数据分析

- 潜在客户画像

- 用户消费统计

- 门店的资源利用率

- 消费的特征人群定位

- 数据的可视化展现

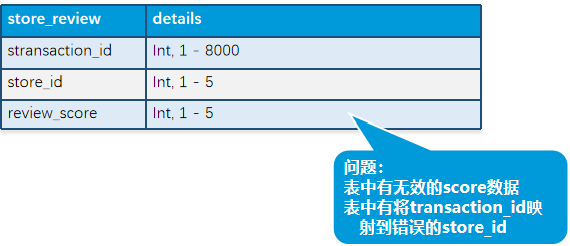

- Customer表

- Transaction表

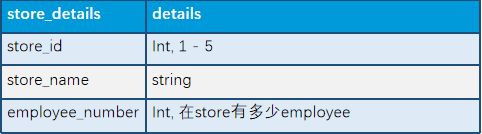

- Store表

- Review表

- 表间关系

数据清洗

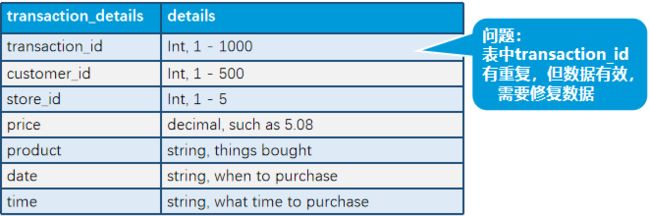

- 对transaction_details中的重复数据生成新ID

- 过滤掉store_review中没有评分的数据(保留)

- 可以把清洗好的数据放到另一个表或者用View表示

- 找出PII (personal information identification) 或PCI (personal confidential information) 数据进行加密或hash

- 重新组织transaction数据按照日期YYYY-MM做分区

数据分析

- Customer分析

- 6.1找出顾客最常用的信用卡

- 6.2找出客户资料中排名前五的职位名称

- 6.3在美国女性最常用的信用卡

- 6.4按性别和国家进行客户统计

- Transaction分析-1

- 7.1计算每月总收入

- 7.2计算每个季度的总收入

- 7.3按年计算总收入

- 7.4按工作日计算总收入

- 7.5按时间段计算总收入(需要清理数据)

- 7.6按时间段计算平均消费

- 7.7按工作日计算平均消费

- 7.8计算年、月、日的交易总数

- 7.9找出交易量最大的10个客户

- 7.10找出消费最多的前10位顾客

- Transaction分析-2

- 7.11统计该期间交易数量最少的用户

- 7.12计算每个季度的独立客户总数

- 7.13计算每周的独立客户总数

- 7.14计算整个活动客户平均花费的最大值

- 7.15统计每月花费最多的客户

- 7.16统计每月访问次数最多的客户

- 7.17按总价找出最受欢迎的5种产品

- 7.18根据购买频率找出最畅销的5种产品

- 7.19根据客户数量找出最受欢迎的5种产品

- Store分析

- 8.1按客流量找出最受欢迎的商店

- 8.2根据顾客消费价格找出最受欢迎的商店

- 8.3根据顾客交易情况找出最受欢迎的商店

- 8.4根据商店和唯一的顾客id获取最受欢迎的产品

- 8.5获取每个商店的员工与顾客比

- 8.6按年和月计算每家店的收入

- 8.7按店铺制作总收益饼图

- 8.8找出每个商店最繁忙的时间段(3小时)

- 8.9找出每家店的忠实顾客

- Review分析

- 9.1在ext_store_review中找出存在冲突的交易映射关系

transaction_id at store_id in transaction_details

transaction_id at store_id in store_review - 9.2了解客户评价的覆盖率

- 9.3根据评分了解客户的分布情况(均分)

- 9.4根据交易了解客户的分布情况

- 9.5客户给出的最佳评价是否总是同一家门店

- 9.1在ext_store_review中找出存在冲突的交易映射关系

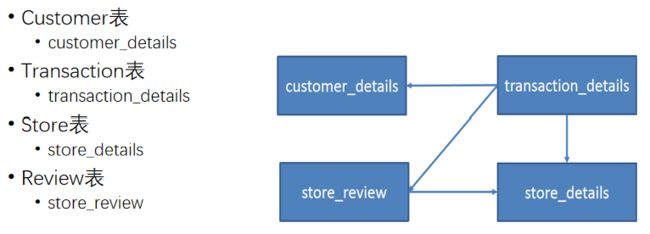

一、前期准备

打开Zeppelin,Zeppelin相关安装与配置详见Zeppelin安装教程。

创建notebook:

此处需要自定义一个Hive编辑器,教程如下:

#web页面配置hive翻译器

# 右上角anonymous => interpreter => 右上角 create =>

Interpreter Name

hive

Interpreter group

jdbc

#=> 设置properties

default.driver org.apache.hive.jdbc.HiveDriver

default.url jdbc:hive2://single:10000

default.user root

#=> 左下角 save

#web界面 create note

# 以%hive开启作为第一行

使用Zeppelin的一些基础知识

1.set hive.server2.logging.operation.level=NONE;// 设置日志文件不输出

2.在paragraph首行都应该有一个类似`%hive`的语法(表示该paragraph使用了说明hive编译器)

3.对于数据量较小的情况,Zeppelin会不生成一些图像(显示为Data Avaliable),此时需要先在settings中配置后才会生成图像

二、项目

每部分都需要在Notebook下创建一个新的paragraph并重命名。

(注释的语句是需要执行的)

1. 数据准备和了解

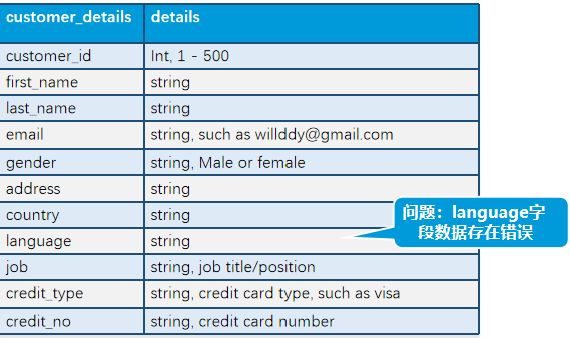

下载绑定的电商数据资源,将其上传到虚拟机上。查看资源下的文件,整理其字段信息和行数待用,并且检查各份数据可能存在的问题,比如文件乱码,评分数据空缺等。

小Tips:先行备好各表的字段信息有助于轻松构建ODS层的外部表,备好行数有助于在建表之后快速检查是否建表成功。

2.确定数据粒度和有效列

- 数据表对于指标的有效列 DWD

- Customer:credit_type,job,gender,country

- Review&Store:store_id,review_score

- Transaction:transaction_id,customer_id,store_id,price,product

- 数据聚合维度和基于该维度的最小粒度 DWT

每一行都作为一张表,行内容包括字段和聚合函数,字段即为聚合的分组字段。字段的排列需要遵循一定顺序,如果题目有确定的要求,例如按性别和国家进行客户统计,则分组字段的排序必须为gender,country。如果题目没有确定要求,通常粒度大的在前( 聚合粒度更大的字段能减少更多的数据量 ),有时如果为了保证出指标,我们可能也需要将粒度不够大的字段排前面。

如何判断多个指标是否属于同一张表?

如果某指标有新增维度,那么说明该指标是前一个指标范围的结束。例如:7.9指标新增了一个客户维度,因此7.1~7.8属于同一张表。- Transaction表

- 指标7.1~7.8:year,season,month,day,time_range

sum(price),count(transaction_id) - 指标7.9~7.16:season,month,week,customer_id

sum,avg(count) - 指标7.17~7.20:

product,customer_id,monthsum,avg(count) - 指标8.1~8.9:

store_id,customer_id,year,monthsum,count(distinct)

store_id,product,customer_idcount(distinct)

- 指标7.1~7.8:year,season,month,day,time_range

- Review表

- 指标9.1~9.5:

customer_idcount(transaction_id),count(review_score),sum(price)

需要与交易表聚合

- 指标9.1~9.5:

- Transaction表

3.HDFS创建用于上传数据的目录

4.建库

数仓分层

ODS 近源层(`外部表`)

ODS层的表通常包括两类,一个用于存储当前需要加载的数据,一个用于存储处理完后的历史数据。(历史数据一般保存3-6个月后需要清除)

数据经过ETL装入本层,接近源数据

DWD 明细层(`内部表`)

表合并(列),行不变

ODS层要尽可能地合并,去除无用字段,扩展维度入DWD层

时间维度表

订单表 => pro_dwd.order_detail_by_date

订单详情表

省份表

城市表

店铺表 => pro_dwd.order_detail_by_add_shop

订单表

订单详情表

会采用`维度退化`手法:当一个维度没有数据仓库需要的任何数据时,就可以退化维度,将维度退化至事实表中,减少事实表和维表的关联。

DWT 轻聚层

1.实现聚合操作

聚合操作包括对多个指标(如销售额、用户数等)在多个维度(如时间、地区、产品类别等)上进行统计汇总。

2.提取聚合需求共性

DWS 汇总层

接近指标对应的结果数据,尽量提取出来就能用

大多都是按照主题划分的涵盖多个字段的宽表

DM 数据集市

涉及权限管理和权限申请,不同人看到不同权限的数据,也可以申请查看某份数据的权限。

5.建表

- 建表过程中,由于数仓层之间的

数据传递性,建表时,下一层可以直接对上一层的建表代码进行改动;下一层数据传入时的表数据字段也来源于上一层的基础上。 - 每建一张表,都需要养成

检查数据的习惯,通过select * from TABLE_NAME limit N对数据进行检查。 - 如何理解近源层中的表为外部表,明细层中的表为内部表?

- 外部表的数据实际存储在外部系统(如HDFS)上,内部表的数据存储是由Hive管理的。

这是因为ODS层存储的原始数据还可能被其他应用所使用,而DWD层存储的经过转换、清洗和集成的数据专属于Hive。 - 近源层的表通常存储原始数据,直接读取原始数据文件即可,适合外部表。

明细层的表结构会经过更多加工和转换,可以创造不同的表结构来满足需求,再筛选有用数据导入,适合内部表。

- 外部表的数据实际存储在外部系统(如HDFS)上,内部表的数据存储是由Hive管理的。

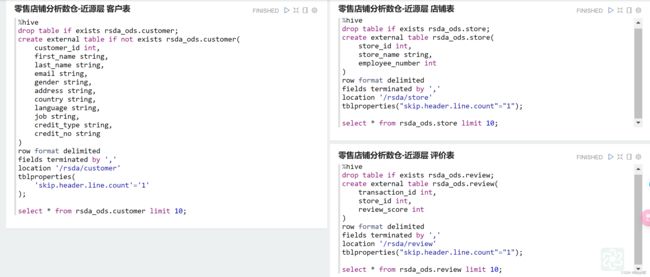

5.1近源层建表

近源层的表都为外部表,结合前期的数据准备和数据文件上传目录的创建创建近源层的表。

5.2. 明细层建表

- 明细层涉及

数据清洗(列裁剪,行压缩) - 明细层必要时构建

时间维度表 - 明细层需要尽可能地对

不必要的维度进行维度合并- 上层的表就已经够用

- 明细层表字段的几种情况:

- 黑色框选部分为

加密,对客户表需要对用户的关键信息(包括名,邮箱,住址,信用卡号)进行脱敏操作,根据信息的敏感度选取不同的脱敏方式和加密函数。例如:对邮箱,即对@前半部分的邮箱号码进行md5加密,后半部分保持原样并进行拼接。(代码只是将每种加密方式都尝试了一次,实际上更敏感的信息要用非对称加密) - 红色框选部分为

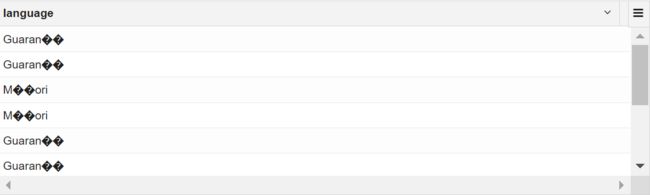

问题解决,我们需要对language的乱码问题进行解决,先筛选出存在乱码的language,并搜索到这两种语言的正确格式,进行替换。

# 筛选出language列存在乱码的列

select language from rsda_ods.customer where language rlike '.*[^a-zA-Z ].*';

-

新增

original字段来表示review_score是否非空。

通常为了保证数据的整体正态分布不受影响,避免可能产生的数据倾斜,我们会选择在数据清洗阶段将缺失数据替换为平均值。 -

由于平均分的结果通常是小数,如果对int类型数求平均数会导致精度缺失从而导致没有实现正态分布的目标,因此此处修改

review_score的数据类型为decimal(3,2) -

如何解决store_id的映射错误?

交易表中的数据才是正确的。

关联transaction_id,并且判断store_id的映射是否相同,如果相同,取谁都行;如果不相同,只能取交易表中的标准的store_id。 -

明细层不需要出现店铺表的原因?

近源层的店铺表就已经很适合,无需再多加列裁剪或行筛选,并且没有数据清洗任务,因此不需要在明细层中出现。

总结:不是所有表都必须出现在数仓的每一层,真正决定表是否在这一层的是数据的粒度。

- 交易表是一张

分区表(注意:要有动态分区配置)。分区字段不需要出现在表中,因此tran_year,tran_month都不需要在建表的时候出现。 - 这里选择

OpenCSVSerde是因为product列中存在形如"Soup - Campbells, Minestrone"的数据,如果直接用row format delimited fields terminated by ','会导致误解析列中多出来的,,因此需要选用OpenCSVSerde。 - 在面对复杂查询时,可以适当灵活地用一些优化配置来提升查询速度。详见我的博客Hive优化总结。

tran_date和tran_time可以合并为一个完整的时间维度- 我们需要解决交易表中数据清洗的问题:

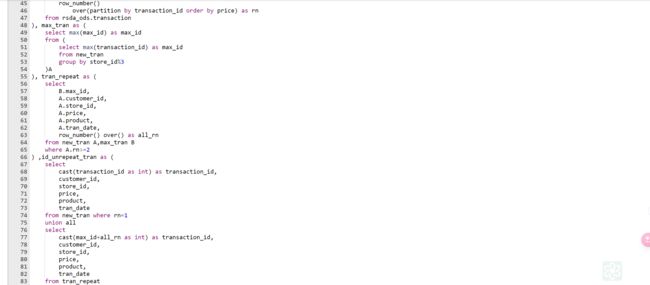

给transaction_id重复的数据一个新的,未重复的transaction_id。解决思路如下:我们先以transaction_id分组对全局开一个row_number()的窗口,将rn>=2的数据(即重复数据)拿出来放在另一个表B,对表B再全局开一个row_number()的窗口,使每个重复数据都获得一个新行号all_rn,同时我们求出原始交易表中transaction_id的最大值max_id,将all_rn+max_id的值作为重复数据的新行号。 - max_tran表中求最大值的优化上文已提过,不再赘述。

为什么要构建时间维度表?

用交易表已有的时间维度分析,可能会有缺失的时间维度。为了保证时间维度的健全性,需要单独构建一张时间维度表。

如何构建时间维度表?

- 查找时间维度边界

在构建时间维度表之前,需要全局查找交易表中最大和最小的时间,以确定时间维度的边界。

注意:查找最值一般通过部分聚合-全局聚合进行优化。

set mapreduce.job.reduces = 3;

with temp_tran as(

select store_id,to_date(replace(tran_date,'/','-')) as tran_date

from rsda_ods.transaction

),max_date_by_score as(

select min(tran_date) as min_date,max(tran_date) as max_date

from temp_tran

group by store_id

)

select min(min_date) as min_date,max(max_date) as max_date

from max_date_by_score;

-----------------------------------------------------------------------------------------

min_date max_date

2018-01-01 2018-12-31

日期数据进行操作前,都需要先转化为标准日期格式:YYYY-MM-DD:

tran_date的原始格式为2018/1/31,需要先替换分隔符,再通过to_date()补上缺失的前置零

- 编写时间维度构建脚本

#!/bin/bash

startDate=$1

endDate=$2

startTime=`date -d "$startDate" +%s`

endTime=`date -d "$endDate +1 day" +%s`

while((startTime<endTime));do

year=`date -d "@$startTime" +%Y`

month=`date -d "@$startTime" +%m`

quarter=$[(10#$month-1)/3+1]

yearweek=`date -d "@$startTime" +%W`

day=`date -d "@$startTime" +%d`

hour=`date -d "@$startTime" +%H`

echo "$year,$quarter,$month,$yearweek,$day,$hour" >> dim_date.csv

startDate=`date -d "@$startTime" +"%F %T"`

startTime=`date -d "$startDate 1 hour" +%s`

done

- 调用脚本(传入时间维度的

开始边界和结束边界)

chmod u+x dim_date_create.sh

./dim_date_create.sh 2018-01-01 2018-12-31

- 将写入时间维度的文件传到HDFS的指定目录下,作为时间维度表的数据文件

hdfs dfs -put dim_date.csv /rsda/dim_date

5.3 轻聚层建表

- 进行不同维度的聚合,需要参照提前

第2部分的数据基本粒度和有效列进行轻聚层的建表

这张表涉及到分析9.5指标中的最佳评价如何定义的问题。

如果最佳评价指的是用户所给的最高评分,那么如果一个用户始终打出低分,那么也不符合最佳的定义,这就是业务的矛盾点,面对业务的矛盾点,我们通常需要与客户经理对接去定义出更加复杂的指标来解决这类问题。如题目中定义出了4分的评价数和5分的评价数的两个指标来作为最佳评价的指标(不设置为>=4分的评价数是对于没有5分评价的情况下便于拆分)。

同时我们也需要考虑到数据是否存在偶然性,即如果顾客的交易数、参评数、参评率、好评数、好评率太低是不能够作为指标去进行数据分析的。

6. 指标数据分析

7.1 计算每月总收入

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=3;

select tran_month,sum(sum_amount) as sum_amount

from rsda_dws.transaction_dim_date

group by tran_month;

7.2 计算每个季度的总收入

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select tran_season,sum(sum_amount) as sum_amount

from rsda_dws.transaction_dim_date

group by tran_season;

7.3 按年计算总收入

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

sum(sum_amount)

from(

select sum(sum_amount) as sum_amount

from rsda_dws.transaction_dim_date

group by tran_season

)A;

7.4 按工作日计算总收入

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

sum(sum_amount) as sum_amount

from(

select sum(sum_amount) as sum_amount

from rsda_dws.transaction_dim_date

where dayofweek(concat(tran_year,'-',tran_month,'-',tran_day))<6

group by tran_season

)A;

此处不能用concat_ws的原因是concat_ws的参数必须是string or array,int类型数不能作为concat_ws的参数。

7.5 按时间段计算总收入

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

floor((tran_hour/3)+1) as time_range,

sum(sum_amount) as sum_amount

from rsda_dws.transaction_dim_date

group by floor((tran_hour/3)+1);

此处以3个小时为一个时段

7.6 按时间段计算平均消费

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

floor((tran_hour/3)+1) as time_range,

cast(sum(sum_amount)/sum(count_tran) as decimal(10,2)) as avg_amount

from rsda_dws.transaction_dim_date

group by floor((tran_hour/3)+1);

7.7 按工作日计算平均消费

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

cast(sum(sum_amount)/sum(count_tran) as decimal(10,2)) as avg_amount

from(

select sum(sum_amount) as sum_amount,sum(count_tran) as count_tran

from rsda_dws.transaction_dim_date

where dayofweek(concat(tran_year,'-',tran_month,'-',tran_day))<6

group by tran_season

)A;

7.8 计算年、月、日的交易总数

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

select

tran_year,tran_month,tran_day,

sum(count_tran) as tran_count

from rsda_dws.transaction_dim_date

group by tran_year,tran_month,tran_day;

7.9 找出交易量最大的10个客户

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

with customer_count_tran as(

select customer_id,sum(count_tran) as count_tran

from rsda_dws.tran_dim_date_customer

group by customer_id

),customer_count_tran_rank as(

select customer_id,count_tran,dense_rank() over(order by count_tran desc) as rnk

from customer_count_tran

)

select customer_id,count_tran,rnk

from customer_count_tran_rank

where rnk<=10;

7.10找出消费最多的前10位顾客

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

with customer_sum_amount as(

select customer_id,sum(sum_amount) as count_tran

from rsda_dws.tran_dim_date_customer

group by customer_id

),customer_sum_amount_rank as(

select customer_id,sum_amount,dense_rank() over(order by sum_amount desc) as rnk

from customer_sum_amount

)

select customer_id,sum_amount,rnk

from customer_sum_amount_rank

where rnk<=10;

7.11 统计该期间交易数量最少的用户

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

with customer_sum_amount as(

select customer_id,sum(sum_amount) as sum_amount

from rsda_dws.tran_dim_date_customer

group by customer_id

)

select customer_id,sum_amount

from customer_sum_amount

where sum_amount = (

select min(sum_amount) as sum_amount

from customer_sum_amount

);

7.12 计算每个季度的独立客户总数

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

SELECT tran_year, tran_season, COUNT(*) AS unique_customers

FROM (

SELECT tran_year, tran_season, customer_id

FROM rsda_dws.tran_dim_date_customer

GROUP BY tran_year, tran_season, customer_id

) AS temp

GROUP BY tran_year, tran_season

ORDER BY tran_year, tran_season;

7.13 计算每周的独立客户总数

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

SELECT tran_year_week, COUNT(*) AS unique_customers

FROM (

SELECT tran_year_week, customer_id

FROM rsda_dws.tran_dim_date_customer

GROUP BY tran_year_week, customer_id

) AS temp

GROUP BY tran_year_week

ORDER BY tran_year_week;

7.14 计算整个活动客户平均花费的最大值

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

with customer_sum_amount_count_tran as(

select customer_id,sum(sum_amount) as sum_amount,sum(count_tran) as count_tran

from rsda_dws.tran_dim_date_customer

group by customer_id

),customer_avg_amount as(

select customer_id,cast(sum_amount/count_tran as decimal(10,2)) as avg_amount

from customer_sum_amount_count_tran

group by customer_id

)

select max(avg_amount) as max_amount

from customer_avg_amount;

7.15 统计每月花费最多的客户

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

WITH MonthlySpending AS (

SELECT tran_month, customer_id, SUM(sum_amount) AS total_spending,

DENSE_RANK() OVER (PARTITION BY tran_month ORDER BY SUM(sum_amount) DESC) AS rank

FROM rsda_dws.tran_dim_date_customer

GROUP BY tran_month, customer_id

)

SELECT tran_month, customer_id, total_spending

FROM MonthlySpending

WHERE rank = 1;

7.16 统计每月访问次数最多的客户

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

WITH MonthlyVisits AS (

SELECT tran_month, customer_id, SUM(count_tran) AS total_visits,

DENSE_RANK() OVER (PARTITION BY tran_month ORDER BY SUM(count_tran) DESC) AS rank

FROM rsda_dws.tran_dim_date_customer

GROUP BY tran_month, customer_id

)

SELECT tran_month, customer_id, total_visits

FROM MonthlyVisits

WHERE rank = 1;

7.17 按总价找出最受欢迎的5种产品

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

SELECT product, SUM(sum_amount) AS total_sales_amount

FROM rsda_dws.tran_product_customer_month

GROUP BY product

ORDER BY total_sales_amount DESC

LIMIT 5;

7.18 根据购买频率找出最畅销的5种产品

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

SELECT product, SUM(count_tran) AS total_transactions

FROM rsda_dws.tran_product_customer_month

GROUP BY product

ORDER BY total_transactions DESC

LIMIT 5;

7.19 根据客户数量找出最受欢迎的5种产品

set hive.server2.logging.operation.level=NONE;

set mapreduce.job.reduces=4;

SELECT product,count(customer_id) AS customer_count

FROM rsda_dws.tran_product_customer_month

GROUP BY product

ORDER BY total_transactions DESC

LIMIT 5;

8.1 按客流量找出最受欢迎的商店

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

select store_id,count(customer_id) as customer_count

from rsda_dws.tran_store_customer_year_month

group by store_id

order by customer_count desc

limit 1;

8.2 根据顾客消费价格找出最受欢迎的商店

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT store_id, SUM(sum_amount) AS total_spending

FROM rsda_dws.tran_store_customer_year_month

GROUP BY store_id

ORDER BY total_spending DESC

LIMIT 1;

8.3 根据顾客交易情况找出最受欢迎的商店

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT store_id, SUM(count_tran) AS total_transactions

FROM rsda_dws.tran_store_customer_year_month

GROUP BY store_id

ORDER BY total_transactions DESC

LIMIT 1;

8.4 根据商店和唯一的顾客id获取最受欢迎的产品

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT store_id,customer_id, product, MAX(sum_amount) as max_spent

FROM (

SELECT store_id,

customer_id,

product,

SUM(sum_amount) as sum_amount

FROM rsda_dws.tran_store_product_customer

GROUP BY store_id, customer_id, product

) AS customer_product_sales

GROUP BY store_id, customer_id;

8.5缺失指标,不写

8.6 按年和月计算每家店的收入

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT store_id, tran_year, tran_month, SUM(sum_amount) AS monthly_revenue

FROM rsda_dws.tran_store_customer_year_month

GROUP BY store_id, tran_year, tran_month;

8.7 按店铺制作总收益饼图

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT store_id, SUM(sum_amount) AS total_revenue

FROM rsda_dws.tran_store_customer_year_month

GROUP BY store_id;

8.8 找出每个商店最繁忙的时间段(3小时)

缺失指标,不写。

8.9 找出每家店的忠实顾客

我将忠实顾客定义为在这一年至少访问商店10次的顾客。

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT customer_id, COUNT(*) AS months_visited

FROM rsda_dws.tran_store_customer_year_month

GROUP BY customer_id

HAVING months_visited >= 10;

9.1 在ext_store_review中找出存在冲突的交易映射关系

定义冲突的映射关系:一个交易ID在store_review中对应多个不同的store_id

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT

transaction_id

FROM

ext_store_review

GROUP BY

transaction_id

HAVING

COUNT(store_id) > 1;

9.2 了解客户评价的覆盖率

定义覆盖率:客户提交评价的交易数与总交易数的比例。

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT

store_id,

customer_id,

(review_tran_count / total_tran_count) * 100 AS review_coverage

FROM

rsda_dws.store_review_customer;

9.3 根据评分了解客户的分布情况(均分)

定义:通过评分的均分了解客户在各评分段的分布情况

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT

average_score,

COUNT(*) AS customer_count

FROM

(SELECT

customer_id,

(sum_review / review_tran_count) AS average_score

FROM

rsda_dws.store_review_customer

WHERE

review_tran_count > 0) AS customer_scores

GROUP BY

average_score;

9.4 根据交易了解客户的分布情况

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT

total_tran_count,

COUNT(*) AS customer_count

FROM

rsda_dws.store_review_customer

GROUP BY

total_tran_count;

9.5 客户给出的最佳评价是否总是同一家门店

题目转化:筛选出最佳评价不总是同一家门店的客户ID

SET hive.server2.logging.operation.level=NONE;

SET mapreduce.job.reduces=4;

SELECT

customer_id

FROM

rsda_dws.store_review_customer

WHERE

four_count > 0 OR five_count > 0

GROUP BY

customer_id, store_id

HAVING

COUNT(customer_id) > 1;