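Big Data Series: Deploying an ELK Cluster Environment

This article walks through deploying and configuring the ELK components, then uses the system syslog as source data to verify that Elasticsearch receives the data and that Kibana displays it.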

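1. Basic Concepts and Environment Overview

1.1 ELK basic concepts

ELK is an open-source platform for searching and analyzing large volumes of logs. It collects logs centrally and indexes them in real time, and provides real-time search, analysis, visualization, and alerting, so that an organization can manage its log data on one platform for live monitoring of production services, root-cause analysis, and statistical analysis. ELK consists of three open-source tools: Elasticsearch, Logstash, and Kibana. Official site: https://www.elastic.co/products

- Elasticsearch is a real-time full-text search and analytics engine that covers three functions: collecting, analyzing, and storing data. It is a scalable distributed system that exposes REST and Java APIs for efficient search, and it is built on top of the Apache Lucene search library

- Logstash is a tool for collecting, parsing, and filtering logs. It supports almost any type of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, including syslog, messaging systems (for example RabbitMQ), and JMX, and it can output data in many ways, including email, WebSockets, and Elasticsearch

- Kibana is a web-based graphical interface for searching, analyzing, and visualizing log data stored in Elasticsearch indices. It retrieves data through Elasticsearch's REST interface, and lets users both build custom dashboard views of their own data and query and filter that data in ad hoc ways

1.2 Base environment

The servers used are listed in the table below: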

| Role | Hostname | IP | OS |
|---|---|---|---|
| System syslog | tango-01 | 192.168.112.10 | Centos7-X86_64 |
| ES cluster | tango-centos01-03 | 192.168.112.101-103 | Centos7-X86_64 |
| Kibana client | tango-ubntu01 | 192.168.112.20 | Centos7-X86_64 |

2. Setting Up the Base Environment

2.1 Elasticsearch cluster environment

2.1.1 Configuring Elasticsearch

1) Extract the installation package

[root@tango-centos01 src-install]# tar -xzvf elasticsearch-6.2.0.tar.gz -C /usr/local/elk/

2) Edit the Elasticsearch configuration file

[root@tango-centos01 config]# vi elasticsearch.yml

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: es_cluster_01

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node01

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

node.master: true

node.data: true

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /usr/local/elk/es-data/data

#

# Path to log files:

#

path.logs: /usr/local/elk/es-data/logs

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 192.168.112.101

http.host: 0.0.0.0

#

# Set a custom port for HTTP:

#

http.port: 9200

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.zen.ping.unicast.hosts: ["192.168.112.101", "192.168.112.102","192.168.112.103"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

discovery.zen.minimum_master_nodes: 1
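The comment above the last setting gives the quorum rule: (number of master-eligible nodes / 2) + 1, with integer division. The sketch below only evaluates that formula; the function name `minimum_master_nodes` is made up for illustration and is not part of Elasticsearch. In this deployment only node01 is master-eligible (node02 and node03 are later configured with `node.master: false`), so the result is 1, which matches the value set above. If all three nodes were master-eligible, the setting would need to be 2.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Elasticsearch): evaluates the quorum
# formula quoted in elasticsearch.yml for a number of master-eligible nodes.
minimum_master_nodes() {
  local eligible=$1
  echo $(( eligible / 2 + 1 ))
}

minimum_master_nodes 1   # prints 1
minimum_master_nodes 3   # prints 2
```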

3) Create the es-data directories

[root@tango-centos01 elk]# mkdir es-data

[root@tango-centos01 elk]# cd es-data

[root@tango-centos01 es-data]# mkdir data

[root@tango-centos01 es-data]# mkdir logs

[root@tango-centos01 es-data]# ls

data logs

4) Configure system parameters

- Raise max virtual memory areas (vm.max_map_count); run sysctl -p afterwards to apply it

[root@tango-centos01 ~]# vi /etc/sysctl.conf

vm.max_map_count = 262144

- Raise the max file descriptors (nofile) and max number of threads (nproc) limits

[root@tango-centos01 ~]# vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 4096

* hard nproc 4096

- Override the nproc limit for the user that runs Elasticsearch (tango)

[root@tango-centos01 ~]# vi /etc/security/limits.d/20-nproc.conf

tango soft nproc unlimited

- Adjust the default JVM heap size

[root@tango-centos01 config]# vi jvm.options

-Xms512m

-Xmx512m
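Before starting Elasticsearch it can help to confirm that the kernel and user limits actually took effect. The following is a minimal sketch, assuming it is run as the user that starts Elasticsearch, after `sysctl -p` and a fresh login. The helper names `meets_minimum` and `report` are hypothetical; the thresholds are the values configured in this step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when ACTUAL satisfies the REQUIRED minimum.
# "unlimited" (as reported by ulimit) always satisfies the requirement.
meets_minimum() {
  local actual=${1:-0} required=$2
  [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ] 2>/dev/null
}

# Print OK or LOW for one named limit.
report() {
  local name=$1 actual=${2:-0} required=$3
  if meets_minimum "$actual" "$required"; then
    echo "OK   $name=$actual (need >= $required)"
  else
    echo "LOW  $name=$actual (need >= $required)"
  fi
}

# Thresholds are the values set in sysctl.conf and limits.conf in this step.
report vm.max_map_count "$(sysctl -n vm.max_map_count 2>/dev/null)" 262144
report nofile           "$(ulimit -n)"                              65536
report nproc            "$(ulimit -u)"                              4096
```

Any line that starts with LOW points at a setting that has not been applied for the current session.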

2.1.2 Starting Elasticsearch

1) Start with nohup and check the output log. Note: Elasticsearch cannot be started as root

[tango@tango-centos01 elasticsearch-6.2.0]$ nohup ./bin/elasticsearch &

[2018-05-11T17:30:32,777][INFO ][o.e.c.s.MasterService ] [node01] zen-disco-elected-as-master ([0] nodes joined), reason: new_master {node01}{Ed33-TuWRaO3KXZNEZcobg}{zfy-Ac2EQ4ymAbVjuR2Iog}{192.168.112.101}{192.168.112.101:9300}

[2018-05-11T17:30:32,785][INFO ][o.e.c.s.ClusterApplierService] [node01] new_master {node01}{Ed33-TuWRaO3KXZNEZcobg}{zfy-Ac2EQ4ymAbVjuR2Iog}{192.168.112.101}{192.168.112.101:9300}, reason: apply cluster state (from master [master {node01}{Ed33-TuWRaO3KXZNEZcobg}{zfy-Ac2EQ4ymAbVjuR2Iog}{192.168.112.101}{192.168.112.101:9300} committed version [1] source [zen-disco-elected-as-master ([0] nodes joined)]])

[2018-05-11T17:30:32,834][INFO ][o.e.g.GatewayService ] [node01] recovered [0] indices into cluster_state

[2018-05-11T17:30:32,857][INFO ][o.e.h.n.Netty4HttpServerTransport] [node01] publish_address {192.168.112.101:9200}, bound_addresses {[::]:9200}

[2018-05-11T17:30:32,858][INFO ][o.e.n.Node ] [node01] started

[tango@tango-centos01 elasticsearch-6.2.0]$

2) Check that the service is listening

[tango@tango-centos01 elasticsearch-6.2.0]$ netstat -nltp|grep -E "9200|9300"

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp6 0 0 :::9200 :::* LISTEN

tcp6 0 0 192.168.112.101:9300 :::* LISTEN

3) Query port 9200 to view the Elasticsearch node information

[tango@tango-centos01 elasticsearch-6.2.0]$ curl -X GET http://localhost:9200

{

"name" : "node01",

"cluster_name" : "es_cluster_01",

"cluster_uuid" : "PTvcSNeFSrCO14dpelxPxA",

"version" : {

"number" : "6.2.0",

"build_hash" : "37cdac1",

"build_date" : "2018-02-01T17:31:12.527918Z",

"build_snapshot" : false,

"lucene_version" : "7.2.1",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

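4) To stop Elasticsearch, find the process with ps -ef|grep elasticsearch and end it with kill <PID>, which lets the node shut down cleanly; fall back to kill -9 <PID> only if the process does not exit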

[root@tango-centos01 local]# ps -ef|grep elas
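The stop procedure can be wrapped in a small function that tries SIGTERM first and escalates only after a timeout. This is a sketch rather than an official Elasticsearch tool: the function name `stop_gracefully` and the 30-second default are assumptions, and the `pgrep` pattern in the usage comment assumes exactly one Elasticsearch JVM is running on the host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask a process to exit with SIGTERM, wait up to
# TIMEOUT seconds, and only then fall back to SIGKILL.
# Returns 0 if the process exited on its own, 1 if SIGKILL was needed.
stop_gracefully() {
  local pid=$1 timeout=${2:-30} waited=0
  kill "$pid" 2>/dev/null || return 0      # nothing to stop
  while kill -0 "$pid" 2>/dev/null; do     # still alive?
    if [ "$waited" -ge "$timeout" ]; then
      kill -9 "$pid" 2>/dev/null           # last resort
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  return 0
}

# Usage (assumption: a single Elasticsearch JVM on this host):
#   stop_gracefully "$(pgrep -f org.elasticsearch.bootstrap.Elasticsearch)"
```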

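2.1.3 Installing the Elasticsearch head plugin

head shows almost all cluster information, supports simple search queries, and lets you watch automatic recovery, among other things. Installing the elasticsearch-head plugin requires Node.js

1) Install the elasticsearch-head plugin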

git clone https://github.com/mobz/elasticsearch-head.git

cd elasticsearch-head

npm install --registry=https://registry.npmmirror.com

npm run start

2) Edit the elasticsearch-head configuration file Gruntfile.js

connect: {

server: {

options: {

port: 9100,

hostname:'192.168.112.101',

base: '.',

keepalive: true

}

}

}

3) Start elasticsearch-head

[root@tango-centos01 elasticsearch-head]# npm run start

> elasticsearch-head@0.0.0 start /usr/local/elk/elasticsearch-6.2.0/plugins/elasticsearch-head

> grunt server

>> Local Npm module "grunt-contrib-jasmine" not found. Is it installed?

(node:3892) ExperimentalWarning: The http2 module is an experimental API.

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://192.168.112.101:9100

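4) Problems that may appear after Elasticsearch restarts

The head plugin must not be placed under Elasticsearch's plugins or modules directory, and requests from elasticsearch-head to the Elasticsearch service are cross-origin. Move head out of those directories and allow cross-origin requests by adding the following settings to elasticsearch.yml (the wildcard origin is only suitable for a test environment):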

#allow origin

http.cors.enabled: true

http.cors.allow-origin: "*"

5) Disable the firewall

[root@tango-centos02 /]# firewall-cmd --state

running

[root@tango-centos02 /]# systemctl stop firewalld.service

[root@tango-centos02 /]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@tango-centos02 /]# firewall-cmd --state

not running

2.1.4 Configuring the other nodes

1) Copy node 1's directory to the other nodes

[root@tango-centos01 local]# scp -r elk 192.168.112.102:/usr/local/

[root@tango-centos01 local]# scp -r elk 192.168.112.103:/usr/local/

2) Edit the Elasticsearch configuration file on node 2; only the node-specific settings change, everything else stays the same

# Use a descriptive name for the node:

#

node.name: node02

node.master: false

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 192.168.112.102

3) Start Elasticsearch on node 2

[tango@tango-centos02 elasticsearch-6.2.0]$ nohup ./bin/elasticsearch &

4) Edit the Elasticsearch configuration file on node 3; only the node-specific settings change, everything else stays the same

# Use a descriptive name for the node:

#

node.name: node03

node.master: false

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 192.168.112.103

5) Start Elasticsearch on node 3

[tango@tango-centos03 elasticsearch-6.2.0]$ nohup ./bin/elasticsearch &

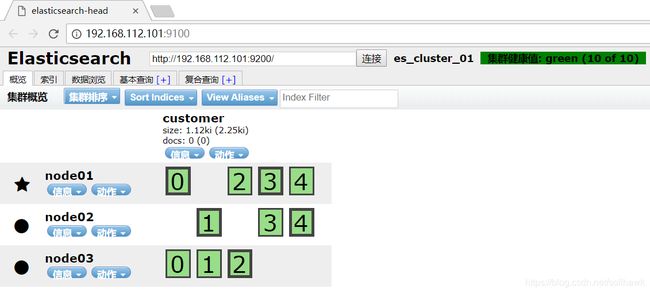
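The per-node edits for nodes 2 and 3 touch the same three keys, so they can be scripted. The sketch below is one possible way to do it with `sed`; the function name `render_node_config` and the backup file name in the usage comment are hypothetical, and it assumes each key appears once at the start of a line, as in the configuration shown earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the three per-node settings in an
# elasticsearch.yml read from stdin; all other lines pass through unchanged.
render_node_config() {
  local name=$1 ip=$2 master=$3
  sed -e "s/^node\.name:.*/node.name: ${name}/" \
      -e "s/^network\.host:.*/network.host: ${ip}/" \
      -e "s/^node\.master:.*/node.master: ${master}/"
}

# Usage on node 2 (paths follow the layout used in this article):
#   cd /usr/local/elk/elasticsearch-6.2.0/config
#   cp elasticsearch.yml elasticsearch.yml.node01
#   render_node_config node02 192.168.112.102 false \
#     < elasticsearch.yml.node01 > elasticsearch.yml
```

One caveat, stated as a likely pitfall rather than something observed in this walkthrough: if node 1 had already been started before the copy, the copied es-data/data directory carries node 1's node ID, so it is probably safest to empty that directory on the new node before its first start.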

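2.1.5 Checking cluster status

- Open the following URL to list the nodes in the cluster: http://192.168.112.101:9200/_cat/nodes?v

- Check the cluster status through the head plugin

- Create the index customer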

[tango@tango-centos01 elasticsearch-6.2.0]$ curl -XPUT 'localhost:9200/customer?pretty'

{

"acknowledged" : true,

"shards_acknowledged" : true,

"index" : "customer"

}

- Check the index status

[tango@tango-centos01 elasticsearch-6.2.0]$ curl 'localhost:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open customer d_tLB6IDSFaPXmqPdhLZow 5 1 0 0 2.2kb 1.1kb
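The node list can also be checked from a script instead of a browser. The following is a small sketch: `has_expected_nodes` is a hypothetical helper that counts node names read from stdin, and the usage line relies on the `h=name` parameter of `_cat/nodes`, which limits the output to the name column (without `v`, no header row is printed).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read node names (one per line) from stdin and
# succeed only if exactly EXPECTED non-empty lines were seen.
has_expected_nodes() {
  local expected=$1 actual
  actual=$(grep -c .)
  echo "nodes joined: ${actual}/${expected}"
  [ "$actual" -eq "$expected" ]
}

# Usage against the three-node cluster built above:
#   curl -s 'http://192.168.112.101:9200/_cat/nodes?h=name' | has_expected_nodes 3
```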

2.1.6 Common commands

a) Start Elasticsearch:

nohup ./bin/elasticsearch &

b) Check the Elasticsearch ports:

netstat -nltp|grep -E "9200|9300"

c) Check the Elasticsearch service:

curl -X GET http://localhost:9200

d) Open head:

http://192.168.112.101:9100

e) Create an index:

curl -XPUT 'localhost:9200/customer?pretty'

f) View index information:

curl 'http://localhost:9200/_cat/indices?v'

g) Delete an index:

curl -XDELETE 'localhost:9200/customer?pretty'

h) Show index statistics:

curl -XGET 'http://localhost:9200/_stats?pretty'

i) List the cluster nodes:

http://192.168.112.101:9200/_cat/nodes?v

j) Find the process ID:

ps -ef|grep elasticsearch

- Index a JSON document

[tango@tango-centos01 elasticsearch-6.2.0]$ curl -H "Content-Type: application/json" -XPUT 'localhost:9200/customer/external/1?pretty' -d '

{

"name": "John Doe"

}

'

{

"_index" : "customer",

"_type" : "external",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

- Retrieve the document with GET

[tango@tango-centos01 elasticsearch-6.2.0]$ curl -XGET 'localhost:9200/customer/external/1?pretty'

{

"_index" : "customer",

"_type" : "external",

"_id" : "1",

"_version" : 1,

"found" : true,

"_source" : {

"name" : "John Doe"

}

}

2.2 Logstash environment

2.2.1 Configuring Logstash

1) Install Logstash by extracting the package:

[root@tango-01 src]# tar -xzvf logstash-6.2.0.tar.gz -C /usr/local/elk

- Create the configuration files, with outputs to Kafka, Elasticsearch, and MongoDB respectively

[root@tango-01 config]# mkdir elk-syslog

[root@tango-01 config]# cd elk-syslog

[root@tango-01 elk-syslog]# pwd

/usr/local/elk/logstash-6.2.0/config/elk-syslog

- Logstash-es configuration file

# For the detailed structure of this file

# See: https://www.elastic.co/guide/en/logstash/current/configuration-file-structure.html

input {

file {

type => "system-message"

path => "/var/log/messages"

start_position => "beginning"

}

}

filter {

#Only matched data are sent to output.

}

output {

elasticsearch {

hosts=> [ "192.168.112.101:9200","192.168.112.102:9200","192.168.112.103:9200" ]

action => "index"

index => "syslog-tango01-%{+yyyyMMdd}"

}

}

- Logstash-kafka configuration file

# For the detailed structure of this file

# See: https://www.elastic.co/guide/en/logstash/current/configuration-file-structure.html

input {

file {

type => "system-message"

path => "/var/log/messages"

start_position => "beginning"

}

}

filter {

#Only matched data are sent to output.

}

output {

kafka {

bootstrap_servers => "192.168.112.101:9092,192.168.112.102:9092,192.168.112.103:9092"

topic_id => "system-messages-tango-01"

compression_type => "snappy"

}

}

- Logstash-mongo configuration file

# For the detailed structure of this file

# See: https://www.elastic.co/guide/en/logstash/current/configuration-file-structure.html

input {

file {

type => "system-message"

path => "/var/log/messages"

start_position => "beginning"

}

}

filter {

#Only matched data are sent to output.

}

output {

mongodb {

# Primary node of the MongoDB cluster

uri => "mongodb://192.168.112.101:27017"

database => "syslogdb"

collection => "syslog_tango_01"

}

}
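Before starting Logstash with the three configuration files above, each one can be syntax-checked with Logstash's `--config.test_and_exit` flag. The loop below is a sketch under a few assumptions: the files carry a `.conf` extension, `validate_configs` is a hypothetical helper name, and `LOGSTASH_BIN` is an assumed variable that defaults to the relative path used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run Logstash's config check on each *.conf file in DIR.
# LOGSTASH_BIN is an assumed variable; it defaults to ./bin/logstash.
# Returns non-zero if any file fails the check.
validate_configs() {
  local dir=$1 bin=${LOGSTASH_BIN:-./bin/logstash} conf failed=0
  for conf in "$dir"/*.conf; do
    [ -e "$conf" ] || continue             # directory had no *.conf files
    if "$bin" -f "$conf" --config.test_and_exit >/dev/null 2>&1; then
      echo "OK      $conf"
    else
      echo "FAILED  $conf"
      failed=1
    fi
  done
  return "$failed"
}

# Usage, from /usr/local/elk/logstash-6.2.0 while Logstash is not running:
#   validate_configs ./config/elk-syslog
```

Checking the files one at a time makes it easier to see which output's configuration is at fault.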

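2.2.2 Installing the logstash-output-mongodb plugin

Logstash does not ship with the logstash-output-mongodb plugin, so it has to be installed manually. Upload the prepared offline plugin pack to the target host and directory, then run: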

[root@tango-01 logstash-6.2.0]# bin/logstash-plugin install file:///usr/local/elk/logstash-6.2.0/logstash-offline-plugins-6.2.0.zip

Installing file: /usr/local/elk/logstash-6.2.0/logstash-offline-plugins-6.2.0.zip

Install successful

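2.2.3 Start and verify

1) Start Logstash. When -f points to a directory, Logstash loads every configuration file in it and merges them into a single pipeline, so each event is sent to all configured outputs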

[root@tango-01 logstash-6.2.0]# nohup ./bin/logstash -f ./config/elk-syslog/ &

To use the configuration file of a single output, for example Elasticsearch:

nohup ./bin/logstash -f ./config/elk-syslog/logstash-es.conf &

2) Check the output log

[2018-05-24T10:26:42,045][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.2.0"}

[2018-05-24T10:26:43,121][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2018-05-24T10:26:50,125][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-05-24T10:26:53,486][INFO ][logstash.pipeline ] Pipeline started succesfully {:pipeline_id=>"main", :thread=>"#"}

[2018-05-24T10:26:53,841][INFO ][logstash.agent ] Pipelines running {:count=>1, :pipelines=>["main"]}

3) Check the statistics for the indices that Logstash has written to Elasticsearch (run this on an Elasticsearch node)

curl -XGET 'http://localhost:9200/_stats?pretty'
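To look at just the syslog data instead of the statistics for every index, the daily index name can be derived from the pattern `syslog-tango01-%{+yyyyMMdd}` used in the Elasticsearch output. The sketch below builds that name for a given date; `syslog_index_for` is a hypothetical helper, and the usage line assumes the `_count` endpoint is queried on node 1.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the daily index name produced by the pattern
# "syslog-tango01-%{+yyyyMMdd}" for a date given as YYYY-MM-DD.
syslog_index_for() {
  local day=$1
  echo "syslog-tango01-$(printf '%s' "$day" | tr -d '-')"
}

syslog_index_for 2018-05-24   # prints syslog-tango01-20180524

# Usage: document count of today's index. Logstash formats the index date
# from @timestamp in UTC, hence `date -u`.
#   curl -s "http://192.168.112.101:9200/$(syslog_index_for "$(date -u +%F)")/_count?pretty"
```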

2.3 Kibana environment

1) Extract the Kibana package

root@Tango:/usr/local/src# tar -xzvf kibana-6.2.0-linux-x86_64.tar.gz -C /usr/local/elk

2) Edit the configuration file

root@Tango:/usr/local/elk/kibana-6.2.0-linux-x86_64/config# gedit kibana.yml

# Kibana is served by a back end server. This setting specifies the port to use.

server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

server.host: 192.168.112.20

# The URL of the Elasticsearch instance to use for all your queries.

elasticsearch.url: "http://192.168.112.101:9200"

3) Start the Kibana service in the background

root@Tango:/usr/local/elk/kibana-6.2.0-linux-x86_64# nohup ./bin/kibana &

4) Stop the Kibana process

root@Tango:/usr/local/elk/kibana-6.2.0-linux-x86_64# fuser -n tcp 5601

5601/tcp: 3942

root@Tango:/usr/local/elk/kibana-6.2.0-linux-x86_64# kill -9 3942

root@Tango:/usr/local/elk/kibana-6.2.0-linux-x86_64# fuser -n tcp 5601

5) Open Kibana in a browser at http://192.168.112.20:5601

When reposting, please cite the original article: https://blog.csdn.net/solihawk/article/details/115860781
