【Android】Learning MediaCodec

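In scrcpy, the open-source Android screen-mirroring tool, MediaCodec takes the contents of the Surface associated with the display and encodes them. The encoded data is then written to an fd (a socket, etc.) and sent to the PC.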

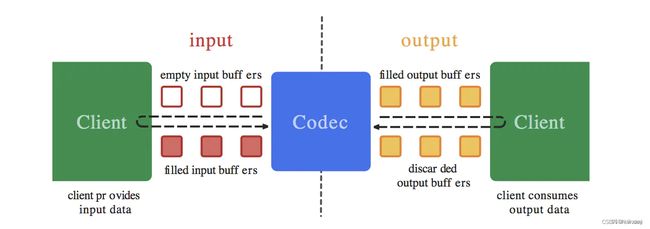

MediaCodec's processing model looks fairly clear: data goes in, and data comes out.
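Behind "data in, data out" is a fixed set of buffers whose ownership passes back and forth between the app and the codec. The class below is a toy, pure-Java model of that cycle, not the Android API: `ToyCodec` is a hypothetical name, it decodes nothing, and it only borrows MediaCodec's method names and its convention of returning -1 when no buffer is available.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy model of MediaCodec's buffer ownership cycle; no real decoding happens.
final class ToyCodec {
    static final int TRY_AGAIN_LATER = -1; // same value as MediaCodec.INFO_TRY_AGAIN_LATER

    private final Deque<Integer> freeInput = new ArrayDeque<>();   // empty input slots owned by the codec
    private final Deque<Integer> freeOutput = new ArrayDeque<>();  // output slots the codec may fill
    private final Deque<Integer> readyOutput = new ArrayDeque<>(); // filled output slots waiting for the app
    private int pendingFrames = 0; // input consumed but not yet turned into output

    ToyCodec(int inputCount, int outputCount) {
        for (int i = 0; i < inputCount; i++) freeInput.add(i);
        for (int i = 0; i < outputCount; i++) freeOutput.add(i);
    }

    // "Data in", step 1: borrow an empty input buffer, or -1 if none is free.
    int dequeueInputBuffer() {
        Integer index = freeInput.poll();
        return index == null ? -1 : index;
    }

    // "Data in", step 2: hand the filled buffer back. The toy consumes it at once.
    void queueInputBuffer(int index) {
        freeInput.add(index);
        pendingFrames++;
        produceOutput();
    }

    // "Data out", step 1: borrow a decoded output buffer, or TRY_AGAIN_LATER.
    int dequeueOutputBuffer() {
        Integer index = readyOutput.poll();
        return index == null ? TRY_AGAIN_LATER : index;
    }

    // "Data out", step 2: give the output buffer back so the codec can reuse it.
    void releaseOutputBuffer(int index) {
        freeOutput.add(index);
        produceOutput();
    }

    // Turn pending frames into output, limited by how many output slots are free.
    private void produceOutput() {
        while (pendingFrames > 0 && !freeOutput.isEmpty()) {
            readyOutput.add(freeOutput.poll());
            pendingFrames--;
        }
    }
}
```

Because an output slot only returns to the codec in `releaseOutputBuffer()`, an app that keeps dequeuing output without releasing it eventually sees only -1. A real decode loop likewise has to release every output buffer it dequeues.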

Here we try something different: read an mp4 file stored on the phone and display it on the app's Surface, as a way to learn how MediaCodec is used.

```java
import android.media.MediaCodec;
import android.media.MediaExtractor;
import android.media.MediaFormat;
import android.os.Bundle;
import android.util.Log;
import android.view.Surface;
import android.view.SurfaceHolder;
import android.view.SurfaceView;

import androidx.appcompat.app.AppCompatActivity;

import java.io.IOException;

public class PlayActivity2 extends AppCompatActivity implements SurfaceHolder.Callback {

    private static final int REQUEST_PERMISSION = 1;
    private static final String SAMPLE_MP4_FILE = "/sdcard/Download/test.mp4";
    private static final String TAG = "testPlay";
    private static final long TIMEOUT_US = 10000;

    private SurfaceView surfaceView;
    private MediaExtractor mediaExtractor;
    private MediaCodec mediaCodec;
    private Thread decodeThread;
    // Written on the main thread and read on the decode thread, hence volatile.
    private volatile boolean isPlaying = false;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_new);
        Log.i(TAG, "onCreate");
        surfaceView = findViewById(R.id.surfaceView);
        surfaceView.getHolder().addCallback(this);
    }

    @Override
    protected void onResume() {
        super.onResume();
        Log.i(TAG, "onResume");
        if (!isPlaying) {
            Log.i(TAG, "set isPlaying true");
            isPlaying = true; // the decode thread itself is started in surfaceCreated()
        }
    }

    @Override
    protected void onPause() {
        super.onPause();
        Log.i(TAG, "onPause");
        stopPlayback();
    }

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        isPlaying = true;
        Log.i(TAG, "surfaceCreated");
        // Decoding blocks, so it must run on its own thread, not the main thread.
        decodeThread = new Thread(this::playVideo, "decode");
        decodeThread.start();
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        Log.i(TAG, "surfaceChanged");
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        Log.i(TAG, "surfaceDestroyed");
        // The codec renders into this Surface, so stop decoding before it goes away.
        stopPlayback();
    }

    // Ask the decode loop to exit and wait; the codec is released on the decode thread.
    private void stopPlayback() {
        isPlaying = false;
        if (decodeThread != null) {
            try {
                decodeThread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            decodeThread = null;
        }
    }

    private void playVideo() {
        Log.i(TAG, "playVideo");
        try {
            mediaExtractor = new MediaExtractor();
            mediaExtractor.setDataSource(SAMPLE_MP4_FILE);
            int videoTrackIndex = getVideoTrackIndex();
            if (videoTrackIndex >= 0) {
                MediaFormat format = mediaExtractor.getTrackFormat(videoTrackIndex);
                String mimeType = format.getString(MediaFormat.KEY_MIME);
                mediaCodec = MediaCodec.createDecoderByType(mimeType);
                // Decoded frames are rendered directly into the SurfaceView's Surface.
                Surface surface = surfaceView.getHolder().getSurface();
                mediaCodec.configure(format, surface, null, 0);
                mediaCodec.start();
                Log.i(TAG, "mediaCodec.start");
                decodeFrames();
            }
        } catch (IOException e) {
            Log.e(TAG, "playVideo failed", e);
        } finally {
            // Release on the same thread that used the codec.
            releaseMediaCodec();
        }
    }

    // Select the first video track and return its index, or -1 if there is none.
    private int getVideoTrackIndex() {
        for (int i = 0; i < mediaExtractor.getTrackCount(); i++) {
            String mime = mediaExtractor.getTrackFormat(i).getString(MediaFormat.KEY_MIME);
            if (mime != null && mime.startsWith("video/")) {
                mediaExtractor.selectTrack(i);
                return i;
            }
        }
        return -1;
    }

    private void decodeFrames() {
        MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
        boolean isEOS = false;
        while (isPlaying) {
            Log.i(TAG, "decodeFrames=, isPlaying=" + isPlaying);
            // Input side: once END_OF_STREAM has been queued, no more input may be sent.
            if (!isEOS) {
                int inputBufferIndex = mediaCodec.dequeueInputBuffer(TIMEOUT_US);
                Log.i(TAG, "inputBufferIndex=" + inputBufferIndex);
                if (inputBufferIndex >= 0) {
                    int sampleSize = mediaExtractor.readSampleData(
                            mediaCodec.getInputBuffer(inputBufferIndex), 0);
                    if (sampleSize < 0) {
                        // No more samples: send an empty buffer flagged END_OF_STREAM.
                        isEOS = true;
                        mediaCodec.queueInputBuffer(inputBufferIndex, 0, 0, 0,
                                MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                    } else {
                        mediaCodec.queueInputBuffer(inputBufferIndex, 0, sampleSize,
                                mediaExtractor.getSampleTime(), 0);
                        Log.i(TAG, "mediaExtractor.advance()=" + sampleSize);
                        mediaExtractor.advance();
                    }
                }
            }
            // Output side: render=true draws the decoded frame onto the Surface.
            int outputBufferIndex = mediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
            Log.i(TAG, "outputBufferIndex======" + outputBufferIndex);
            if (outputBufferIndex >= 0) {
                mediaCodec.releaseOutputBuffer(outputBufferIndex, true);
                if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                    Log.i(TAG, "end of stream, break");
                    break;
                }
            }
            // Crude pacing only: frames are not timed by their presentation timestamps.
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                break;
            }
        }
    }

    private void releaseMediaCodec() {
        if (mediaCodec != null) {
            mediaCodec.stop();
            mediaCodec.release();
            mediaCodec = null;
        }
        if (mediaExtractor != null) {
            mediaExtractor.release();
            mediaExtractor = null;
        }
    }
}
```

Note that the mp4 file here is stored on the sdcard, so the app needs permission to read it, for example requested with the following method:

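```java
// Additional imports for this method: android.Manifest, android.content.Intent,
// android.content.pm.PackageManager, android.os.Build, android.os.Environment,
// android.provider.Settings, androidx.core.app.ActivityCompat, androidx.core.content.ContextCompat
public void requestPermission() {
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.R) {
        // Android 11+: all-files access is granted on a Settings screen
        // (MANAGE_EXTERNAL_STORAGE must also be declared in AndroidManifest.xml).
        if (!Environment.isExternalStorageManager()) {
            Intent intent = new Intent(Settings.ACTION_MANAGE_ALL_FILES_ACCESS_PERMISSION);
            startActivity(intent);
        }
    } else if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.M) {
        // Android 6-10: runtime permission; READ_EXTERNAL_STORAGE is enough to read the file.
        if (ContextCompat.checkSelfPermission(this, Manifest.permission.READ_EXTERNAL_STORAGE)
                != PackageManager.PERMISSION_GRANTED) {
            // REQUEST_PERMISSION is the request code declared in PlayActivity2.
            ActivityCompat.requestPermissions(this,
                    new String[]{Manifest.permission.READ_EXTERNAL_STORAGE}, REQUEST_PERMISSION);
        }
    }
}
```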
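The branching above depends only on the SDK level, so the decision can be pulled out into a small helper that is testable off-device. This is a hedged, pure-Java sketch: `StoragePermissionPlan` and its values are hypothetical names, and API levels 23 (`Build.VERSION_CODES.M`) and 30 (`Build.VERSION_CODES.R`) are hard-coded so it runs without the Android SDK.

```java
// Hypothetical helper: which storage-permission path applies on a given API level.
// 23 == Build.VERSION_CODES.M (runtime permissions), 30 == Build.VERSION_CODES.R (all-files access).
enum StoragePermissionPlan {
    GRANTED_AT_INSTALL,      // below API 23: the manifest declaration is enough
    REQUEST_RUNTIME_READ,    // API 23-29: request READ_EXTERNAL_STORAGE at runtime
    OPEN_ALL_FILES_SETTINGS; // API 30+: send the user to the all-files-access settings screen

    static final int API_M = 23;
    static final int API_R = 30;

    static StoragePermissionPlan forSdk(int sdkInt) {
        if (sdkInt >= API_R) {
            return OPEN_ALL_FILES_SETTINGS;
        }
        if (sdkInt >= API_M) {
            return REQUEST_RUNTIME_READ;
        }
        return GRANTED_AT_INSTALL;
    }
}
```

`forSdk(23)` returns `REQUEST_RUNTIME_READ`: runtime permissions start at API 23 itself, so the comparison has to be `>=`, not `>`.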

Define a SurfaceView with the id `surfaceView` in activity_new.xml.

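playVideo() must run on a separate thread, not on the main thread; otherwise only a single frozen frame is shown, presumably because the decode loop then blocks the UI thread inside surfaceCreated().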
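The usual shape of this, sketched below in plain Java so it runs without Android, is: start the blocking loop on a worker thread, tell it to stop through a `volatile` flag, and `join()` the thread before the Surface goes away, letting the worker release its own resources on the way out. Releasing the codec from the main thread while the loop is still calling it can instead fail with an `IllegalStateException`. `DecodeWorker`, `step()` and the `released` flag are hypothetical stand-ins for the decode loop and `releaseMediaCodec()`.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical worker: runs a blocking loop off the caller's thread and stops it safely.
final class DecodeWorker {
    private volatile boolean running;  // set by the caller, read by the worker thread
    private volatile boolean released; // set by the worker once cleanup has run
    private final AtomicInteger steps = new AtomicInteger();
    private Thread thread;

    // e.g. from surfaceCreated(): start the loop on its own thread.
    void start() {
        running = true;
        thread = new Thread(() -> {
            try {
                while (running) {
                    step();
                }
            } finally {
                released = true; // stands in for releaseMediaCodec(), run on the worker
            }
        }, "decode");
        thread.start();
    }

    // e.g. from surfaceDestroyed(): ask the loop to stop, then wait for it to finish.
    void stop() {
        running = false;
        if (thread == null) {
            return;
        }
        try {
            thread.join();
            thread = null;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt status for the caller
        }
    }

    // Stands in for one dequeue/queue/release round of the decode loop.
    private void step() {
        steps.incrementAndGet();
        try {
            Thread.sleep(1);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            running = false;
        }
    }

    int steps() { return steps.get(); }
    boolean isReleased() { return released; }
    boolean isRunning() { return thread != null && thread.isAlive(); }
}
```

Because `stop()` returns only after `join()`, the cleanup in the worker's `finally` block is guaranteed to have run by the time the caller continues.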

```
01-28 18:14:19.388 21442 21442 I testPlay: set isPlaying true
01-28 18:14:19.431 21442 21442 I testPlay: surfaceCreated
01-28 18:14:19.431 21442 21442 I testPlay: playVideo
01-28 18:14:19.479 21442 21442 I testPlay: mediaCodec.start
01-28 18:14:19.479 21442 21442 I testPlay: decodeFrames=, isPlaying=true
01-28 18:14:19.480 21442 21442 I testPlay: inputBufferIndex=2
01-28 18:14:19.483 21442 21442 I testPlay: mediaExtractor.advance()=85878
01-28 18:14:19.493 21442 21442 I testPlay: outputBufferIndex======-1
01-28 18:14:19.504 21442 21442 I testPlay: decodeFrames=, isPlaying=true
01-28 18:14:19.504 21442 21442 I testPlay: inputBufferIndex=3
01-28 18:14:19.507 21442 21442 I testPlay: mediaExtractor.advance()=3049
```

In this log the PID and TID columns are both 21442, which on Android identifies the main thread, so in this run playVideo() was executed on the main thread.

In the code above, video frames are decoded by MediaCodec and rendered onto the SurfaceView through its Surface.

The following snippet shows how the video frames are rendered:

```java
MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
int outputBufferIndex = mediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
if (outputBufferIndex >= 0) {
    mediaCodec.releaseOutputBuffer(outputBufferIndex, true);
    if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
        break;
    }
}
```

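In each loop iteration, dequeueOutputBuffer() is called first to get the index of an available output buffer. A return value greater than or equal to 0 means an output buffer is ready. Negative values are status codes instead; for example, INFO_TRY_AGAIN_LATER (-1, the `outputBufferIndex======-1` entry in the log above) means no output became available within the timeout.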
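Since these negative codes are easy to misread in a log, a small helper can name them. This is a hedged sketch: `OutputIndex.describe` is a hypothetical name, and it hard-codes the documented values of `MediaCodec.INFO_TRY_AGAIN_LATER` (-1), `INFO_OUTPUT_FORMAT_CHANGED` (-2) and the deprecated `INFO_OUTPUT_BUFFERS_CHANGED` (-3) so it runs without the Android SDK.

```java
// Hypothetical helper that names dequeueOutputBuffer() return values for logging.
// Constants mirror the documented android.media.MediaCodec values.
final class OutputIndex {
    static final int INFO_TRY_AGAIN_LATER = -1;
    static final int INFO_OUTPUT_FORMAT_CHANGED = -2;
    static final int INFO_OUTPUT_BUFFERS_CHANGED = -3; // deprecated since API 21

    private OutputIndex() {}

    static String describe(int index) {
        if (index >= 0) {
            return "buffer #" + index; // a real output buffer: render or read it, then release it
        }
        switch (index) {
            case INFO_TRY_AGAIN_LATER:
                return "TRY_AGAIN_LATER (no output within the timeout)";
            case INFO_OUTPUT_FORMAT_CHANGED:
                return "OUTPUT_FORMAT_CHANGED (call getOutputFormat())";
            case INFO_OUTPUT_BUFFERS_CHANGED:
                return "OUTPUT_BUFFERS_CHANGED (deprecated)";
            default:
                return "unknown status " + index;
        }
    }
}
```

Logging `OutputIndex.describe(outputBufferIndex)` instead of the raw index would print `TRY_AGAIN_LATER (...)` for the -1 entries.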

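Next, releaseOutputBuffer(outputBufferIndex, true) hands the buffer back to MediaCodec. Because the second argument (render) is true, the codec renders the buffer's content to the Surface passed to configure() before reusing the buffer.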

Finally, the BufferInfo flags are checked. If BUFFER_FLAG_END_OF_STREAM is set, the entire sequence of video frames has been decoded and rendered, and the loop can exit.
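`flags` is a bitmask, which is why the loop tests it with `&` rather than `==`: one buffer can carry several flags at once. The sketch below decodes the bitmask into names; the values 1, 2 and 4 mirror `MediaCodec.BUFFER_FLAG_KEY_FRAME`, `BUFFER_FLAG_CODEC_CONFIG` and `BUFFER_FLAG_END_OF_STREAM`, and `BufferFlags` is a hypothetical name.

```java
import java.util.StringJoiner;

// Hypothetical helper that turns MediaCodec.BufferInfo.flags into readable names.
final class BufferFlags {
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    private BufferFlags() {}

    // Same bit test as in the decode loop.
    static boolean isEndOfStream(int flags) {
        return (flags & BUFFER_FLAG_END_OF_STREAM) != 0;
    }

    static String describe(int flags) {
        StringJoiner names = new StringJoiner("|");
        if ((flags & BUFFER_FLAG_KEY_FRAME) != 0) names.add("KEY_FRAME");
        if ((flags & BUFFER_FLAG_CODEC_CONFIG) != 0) names.add("CODEC_CONFIG");
        if ((flags & BUFFER_FLAG_END_OF_STREAM) != 0) names.add("END_OF_STREAM");
        return names.length() == 0 ? "NONE" : names.toString();
    }
}
```

For example, a final key frame with flags 5 is reported as `KEY_FRAME|END_OF_STREAM`, and an `== 4` comparison would have missed its end-of-stream bit.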

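By decoding and rendering frames over and over in this loop, the video content is displayed on the SurfaceView. Note that the loop only waits a fixed Thread.sleep(10) per iteration and never looks at bufferInfo.presentationTimeUs, so playback speed is not tied to the video's actual frame rate.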
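To play at the file's own frame rate, one common approach is to anchor the first frame's `presentationTimeUs` to the wall clock and delay each later frame until its timestamp offset has elapsed. The sketch below shows that idea in plain Java; `FramePacer` and `delayMs` are hypothetical names. In the decode loop one could call `Thread.sleep(pacer.delayMs(bufferInfo.presentationTimeUs, System.nanoTime()))` right before `releaseOutputBuffer(outputBufferIndex, true)` in place of the fixed `Thread.sleep(10)`. Seeking, pausing and audio sync are not handled.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical pacer: maps presentation timestamps onto the wall clock.
final class FramePacer {
    private boolean started;
    private long startNanos; // wall-clock time (System.nanoTime()) of the first frame
    private long firstPtsUs; // presentationTimeUs of the first frame

    // How long to wait, in ms, before rendering a frame with this timestamp (0 if late).
    long delayMs(long presentationTimeUs, long nowNanos) {
        if (!started) {
            started = true;
            startNanos = nowNanos;
            firstPtsUs = presentationTimeUs;
            return 0; // render the first frame right away
        }
        long dueNanos = startNanos + TimeUnit.MICROSECONDS.toNanos(presentationTimeUs - firstPtsUs);
        long waitNanos = dueNanos - nowNanos;
        return waitNanos > 0 ? TimeUnit.NANOSECONDS.toMillis(waitNanos) : 0;
    }
}
```

A `started` flag is used rather than a sentinel value because `System.nanoTime()` has an arbitrary origin and may be negative.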
