Database and Table Sharding with Sharding-JDBC (In-Depth Guide, 3/6)


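Contents: Database and Table Sharding with Sharding-JDBC

| Topic | Link |
|---|---|
| Preparation 1: install a virtual machine cluster on Windows | Building a distributed Linux virtual machine environment |
| Also: MySQL must be installed on the virtual machines | CentOS MySQL notes (includes a Vagrant MySQL image) |
| Sharding-JDBC from beginner to expert, part 1 | Sharding-JDBC database and table sharding (hands-on introduction) |
| Sharding-JDBC from beginner to expert, part 2 | Sharding-JDBC fundamentals |
| Sharding-JDBC from beginner to expert, part 3 | MySQL cluster master-slave replication: principles and practice |
| Sharding-JDBC from beginner to expert, part 4 | Custom primary keys and distributed primary keys: principles and practice |
| Sharding-JDBC from beginner to expert, part 5 | Read/write splitting: principles and practice |
| Sharding-JDBC from beginner to expert, part 6 | How Sharding-JDBC executes SQL |
| Sharding-JDBC from beginner to expert, source code | git |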

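1. Overview: the three key generation strategies of Sharding-JDBC

In traditional database application development, automatic primary key generation is a basic requirement, and every major database supports it in some form, for example MySQL's auto-increment keys. Once MySQL data is sharded across databases and tables, however, generating globally unique IDs across the different tables becomes a thorny problem: the auto-increment keys of the actual tables behind one logical table are unaware of each other, so duplicate IDs are generated. Constraining the key generation rule of each table can avoid the duplicates, but that requires extra operational effort and leaves the framework hard to extend.

The main interface for distributed primary keys in Sharding-JDBC is ShardingKeyGenerator. It specifies how globally unique keys are generated, how the generator type is obtained, and how properties are set.

Sharding-JDBC ships two built-in key generation strategies, UUID and SNOWFLAKE, with SNOWFLAKE as the default. Their implementation classes are UUIDShardingKeyGenerator and SnowflakeShardingKeyGenerator.

Besides these two built-in strategies, a custom key generator can be written by implementing ShardingKeyGenerator.

2. A custom auto-increment key generator

Sharding-JDBC factors the distributed key generator out into the ShardingKeyGenerator interface, so users can plug in their own auto-increment key generator.

2.1 Reference code for a custom key generator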

    package com.crazymaker.springcloud.sharding.jdbc.demo.strategy;

    import lombok.Data;
    import org.apache.shardingsphere.spi.keygen.ShardingKeyGenerator;

    import java.util.Properties;
    import java.util.concurrent.atomic.AtomicLong;

    // Single-JVM ID generator backed by an AtomicLong
    @Data
    public class AtomicLongShardingKeyGenerator implements ShardingKeyGenerator {

        // Counter shared by all callers inside this JVM; starts again at 0 on restart
        private AtomicLong atomicLong = new AtomicLong(0);

        // Lombok's @Data generates the getProperties/setProperties accessors
        private Properties properties = new Properties();

        @Override
        public Comparable generateKey() {
            return atomicLong.incrementAndGet();
        }

        @Override
        public String getType() {
            // Type name that the sharding configuration refers to
            return "AtomicLong";
        }
    }
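The generator above is only safe inside a single JVM. The following minimal sketch illustrates both sides of that statement. It is a stand-alone class that does not depend on ShardingSphere; the class name `AtomicLongKeyDemo` and its method names are made up for illustration. Keys drawn concurrently from one `AtomicLong` never collide, while two independent counters, which is what two application instances would have, hand out the same values.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical demo class; it mirrors the counter logic of the generator above
// without implementing the ShardingSphere interface.
public class AtomicLongKeyDemo {

    // Draws keysPerThread keys on each of `threads` threads from one shared counter
    // and returns how many distinct keys were produced.
    static int distinctKeys(int threads, int keysPerThread) {
        AtomicLong counter = new AtomicLong(0);
        Set<Long> seen = ConcurrentHashMap.newKeySet();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int k = 0; k < keysPerThread; k++) {
                    seen.add(counter.incrementAndGet());
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", ex);
            }
        }
        return seen.size();
    }

    // Two separate counters model two application instances (two JVMs):
    // both start at 0, so their first keys are identical.
    static boolean separateCountersCollide() {
        AtomicLong instanceA = new AtomicLong(0);
        AtomicLong instanceB = new AtomicLong(0);
        return instanceA.incrementAndGet() == instanceB.incrementAndGet();
    }

    public static void main(String[] args) {
        System.out.println("distinct keys: " + distinctKeys(8, 1000));
        System.out.println("separate counters collide: " + separateCountersCollide());
    }
}
```

Because the counter also starts from 0 again after every restart, a generator of this kind is suitable for demonstrating the extension point rather than for production use.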

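2.2 SPI configuration

In Apache ShardingSphere, many implementation classes are loaded through SPI. Service Provider Interface (SPI) is a mechanism provided by Java for APIs that are meant to be implemented or extended by third parties. It is used to enable framework extensions and replaceable components, and its job is to locate the service implementations of those extensible APIs.

In essence, SPI is a dynamic loading mechanism built from a combination of interface-based programming, the strategy pattern, and configuration files.

Spring makes heavy use of SPI, for example in its implementation of ServletContainerInitializer from the Servlet 3.0 specification and in the Type Conversion SPI (Converter SPI, Formatter SPI).

Apache ShardingSphere chose SPI as its extension mechanism for the sake of the overall architecture. Advanced users implement the interfaces that Apache ShardingSphere provides and have their own classes loaded dynamically, which meets the needs of different scenarios while preserving the architectural integrity and functional stability of Apache ShardingSphere.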

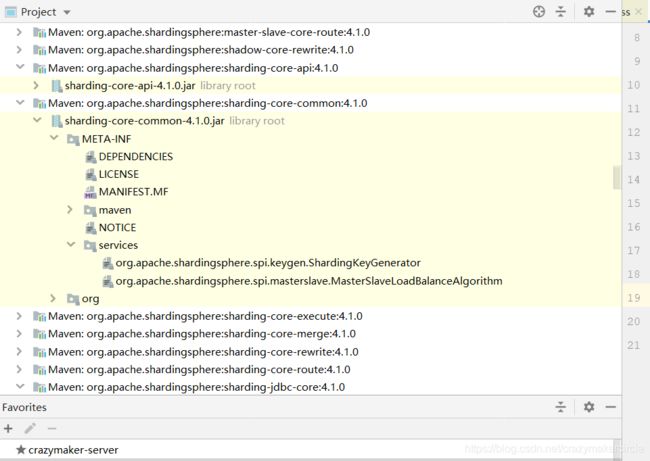

Add the following file: META-INF/services/org.apache.shardingsphere.spi.keygen.ShardingKeyGenerator

Its content is the fully qualified class name com.crazymaker.springcloud.sharding.jdbc.demo.strategy.AtomicLongShardingKeyGenerator:

    #
    # Licensed to the Apache Software Foundation (ASF) under one or more
    # contributor license agreements. See the NOTICE file distributed with
    # this work for additional information regarding copyright ownership.
    # The ASF licenses this file to You under the Apache License, Version 2.0
    # (the "License"); you may not use this file except in compliance with
    # the License. You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #

    # Register our own AtomicLongShardingKeyGenerator
    com.crazymaker.springcloud.sharding.jdbc.demo.strategy.AtomicLongShardingKeyGenerator
    #org.apache.shardingsphere.core.strategy.keygen.SnowflakeShardingKeyGenerator
    #org.apache.shardingsphere.core.strategy.keygen.UUIDShardingKeyGenerator

The file above was copied from the SPI configuration file under META-INF/services in sharding-core-common-4.1.0.jar.
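To make the SPI mechanism described in this section more concrete, here is a minimal sketch of how a framework could pick an implementation by its type name. It is not ShardingSphere's actual loading code: the `KeyGenerator` interface, the `FixedKeyGenerator` provider, and the `findByType` helper are hypothetical stand-ins, and matching by exact type name is an assumption. The only real API used is `java.util.ServiceLoader`, which reads the provider class names listed in `META-INF/services/<interface name>` and is itself an `Iterable` of provider instances.

```java
import java.util.Collections;
import java.util.Optional;
import java.util.ServiceLoader;

// Hypothetical stand-ins for illustration; these are not ShardingSphere classes.
public class SpiLookupDemo {

    // Plays the role of the service interface that providers implement.
    public interface KeyGenerator {
        String getType();
        Comparable<?> generateKey();
    }

    // A sample provider. With real SPI, its fully qualified class name would be
    // listed in a META-INF/services file named after the interface.
    public static class FixedKeyGenerator implements KeyGenerator {
        @Override
        public String getType() {
            return "Fixed";
        }

        @Override
        public Comparable<?> generateKey() {
            return 42L;
        }
    }

    // Returns the first provider whose type equals the configured name.
    static Optional<KeyGenerator> findByType(Iterable<KeyGenerator> providers, String type) {
        for (KeyGenerator each : providers) {
            if (each.getType().equals(type)) {
                return Optional.of(each);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // ServiceLoader is Iterable, so discovered providers can be passed in directly.
        // No service file is registered for this demo interface, so this lookup is empty.
        System.out.println(findByType(ServiceLoader.load(KeyGenerator.class), "Fixed").isPresent());

        // Passing a provider explicitly shows the matching step on its own.
        Iterable<KeyGenerator> manual = Collections.<KeyGenerator>singletonList(new FixedKeyGenerator());
        System.out.println(findByType(manual, "Fixed").get().generateKey());
    }
}
```

The design point is that the configuration only carries a short type string, while the service file carries the class name, so swapping implementations needs no change to framework code.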

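2.3 Using the custom ID generator

When configuring the sharding strategy, a custom ID generator is selected by referring to the generator's type. The full configuration is as follows: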

    spring:
      shardingsphere:
        datasource:
          names: ds0,ds1
          ds0:
            type: com.alibaba.druid.pool.DruidDataSource
            driver-class-name: com.mysql.cj.jdbc.Driver
            filters: com.alibaba.druid.filter.stat.StatFilter,com.alibaba.druid.wall.WallFilter,com.alibaba.druid.filter.logging.Log4j2Filter
            url: jdbc:mysql://cdh1:3306/store?useUnicode=true&characterEncoding=utf8&allowMultiQueries=true&useSSL=true&serverTimezone=UTC
            password: 123456
            username: root
            maxActive: 20
            initialSize: 1
            maxWait: 60000
            minIdle: 1
            timeBetweenEvictionRunsMillis: 60000
            minEvictableIdleTimeMillis: 300000
            validationQuery: select 'x'
            testWhileIdle: true
            testOnBorrow: false
            testOnReturn: false
            poolPreparedStatements: true
            maxOpenPreparedStatements: 20
            connection-properties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000
          ds1:
            type: com.alibaba.druid.pool.DruidDataSource
            driver-class-name: com.mysql.cj.jdbc.Driver
            filters: com.alibaba.druid.filter.stat.StatFilter,com.alibaba.druid.wall.WallFilter,com.alibaba.druid.filter.logging.Log4j2Filter
            url: jdbc:mysql://cdh2:3306/store?useUnicode=true&characterEncoding=utf8&allowMultiQueries=true&useSSL=true&serverTimezone=UTC
            password: 123456
            username: root
            maxActive: 20
            initialSize: 1
            maxWait: 60000
            minIdle: 1
            timeBetweenEvictionRunsMillis: 60000
            minEvictableIdleTimeMillis: 300000
            validationQuery: select 'x'
            testWhileIdle: true
            testOnBorrow: false
            testOnReturn: false
            poolPreparedStatements: true
            maxOpenPreparedStatements: 20
            connection-properties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000
        sharding:
          tables:
            # The logical table configuration matters: it decides whether routing succeeds.
            # ShardingSphere picks a routing engine based on the SQL statement type,
            # and the logic table is the key field for routing.
            # Rules for the t_order table
            t_order:
              # Actual data nodes: data source name + table name, separated by a dot.
              # Multiple tables are separated by commas; inline expressions are supported.
              actual-data-nodes: ds$->{0..1}.t_order_$->{0..1}
              key-generate-strategy:
                column: order_id
                key-generator-name: snowflake
              table-strategy:
                inline:
                  sharding-column: order_id
                  algorithm-expression: t_order_$->{order_id % 2}
              database-strategy:
                inline:
                  sharding-column: user_id
                  algorithm-expression: ds$->{user_id % 2}
              key-generator:
                column: order_id
                type: AtomicLong
                props:
                  worker.id: 123

2.4 Testing the custom primary key

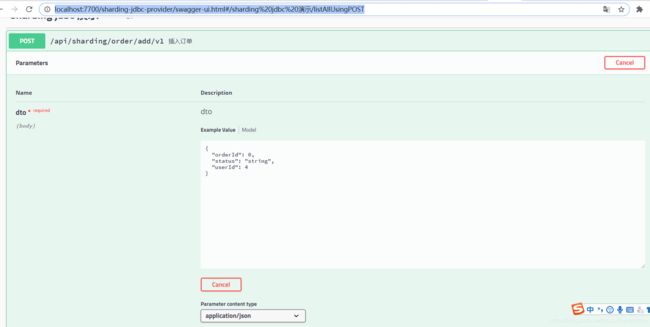

Start the application and open its Swagger UI at the following link:

http://localhost:7700/sharding-jdbc-provider/swagger-ui.html#/sharding%20jdbc%20%E6%BC%94%E7%A4%BA/listAllUsingPOST

Add an order with user id = 4 and leave the order id empty so that the backend generates it, as shown below:

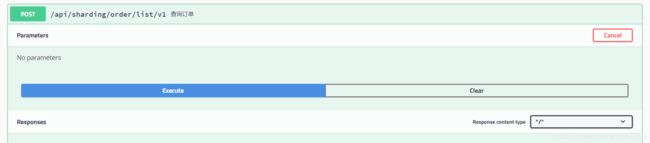

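After submitting the order, use the query endpoint in the Swagger UI to list all orders.

The result shows that the new order id is 1, no longer an id produced by the Snowflake algorithm as before.

The log printed on the console also shows that the generated id is 1. The log line for the insert is: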

[http-nio-7700-exec-8] INFO ShardingSphere-SQL - Actual SQL: ds0 ::: insert into t_order_1 (status, user_id, order_id) values (?, ?, ?) ::: [INSERT_TEST, 4, 1]

With repeated inserts, the order ids keep coming from AtomicLongShardingKeyGenerator and count upward from 1: 1, 2, 3, 4, 5, 6, ...

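3. UUID generator

Sharding-JDBC has two built-in ID generator implementations, UUIDShardingKeyGenerator and SnowflakeShardingKeyGenerator. UUIDShardingKeyGenerator relies on the UUID algorithm to produce non-repeating primary keys, and its implementation is very simple. Its source is as follows: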

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    package org.apache.shardingsphere.core.strategy.keygen;

    import lombok.Getter;
    import lombok.Setter;
    import org.apache.shardingsphere.spi.keygen.ShardingKeyGenerator;

    import java.util.Properties;
    import java.util.UUID;

    /**
     * UUID key generator.
     */
    @Getter
    @Setter
    public final class UUIDShardingKeyGenerator implements ShardingKeyGenerator {

        private Properties properties = new Properties();

        @Override
        public String getType() {
            return "UUID";
        }

        @Override
        public synchronized Comparable generateKey() {
            return UUID.randomUUID().toString().replaceAll("-", "");
        }
    }

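Because InnoDB stores rows in a B+Tree index, UUID primary keys insert poorly: the values are random, so new rows land at arbitrary positions in the index instead of being appended at the end. For this reason UUIDs are generally not recommended as primary keys.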
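As a small illustration of what such keys look like, the sketch below produces a few keys with the same approach as the source above (a random UUID with the dashes removed). The class name `UuidKeyDemo` and the `newKey` helper are made up for this example.

```java
import java.util.UUID;

// Hypothetical demo class, not part of ShardingSphere.
public class UuidKeyDemo {

    // Equivalent to the transformation in the source above:
    // a random UUID rendered as 32 hexadecimal characters without dashes.
    static String newKey() {
        return UUID.randomUUID().toString().replace("-", "");
    }

    public static void main(String[] args) {
        // Consecutive keys have no ordering relationship to each other,
        // which is why inserts do not arrive in index order.
        for (int i = 0; i < 3; i++) {
            System.out.println(newKey());
        }
    }
}
```

Each key is a 32-character string, so it is also considerably larger than a 64-bit numeric key.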

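4. The Snowflake algorithm

4.1 Introduction to the Snowflake algorithm

There are many distributed ID generation algorithms, and Twitter's Snowflake is one of the classics.

As the saying goes, no two snowflakes in nature are exactly alike; each has its own beautiful, unique shape. The name expresses that the generated IDs are as unique as snowflakes.

1. Overview of the Snowflake algorithm

IDs generated by Snowflake are purely numeric and ordered by time. The original version was written in Scala, and versions in many other languages, such as Java and C++, appeared later.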

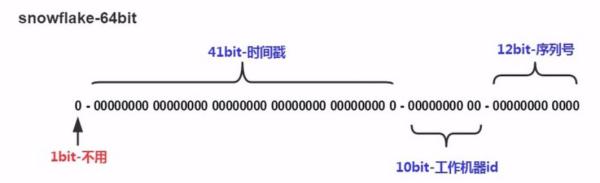

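2. Structure

An ID consists of four parts: an unused leading bit, a timestamp offset, a machine (process) code, and a sequence number.

The implementation is based on the Twitter Snowflake algorithm and the ID is 64 bits long, laid out as follows:

- 1 bit: sign bit.
- 41 bits: timestamp offset from 2016.11.01 (the release date of the Sharding-JDBC distributed primary key) to now.
- 10 bits: worker process id.
- 12 bits: auto-increment offset within one millisecond.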

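| Bits | Name | Description |
|---|---|---|
| 1 | Sign bit | 0, normally unused |
| 41 | Timestamp | Millisecond precision; covers 2^41 / (1000 × 60 × 60 × 24 × 365) ≈ 69.7 years |
| 10 | Worker process id | Supports 1024 processes |
| 12 | Sequence number | Increments from 0 within each millisecond; supports 4096 values |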

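Snowflake IDs are, overall, sorted in increasing time order. The four parts add up to exactly 64 bits, that is, one Long (at most 19 characters when converted to a string). IDs do not collide anywhere in the distributed system as long as every process uses a distinct worker id (the original Twitter layout splits these 10 bits into a datacenter id and a worker id). Generation is also efficient: Snowflake is reported to produce about 260,000 IDs per second in tests.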
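The layout and the figures in the table can be checked with a few lines of bit arithmetic. The following is a minimal sketch under the 1/41/10/12 layout described above; the class name `SnowflakeLayoutDemo`, its helper methods, and the sample field values are made up for illustration and are not part of Sharding-JDBC.

```java
// Hypothetical demo class illustrating the 1/41/10/12 bit layout.
public class SnowflakeLayoutDemo {

    static final long SEQUENCE_BITS = 12L;
    static final long WORKER_ID_BITS = 10L;
    static final long TIMESTAMP_BITS = 41L;

    // Packs the three fields into one long, highest bits first:
    // timestamp offset, worker id, sequence. The sign bit stays 0.
    static long compose(long timestampOffset, long workerId, long sequence) {
        return (timestampOffset << (WORKER_ID_BITS + SEQUENCE_BITS)) | (workerId << SEQUENCE_BITS) | sequence;
    }

    static long timestampOffsetOf(long id) {
        return id >>> (WORKER_ID_BITS + SEQUENCE_BITS);
    }

    static long workerIdOf(long id) {
        return (id >>> SEQUENCE_BITS) & ((1L << WORKER_ID_BITS) - 1);
    }

    static long sequenceOf(long id) {
        return id & ((1L << SEQUENCE_BITS) - 1);
    }

    // Number of 365-day years covered by 2^41 milliseconds.
    static double timestampRangeYears() {
        return (double) (1L << TIMESTAMP_BITS) / (1000.0 * 60 * 60 * 24 * 365);
    }

    public static void main(String[] args) {
        // Arbitrary sample values: offset in ms since the epoch, worker id, sequence.
        long id = compose(123_456_789L, 123L, 7L);
        System.out.println("id = " + id);
        System.out.println("fields = " + timestampOffsetOf(id) + " / " + workerIdOf(id) + " / " + sequenceOf(id));
        System.out.println("worker ids = " + (1L << WORKER_ID_BITS) + ", sequences per ms = " + (1L << SEQUENCE_BITS));
        System.out.printf("timestamp range = %.1f years%n", timestampRangeYears());
    }
}
```

Because the timestamp occupies the highest bits, comparing two IDs numerically compares their timestamps first, which is where the time ordering comes from.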

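3. Characteristics (increasing, ordered, suited to distributed scenarios)

- Timestamp bits: IDs can be sorted by time, which helps query speed.

- Machine id bits: identify each node in a multi-node distributed environment. The 10 bits can be subdivided according to the number of nodes and the deployment, for example by using 5 of them as process bits.

- Sequence bits: an auto-incrementing counter that lets one node generate several IDs within the same millisecond. The 12-bit counter supports 4096 IDs per node per millisecond.

The Snowflake algorithm can be adapted to the situation and needs of a given project.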

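4. Drawbacks of the Snowflake algorithm

- It depends heavily on the system clock.

- If the clock is turned back, duplicate IDs can be generated.

Sharding-JDBC's distributed IDs use Twitter's open-source Snowflake algorithm and need no third-party components, which keeps extension and maintenance as simple as possible.

However, Snowflake's weakness (a strong dependence on the clock, with duplicate IDs possible after a clock rollback) is only partly handled by Sharding-JDBC. As the source in section 4.2 shows, the generator sleeps through a rollback smaller than max.tolerate.time.difference.milliseconds (10 ms by default in that code) and throws an exception for anything larger. Users who need stronger guarantees have to extend it themselves.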

4.2 SnowflakeShardingKeyGenerator source code

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    package org.apache.shardingsphere.core.strategy.keygen;

    import com.google.common.base.Preconditions;
    import lombok.Getter;
    import lombok.Setter;
    import lombok.SneakyThrows;
    import org.apache.shardingsphere.spi.keygen.ShardingKeyGenerator;

    import java.util.Calendar;
    import java.util.Properties;

    /**
     * Snowflake distributed primary key generator.
     *
     * Use snowflake algorithm. Length is 64 bit.
     *
     * 1bit sign bit.
     * 41bits timestamp offset from 2016.11.01(ShardingSphere distributed primary key published data) to now.
     * 10bits worker process id.
     * 12bits auto increment offset in one mills
     *
     * Call @{@code SnowflakeShardingKeyGenerator.setWorkerId} to set worker id, default value is 0.
     *
     * Call @{@code SnowflakeShardingKeyGenerator.setMaxTolerateTimeDifferenceMilliseconds} to set max tolerate time difference milliseconds, default value is 0.
     */
    public final class SnowflakeShardingKeyGenerator implements ShardingKeyGenerator {

        public static final long EPOCH;

        private static final long SEQUENCE_BITS = 12L;

        private static final long WORKER_ID_BITS = 10L;

        private static final long SEQUENCE_MASK = (1 << SEQUENCE_BITS) - 1;

        private static final long WORKER_ID_LEFT_SHIFT_BITS = SEQUENCE_BITS;

        private static final long TIMESTAMP_LEFT_SHIFT_BITS = WORKER_ID_LEFT_SHIFT_BITS + WORKER_ID_BITS;

        private static final long WORKER_ID_MAX_VALUE = 1L << WORKER_ID_BITS;

        private static final long WORKER_ID = 0;

        private static final int DEFAULT_VIBRATION_VALUE = 1;

        private static final int MAX_TOLERATE_TIME_DIFFERENCE_MILLISECONDS = 10;

        @Setter
        private static TimeService timeService = new TimeService();

        @Getter
        @Setter
        private Properties properties = new Properties();

        private int sequenceOffset = -1;

        private long sequence;

        private long lastMilliseconds;

        static {
            Calendar calendar = Calendar.getInstance();
            calendar.set(2016, Calendar.NOVEMBER, 1);
            calendar.set(Calendar.HOUR_OF_DAY, 0);
            calendar.set(Calendar.MINUTE, 0);
            calendar.set(Calendar.SECOND, 0);
            calendar.set(Calendar.MILLISECOND, 0);
            EPOCH = calendar.getTimeInMillis();
        }

        @Override
        public String getType() {
            return "SNOWFLAKE";
        }

        @Override
        public synchronized Comparable generateKey() {
            long currentMilliseconds = timeService.getCurrentMillis();
            if (waitTolerateTimeDifferenceIfNeed(currentMilliseconds)) {
                currentMilliseconds = timeService.getCurrentMillis();
            }
            if (lastMilliseconds == currentMilliseconds) {
                if (0L == (sequence = (sequence + 1) & SEQUENCE_MASK)) {
                    currentMilliseconds = waitUntilNextTime(currentMilliseconds);
                }
            } else {
                vibrateSequenceOffset();
                sequence = sequenceOffset;
            }
            lastMilliseconds = currentMilliseconds;
            return ((currentMilliseconds - EPOCH) << TIMESTAMP_LEFT_SHIFT_BITS) | (getWorkerId() << WORKER_ID_LEFT_SHIFT_BITS) | sequence;
        }

        @SneakyThrows
        private boolean waitTolerateTimeDifferenceIfNeed(final long currentMilliseconds) {
            if (lastMilliseconds <= currentMilliseconds) {
                return false;
            }
            long timeDifferenceMilliseconds = lastMilliseconds - currentMilliseconds;
            Preconditions.checkState(timeDifferenceMilliseconds < getMaxTolerateTimeDifferenceMilliseconds(),
                    "Clock is moving backwards, last time is %d milliseconds, current time is %d milliseconds", lastMilliseconds, currentMilliseconds);
            Thread.sleep(timeDifferenceMilliseconds);
            return true;
        }

        // Get the id of this worker (node)
        private long getWorkerId() {
            long result = Long.valueOf(properties.getProperty("worker.id", String.valueOf(WORKER_ID)));
            Preconditions.checkArgument(result >= 0L && result < WORKER_ID_MAX_VALUE);
            return result;
        }

        private int getMaxVibrationOffset() {
            int result = Integer.parseInt(properties.getProperty("max.vibration.offset", String.valueOf(DEFAULT_VIBRATION_VALUE)));
            Preconditions.checkArgument(result >= 0 && result <= SEQUENCE_MASK, "Illegal max vibration offset");
            return result;
        }

        private int getMaxTolerateTimeDifferenceMilliseconds() {
            return Integer.valueOf(properties.getProperty("max.tolerate.time.difference.milliseconds", String.valueOf(MAX_TOLERATE_TIME_DIFFERENCE_MILLISECONDS)));
        }

        private long waitUntilNextTime(final long lastTime) {
            long result = timeService.getCurrentMillis();
            while (result <= lastTime) {
                result = timeService.getCurrentMillis();
            }
            return result;
        }

        private void vibrateSequenceOffset() {
            sequenceOffset = sequenceOffset >= getMaxVibrationOffset() ? 0 : sequenceOffset + 1;
        }
    }

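EPOCH = calendar.getTimeInMillis(); computes the epoch, that is, the timestamp in milliseconds of 2016/11/01 at midnight.

Logic of generateKey():

- Check whether the current time is earlier than the timestamp of the last generated ID. Server clock synchronization may move the clock backwards, which could otherwise produce duplicate IDs.

- Obtain the sequence number. When the counter for the current millisecond reaches its maximum, call waitUntilNextTime() to move on to the next millisecond.

- Record the timestamp of the last generated ID, which is used for the clock rollback check.

- Assemble the ID with bit operations.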

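The code also shows what happens when only one ID is generated per millisecond. If the sequence always started at 0, the 12 sequence bits would all be 0 and every such ID would be even. In the code above, however, vibrateSequenceOffset() shifts the starting sequence by one on each new millisecond and wraps back to 0 once it reaches max.vibration.offset (default 1). With the default setting the low bits therefore alternate between 0 and 1, so the IDs alternate between even and odd; they are all even only when max.vibration.offset is set to 0.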
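To see what `vibrateSequenceOffset()` does to the low bits under light load (at most one ID per millisecond), the sketch below replays its update rule in isolation. The update expression is taken from the source above; the class name `SequenceVibrationDemo` and the `startSequences` helper are made up for this example.

```java
import java.util.Arrays;

// Hypothetical demo class that replays only the sequence offset update rule.
public class SequenceVibrationDemo {

    private final int maxVibrationOffset;

    // Same initial value as in the source above.
    private int sequenceOffset = -1;

    SequenceVibrationDemo(int maxVibrationOffset) {
        this.maxVibrationOffset = maxVibrationOffset;
    }

    // Applies the update rule once and returns the sequence value that the
    // first ID of a new millisecond would carry.
    int nextStartSequence() {
        sequenceOffset = sequenceOffset >= maxVibrationOffset ? 0 : sequenceOffset + 1;
        return sequenceOffset;
    }

    // Collects the starting sequence for a run of consecutive milliseconds.
    static int[] startSequences(int maxVibrationOffset, int milliseconds) {
        SequenceVibrationDemo demo = new SequenceVibrationDemo(maxVibrationOffset);
        int[] result = new int[milliseconds];
        for (int i = 0; i < milliseconds; i++) {
            result[i] = demo.nextStartSequence();
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(startSequences(1, 6))); // [0, 1, 0, 1, 0, 1]
        System.out.println(Arrays.toString(startSequences(0, 4))); // [0, 0, 0, 0]
    }
}
```

Since the sequence occupies the lowest bits of the ID, a starting sequence that alternates between 0 and 1 spreads low-traffic IDs evenly over an `id % 2` sharding expression such as the one used in the configuration earlier.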

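4.3 How to configure the workerId (node)?

Problem: the Snowflake algorithm requires every distributed node to have a unique workerId. The algorithm itself is fairly simple and easy to understand; the awkward part is how to assign worker process ids and keep them globally unique.

Solution:

The workerId can be derived from information such as the IP address or host name. It can also be assigned through middleware that offers distributed configuration, such as Zookeeper, Consul, or Etcd.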
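As one possible reading of the IP-based approach, the sketch below takes the lowest 10 bits of the host's IP address as the workerId. This is an illustration rather than a mechanism provided by Sharding-JDBC: the class name `WorkerIdFromIpDemo` and the `workerIdOf` helper are made up, and the scheme assumes that all nodes sit in one network where the lowest 10 bits of the address are unique (for example a single /22 or smaller subnet).

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical demo class; not part of Sharding-JDBC.
public class WorkerIdFromIpDemo {

    // Uses the lowest 10 bits of the address (2 bits of the second-to-last byte
    // plus the whole last byte) as a worker id in the range 0..1023.
    static long workerIdOf(byte[] address) {
        int n = address.length;
        return ((address[n - 2] & 0x03) << 8) | (address[n - 1] & 0xFF);
    }

    public static void main(String[] args) throws UnknownHostException {
        long workerId = workerIdOf(InetAddress.getLocalHost().getAddress());
        // The value could then be handed to the generator through its worker.id property.
        System.out.println("worker.id=" + workerId);
    }
}
```

For example, the address 192.168.1.7 maps to workerId 263. Two hosts whose addresses share the same lowest 10 bits would get the same id, which is why a coordination service such as Zookeeper is the safer choice in larger deployments.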

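Because Sharding-JDBC's Snowflake implementation is not that complete, a simple and blunt approach is:

- In production projects, implement a custom ID generator on top of Baidu's mature, high-performance Snowflake ID library.

- In learning projects, implement a custom ID generator on top of the learning-oriented Snowflake ID library from 疯狂创客圈 (Crazy Maker Circle).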

