Kubernetes cluster setup (kubeadm v1.20.6)

1. Kubernetes high-availability cluster setup (kubeadm v1.20.6)

1.1 Background

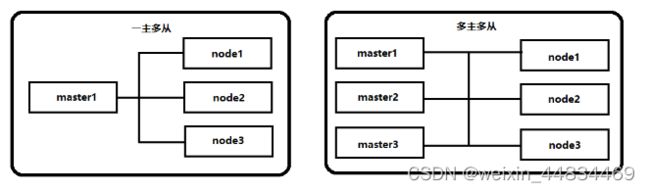

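There are currently two main ways to deploy a production Kubernetes cluster:

kubeadm

Kubeadm is a Kubernetes deployment tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Official documentation: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

Binary packages

Download the release binaries from GitHub and deploy each component by hand to assemble a Kubernetes cluster.

Kubeadm lowers the barrier to entry, but it hides many details, which makes problems hard to troubleshoot. If you want more control, deploying from binary packages is recommended. Manual deployment takes more effort, but you learn a lot about how the components work along the way, and later maintenance is easier.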

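1.2 Introduction to kubeadm

kubeadm is a tool from the official Kubernetes community for quickly deploying Kubernetes clusters. It can deploy a cluster with two commands:

- Create the first control-plane node (master01): kubeadm init

- Join other nodes to the current cluster: kubeadm join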

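1.3 Installation requirements

Before you begin, the machines for the Kubernetes cluster must meet the following requirements:

- One or more machines running CentOS 7.x x86_64

- Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more disk

- Full network connectivity between all machines in the cluster

- Internet access, for pulling images

- Swap disabled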

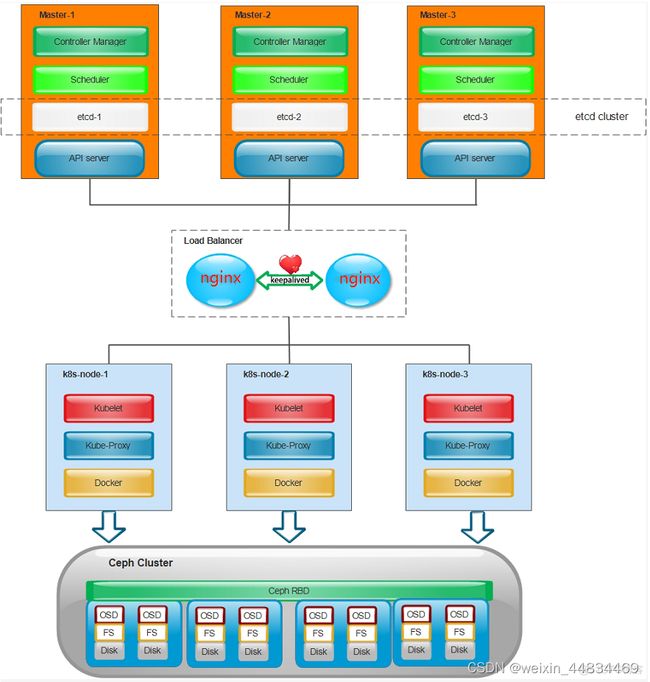

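1.4 Goals

- Install Docker and kubeadm on all nodes

- Deploy multiple Kubernetes master (control-plane) nodes

- Deploy the container network plugin (Calico)

- Deploy the high-availability components (nginx + keepalived)

- Deploy the Kubernetes worker nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually

- Deploy metrics-server

- Deploy Prometheus + Grafana

- Deploy EFK + Logstash to build a log collection platform

- Deploy Rancher to manage the Kubernetes cluster

- Deploy the Helm v3 package manager

- Deploy an Ingress controller

- Deploy a Harbor private image registry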

1.5 Environment

| Role | IP address | Components |
| --- | --- | --- |
| master01 | 192.168.225.138 | apiserver, controller-manager, scheduler, etcd, calico, docker, kubectl, kubeadm, kubelet, kube-proxy |
| master02 | 192.168.225.139 | apiserver, controller-manager, scheduler, etcd, calico, docker, kubectl, kubeadm, kubelet, kube-proxy |
| master03 | 192.168.225.140 | apiserver, controller-manager, scheduler, etcd, calico, docker, kubectl, kubeadm, kubelet, kube-proxy |
| node01 | 192.168.225.141 | docker, kube-proxy, kubeadm, kubelet, calico, coredns |
| node02 | 192.168.225.142 | docker, kube-proxy, kubeadm, kubelet, calico |
| node03 | 192.168.225.143 | docker, kube-proxy, kubeadm, kubelet, calico |
| VIP | 192.168.225.150 | keepalived virtual IP |

1.6 Environment initialization

1.6.1 Check the OS version and set each node's hostname according to the plan (all nodes)

#1 This installation method requires CentOS 7.5 or later

[root@master01 ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

Set the hostname. On each node, use that node's name from the table in 1.5:

[root@master01 ~]# hostnamectl set-hostname master01

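1.6.2 Configure passwordless SSH login between cluster nodes (all nodes)

#1 Generate an SSH key pair:

[root@master01 ~]# ssh-keygen -t rsa # press Enter at every prompt and leave the passphrase empty

#2 Install the local SSH public key into the matching account on each remote host: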

[root@master01 ~]# ssh-copy-id -i .ssh/id_rsa.pub master02

[root@master01 ~]# ssh-copy-id -i .ssh/id_rsa.pub master03

[root@master01 ~]# ssh-copy-id -i .ssh/id_rsa.pub node01

[root@master01 ~]# ssh-copy-id -i .ssh/id_rsa.pub node02

[root@master01 ~]# ssh-copy-id -i .ssh/id_rsa.pub node03

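# The other nodes are configured the same way as master01; only master01 is shown here. The hosts are addressed by name, so the /etc/hosts entries from 1.6.3 must already be in place. Otherwise, use IP addresses.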

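1.6.3 Hostname resolution (all nodes)

Configure hostname resolution so that cluster nodes can reach each other directly by name. In production, an internal DNS server is recommended.

#1 Hostname resolution: edit /etc/hosts on all 6 servers and add the following entries:

[root@master01 ~]# cat >> /etc/hosts <<EOF
192.168.225.138 master01
192.168.225.139 master02
192.168.225.140 master03
192.168.225.141 node01
192.168.225.142 node02
192.168.225.143 node03
EOF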
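You can also add the same entries idempotently, so re-running the step on a node does not duplicate lines. The sketch below is a minimal POSIX shell version. It assumes the six hosts from the table in 1.5. The function name ensure_hosts_entries is hypothetical. It takes the target file as an argument, so you can try it on a scratch copy first.

```shell
# Cluster hosts from the table in 1.5 (assumed; adjust to your environment).
CLUSTER_HOSTS='192.168.225.138 master01
192.168.225.139 master02
192.168.225.140 master03
192.168.225.141 node01
192.168.225.142 node02
192.168.225.143 node03'

# Append "IP hostname" lines to a hosts file, skipping hostnames that are
# already present, so the function can safely be run more than once.
ensure_hosts_entries() {
    local hosts_file=${1:-/etc/hosts}
    printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
        [ -n "$name" ] || continue
        # -w matches whole words only, so "node01" does not match "node010"
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

Usage on each node: ensure_hosts_entries /etc/hosts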

1.6.4 Time synchronization (all nodes)

#1 Install ntpdate

[root@master01 ~]# yum install ntpdate -y

# Synchronize with a network time source

[root@master01 ~]# ntpdate ntp.aliyun.com

#2 Run time synchronization as a cron job. Add the line below in the editor; it runs at minute 0 of every hour

[root@master01 ~]# crontab -e

0 */1 * * * /usr/sbin/ntpdate ntp.aliyun.com

#3 Restart crond and set the time zone

[root@master01 ~]# systemctl restart crond.service && timedatectl set-timezone Asia/Shanghai
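Instead of editing root's crontab interactively, you can place the job in /etc/cron.d. Cron on CentOS 7 (cronie) reads that directory in addition to user crontabs, and files there carry an extra user field. In the sketch below, write_ntp_cron is a hypothetical helper, and the output directory is a parameter.

```shell
# Write an hourly ntpdate job as a cron.d file
# (format: minute hour day-of-month month day-of-week user command).
write_ntp_cron() {
    local cron_dir=${1:-/etc/cron.d}
    local ntp_server=${2:-ntp.aliyun.com}
    mkdir -p "$cron_dir" || return 1
    # Minute 0 of every hour, as root; output is discarded so cron sends no mail.
    printf '0 * * * * root /usr/sbin/ntpdate %s >/dev/null 2>&1\n' "$ntp_server" \
        > "$cron_dir/ntpdate" || return 1
    chmod 0644 "$cron_dir/ntpdate"
}
```

Usage: write_ntp_cron (writes /etc/cron.d/ntpdate for ntp.aliyun.com).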

1.6.5 Disable the iptables and firewalld services (all nodes)

Kubernetes and Docker generate a large number of iptables rules at runtime. To keep the system's own rules from getting mixed up with them, disable the system rules entirely.

#1 Stop and disable firewalld

[root@master01 ~]# systemctl stop firewalld && systemctl disable firewalld

#2 Install the iptables service, then stop and disable it

[root@master01 ~]# yum install -y iptables-services.x86_64

[root@master01 ~]# systemctl stop iptables && systemctl disable iptables

#3 Flush the iptables rules

[root@master01 ~]# iptables -F

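1.6.6 Disable SELinux (all nodes)

SELinux is a Linux security service. If it is not disabled, it can cause all kinds of strange problems during cluster installation.

#1 Disable SELinux. The change in the config file takes effect only after the server is rebooted. setenforce 0 switches SELinux to permissive mode for the current boot

[root@master01 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

[root@master01 ~]# setenforce 0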

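1.6.7 Disable the swap partition (all nodes)

A swap partition is virtual memory: when physical memory runs out, disk space is used as if it were memory. Swap has a very negative effect on system performance, so Kubernetes requires it to be disabled on every node. If swap really cannot be turned off, you must declare this explicitly with parameters during cluster installation. For example, set failSwapOn: false in the kubelet configuration and pass --ignore-preflight-errors=Swap to kubeadm.

#1 Edit the partition configuration file /etc/fstab and comment out the swap line. If the VM was cloned, also delete the UUID. The change takes effect after a reboot; swapoff -a turns swap off immediately.

[root@master01 ~]# vim /etc/fstab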
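You can also make the fstab edit non-interactively. The sketch below assumes GNU sed, the default on CentOS 7. disable_swap_in_fstab is a hypothetical helper. It comments out every uncommented line whose third field (the filesystem type) is swap, and it keeps a .bak copy of the file.

```shell
# Comment out active swap entries in an fstab-style file (GNU sed; keeps <file>.bak).
# A line counts as swap when its third field (the filesystem type) is "swap";
# lines that are already commented or that start with whitespace are left untouched.
disable_swap_in_fstab() {
    local fstab=${1:-/etc/fstab}
    sed -i.bak -E \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' \
        "$fstab"
}
```

Usage: disable_swap_in_fstab /etc/fstab && swapoff -a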

1.6.8 Modify Linux kernel parameters (all nodes)

#1 Modify the Linux kernel parameters to enable bridge filtering and IP forwarding

#2 Load the bridge filter module

[root@master01 ~]# modprobe br_netfilter

#3 Create /etc/sysctl.d/k8s.conf with the following settings:

[root@master01 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

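#4 Reload the configuration

[root@master01 ~]# sysctl -p /etc/sysctl.d/k8s.conf

#5 Check that the bridge filter module is loaded

[root@master01 ~]# lsmod | grep br_netfilter

#6 Load the kernel module automatically. Note that /etc/profile runs for every login shell, not at boot

[root@master01 ~]# echo "modprobe br_netfilter" >> /etc/profile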
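Because /etc/profile only runs for login shells, a boot-time alternative is to rely on systemd. At startup, systemd loads the modules listed in /etc/modules-load.d/*.conf and applies /etc/sysctl.d/*.conf. In the sketch below, write_k8s_kernel_conf is a hypothetical helper. Its optional argument is a root directory, so you can review the files in a scratch directory before writing them under /.

```shell
# Write boot-time kernel settings for Kubernetes under ROOT (default: /).
# systemd-modules-load reads /etc/modules-load.d/*.conf and systemd-sysctl
# applies /etc/sysctl.d/*.conf during boot.
write_k8s_kernel_conf() {
    local root=${1:-}
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d" || return 1
    printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf" || return 1
    printf '%s\n' \
        'net.bridge.bridge-nf-call-ip6tables = 1' \
        'net.bridge.bridge-nf-call-iptables = 1' \
        'net.ipv4.ip_forward = 1' > "$root/etc/sysctl.d/k8s.conf"
}
```

Usage: write_k8s_kernel_conf && modprobe br_netfilter && sysctl --system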

1.6.9 Configure IPVS (all nodes)

In Kubernetes, Services have two proxy models: one based on iptables and one based on IPVS. IPVS performs noticeably better, but to use it you must load the IPVS kernel modules manually.

#1 Install ipset and ipvsadm

[root@master01 ~]# yum install ipvsadm.x86_64 ipset -y

#2 Write the modules to load into a script file

[root@master01 ~]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

#3 Make the script executable, run it, and check that the modules are loaded

[root@master01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
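The module list above is right for CentOS 7's stock 3.10 kernel. In upstream kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so modprobe fails if the kernel has been upgraded. The sketch below picks the module name from the kernel release string. The helper names are hypothetical. Vendor kernels can backport the change (RHEL 8's 4.18 kernel already uses nf_conntrack), so treat the version check as a heuristic.

```shell
# Print the conntrack module name for a kernel release (default: running kernel).
# Upstream Linux 4.19 merged nf_conntrack_ipv4 into nf_conntrack.
conntrack_module_for() {
    local release=${1:-$(uname -r)}
    local major minor
    major=$(printf '%s' "$release" | cut -d. -f1)
    minor=$(printf '%s' "$release" | cut -d. -f2)
    major=${major%%[!0-9]*}   # strip non-numeric suffixes such as "-rc1"
    minor=${minor%%[!0-9]*}
    if [ "${major:-0}" -gt 4 ] || { [ "${major:-0}" -eq 4 ] && [ "${minor:-0}" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Load the IPVS modules plus the conntrack module that matches the kernel.
load_ipvs_modules() {
    local m
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module_for)"; do
        modprobe -- "$m" || echo "failed to load $m" >&2
    done
}
```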

1.6.10 Configure the yum repositories required for the cluster (all nodes)

Alibaba Cloud mirror site: https://developer.aliyun.com/mirror/

Required .repo files:

- CentOS-Base.repo

- docker-ce.repo

- epel.repo

- kubernetes.repo

#1 Back up the existing .repo files

[root@master01 ~]# mkdir /root/yum.repos.bak

[root@master01 ~]# mv /etc/yum.repos.d/* /root/yum.repos.bak

#2 Upload the prepared .repo files to /etc/yum.repos.d on every node via scp, or download them as shown below. Download CentOS-Base.repo first: after the backup step, yum has no repository to install packages from.

[root@master01 yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

With the downloaded CentOS-Base.repo, Docker may fail to start after you install it later. For a fix, see: https://blog.csdn.net/qq_47855463/article/details/119656678

Install some dependencies:

[root@master01 yum.repos.d]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master01 yum.repos.d]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@master01 yum.repos.d]# curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master01 yum.repos.d]# sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@master01 yum.repos.d]# cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

[root@master01 yum.repos.d]# ll

total 16

-rw-r--r--. 1 root root 2523 Mar 17 09:44 CentOS-Base.repo

-rw-r--r--. 1 root root 2081 Mar 22 21:43 docker-ce.repo

-rw-r--r--. 1 root root 1050 Mar 22 22:43 epel.repo

-rw-r--r--. 1 root root 133 Mar 22 22:23 kubernetes.repo

#3 Rebuild the yum cache

[root@master01 ~]# yum makecache

#4 Install the basic dependency packages

[root@master01 ~]# yum install -y wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack

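1.6.11 Install Docker (all nodes)

#1 List the Docker versions available in the configured repositories

[root@master01 ~]# yum list docker-ce --showduplicates

#2 Install docker-ce. The command below installs the newest version in the repository. To pin a specific version, append it to the package names (docker-ce-<version>) and add --setopt=obsoletes=0; otherwise yum may install a newer version

[root@master01 ~]# yum install -y docker-ce docker-ce-cli containerd.io --skip-broken

#3 Add a configuration file. By default, Docker uses cgroupfs as its cgroup driver, but Kubernetes recommends systemd instead. The file also configures a registry mirror to speed up image pulls

[root@master01 ~]# mkdir -p /etc/docker

[root@master01 ~]# cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]
}
EOF

#4 Start Docker and enable it at boot

[root@master01 ~]# systemctl start docker.service && systemctl enable docker.service

[root@master01 ~]# systemctl status docker.service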

1.6.12 Install the Kubernetes components (all nodes)

#1 Install kubeadm, kubelet and kubectl

[root@master01 ~]# yum install --setopt=obsoletes=0 kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 -y

#2 Start kubelet and enable it at boot

[root@master01 ~]# systemctl enable --now kubelet

(systemctl enable --now kubelet is equivalent to systemctl start kubelet && systemctl enable kubelet)

[root@master01 ~]# systemctl status kubelet

# kubelet is not running yet; it shows activating (auto-restart). This is normal and can be ignored. kubelet becomes healthy once the Kubernetes components are up after kubeadm init or kubeadm join.

Set up tab completion (requires the bash-completion package):

[root@master01 ~]# kubectl completion bash >/etc/bash_completion.d/kubectl

[root@master01 ~]# kubeadm completion bash >/etc/bash_completion.d/kubeadm

1.6.13 Prepare the cluster images (all nodes)

Download: https://dl.k8s.io/v1.20.6/kubernetes-server-linux-amd64.tar.gz

#1 Before installing the Kubernetes cluster, prepare the images it needs in advance: upload the image archives to every node in the cluster via scp

[root@master01 ~]# ll

-rw-r--r-- 1 root root 1083635200 Mar 17 10:00 k8simage-1-20-6.tar.gz

#2 On each node, import the image archives with docker; do not extract them directly with tar

[root@master01 ~]# docker load -i kube-controller-manager-v1.20.6.tar.gz

Import each downloaded tar archive in the same way, one by one.
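You can script the one-by-one import. In the sketch below, load_image_archives is a hypothetical helper that runs the given command on every *.tar and *.tar.gz file in a directory. docker load also accepts gzip-compressed archives. The command is passed as arguments, so you can dry-run the loop with echo first. The directory in the usage line is an assumption.

```shell
# Run a command (e.g. "docker load -i") on every *.tar / *.tar.gz in a directory.
# Returns non-zero if a command fails or if no archive was found.
load_image_archives() {
    local dir=$1 f found=0
    shift
    for f in "$dir"/*.tar "$dir"/*.tar.gz; do
        [ -e "$f" ] || continue        # the pattern matched nothing
        echo "loading $f" >&2
        "$@" "$f" || return 1
        found=$((found + 1))
    done
    [ "$found" -gt 0 ]
}
```

Usage: load_image_archives /root/k8s-images docker load -i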

#3 查看解压完成后的docker镜像

[root@master01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.20.6 9a1ebfd8124d 11 months ago 118MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.20.6 560dd11d4550 11 months ago 116MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.20.6 b93ab2ec4475 11 months ago 47.3MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.20.6 b05d611c1af9 11 months ago 122MB

calico/pod2daemon-flexvol v3.18.0 2a22066e9588 12 months ago 21.7MB

calico/node v3.18.0 5a7c4970fbc2 13 months ago 172MB

calico/cni v3.18.0 727de170e4ce 13 months ago 131MB

calico/kube-controllers v3.18.0 9a154323fbf7 13 months ago 53.4MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 19 months ago 253MB

registry.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 21 months ago 45.2MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 2 years ago 683kB

1.6.14 Configure nginx and keepalived (on the 3 master nodes)

#1 Install keepalived and nginx

[root@master01 ~]# yum install nginx keepalived -y

Configure the following on all three master nodes.

Script that checks the nginx status:

cat > /etc/keepalived/check_nginx.sh << "EOF"
#!/bin/bash
count=$(ps -ef | grep nginx | egrep -cv "grep|$$")
if [ "$count" -eq 0 ]; then
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh
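Another way to write the check is to look for a process whose command name is exactly nginx, instead of grepping the ps output. This also avoids matching the check script's own name, since check_nginx.sh contains "nginx". The sketch below reads /proc/<pid>/comm directly. process_running is a hypothetical helper name.

```shell
# Succeed if any process has exactly the given command name, as recorded in
# /proc/<pid>/comm (Linux only; the kernel truncates names to 15 characters there).
process_running() {
    local name=$1 comm
    for comm in /proc/[0-9]*/comm; do
        [ -r "$comm" ] || continue
        if [ "$(cat "$comm" 2>/dev/null)" = "$name" ]; then
            return 0
        fi
    done
    return 1
}
```

In /etc/keepalived/check_nginx.sh, define the function and end the script with process_running nginx; its return status then becomes the script's exit status.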

Keepalived configuration (MASTER, on master01)

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33              # NIC name; change it to match the host
    virtual_router_id 51         # VRRP router ID; unique per instance, identical on all nodes of this instance
    priority 100                 # priority; the backup servers use 90
    advert_int 1                 # VRRP advertisement (heartbeat) interval, 1 second by default
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # virtual IP
    virtual_ipaddress {
        192.168.225.150
    }
    track_script {
        check_nginx
    }
}
EOF

Keepalived configuration (BACKUP, on master02 and master03)

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33              # NIC name; change it to match the host
    virtual_router_id 51         # VRRP router ID; unique per instance, identical on all nodes of this instance
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.225.150
    }
    track_script {
        check_nginx
    }
}
EOF


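#2 On each of the 3 nodes, adjust the keepalived configuration file: the role (state), the priority and the NIC name (interface). Leave everything else unchanged. The nginx configuration file is the same on all nodes and needs no per-node changes.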
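Only the state, priority and interface differ between the three nodes, so you can generate the file per node. The sketch below is a trimmed version with the SMTP settings omitted. render_keepalived_conf is a hypothetical helper. The priority 80 and the interface ens192 in the usage lines are illustrative assumptions.

```shell
# Print a keepalived.conf for one node: STATE PRIORITY INTERFACE VIP.
render_keepalived_conf() {
    local state=$1 priority=$2 iface=$3 vip=$4
    cat <<EOF
global_defs {
    router_id NGINX_${state}
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

Usage: on master01, run render_keepalived_conf MASTER 100 ens192 192.168.225.150 > /etc/keepalived/keepalived.conf. On master02 and master03, use BACKUP with priorities 90 and 80.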

[root@master01 ~]# ll /etc/keepalived/

total 8

-rw-r--r-- 1 root root 134 Mar 22 23:16 check_nginx.sh

-rw-r--r-- 1 root root 986 Mar 23 22:51 keepalived.conf

[root@master01 ~]# ll /etc/nginx/nginx.conf

-rw-r--r-- 1 root root 1442 Mar 22 23:12 /etc/nginx/nginx.conf
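The contents of nginx.conf are not shown here. The join commands later use 192.168.225.150:16443, so nginx has to accept TCP connections on port 16443 and forward them to the kube-apiserver on port 6443 of each master. It cannot listen on 6443 itself, because the local kube-apiserver already binds that port.

The sketch below prints such a layer-4 stream block, under that assumption. render_nginx_stream is a hypothetical helper. The block goes at the top level of nginx.conf, outside http {}. On EPEL builds, the stream module may ship as a separate nginx-mod-stream package.

```shell
# Print an nginx "stream" block: listen on PORT and load-balance TCP
# connections across the given kube-apiserver IPs on port 6443.
render_nginx_stream() {
    local listen_port=$1 ip
    shift
    echo "stream {"
    echo "    upstream k8s-apiserver {"
    for ip in "$@"; do
        echo "        server ${ip}:6443 max_fails=3 fail_timeout=30s;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen ${listen_port};"
    echo "        proxy_pass k8s-apiserver;"
    echo "    }"
    echo "}"
}
```

Usage: render_nginx_stream 16443 192.168.225.138 192.168.225.139 192.168.225.140. Paste the output into nginx.conf, then validate the file with nginx -t.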

#3 Start nginx on the 3 nodes first, then start keepalived

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart nginx && systemctl enable nginx && systemctl status nginx

[root@master01 ~]# systemctl restart keepalived && systemctl enable keepalived && systemctl status keepalived

#4 Check whether the VIP has been created

[root@master01 ~]# ip addr

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:ba:33:03 brd ff:ff:ff:ff:ff:ff

inet 192.168.225.138/24 brd 192.168.225.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

inet 192.168.225.150/24 scope global secondary ens192

valid_lft forever preferred_lft forever

inet6 fe80::88c2:50d5:146:e608/64 scope link noprefixroute

valid_lft forever preferred_lft forever

1.6.15 Cluster initialization (on master01)

#1 Create the cluster initialization file

[root@master01 ~]# vim kubeadm-config.yaml

# Adjust the values below to match your environment:
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: "192.168.225.150:16443"   # VIP and the nginx port
imageRepository: registry.aliyuncs.com/google_containers   # matches the images loaded in 1.6.13
apiServer:
  timeoutForControlPlane: 4m0s
  certSANs:
  - 192.168.225.138
  - 192.168.225.139
  - 192.168.225.140
  - 192.168.225.141
  - 192.168.225.142
  - 192.168.225.143
  - 192.168.225.150
  extraArgs:
    authorization-mode: "Node,RBAC"
    service-node-port-range: 30000-36000   # Service NodePort range
networking:
  dnsDomain: cluster.local
  serviceSubnet: "10.96.0.0/16"   # Service CIDR
  podSubnet: "10.244.0.0/16"      # Pod CIDR
---
# enable IPVS mode for kube-proxy
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

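You can also initialize the cluster with command-line flags instead. This form sets no control-plane endpoint, so additional master nodes cannot join later; it suits a single-master cluster:

[root@master01 ~]# kubeadm init \
--image-repository=registry.cn-hangzhou.aliyuncs.com/k8sos \
--kubernetes-version=v1.20.6 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16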

#2 Initialize the cluster with the configuration file

[root@master01 ~]# kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification

Then run the commands printed at the end of the output, in order:

[root@master01 ~]# mkdir -p $HOME/.kube

[root@master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

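1.6.16 Join the other master nodes to the cluster (on master02 and master03)

#1 Create the directories and copy the certificates

Create the directories:

[root@master02 ~]# cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

Copy the certificates: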

[root@master01 ~]# scp /etc/kubernetes/pki/ca.crt master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/ca.key master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/sa.key master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/sa.pub master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.key master02:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.crt master02:/etc/kubernetes/pki/etcd/

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.key master02:/etc/kubernetes/pki/etcd/

[root@master03 ~]# cd /root && mkdir -p /etc/kubernetes/pki/etcd &&mkdir -p ~/.kube/

[root@master01 ~]# scp /etc/kubernetes/pki/ca.crt master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/ca.key master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/sa.key master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/sa.pub master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.key master03:/etc/kubernetes/pki/

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.crt master03:/etc/kubernetes/pki/etcd/

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.key master03:/etc/kubernetes/pki/etcd/

Alternatively, copy the certificates with a script:

vim cert-rsync-master.sh

#!/bin/bash
# Copy the shared CA and service-account keys from master01 to the other masters
USER=root
CONTROL_PLANE_IPS="192.168.225.139 192.168.225.140"
for host in ${CONTROL_PLANE_IPS}; do
    ssh "${USER}"@"$host" mkdir -p /etc/kubernetes/pki/etcd
    scp /etc/kubernetes/pki/ca.crt "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@"$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@"$host":/etc/kubernetes/pki/etcd/ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@"$host":/etc/kubernetes/pki/etcd/ca.key
done

[root@master01 ~]# bash cert-rsync-master.sh

#2 List the bootstrap tokens. A token is valid for 24 hours; once it expires, it can no longer be used to join the cluster

[root@master01 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

6zqaxk.te6y81dkvy73j5hs 23h 2022-03-26T10:43:37+08:00 authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

#3 Create a new token (the command also prints the complete join command)

[root@master01 ~]# kubeadm token create --print-join-command


#4 Compute the sha256 hash of the CA certificate's public key, which is the value for --discovery-token-ca-cert-hash

[root@master01 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
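The hash pipeline can be wrapped in a small function so it is easy to reuse when building a join command. In the sketch below, ca_cert_hash is a hypothetical name. The added -noout only removes the certificate itself from the first command's output; openssl rsa reads the public key PEM either way.

```shell
# Print the SHA-256 of the CA certificate's DER-encoded public key
# (the hex value used after "sha256:" in --discovery-token-ca-cert-hash).
ca_cert_hash() {
    local ca_crt=${1:-/etc/kubernetes/pki/ca.crt}
    openssl x509 -pubkey -noout -in "$ca_crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

Usage: ... --discovery-token-ca-cert-hash sha256:$(ca_cert_hash)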

#5 Use the token to join master02 and master03 to the cluster as control-plane nodes

[root@master02 ~]# kubeadm join 192.168.225.150:16443 --token 6zqaxk.te6y81dkvy73j5hs --discovery-token-ca-cert-hash sha256:f4694c021a9c1467b40254518358f2abf5e50f775e48907f3e08502a09dcc7db --control-plane --ignore-preflight-errors=SystemVerification

[root@master03 ~]# kubeadm join 192.168.225.150:16443 --token 6zqaxk.te6y81dkvy73j5hs --discovery-token-ca-cert-hash sha256:f4694c021a9c1467b40254518358f2abf5e50f775e48907f3e08502a09dcc7db --control-plane --ignore-preflight-errors=SystemVerification

1.6.18 Install the Calico network plugin (on master01)

#1 Upload the calico YAML file to master01 via scp, then install Calico with kubectl

A download link for calico.yaml is provided at the end of the article.

[root@master01 ~]# kubectl apply -f calico.yaml

1.6.19 Check the cluster status (on master01)

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 35h v1.20.6

master02 Ready control-plane,master 35h v1.20.6

master03 Ready control-plane,master 22h v1.20.6

node01 Ready <none> 24h v1.20.6

node02 Ready <none> 24h v1.20.6

node03 Ready <none> 24h v1.20.6

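Everyone runs into different problems along the way; search for the specific error message to resolve them.

1.6.20 Label the worker nodes (optional, on master01)

#1 In the node list above, the ROLES column shows <none> for the worker nodes. To mark them as workers, use the following commands: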

[root@master01 ~]# kubectl label node node01 node-role.kubernetes.io/worker=worker

[root@master01 ~]# kubectl label node node02 node-role.kubernetes.io/worker=worker

[root@master01 ~]# kubectl label node node03 node-role.kubernetes.io/worker=worker
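You can write the three label commands as a loop. In the sketch below, label_workers is a hypothetical helper. --overwrite makes re-running it harmless. Set KUBECTL to echo to print the commands without touching the cluster.

```shell
# Add the "worker" role label to each node given as an argument.
# KUBECTL defaults to kubectl; set KUBECTL=echo for a dry run.
label_workers() {
    local kubectl=${KUBECTL:-kubectl} node
    for node in "$@"; do
        $kubectl label node "$node" node-role.kubernetes.io/worker=worker --overwrite || return 1
    done
}
```

Usage: label_workers node01 node02 node03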

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 35h v1.20.6

master02 Ready control-plane,master 35h v1.20.6

master03 Ready control-plane,master 22h v1.20.6

node01 Ready worker 24h v1.20.6

node02 Ready worker 24h v1.20.6

node03 Ready worker 24h v1.20.6

1.7 Cluster test

1.7.1 Create an nginx Deployment

[root@master01 ~]# kubectl create deployment nginx --image=nginx:1.14-alpine

deployment.apps/nginx created

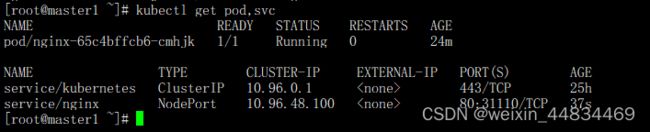

1.7.2 Expose the port

[root@master01 ~]# kubectl expose deploy nginx --port=80 --target-port=80 --type=NodePort

service/nginx exposed

1.7.3 Check the Service

[root@master01 ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-65c4bffcb6-2qxzh 1/1 Running 0 49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx NodePort 10.10.153.8 <none> 80:31076/TCP 20s

1.7.4 Access the Pod

Browser test result (the Service is reachable at http://<node IP>:31076):

1.7.5 Test whether CoreDNS is working

[root@master01 ~]# kubectl exec -it nginx-65c4bffcb6-2qxzh -- sh

/ # ping nginx

PING nginx (10.10.153.8): 56 data bytes

64 bytes from 10.10.153.8: seq=0 ttl=64 time=0.066 ms

64 bytes from 10.10.153.8: seq=1 ttl=64 time=0.151 ms

/ # nslookup nginx.default.svc.cluster.local

Name: nginx.default.svc.cluster.local

Address 1: 10.10.153.8 nginx.default.svc.cluster.local

# 10.10.153.8 is the ClusterIP of the nginx Service created above

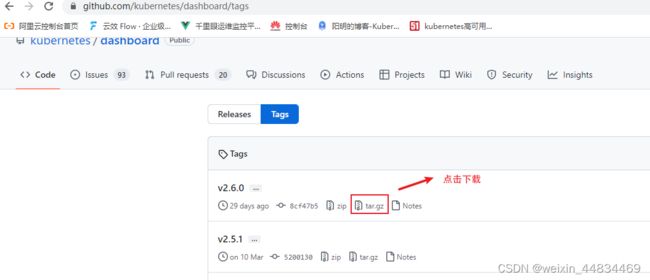

1.8 Deploy the Dashboard web UI

GitHub: https://github.com/kubernetes/dashboard

[root@master01 ~]# docker load -i dashboard-2.6.0.tar.gz

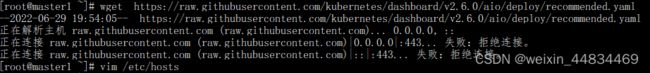

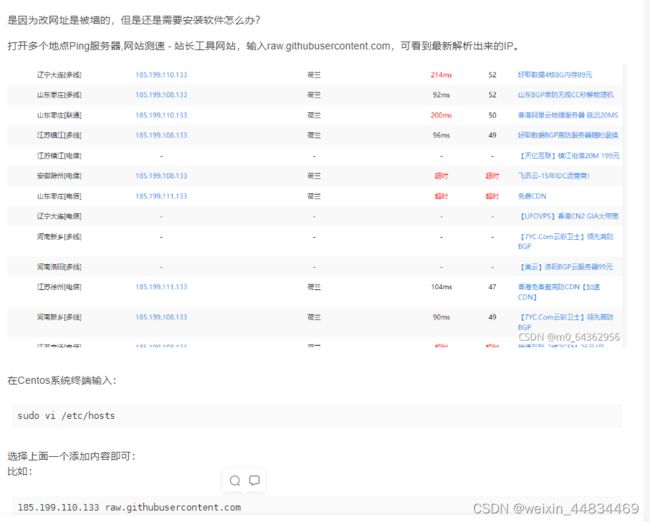

[root@master01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.0/aio/deploy/recommended.yaml

[root@master01 ~]# vim recommended.yaml

修改完后的配置如下:

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 31443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

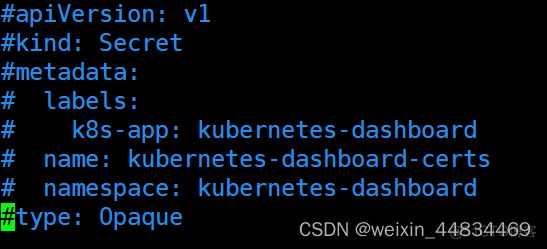

###########因为自动生成的证书很多浏览器无法使用,所以自己创建证书

#apiVersion: v1

#kind: Secret

#metadata:

# labels:

# k8s-app: kubernetes-dashboard

# name: kubernetes-dashboard-key-holder

# namespace: kubernetes-dashboard

#type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.6.0

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.8

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

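#2 The configuration file is modified to add a directly accessible port (type: NodePort and a nodePort on the kubernetes-dashboard Service)

#3 The configuration file is modified to comment out the original kubernetes-dashboard-certs object declaration

#4 Self-signed certificate

#1 Create a directory for the certificates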

[root@master01 ~]# mkdir /etc/kubernetes/dashboard-certs

[root@master01 ~]# cd /etc/kubernetes/dashboard-certs/

#2 Create the namespace

[root@master01 ~]# kubectl create namespace kubernetes-dashboard

#3 Create the key file

[root@master01 ~]# openssl genrsa -out dashboard.key 2048

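#4 Create a certificate signing request

[root@master01 ~]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

#5 Self-sign the certificate. Pass -days here: openssl req ignores it when only generating a request, and openssl x509 -req otherwise defaults to 30 days

[root@master01 ~]# openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt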
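You can also produce the key and a self-signed certificate in a single openssl req -x509 call, where -days does take effect, and then check the expiry with openssl x509 -checkend. In the sketch below, make_dashboard_cert is a hypothetical helper, and the 3650-day default is an assumption.

```shell
# Create dashboard.key and a self-signed dashboard.crt in DIR, valid for DAYS days.
make_dashboard_cert() {
    local dir=${1:-/etc/kubernetes/dashboard-certs} days=${2:-3650}
    mkdir -p "$dir" || return 1
    openssl req -x509 -nodes -newkey rsa:2048 -days "$days" \
        -keyout "$dir/dashboard.key" -out "$dir/dashboard.crt" \
        -subj '/CN=dashboard-cert' 2>/dev/null || return 1
    # Fail if the certificate would already expire within the next 30 days.
    openssl x509 -checkend $((30 * 24 * 3600)) -noout -in "$dir/dashboard.crt" >/dev/null
}
```

Usage: make_dashboard_cert /etc/kubernetes/dashboard-certs. Then create the Secret from the two files as in the next step.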

#6 Create the kubernetes-dashboard-certs Secret

[root@master01 ~]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

#4 Deploy the Dashboard

[root@master01 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

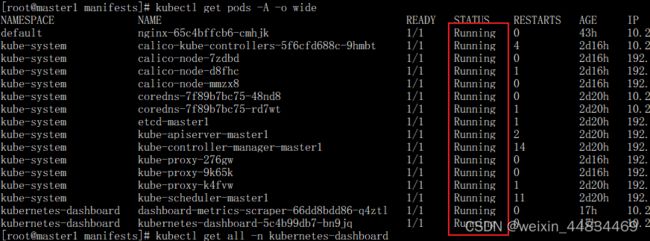

#5 Check the status of the Dashboard components

[root@master01 dashboard-certs]# kubectl get all -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-7b59f7d4df-z24lb 1/1 Running 0 4m20s

pod/kubernetes-dashboard-74d688b6bc-wd54z 1/1 Running 0 78s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.10.42.68 <none> 8000/TCP 4m20s

service/kubernetes-dashboard NodePort 10.10.172.2 <none> 443:30001/TCP 4m21s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/dashboard-metrics-scraper 1/1 1 1 4m20s

deployment.apps/kubernetes-dashboard 1/1 1 1 4m20s

NAME DESIRED CURRENT READY AGE

replicaset.apps/dashboard-metrics-scraper-7b59f7d4df 1 1 1 4m20s

replicaset.apps/kubernetes-dashboard-74d688b6bc 1 1 1 4m20s

#6 Create the Dashboard administrator account in dashboard-admin.yaml and apply it

[root@master01 ~]# vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

[root@master01 ~]# kubectl apply -f dashboard-admin.yaml

#7 Grant the account permissions with dashboard-admin-bind-cluster-role.yaml and apply it

[root@master01 ~]# vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard

[root@master01 ~]# kubectl apply -f dashboard-admin-bind-cluster-role.yaml

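#8 Get the token. First find the name of the dashboard-admin token Secret, then print the Secret; its token field is the login token

[root@master01 ~]# kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}'

dashboard-admin-token-w69c5

[root@master01 ~]# kubectl -n kubernetes-dashboard describe secret dashboard-admin-token-w69c5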

#9 Log in at https://192.168.225.138:30001/#/login with the token. Alternatively, create a kubeconfig file for logging in as follows:

#1 Set the cluster parameters

[root@master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --server="https://192.168.225.150:16443" --embed-certs=true --kubeconfig=/root/shibosen-admin.conf

#2 Store the token in a variable

[root@master01 ~]# DASHBOARD_SECRET=$(kubectl -n kubernetes-dashboard get secret | awk '/^dashboard-admin-token/{print $1}')

[root@master01 ~]# DEF_NS_ADMIN_TOKEN=$(kubectl get secret "$DASHBOARD_SECRET" -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d)

#3 Set the client credentials

[root@master01 ~]# kubectl config set-credentials dashboard-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/shibosen-admin.conf

#4 Set the context

[root@master01 ~]# kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/root/shibosen-admin.conf

#5 Switch to the context

[root@master01 ~]# kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/root/shibosen-admin.conf

1.9 Deploy metrics-server

Release page: https://github.com/kubernetes-sigs/metrics-server/releases/tag/v0.6.0

[root@master01 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.0/components.yaml

[root@master01 ~]# vim components.yaml

The modified file content is as follows:

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: k8s.gcr.io/metrics-server/metrics-server:v0.6.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Run the following command to install metrics-server.

[root@master01 ~]# kubectl apply -f components.yaml

Check the status of the metrics-server Pod

[root@master01 ~]# kubectl get pods -n kube-system| egrep 'NAME|metrics-server'

[root@master01 ~]# kubectl get pod -n kube-system | grep metrics-server

metrics-server-6f9f86ddf9-zphlw 1/1 Running 0 11s

1.10 Deploy Prometheus + Grafana

Download: https://prometheus.io/download/

[root@master01 ~]# cd /usr/local/prometheus

[root@master01 prometheus]# ./prometheus --config.file=/usr/local/prometheus/prometheus.yml &

Web UI: http://192.168.225.138:9090