Time-Series Signal Generation with WGAN-GP (Using Bearing Vibration Signals as an Example)

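The generative adversarial network (GAN) is an unsupervised learning method built from two neural networks, a generator and a discriminator. The generator takes random samples from a latent space as input and tries to produce data that resemble the real samples. The discriminator receives either real samples or generator output and judges whether its input is real or generated. The two networks compete and keep adjusting their parameters, and the ultimate goal is for the discriminator to be unable to tell whether the generator's output is real.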
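For reference, the standard GAN objective (Goodfellow et al., 2014) can be written as the following minimax game, where $p_{\text{data}}$ is the distribution of real samples, $p_z$ is the latent prior, $D$ outputs the probability that its input is real, and $G$ maps latent samples to data space. This is the textbook formulation, not something taken from this post's code:

$$
\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z\sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator with output distribution $p_g$, the optimal discriminator is $D^*(x) = p_{\text{data}}(x) / \big(p_{\text{data}}(x) + p_g(x)\big)$, and substituting it gives $V(D^*, G) = 2\,\mathrm{JSD}(p_{\text{data}} \,\|\, p_g) - \log 4$. This Jensen-Shannon divergence is the quantity that WGAN replaces with the Wasserstein distance.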

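WGAN, an improved GAN model, uses the Wasserstein distance instead of the JS divergence as the optimization objective, which substantially alleviates GAN's problems of vanishing gradients, unstable training, and mode collapse. However, WGAN enforces the Lipschitz continuity condition through weight clipping, which causes many weights to end up near the clipping bounds: the network's weights are pushed to extremes (either the maximum or the minimum allowed value). This severely limits the network's fitting capacity and can lead to vanishing or exploding gradients. WGAN-GP remedies this shortcoming by adding a gradient penalty term to the original loss function to enforce the Lipschitz constraint.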
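For reference, the WGAN-GP losses as given by Gulrajani et al. (2017) are written out below. $D$ is the critic (the discriminator without a sigmoid output), $\mathbb{P}_r$ and $\mathbb{P}_g$ are the real and generated distributions, $\hat{x}$ is sampled uniformly on the straight line between a real sample $x$ and a generated sample $\tilde{x}$, and $\lambda$ is the penalty weight (10 in the original paper):

$$
\begin{aligned}
L_D &= \mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim\mathbb{P}_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}}\Big[\big(\lVert\nabla_{\hat{x}} D(\hat{x})\rVert_2 - 1\big)^2\Big],\\
\hat{x} &= \epsilon x + (1-\epsilon)\tilde{x},\qquad \epsilon\sim U[0,1],\\
L_G &= -\mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big].
\end{aligned}
$$

The first two terms of $L_D$ estimate the (negated) Wasserstein distance, and the penalty replaces weight clipping by softly keeping the critic's gradient norm close to 1.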

This post presents a WGAN-GP-based method for generating time-series signals and illustrates it with bearing vibration signals.
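To make the gradient penalty concrete for 1-D signals, the following NumPy sketch computes the WGAN-GP critic loss for one batch of real and generated sequences. It is an illustration under stated assumptions, not the author's implementation: the critic is a hypothetical one-hidden-layer network f(x) = v·tanh(Wx + b) whose input gradient is written out by hand, whereas a TensorFlow implementation would normally obtain that gradient by automatic differentiation (for example with `tf.gradients`). The "real" signals are noisy sine waves with a half period of 30 samples (mirroring `get_sin_training_data` in the code below) and the "generated" signals are random noise; neither is bearing data.

```python
import numpy as np


def critic_forward(x, W, b, v):
    """Hypothetical critic f(x) = v . tanh(W x + b), applied to each row of x.

    x: [batch, length], W: [hidden, length], b: [hidden], v: [hidden] -> [batch]
    """
    h = np.tanh(x @ W.T + b)
    return h @ v


def critic_input_grad(x, W, b, v):
    """Analytic gradient of f with respect to each input row: W^T (v * (1 - tanh^2))."""
    h = np.tanh(x @ W.T + b)
    return (v * (1.0 - h ** 2)) @ W  # [batch, length]


def wgan_gp_critic_loss(x_real, x_fake, params, lam=10.0, rng=None):
    """Return (total, wasserstein_term, gradient_penalty) for one batch.

    total = mean f(fake) - mean f(real) + lam * mean((||grad f(x_hat)||_2 - 1)^2),
    where x_hat lies uniformly on the line between paired real and fake samples.
    """
    rng = np.random.default_rng() if rng is None else rng
    W, b, v = params
    w_term = critic_forward(x_fake, W, b, v).mean() - critic_forward(x_real, W, b, v).mean()
    eps = rng.uniform(0.0, 1.0, size=(x_real.shape[0], 1))  # one epsilon per sample
    x_hat = eps * x_real + (1.0 - eps) * x_fake
    grad_norm = np.linalg.norm(critic_input_grad(x_hat, W, b, v), axis=1)
    gp = lam * np.mean((grad_norm - 1.0) ** 2)
    return w_term + gp, w_term, gp


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch, length, hidden = 5, 64, 16
    params = (rng.normal(0.0, 0.1, (hidden, length)), np.zeros(hidden), rng.normal(0.0, 0.1, hidden))
    t = np.arange(length)
    x_real = np.sin(t * np.pi / 30)[None, :] + 0.05 * rng.normal(size=(batch, length))
    x_fake = rng.normal(size=(batch, length))  # stand-in for generator output
    total, w_term, gp = wgan_gp_critic_loss(x_real, x_fake, params, rng=rng)
    print(f"critic loss {total:.4f} = Wasserstein term {w_term:.4f} + penalty {gp:.4f}")
```

Minimizing this loss with respect to the critic's parameters pushes the input-gradient norm toward 1 at the interpolated points; the generator would then be trained to minimize -mean f(fake).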

For the Python distribution I use WinPython, with Spyder (a MATLAB-like interface) as the IDE.

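WinPython grew out of Python(x,y) and targets scientific computing while also covering data analysis and mining; Anaconda focuses mainly on data analysis and mining and ships some distinctive packages for big-data processing. WinPython emphasizes portability: it is a portable ("green") application that does not write to the registry, so installing it simply means extracting it into a folder, and that folder can be moved, or even copied to a USB drive and used on another computer. Anaconda follows the traditional installed-software model. WinPython is maintained by individuals, whereas Anaconda is maintained by a data-analytics company, so WinPython keeps things minimal in many respects while Anaconda offers more user-friendly conveniences. WinPython runs only on Windows; Anaconda also has a Linux version.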

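Package differences aside, I personally recommend WinPython for beginners: precisely because it is simple, it causes fewer problems, and thanks to its portability it still works directly after the operating system has been reinstalled.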

Simply install and use WinPython (see the WinPython download page), since it comes with many modules already integrated.

Part of the program code is shown below:

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

import scipy.io as sio

import numpy as np

import math

import os

import sys

import matplotlib.pyplot as plt

import time

import matplotlib.gridspec as gridspec

import preprocess_for_gan

import argparse

args = None

#相关参数,以B007(滚动体轻微故障)为目标生成样本

def parse_args():

parser = argparse.ArgumentParser(description='GAN')

# basic parameters

parser.add_argument('--phase', type=str, default='train', help='train or generate')

parser.add_argument('--GAN_type', type=str, default='WGAN-GP', help='the name of the model: WGAN, WGAN-GP, DCGAN, LSGAN, SNGAN, RSGAN, RaSGAN')

parser.add_argument('--data_dir', type=str, default= 'data\\0HP', help='the directory of the data')

parser.add_argument('--target', type=str, default='B007', help='target signal to generate')

parser.add_argument('--imbalance_ratio', type=int, default=100, help='imbalance ratio between major class samples and minor class samples')

parser.add_argument('--checkpoint_dir', type=str, default='samples\WGAN-GP\ORDER\ratio-50\orderIR021-05-17-20_22\checkpoint\model-97000', help='the saved checkpoint to generate signals')

parser.add_argument('--batch_size', type=int, default=5, help='batchsize of the training process')

parser.add_argument('--swith_threshold', type=int, default=2.5, help='threshold of G-D loss difference for determining to train G or D')

parser.add_argument('--normalization', type=str, default='minmax', help='way to process data: minmax or mean')

parser.add_argument('--sampling', type=str, default='order', help='way to sample signals from original dataset: enc, order, random')

# optimization information

parser.add_argument('--lr', type=float, default=2e-4, help='the initial learning rate')

parser.add_argument('--epsilon', type=float, default=1e-14, help='if epsilon is too big, training of DCGAN is failure')

# save, load and display information

parser.add_argument('--max_epoch', type=int, default=30000, help='max number of epoch')

parser.add_argument('--sample_step', type=int, default=1000, help='the interval of log training information')

args = parser.parse_args()

return args

def get_sin_training_data(shape):

'''

使用预加载的数据_正弦信号

'''

half_T = 30 # T/2 of sin function

length = shape[0] * shape[1]

array = np.arange(0, length)

ori_data = np.sin(array*np.pi/half_T)

training_data = np.reshape(ori_data, shape)

return training_data

def shuffle_set(x, y):

size = np.shape(x)[0]

x_row = np.arange(0, size)

permutation = np.random.permutation(x_row.shape[0])

x_shuffle = x[permutation,:,:]

y_shuffle = np.array(y)[permutation]

return x_shuffle, y_shuffle

def get_batch(x, y, now_batch, batch_size, total_batch):

if now_batch < total_batch - 1:

x_batch = x[now_batch*batch_size:(now_batch+1)*batch_size,:]

y_batch = y[now_batch*batch_size:(now_batch+1)*batch_size]

else:

x_batch = x[now_batch*batch_size:,:]

y_batch = y[now_batch*batch_size:]

return x_batch, y_batch

def plot(samples):

num = np.size(samples,0)

length = np.size(samples,1)

fig = plt.figure()

gs = gridspec.GridSpec(num,1)

gs.update(wspace = 0.05, hspace = 0.05)

samples = np.reshape(samples, [num,length])

x = np.arange(0, length)

for i in range(num):

ax = plt.subplot(gs[i,0])

y = samples[i]

ax.plot(x, y)

return fig

def deconv(inputs, shape, strides, out_num, is_sn=False):

# input [X_batch, in_channels, in_width] // 2D [X_batch, height, width, in_channels]

# shape [filter_width, output_channels, in_channels]

filters = tf.get_variable("kernel", shape=shape, initializer=tf.random_normal_initializer(stddev=0.02))

bias = tf.get_variable("bias", shape=[shape[-2]], initializer=tf.constant_initializer([0]))

if is_sn:

return tf.nn.conv1d_transpose(inputs, spectral_norm("sn", filters), out_num, strides) + bias

else:

return tf.nn.conv1d_transpose(inputs, filters, out_num, strides, "SAME") + bias

def conv(inputs, shape, strides, is_sn=False):

filters = tf.get_variable("kernel", shape=shape, initializer=tf.random_normal_initializer(stddev=0.02))

bias = tf.get_variable("bias", shape=[shape[-1]], initializer=tf.constant_initializer([0]))

if is_sn:

return tf.nn.conv1d(inputs, spectral_norm("sn", filters), strides, "SAME") + bias

else:

return tf.nn.conv1d(inputs, filters, strides, "SAME") + bias

def fully_connected(inputs, num_out, is_sn=False):

W = tf.get_variable("W", [inputs.shape[-1], num_out], initializer=tf.random_normal_initializer(stddev=0.02))

b = tf.get_variable("b", [num_out], initializer=tf.constant_initializer([0]))

if is_sn:

return tf.matmul(inputs, spectral_norm("sn", W)) + b

else:

return tf.matmul(inputs, W) + b

def leaky_relu(inputs, slope=0.2):

return tf.maximum(slope*inputs, inputs)

def spectral_norm(name, w, iteration=1):

#Spectral normalization which was published on ICLR2018,please refer to "https://www.researchgate.net/publication/318572189_Spectral_Normalization_for_Generative_Adversarial_Networks"

#This function spectral_norm is forked from "https://github.com/taki0112/Spectral_Normalization-Tensorflow"

w_shape = w.shape.as_list()

w = tf.reshape(w, [-1, w_shape[-1]])

with tf.variable_scope(name, reuse=False):

u = tf.get_variable("u", [1, w_shape[-1]], initializer=tf.truncated_normal_initializer(), trainable=False)

u_hat = u

v_hat = None

def l2_norm(v, eps=1e-12):

return v / (tf.reduce_sum(v ** 2) ** 0.5 + eps)

for i in range(iteration):

v_ = tf.matmul(u_hat, tf.transpose(w))

v_hat = l2_norm(v_)

u_ = tf.matmul(v_hat, w)

u_hat = l2_norm(u_)

sigma = tf.matmul(tf.matmul(v_hat, w), tf.transpose(u_hat))

w_norm = w / sigma

with tf.control_dependencies([u.assign(u_hat)]):

w_norm = tf.reshape(w_norm, w_shape)

return w_norm

def mapping(x, epsilon):

max = np.max(x)

min = np.min(x)

return (x - min) * 255.0 / (max - min + epsilon)

def instanceNorm(inputs, epsilon):

mean, var = tf.nn.moments(inputs, axes=[1], keep_dims=True) # axes=[1,2]

scale = tf.get_variable("scale", shape=mean.shape[-1], initializer=tf.constant_initializer([1.0]))

shift = tf.get_variable("shift", shape=mean.shape[-1], initializer=tf.constant_initializer([0.0]))

return (inputs - mean) * scale / (tf.sqrt(var + epsilon)) + shift

def make_dir(args):

now = time.strftime("%m-%d-%H_%M", time.localtime(time.time()))

# samples_dir

# if args.phase == 'train':

samples_dir = "samples/"+args.GAN_type+'/'+'ratio-'+str(args.imbalance_ratio)+'/'+args.sampling+args.target+'-'+now

if not os.path.exists(samples_dir):

os.makedirs(samples_dir)

figure_dir = samples_dir + "/figure"

if not os.path.exists(figure_dir):

os.makedirs(figure_dir)

checkpoint_dir = samples_dir + "/checkpoint"

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

signals_dir = samples_dir + "/signals"

if not os.path.exists(signals_dir):

os.makedirs(signals_dir)

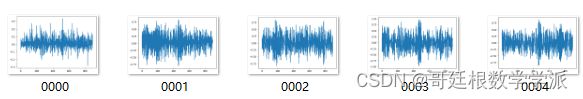

return samples_dir, figure_dir, checkpoint_dir, signals_dir部分出图如下:

The generated files are shown below:

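Ph.D. in Engineering. Reviewer for Mechanical Systems and Signal Processing; Outstanding Reviewer for Proceedings of the CSEE; reviewer for EI-indexed journals including Control and Decision, Systems Engineering and Electronics, Power System Protection and Control, and Journal of Astronautics; and reviewer for Chinese core journals including Computer Science, Electron Devices, Modern Manufacturing Processes, Journal of Power Supply, Ship Engineering, Bearing, Industry and Mine Automation, Journal of Chongqing University of Technology, Noise and Vibration Control, Mechanical Transmission, Mechanical Strength, Mechanical Science and Technology, Machine Tool & Hydraulics, Technical Acoustics, Applied Acoustics, Petroleum Machinery, and Journal of Xi'an Technological University.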

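Areas of expertise: modern signal processing, machine learning, deep learning, digital twins, time-series analysis, equipment defect detection, equipment anomaly detection, and intelligent fault diagnosis and prognostics and health management (PHM) of equipment.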