Analysis of the migration code in the qemu-kvm-1.1.0 source tree

This analysis is based on the qemu-kvm-1.1.0 source code.

First, the hmp-commands.hx file in the source tree contains the following:

```
{
    .name       = "migrate",    /* command name used on the monitor command line */
    .args_type  = "detach:-d,blk:-b,inc:-i,uri:s",
    .params     = "[-d] [-b] [-i] uri", /* important: the command's extra arguments, analysed below */
    .help       = "migrate to URI (using -d to not wait for completion)"
                  "\n\t\t\t -b for migration without shared storage with"
                  " full copy of disk\n\t\t\t -i for migration without "
                  "shared storage with incremental copy of disk "
                  "(base image shared between src and destination)",
    .mhandler.cmd = hmp_migrate, /* the corresponding handler function */
},

STEXI
@item migrate [-d] [-b] [-i] @var{uri}
@findex migrate
Migrate to @var{uri} (using -d to not wait for completion).
-b for migration with full copy of disk
-i for migration with incremental copy of disk (base image is shared)
ETEXI
```

This entry belongs to the migrate command. As you can see, the function that handles the migrate command is hmp_migrate (in hmp.c).

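qemu-kvm keeps the monitor commands, their arguments and related constants in hmp-commands.hx. The hxtool script then uses that file to generate the C header hmp-commands.h.

When converting with `sh /root/qemu-kvm-1.1.0/scripts/hxtool -h < /root/qemu-kvm-1.1.0/hmp-commands.hx > hmp-commands.h`, the text between STEXI and ETEXI (the command's Texinfo documentation) is not written into the header.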

The source file monitor.c contains the following:

```c
/* mon_cmds and info_cmds would be sorted at runtime */
static mon_cmd_t mon_cmds[] = {
#include "hmp-commands.h"
    { NULL, NULL, },
};
```

So, in the end, hmp-commands.hx serves to initialize the struct array mon_cmds.
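To make the role of the generated header concrete, here is a minimal, self-contained sketch of a command table built the same way: one initializer per command, followed by a NULL sentinel, plus a lookup by name. The type MonCmdSketch, the placeholder handler and find_cmd are hypothetical simplifications, not QEMU's definitions. The real monitor sorts the table at runtime (as the comment above says), and its lookup code may differ from this linear scan.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-in for QEMU's mon_cmd_t. */
typedef struct {
    const char *name;        /* command name typed in the monitor */
    const char *args_type;   /* argument spec, e.g. "uri:s" */
    const char *params;      /* usage string shown in help */
    int (*handler)(void);    /* stands in for .mhandler.cmd */
} MonCmdSketch;

/* Placeholder for hmp_migrate; returns a marker value for the demo. */
static int fake_hmp_migrate(void)
{
    return 42;
}

/* Roughly what the array looks like once the generated header has been
 * pasted in: one initializer per command, then a NULL sentinel. */
static const MonCmdSketch mon_cmds_sketch[] = {
    { "migrate", "detach:-d,blk:-b,inc:-i,uri:s", "[-d] [-b] [-i] uri",
      fake_hmp_migrate },
    { NULL, NULL, NULL, NULL },
};

/* Scan up to the sentinel and return the entry whose name matches. */
static const MonCmdSketch *find_cmd(const char *name)
{
    for (const MonCmdSketch *c = mon_cmds_sketch; c->name != NULL; c++) {
        if (strcmp(c->name, name) == 0) {
            return c;
        }
    }
    return NULL;
}
```

Because the sentinel marks the end of the table, adding a command to the .hx file needs no other bookkeeping in the C code.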

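Next, let's see what happens once hmp_migrate is entered.

The call chain is as follows (each line is one function call):

1. hmp_migrate (hmp refers to the human monitor)
2. qmp_migrate (qmp stands for QEMU Monitor Protocol)
3. tcp_start_outgoing_migration (taking a migration URI that starts with tcp as the example)
4. migrate_fd_connect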

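migrate_fd_connect first calls qemu_savevm_state_begin, which initializes the migration, and then enters migrate_fd_put_ready.

migrate_fd_put_ready first calls qemu_savevm_state_iterate, which does the main migration work. Once that reports that the remaining state is small enough, migrate_fd_put_ready stops the source VM and calls qemu_savevm_state_complete to send the rest, and execution switches over from the old VM to the new one.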
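The control flow above can be sketched as follows. This is a simplified model, not QEMU code. The fake_* functions are hypothetical stand-ins for qemu_savevm_state_begin, qemu_savevm_state_iterate and qemu_savevm_state_complete. The repeated invocation of migrate_fd_put_ready is modelled as a plain loop.

```c
/* Hypothetical stand-ins for the savevm entry points. */
static int rounds_left = 3;   /* pretend three iterations are needed */
static int vm_running = 1;    /* source VM state */

static int fake_state_begin(void)
{
    return 0;                 /* e.g. mark all RAM dirty */
}

/* Returns 1 once the remaining state fits in the downtime budget. */
static int fake_state_iterate(void)
{
    return --rounds_left <= 0 ? 1 : 0;
}

/* The final pass must only run with the source VM stopped. */
static int fake_state_complete(void)
{
    return vm_running ? -1 : 0;
}

/* One invocation models one call of migrate_fd_put_ready:
 * -1 = failed, 0 = call me again, 1 = migration finished. */
static int put_ready_sketch(void)
{
    int ret = fake_state_iterate();
    if (ret < 0) {
        return -1;
    }
    if (ret == 1) {
        vm_running = 0;                      /* stop the source VM */
        return fake_state_complete() < 0 ? -1 : 1;
    }
    return 0;
}

/* Begin once, then keep calling put_ready until it stops returning 0.
 * *calls receives the number of put_ready invocations. */
static int migrate_sketch(int *calls)
{
    int ret;

    if (fake_state_begin() < 0) {
        return -1;
    }
    *calls = 0;
    do {
        ret = put_ready_sketch();
        (*calls)++;
    } while (ret == 0);
    return ret;
}
```

The key design point is that the VM keeps running through every call that returns 0. It is stopped only after the iterate step reports convergence, so the downtime covers just the final pass.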

Now let's look at how qemu_savevm_state_begin and qemu_savevm_state_iterate actually work.

qemu_savevm_state_begin contains the following code:

```c
/* Pass the -b / -i settings to every handler that accepts them */
QTAILQ_FOREACH(se, &savevm_handlers, entry) {
    if (se->set_params == NULL) {
        continue;
    }
    se->set_params(blk_enable, shared, se->opaque);
}

/* Stream header */
qemu_put_be32(f, QEMU_VM_FILE_MAGIC);
qemu_put_be32(f, QEMU_VM_FILE_VERSION);

QTAILQ_FOREACH(se, &savevm_handlers, entry) {
    int len;

    if (se->save_live_state == NULL)
        continue;

    /* Section type */
    qemu_put_byte(f, QEMU_VM_SECTION_START);
    qemu_put_be32(f, se->section_id);

    /* ID string */
    len = strlen(se->idstr);
    qemu_put_byte(f, len);
    qemu_put_buffer(f, (uint8_t *)se->idstr, len);

    qemu_put_be32(f, se->instance_id);
    qemu_put_be32(f, se->version_id);

    ret = se->save_live_state(f, QEMU_VM_SECTION_START, se->opaque);
    if (ret < 0) {
        qemu_savevm_state_cancel(f);
        return ret;
    }
}

ret = qemu_file_get_error(f);
if (ret != 0) {
    qemu_savevm_state_cancel(f);
}
```
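The header bytes written by this loop can be reproduced with a small stand-alone sketch. The buffer type and helper names are hypothetical stand-ins for QEMUFile and qemu_put_byte / qemu_put_be32 / qemu_put_buffer. The magic and version values are the ones we believe savevm.c defines for QEMU_VM_FILE_MAGIC ("QEVM") and QEMU_VM_FILE_VERSION. The section, instance and version numbers in the usage below are arbitrary example values.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Values as we understand them from savevm.c (assumption). */
#define VM_FILE_MAGIC    0x5145564dU   /* "QEVM" */
#define VM_FILE_VERSION  0x00000003U
#define VM_SECTION_START 0x01

/* Hypothetical in-memory stand-in for QEMUFile (no overflow check). */
typedef struct {
    uint8_t data[512];
    size_t len;
} Buf;

static void put_byte(Buf *b, uint8_t v)
{
    b->data[b->len++] = v;
}

/* Most significant byte first, as qemu_put_be32 does. */
static void put_be32(Buf *b, uint32_t v)
{
    put_byte(b, (uint8_t)(v >> 24));
    put_byte(b, (uint8_t)(v >> 16));
    put_byte(b, (uint8_t)(v >> 8));
    put_byte(b, (uint8_t)v);
}

static void put_buffer(Buf *b, const void *p, size_t n)
{
    memcpy(b->data + b->len, p, n);
    b->len += n;
}

/* Written once at the start of the stream. */
static void write_file_header(Buf *b)
{
    put_be32(b, VM_FILE_MAGIC);
    put_be32(b, VM_FILE_VERSION);
}

/* Written once per live handler, before its own START payload. */
static void write_section_start(Buf *b, uint32_t section_id, const char *idstr,
                                uint32_t instance_id, uint32_t version_id)
{
    size_t len = strlen(idstr);   /* idstr[256] => length fits in a byte */

    put_byte(b, VM_SECTION_START);
    put_be32(b, section_id);
    put_byte(b, (uint8_t)len);
    put_buffer(b, idstr, len);
    put_be32(b, instance_id);
    put_be32(b, version_id);
}
```

For example, writing the file header followed by a START section for "ram" (section 0, instance 0, version 4) yields 25 bytes. These break down as 8 for the file header, 1 for the section type, 4 for section_id, 1 length byte, 3 bytes of "ram", and 4 each for instance_id and version_id.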

qemu_savevm_state_iterate contains the following code:

```c
QTAILQ_FOREACH(se, &savevm_handlers, entry) {
    if (se->save_live_state == NULL)
        continue;

    /* Section type */
    qemu_put_byte(f, QEMU_VM_SECTION_PART);
    qemu_put_be32(f, se->section_id);

    ret = se->save_live_state(f, QEMU_VM_SECTION_PART, se->opaque);
    if (ret <= 0) {
        /* Do not proceed to the next vmstate before this one reported
           completion of the current stage. This serializes the migration
           and reduces the probability that a faster changing state is
           synchronized over and over again. */
        break;
    }
}
if (ret != 0) {
    return ret;
}
ret = qemu_file_get_error(f);
if (ret != 0) {
    qemu_savevm_state_cancel(f);
}
```

The two snippets are quite similar. The material below explains what they are doing.

SaveStateEntry is the core structure of VM live migration (defined in savevm.c):

```c
typedef struct SaveStateEntry {
    QTAILQ_ENTRY(SaveStateEntry) entry;
    char idstr[256];
    int instance_id;
    int alias_id;
    int version_id;
    int section_id;
    SaveSetParamsHandler *set_params;
    SaveLiveStateHandler *save_live_state;
    SaveStateHandler *save_state;
    LoadStateHandler *load_state;
    const VMStateDescription *vmsd;
    void *opaque;
    CompatEntry *compat;
    int no_migrate;
    int is_ram;
} SaveStateEntry;
```

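Note the member save_live_state: this function pointer points to the device-specific migration function.

Devices whose state has to be transferred iteratively while the guest keeps running (RAM, for example) register by calling register_savevm_live. They supply a SaveLiveStateHandler *save_live_state function, which the migration code invokes at each stage of live migration. Entries registered without such a handler have save_live_state set to NULL, and the two loops above skip them.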

Defined in savevm.c:

```c
/* TODO: Individual devices generally have very little idea about the rest
   of the system, so instance_id should be removed/replaced.
   Meanwhile pass -1 as instance_id if you do not already have a clearly
   distinguishing id for all instances of your device class. */
int register_savevm_live(DeviceState *dev,
                         const char *idstr,
                         int instance_id,
                         int version_id,
                         SaveSetParamsHandler *set_params,
                         SaveLiveStateHandler *save_live_state,
                         SaveStateHandler *save_state,
                         LoadStateHandler *load_state,
                         void *opaque)
{
    SaveStateEntry *se;

    se = g_malloc0(sizeof(SaveStateEntry));
    se->version_id = version_id;
    se->section_id = global_section_id++;
    se->set_params = set_params;
    se->save_live_state = save_live_state;
    se->save_state = save_state;
    se->load_state = load_state;
    se->opaque = opaque;
    se->vmsd = NULL;
    se->no_migrate = 0;
    /* if this is a live_savem then set is_ram */
    if (save_live_state != NULL) {
        se->is_ram = 1;
    }

    if (dev && dev->parent_bus && dev->parent_bus->info->get_dev_path) {
        char *id = dev->parent_bus->info->get_dev_path(dev);
        if (id) {
            pstrcpy(se->idstr, sizeof(se->idstr), id);
            pstrcat(se->idstr, sizeof(se->idstr), "/");
            g_free(id);

            se->compat = g_malloc0(sizeof(CompatEntry));
            pstrcpy(se->compat->idstr, sizeof(se->compat->idstr), idstr);
            se->compat->instance_id = instance_id == -1 ?
                         calculate_compat_instance_id(idstr) : instance_id;
            instance_id = -1;
        }
    }
    pstrcat(se->idstr, sizeof(se->idstr), idstr);

    if (instance_id == -1) {
        se->instance_id = calculate_new_instance_id(se->idstr);
    } else {
        se->instance_id = instance_id;
    }
    assert(!se->compat || se->instance_id == 0);
    /* add at the end of list */
    QTAILQ_INSERT_TAIL(&savevm_handlers, se, entry);
    return 0;
}
```

After registration, the SaveStateEntry object has been appended to the savevm_handlers list, a linked list made up of SaveStateEntry objects.
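The registration and the iterate loop shown earlier can be modelled together in a few lines. This sketch is hypothetical: Entry, register_live and iterate_pass are simplified stand-ins for SaveStateEntry, register_savevm_live and qemu_savevm_state_iterate, and a hand-written tail pointer replaces QTAILQ. It keeps the two behaviours worth noticing. Entries without a live handler are skipped, and a pass stops at the first handler that has not finished.

```c
#include <stddef.h>
#include <stdlib.h>

#define STAGE_PART 2   /* QEMU_VM_SECTION_PART */

typedef int (*SaveLiveFn)(int stage, void *opaque);

/* Hypothetical cut-down SaveStateEntry. */
typedef struct Entry {
    struct Entry *next;
    int section_id;
    SaveLiveFn save_live_state;   /* NULL: no live state to iterate */
    void *opaque;
} Entry;

static Entry *head = NULL;
static Entry **tail = &head;      /* where the next entry gets linked */
static int global_section_id = 0;

/* Append at the end of the list, like QTAILQ_INSERT_TAIL. */
static Entry *register_live(SaveLiveFn fn, void *opaque)
{
    Entry *se = calloc(1, sizeof(*se));

    if (se == NULL) {
        return NULL;
    }
    se->section_id = global_section_id++;
    se->save_live_state = fn;
    se->opaque = opaque;
    *tail = se;
    tail = &se->next;
    return se;
}

/* One iterate pass: skip entries without a live handler and stop at the
 * first handler that has not finished. Returns 1 only if every live
 * handler reported completion. */
static int iterate_pass(void)
{
    int ret = 1;

    for (Entry *se = head; se != NULL; se = se->next) {
        if (se->save_live_state == NULL) {
            continue;
        }
        ret = se->save_live_state(STAGE_PART, se->opaque);
        if (ret <= 0) {
            break;
        }
    }
    return ret;
}

/* Example handler: opaque points at the number of rounds still needed. */
static int countdown(int stage, void *opaque)
{
    int *rounds = opaque;

    (void)stage;
    return --(*rounds) <= 0;
}
```

Consider one plain device plus two live handlers that need two and one more rounds respectively. The first pass stops at the first live handler and never touches the second. The second pass runs both and returns 1.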

The main function in vl.c is where the whole QEMU program starts.

For RAM, main in vl.c makes the following call:

```c
register_savevm_live(NULL, "ram", 0, 4, NULL, ram_save_live, NULL,
                     ram_load, NULL);
```

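As you can see, ram_save_live is passed in as the save_live_state function pointer. It is the function that actually implements live migration of RAM.

So, going back to the earlier discussion: for RAM, qemu_savevm_state_begin and qemu_savevm_state_iterate actually end up calling ram_save_live.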

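Here is a brief overview of how the pre-copy algorithm is implemented:

```c
int ram_save_live(QEMUFile *f, int stage, void *opaque)
```

ram_save_live performs a different phase of the work depending on the value passed in stage:

- qemu_savevm_state_begin passes QEMU_VM_SECTION_START (1). This handles the start-up work, for example marking every memory page dirty.
- qemu_savevm_state_iterate passes QEMU_VM_SECTION_PART (2). This is the phase in which memory is copied iteratively.
- qemu_savevm_state_complete passes QEMU_VM_SECTION_END (3), once the source VM has been stopped.
- A negative value means the migration was cancelled.

Each call of qemu_savevm_state_iterate invokes ram_save_live once, that is, one iteration. Because migrate_fd_put_ready calls qemu_savevm_state_iterate again and again, ram_save_live keeps iterating until the target is reached (expected_time <= migrate_max_downtime()), at which point it returns 1.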
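The stage dispatch can be illustrated with a toy handler. Everything here is hypothetical. RamModel counts pages instead of tracking real memory, and pages_per_call stands in for the rate limit. Convergence is also checked against a page budget rather than the time estimate that ram_save_live actually computes.

```c
#include <stdint.h>

/* Stage values as passed by savevm.c; negative means "cancelled". */
enum { STAGE_START = 1, STAGE_PART = 2, STAGE_END = 3 };

/* Hypothetical RAM model measured in pages. */
typedef struct {
    uint64_t total_pages;
    uint64_t dirty_pages;
    uint64_t pages_per_call;     /* stands in for the rate limit */
    uint64_t max_downtime_pages; /* what may still be sent while stopped */
    int logging;                 /* dirty logging on/off */
} RamModel;

static int ram_save_live_sketch(RamModel *m, int stage)
{
    if (stage < 0) {                     /* cancel: stop dirty logging */
        m->logging = 0;
        return 0;
    }
    if (stage == STAGE_START) {          /* phase 1: everything is dirty */
        m->dirty_pages = m->total_pages;
        m->logging = 1;
    }
    /* send as much as the rate limit allows */
    uint64_t n = m->dirty_pages < m->pages_per_call ? m->dirty_pages
                                                     : m->pages_per_call;
    m->dirty_pages -= n;
    if (stage == STAGE_END) {            /* VM stopped: flush the rest */
        m->dirty_pages = 0;
        m->logging = 0;
    }
    /* only a PART call can report convergence */
    return stage == STAGE_PART && m->dirty_pages <= m->max_downtime_pages;
}
```

With 1000 pages, 300 pages per call and a budget of 100 pages, the START call leaves 700 dirty pages. The first PART call leaves 400 and returns 0. The second leaves 100 and returns 1. The END call then flushes the remainder.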

The pre-copy algorithm is described in more detail below:

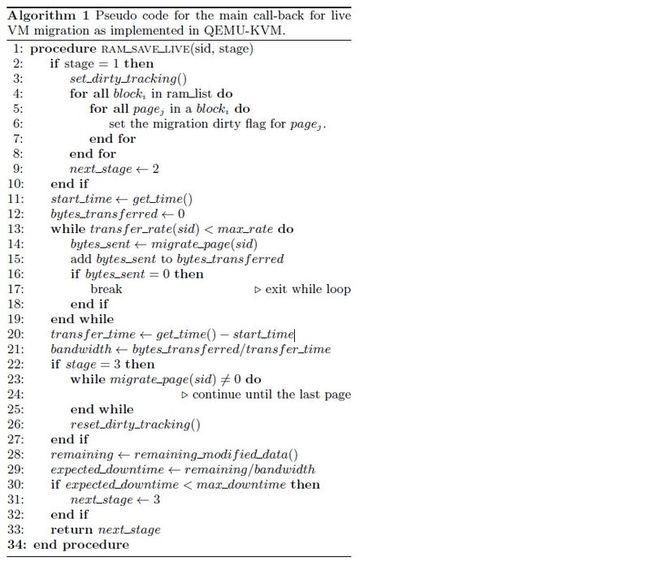

The following is excerpted from the paper "Optimized Pre-Copy Live Migration for Memory Intensive" (the paper also gives pseudocode for the algorithm):

2.1 The KVM Implementation

KVM, as of the QEMU-KVM release 0.14.0, uses Algorithm 1. We show the pseudo-code for the call-back function invoked by the helper thread that manages migration. This code runs sequentially, regardless of the number of processors in the virtual machine and it proceeds in three phases.

In the first phase (lines 2-10), all memory pages are marked dirty and the modification tracking mechanism is initialized.

In the second phase, pages are copied if they are marked dirty. The dirty page is reset, and the page write-permission is revoked such that an attempt by an application on the VM to modify the page will result in a soft page fault. While servicing this fault, in addition to granting write-permission, the KVM driver sets the dirty page again to one, indicating the need for retransmission.

Typically, this second phase is the longest in iterative precopy. Pages are being copied as long as the maximum transfer rate to the destination machine is not exceeded. Pages that are modified but not copied are used to estimate the downtime if the migration proceeds to the third stage. If the estimated downtime is still high, the algorithm iterates until it predicts a value lower than the target value. When the target downtime is met, the migration enters the third and final stage, where the source virtual machine (and applications) is stopped. Dirty pages are transmitted to the destination machine, where execution is resumed.
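The three phases described in the excerpt can be simulated with a simple page-count model. This is a sketch under stated assumptions, not the QEMU implementation:

- Bandwidth and dirty rate are fixed per round.
- Re-dirtied pages are assumed not to overlap the pages still waiting to be sent, which is a pessimistic simplification.
- max_rounds is only a guard for the simulation. The ram_save_live code in this article has no such cap.

The names PrecopyParams and precopy_rounds are hypothetical.

```c
#include <stdint.h>

/* Hypothetical parameters, all in pages. */
typedef struct {
    uint64_t total;        /* guest memory size */
    uint64_t bandwidth;    /* pages that can be sent in one round */
    uint64_t dirty_rate;   /* pages the guest re-dirties during one round */
    uint64_t target;       /* pages that may be sent during the downtime */
    int max_rounds;        /* simulation guard only */
} PrecopyParams;

/* Returns the number of phase-2 rounds until the dirty set falls to the
 * target, or -1 if that does not happen within max_rounds.
 * *stop_copy receives the pages left for phase 3. */
static int precopy_rounds(const PrecopyParams *p, uint64_t *stop_copy)
{
    uint64_t dirty = p->total;                /* phase 1: all dirty */

    for (int round = 1; round <= p->max_rounds; round++) {
        /* phase 2: copy as many dirty pages as bandwidth allows */
        uint64_t sent = dirty < p->bandwidth ? dirty : p->bandwidth;
        dirty -= sent;

        /* the guest keeps writing: some clean pages become dirty again */
        uint64_t clean = p->total - dirty;
        uint64_t redirtied = p->dirty_rate < clean ? p->dirty_rate : clean;
        dirty += redirtied;

        if (dirty <= p->target) {             /* downtime estimate met */
            *stop_copy = dirty;               /* phase 3: stop and send */
            return round;
        }
    }
    *stop_copy = dirty;
    return -1;
}
```

Take 1000 pages, 400 pages per round and 100 re-dirtied pages per round. The dirty set shrinks from 1000 to 700, 400 and then 100, so a target of 150 is met after three rounds. If the guest re-dirties pages faster than they can be sent (500 per round, say), the dirty set never shrinks, and the loop only ends at the guard.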

The source code is as follows:

```c
int ram_save_live(QEMUFile *f, int stage, void *opaque)
{
    uint64_t bytes_transferred_last;
    double bwidth = 0;
    uint64_t expected_time = 0;
    int ret;

    if (stage == 1) {
        bytes_transferred = 0;
        last_block_sent = NULL;
        ram_save_set_last_block(NULL, 0);
    }
    if (outgoing_postcopy) {
        return postcopy_outgoing_ram_save_live(f, stage, opaque);
    }

    /* stage < 0: migration cancelled, stop dirty logging */
    if (stage < 0) {
        memory_global_dirty_log_stop();
        return 0;
    }

    memory_global_sync_dirty_bitmap(get_system_memory());

    /* stage 1 (QEMU_VM_SECTION_START): first phase */
    if (stage == 1) {
        sort_ram_list();

        /* Make sure all dirty bits are set */
        ram_save_memory_set_dirty();
        memory_global_dirty_log_start();
        ram_save_live_mem_size(f);
    }

    /* send dirty pages until the rate limit is hit or none are left */
    bytes_transferred_last = bytes_transferred;
    bwidth = qemu_get_clock_ns(rt_clock);

    while ((ret = qemu_file_rate_limit(f)) == 0) {
        if (ram_save_block(f) == 0) { /* no more blocks */
            break;
        }
    }

    if (ret < 0) {
        return ret;
    }

    /* bandwidth of this round, in bytes per nanosecond */
    bwidth = qemu_get_clock_ns(rt_clock) - bwidth;
    bwidth = (bytes_transferred - bytes_transferred_last) / bwidth;

    /* if we haven't transferred anything this round, force expected_time to a
     * very high value, but without crashing */
    if (bwidth == 0) {
        bwidth = 0.000001;
    }

    /* try transferring iterative blocks of memory */
    /* stage 3 (QEMU_VM_SECTION_END): source VM stopped, final phase */
    if (stage == 3) {
        /* flush all remaining blocks regardless of rate limiting */
        while (ram_save_block(f) != 0) {
            /* nothing */
        }
        memory_global_dirty_log_stop();
    }

    qemu_put_be64(f, RAM_SAVE_FLAG_EOS);

    /* estimated time (ns) to send the pages that are still dirty;
     * only a stage-2 call can report convergence */
    expected_time = ram_save_remaining() * TARGET_PAGE_SIZE / bwidth;

    return (stage == 2) && (expected_time <= migrate_max_downtime());
}
```
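The convergence test at the end of ram_save_live is plain unit arithmetic. bwidth is in bytes per nanosecond, so remaining bytes divided by bwidth gives nanoseconds, which is compared with migrate_max_downtime(), also in nanoseconds. The sketch below reproduces only that arithmetic. converged is a hypothetical helper, and a 4096-byte page is assumed for TARGET_PAGE_SIZE (typical for x86).

```c
#include <stdint.h>

#define PAGE_SIZE_SKETCH 4096ULL   /* assumed TARGET_PAGE_SIZE */

/* bytes_sent / elapsed_ns is the bandwidth in bytes per ns;
 * remaining bytes / bandwidth is the expected downtime in ns.
 * Returns 1 if that fits within max_downtime_ns. */
static int converged(uint64_t bytes_sent, uint64_t elapsed_ns,
                     uint64_t remaining_pages, uint64_t max_downtime_ns,
                     uint64_t *expected_ns)
{
    double bwidth = 0;

    if (elapsed_ns != 0) {           /* avoid dividing by a zero interval */
        bwidth = (double)bytes_sent / (double)elapsed_ns;
    }
    if (bwidth == 0) {
        bwidth = 0.000001;           /* same guard as ram_save_live */
    }
    *expected_ns = (uint64_t)(remaining_pages * PAGE_SIZE_SKETCH / bwidth);
    return *expected_ns <= max_downtime_ns;
}
```

For example, 1,000,000,000 bytes sent in 4 s gives 0.25 bytes/ns (250 MB/s). With 1000 dirty pages left (4,096,000 bytes), the estimate is 16,384,000 ns, about 16.4 ms. That fits within a 30 ms limit (30,000,000 ns, which we believe is the default max_downtime in this release). With 2000 pages left, the estimate is about 32.8 ms, so another round is needed.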

Next, let's look at how hmp_migrate receives its arguments.

Taking the migration command as an example, the command we run in the QEMU monitor of the source VM might look like this:

```
migrate -d tcp:10.10.10.1:4444
```

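Here -d means "not wait for completion". With this flag, the monitor prompt returns without waiting for the migration to finish, so the user can run other monitor commands while it is in progress (for example, to check the migration status). Without it, the prompt only returns after the migration task has completed.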

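tcp:10.10.10.1:4444 is the URI of the destination. After the tcp: prefix come the destination host's IP address and the port on which it listens for the incoming migration.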
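To make the URI layout concrete, here is a hypothetical helper that splits such a URI into host and port. QEMU itself does not do it this way: as the call chain above shows, the part after tcp: is handed to tcp_start_outgoing_migration. The sketch also does not treat bracketed IPv6 addresses specially.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: split "tcp:HOST:PORT" into HOST and PORT.
 * Returns 0 on success, -1 otherwise. */
static int parse_tcp_uri(const char *uri, char *host, size_t host_len,
                         int *port)
{
    static const char prefix[] = "tcp:";
    const char *hp, *colon;
    char *end;
    long p;
    size_t n;

    if (strncmp(uri, prefix, sizeof(prefix) - 1) != 0) {
        return -1;                            /* not a tcp: URI */
    }
    hp = uri + sizeof(prefix) - 1;            /* "HOST:PORT" */
    colon = strrchr(hp, ':');
    if (colon == NULL || colon == hp) {
        return -1;                            /* no port or empty host */
    }
    n = (size_t)(colon - hp);
    if (n >= host_len) {
        return -1;                            /* host does not fit */
    }
    memcpy(host, hp, n);
    host[n] = '\0';

    p = strtol(colon + 1, &end, 10);
    if (end == colon + 1 || *end != '\0' || p <= 0 || p > 65535) {
        return -1;                            /* port must be 1..65535 */
    }
    *port = (int)p;
    return 0;
}
```

Applied to the example command above, it yields host "10.10.10.1" and port 4444.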

In hmp-commands.hx, the migrate entry contains this line:

```
.args_type  = "detach:-d,blk:-b,inc:-i,uri:s",
```

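In fact, the -d we type on the command line corresponds to detach and is stored in a qdict (think of a qdict as a table holding the command-line arguments). Every entry in the qdict is a key-value pair. For example, the key "detach" has the value 1, meaning -d was present on the command line, and the key "uri" has the value "tcp:10.10.10.1:4444".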

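So how does the "-d" we typed end up in the qdict under the key "detach"? The function monitor_parse_command in monitor.c explains it.

This function parses the command line by comparing the command's arguments against the .args_type member of the corresponding mon_cmd_t structure. For example, if the migrate command carries "-d", the function finds "detach:-d" in .args_type, stores detach as a key in the qdict, and gives it the value 1. uri is handled in a similar way.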

The qdict only holds key-value pairs for the arguments that were actually given on the monitor command line.
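A toy version of this matching is sketched below. It is a hypothetical simplification of monitor_parse_command:

- Only the "-x" flag type and the "s" string type are handled.
- The specs are walked in the order they appear in args_type.
- Dict is a tiny array standing in for QDict.

An absent flag simply gets no entry, which is why the reading side needs a default (dict_get_flag here).

```c
#include <stdio.h>
#include <string.h>

#define MAX_PAIRS 8

/* Toy stand-in for QDict: a small array of string key/value pairs. */
typedef struct {
    struct {
        char key[32];
        char value[128];
    } items[MAX_PAIRS];
    int n;
} Dict;

static int dict_put(Dict *d, const char *key, const char *value)
{
    if (d->n >= MAX_PAIRS) {
        return -1;
    }
    snprintf(d->items[d->n].key, sizeof(d->items[d->n].key), "%s", key);
    snprintf(d->items[d->n].value, sizeof(d->items[d->n].value), "%s", value);
    d->n++;
    return 0;
}

static const char *dict_get(const Dict *d, const char *key)
{
    for (int i = 0; i < d->n; i++) {
        if (strcmp(d->items[i].key, key) == 0) {
            return d->items[i].value;
        }
    }
    return NULL;
}

/* An absent flag reads as 0. */
static int dict_get_flag(const Dict *d, const char *key)
{
    return dict_get(d, key) != NULL;
}

/* Walk the "key:type" specs of args_type in order, consuming tokens.
 * "-x" is an optional flag; "s" is a mandatory string. Returns 0 on
 * success, -1 on a missing, unknown or leftover argument. */
static int parse_command(const char *args_type, int argc, const char *argv[],
                         Dict *d)
{
    char spec[256];
    int i = 0;                                /* next unconsumed token */

    snprintf(spec, sizeof(spec), "%s", args_type);
    for (char *item = strtok(spec, ","); item != NULL;
         item = strtok(NULL, ",")) {
        char *colon = strchr(item, ':');
        if (colon == NULL) {
            return -1;
        }
        *colon = '\0';
        const char *key = item;
        const char *type = colon + 1;

        if (type[0] == '-') {
            if (i < argc && strcmp(argv[i], type) == 0) {
                if (dict_put(d, key, "1") < 0) {
                    return -1;
                }
                i++;
            }                                 /* flag absent: no entry */
        } else if (strcmp(type, "s") == 0) {
            if (i >= argc || dict_put(d, key, argv[i]) < 0) {
                return -1;
            }
            i++;
        } else {
            return -1;                        /* type not handled here */
        }
    }
    return i == argc ? 0 : -1;
}
```

Parsing the tokens "-d" and "tcp:10.10.10.1:4444" against the migrate spec produces exactly two entries, detach = "1" and uri = "tcp:10.10.10.1:4444". No entries are created for blk or inc.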

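If we want to modify the migration algorithm, this parsing step does not need to change. All we have to do is add the corresponding command-line argument to the migrate entry in hmp-commands.hx and then read it in hmp_migrate.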