大数据基础之Flume——Flume基础及Flume agent配置以及自定义拦截器

Flume简介

- Flume用于将多种来源的日志以流的方式传输至Hadoop或者其他目的地

- 一种可靠、可用的高效分布式数据收集服务 - Flume拥有基于数据流上的简单灵活架构,支持容错、故障转移与恢复

- 由Cloudera 2009年捐赠给Apache,现为Apache顶级项目

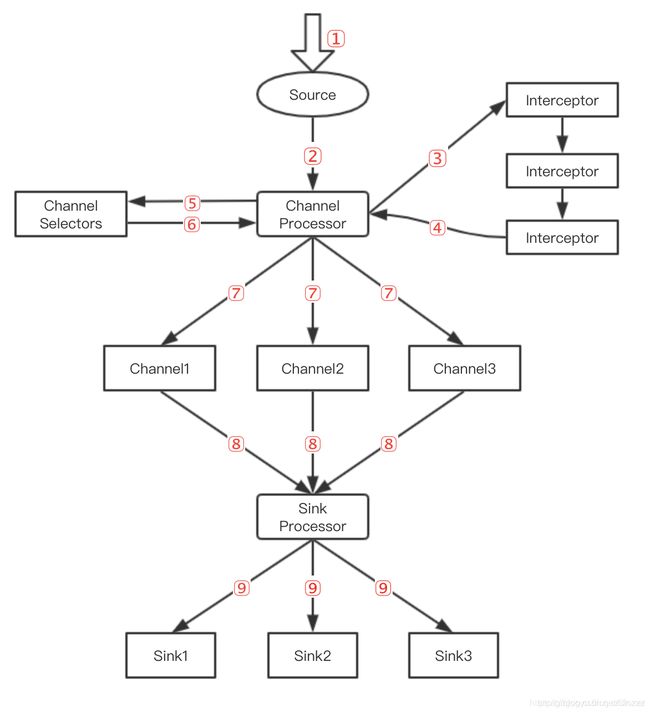

Flume架构

- Client:客户端,数据产生的地方,如Web服务器

- Event:事件,指通过Agent传输的单个数据包,如日志数据通常对应一行数据

- Agent:代理,一个独立的JVM进程

- Flume以一个或多个Agent部署运行

- Agent包含三个组件

- Source

- Channel

- Sink

Flume工作流程:

因为Flume的配置每种文件源、管道、输出在官网上都有详细的讲解和配置案例,搭配过多,我这里就不一一展示,仅展示一些工作中经常用的案例

source(源)

Spooling Directory Source

从磁盘文件夹中获取文件数据,可以避免重启或者发送失败后数据丢失,还可以用于监控文件夹新文件

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | spooldir |

| spoolDir | - | 需要读取的文件夹的路径 |

| fileSuffix | .COMPLETED | 文件读取完成后添加的后缀 |

| deletePolicy | never | 文件完成后删除策略:never和immediate |

Http源

用于接收HTTP的GET和POST请求

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | http |

| port | - | 监听的端口号 |

| bind | 0.0.0.0 | 绑定IP |

| handler | org.apache.flume.source.http.JSONHandler | 数据处理程序类全名 |

avro源

监听Avro端口,并从外部Avro客户端接收events

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | avro |

| bind | - | 绑定IP地址 |

| port | - | 端口 |

| threads | - | 最大工作线程数量 |

Channel(管道)

Memory Channel

event保存在Java Heap中,如果允许数据小量丢失,推荐使用

File Channel

event保存在本地文件中,可靠性高,但吞吐量低于Memory Channel

JDBC Channel

event保存在关系数据库中,一般不推荐使用

Kafka Channel

Sinks(输出)

avro sink

作为avro客户端向avro服务端发送avro事件

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | avro |

| bind | - | 绑定IP地址 |

| port | - | 端口 |

| batch-size | 100 | 批量发送事件数量 |

HDFS sink

将事件写入Hadoop分布式文件系统

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | hdfs |

| hdfs.path | - | hdfs文件目录 |

| hdfs.filePrefix | FlumeData | 文件前缀 |

| hdfs.fileSuffix | - | 文件后缀 |

Hive sink

- 包含分隔文本或Json数据流事件直接进入Hive表或分区

- 传入的事件数据字段映射到Hive表中相应的列

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | hive |

| hive.metastore | - | Hive metastore URI |

| hive.database | - | Hive数据库名称 |

| hive.table | - | Hive表名 |

| serializer | - | 序列化器负责从事件中分析出字段并将它们映射为Hive表中的列。序列化器的选择取决于数据的格式。支持序列化器的格式:DELIMITED和JSON |

HBase sink

| 属性 | 缺省值 | 描述 |

|---|---|---|

| Type | - | hbase |

| table | - | 要写入的Hbase表名 |

| columnFamily | - | 要写入的Hbase列簇 |

| zookeeperQuorum | - | 对应hbase.zookeeper.quorum |

| znodeParent | /hbase | zookeeper.znode.parent |

| serializer | org.apache.flume.sink.hbase.SimpleHbaseEventSerializer | 一次事件插入一列 |

| serializer.payloadColumn | - | 列名col1 |

拦截器

- 拦截器可以修改或丢弃事件

- 设置在source和channel之间 - 内置拦截器

- HostInterceptor:在event header中插入“hostname”

- timestampInterceptor:插入时间戳

- StaticInceptor:插入key-value

- UUIDInceptor:插入UUID

下面是我总结了集中工作中常用情况的Agent配置文件的案例

1.监控文件夹,并将结果输出到hdfs上

a2.channels=c2

a2.sources=s2

a2.sinks=k2

a2.sources.s2.type=spooldir

a2.sources.s2.spoolDir=/opt/data

a2.channels.c2.type=memory

//设置该通道中最大可存储的event数量

a2.channels.c2.capacity=10000

//每次从source中拿到的event数量

a2.channels.c2.transactionCapacity=1000

a2.sinks.k2.type=hdfs

a2.sinks.k2.hdfs.path=hdfs://192.168.56.101:9000/tmp/customs

//当文件写入大小达到该值时创建新文件

a2.sinks.k2.hdfs.rollSize=600000

//当文件偏移量达到该值时创建新文件

a2.sinks.k2.hdfs.rollCount=5000

//每次批量写入的event数量

a2.sinks.k2.hdfs.batchSize=500

a2.sinks.k2.channel=c2

a2.sources.s2.channels=c2

2.配置拦截器的写法

a3.channels=c3

a3.sources=s3

a3.sinks=k3

a3.sources.s3.type=spooldir

a3.sources.s3.spoolDir=/opt/data

a3.sources.s3.interceptors=userid_filter

a3.sources.s3.interceptors.userid_filter.type=regex_filter

a3.sources.s3.interceptors.userid_filter.regex=^userid.*

a3.sources.s3.interceptors.userid_filter.excludeEvents=true

a3.channels.c3.type=memory

a3.sinks.k3.type=logger

a3.sources.s3.channels=c3

a3.sinks.k3.channel=c3

3.自定义拦截器的写法:

//自定义拦截器 Java代码

//基本要求:将学生信息表中的男、女替换成数字1、2。

public class UserInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

byte[] body = event.getBody();

String str = new String(body, Charset.forName("UTF-8"));

String[] value = str.split(",");

if (value[2].equals("男")){

value[2] = 1+"";

byte[] bytes = (value[0] +"," + value[1] +"," + value[2] +"," + value[3]).getBytes();

event .setBody(bytes);

}else if (value[2].equals("女")){

value[2] = 2+"";

byte[] bytes = (value[0] +"," + value[1] +"," + value[2] +"," + value[3]).getBytes();

event.setBody(bytes);

}else {

value[2] = 0+"";

byte[] bytes = (value[0] +"," + value[1] +"," + value[2] +"," + value[3]).getBytes();

event.setBody(bytes);

}

return event;

}

@Override

public List<Event> intercept(List<Event> list) {

for (Event event : list) {

intercept(event);

}

return list;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new UserInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

//Flume配置文件写法

a4.sources=s4

a4.channels=c4

a4.sinks=k4

a4.sources.s4.type=spooldir

a4.sources.s4.spoolDir=/opt/data

a4.sources.s4.interceptors=myintec

a4.sources.s4.interceptors.myintec.type=com.kb06.UserInterceptor$Builder

a4.channels.c4.type=memory

a4.sinks.k4.type=logger

a4.sinks.k4.channel=c4

a4.sources.s4.channels=c4

4.监控文件夹输出到kafka上

a5.sources=s5

a5.channels=c5

a5.sinks=k5

a5.sources.s5.type=spooldir

a5.sources.s5.spoolDir=/opt/data

a5.sinks.k5.type=org.apache.flume.sink.kafka.KafkaSink

a5.sinks.k5.kafka.bootstrap.servers=192.168.56.101:9092

a5.sinks.k5.kafka.topic=mypart

a5.channels.c5.type=memory

a5.channels.c5.capacity=10000

a5.channels.c5.transactionCapacity=10000

a5.sinks.k5.channel=c5

a5.sources.s5.channels=c5

5.监控文件夹输出到kafka上,并加有过滤表字段的拦截器

a5.sources=s5

a5.channels=c5

a5.sinks=k5

a5.sources.s5.type=spooldir

a5.sources.s5.spoolDir=/opt/data

a5.sources.s5.interceptors=head_filter

a5.sources.s5.interceptors.head_filter.type=regex_filter

a5.sources.s5.interceptors.head_filter.regex=^event_id.*

a5.sources.s5.interceptors.head_filter.excludeEvents=true

a5.sinks.k5.type=org.apache.flume.sink.kafka.KafkaSink

a5.sinks.k5.kafka.bootstrap.servers=192.168.56.101:9092

a5.sinks.k5.kafka.topic=msgEvent

a5.channels.c5.type=memory

a5.channels.c5.capacity=10000

a5.channels.c5.transactionCapacity=10000

a5.sinks.k5.channel=c5

a5.sources.s5.channels=c5

6.利用Flume监控http端口

a4.sources=s4

a4.channels=c4

a4.sinks=k4

a4.sources.s4.type=http

a4.sources.s4.port=5140

a4.channels.c4.type=memory

a4.sinks.k4.type=logger

a4.sinks.k4.channel=c4

a4.sources.s4.channels=c4

7.TailDir Source(监控文件夹,并生成一个读取位置的JSON文件记录每次读取文件的位置,当Flume发生宕机时,再次启动Flume,也会从上次的结束点去继续读取文件)

a4.sources=s4

a4.channels=c4

a4.sinks=k4

a4.sources.s4.type=TAILDIR

a4.sources.s4.filegroups=f1 f2

a4.sources.s1.filegroups.f1=/opt/data/example.log

a4.sources.s1.filegroups.f2=/opt/data/.*log.*

a4.sources.s1.positionFile=/root/data/taildir_position.json

a4.sources.s1.header.f1.headerKeys=value1

a4.sources.s1.header.f2.headerKeys=value2

a4.sources.s1.header.f2.headerKeys=value3

a1.sources.s1.fileHeader = true

a4.channels.c4.type=memory

a4.sinks.k4.type=logger

启动命令:

flume-ng agent -n a4 -c conf -f /opt/flumeconf/conf_0806_custconf.properties -Dflume.root.logger=INFO,console

//-Dflume.root.logger=INFO,console

表示输出的日志等级为INFO,输出到控制台