Microsoft: DirectShow Development Guide, Chapter 12: Writing DirectShow Source Filters

Of the three classes of Microsoft DirectShow filters—source, transform, and renderer—the source filter is the one that generates the stream data manipulated by the filter graph. Every filter graph has at least one source filter, and by its nature the source filter occupies the ultimate upstream position in the filter graph, coming before any other filters. The source filter is responsible for generating a continuous stream of media samples, beginning when the Filter Graph Manager runs the filter graph and ceasing when the filter graph is paused or stopped.

Source Filter Types

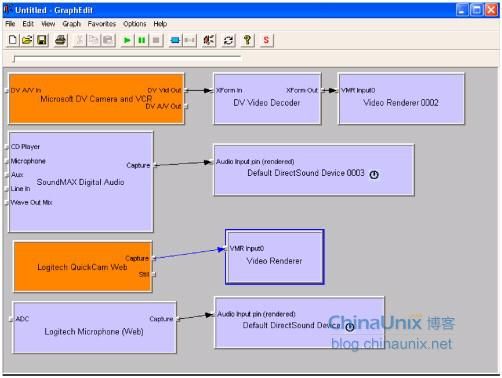

In a broad sense, there are three types of source filters used in DirectShow filter graphs—capture source filters, file source filters, and “creator” source filters. We’re already quite familiar with capture source filters, such as the Microsoft DV Camcorder and VCR filter and the Logitech QuickCam Web filter, which we’ve used in numerous DirectShow applications. A capture source filter captures a live sample, converts it to a stream of media samples, and presents that stream on its output pins. Capture source filters are nearly always associated with devices, and in general, there’s rarely a need for DirectShow programmers to write their own capture source filters. Any device that has a correctly written Windows Driver Model (WDM) driver will automatically appear as a filter available to DirectShow applications. DirectShow puts a filter wrapper around WDM and Video for Windows (VFW) device drivers so that the capture device, for example, can be used in a filter graph as a capture source filter. (See Figure 12-1.) If you really want or need to write your own capture driver filter, you can find the gory details in the Microsoft DirectX SDK documentation and Windows DDK documentation.

Figure 12-1. A capture source filter encapsulates a WDM interface to a hardware device

A file source filter acts as an interface between the world outside a DirectShow filter graph and the filter graph. The File Source filter, for example, makes the Microsoft Windows file system available to DirectShow; it allows the contents of a disk-based file to be read into the filter graph. (See Figure 12-2.) Another filter, the File Source URL filter, takes a URL as its parameter and allows Web-based files to be read into a filter graph. (This is a technique that could potentially be very powerful.) We’ve already used the Stream Buffer Engine’s source filter, a file source filter that reads stream data from files created by the Stream Buffer Engine’s sink filter. The ASF Reader filter takes an ASF file and generates media streams from its contents.

Figure 12-2. The File Source filter followed by a Wave Parser filter

With the notable exceptions of the SBE source filter and the ASF Reader, a file source filter normally sits upstream from a transform filter that acts as a file parser, translating the raw data read in by the file source filter into a series of media samples. The Wave Parser filter, for example, translates the WAV file format into a series of PCM media samples. Because the relationship between a file source filter and a parser filter is driven by the parser filter, the parser filter usually pulls the data from the file source filter. This is the reverse of the situation for capture (“live”) source filters, which produce a regular stream of media samples and then push them to a pin on a downstream filter. Unless you’re adding your own unique file media type to DirectShow, it’s unlikely you’ll need a custom parser transform filter. (You’re a bit on your own here. There aren’t any good examples of parser filters in the DirectX SDK samples.)

Finally there are source filters that create media samples in their entirety. This means that the sample data originates in the filter, based upon the internal settings and state of the filter. You can imagine a creator source filter acting as a “tone generator,” for example, producing a stream of PCM data reflecting a waveform of a particular frequency. (If it were designed correctly, the COM interfaces on such a filter would allow you to adjust the frequency on the fly with a method invocation.) Another creator source filter could produce the color bars that video professionals use to help them adjust their equipment, which would clearly be useful in a DirectShow application targeted at videographers.

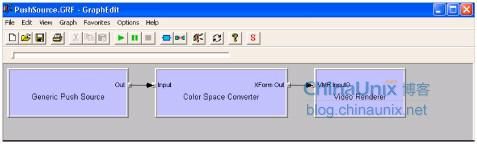

Figure 12-3. The Generic Push Source filter creates its own media stream

The PushSource filter example explored in this chapter (and shown in Figure 12-3) is a creator source filter that produces a media stream with a numbered series of video frames and that stops automatically when a certain number of frames have been generated. (When a source filter runs dry, all filters downstream of it eventually stop, waiting for more stream data.) PushSource is not complicated and only scratches the surface of what’s possible with source filters. An excellent teaching example, PushSource exposes the internals of the source filter and, through that, much of how DirectShow handles the execution of the filter graph.

Source Filter Basics

As explained in the previous section, a source filter usually pushes its media samples to the input pin of a downstream filter. (The exception is file source filters.) Live sources, such as capture source filters, are always push sources because the data is generated somewhere outside the filter graph (such as in a camera or a microphone) and presented through a driver to the filter as a continuous stream of data. The PushSource filter covered in this chapter also pushes media samples to downstream filters, but these samples are generated in system memory rather than from a capture device.

A source filter generally doesn’t have any input pins, but it can have as many output pins as desired. As an example, a source filter could have both capture and preview pins, or video and audio pins, or color and black-and-white pins. Because the output pins on a source filter are the ultimate source of media samples in the filter graph, the source filter fundamentally controls the execution of the filter graph. Filters downstream of a source filter will not begin execution until they receive samples from the source filter. If the source filter stops pushing samples downstream, the downstream filters will empty their buffers of media samples and then wait for more media samples to arrive.

The DirectShow filter graph has at least two execution threads: one or more streaming threads and the application thread. The application thread is created by the Filter Graph Manager and handles messages associated with filter graph execution, such as run, pause, and stop. You’ll have one streaming thread per source filter in the filter graph, so a filter graph with separate source filters to capture audio and video would have at least two streaming threads. A streaming thread is typically shared by all the filters downstream of the source filter that creates it (although downstream filters can create their own threads for special processing needs, as parsers do regularly), and it’s on these threads that transforms and rendering occur.

Once the streaming thread has been created, it enters a loop with the following steps. A free (that is, empty) sample is acquired from the allocator. This sample is then filled with data, timestamped, and delivered downstream. If no samples are available, the thread blocks until a sample becomes available. This loop is iterated as quickly as possible while the filter graph is executing, but the renderer filter can throttle execution of the filter graph. If a renderer’s sample buffers fill up, filters upstream are forced to wait while their buffers fill up, a delay that could eventually propagate all the way back to the source filter. A renderer might block the streaming thread for one of two reasons: the filter graph could be paused, in which case the renderer is not rendering media samples (and the graph backs up with samples); or the renderer could be purposely slowing the flow of samples through the filter graph to ensure that each sample is presented at the appropriate time. When the renderer doesn’t care about presentation time—such as in the case of a filter that renders to a file—the streaming thread runs as quickly as possible.
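
The loop described above can be sketched in portable C++ without any DirectShow types. This is only an illustration under stated assumptions: `Sample`, `FillFn`, `RunStreamingLoop`, and `MakeFrameSource` are hypothetical names, the allocator is reduced to constructing a fresh sample, and neither blocking nor renderer throttling is modelled.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical stand-in for a media sample: a time-stamped payload.
struct Sample {
    int64_t start = 0;  // start time in 100-ns units
    int64_t stop = 0;   // stop time in 100-ns units
    int frame = 0;      // payload: just a frame number in this sketch
};

// Fill callback: returns true if the sample was filled, false at end of stream.
using FillFn = std::function<bool(Sample&)>;

// Acquire an empty sample, let the callback fill and stamp it, deliver it,
// and repeat until the callback reports end of stream.
// "Delivery" here simply appends the sample to `out`.
int RunStreamingLoop(const FillFn& fill, std::vector<Sample>& out) {
    int delivered = 0;
    for (;;) {
        Sample s;             // "acquire" a free sample
        if (!fill(s)) break;  // the source ran dry: leave the loop
        out.push_back(s);     // "deliver" the sample downstream
        ++delivered;
    }
    return delivered;
}

// Example source: produces frames of `frameLength` until `stopTime` is reached.
FillFn MakeFrameSource(int64_t frameLength, int64_t stopTime) {
    assert(frameLength > 0);
    int64_t now = 0;
    int frame = 0;
    return [=](Sample& s) mutable {
        if (now >= stopTime) return false;
        s.start = now;
        s.stop = now + frameLength;
        s.frame = frame++;
        now += frameLength;
        return true;
    };
}
```

In a real filter the acquire step can block when the allocator has no free samples, which is how a slow renderer ends up pacing the source.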

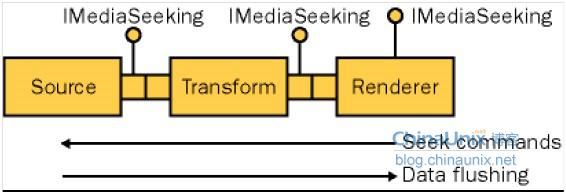

Seeking functionality should be implemented by the source filters where appropriate. Downstream filters and the Filter Graph Manager can use the IMediaSeeking interface to rewind or fast-forward through a stream. Seeking a live stream doesn’t have to be implemented (although it could be useful, such as in the case of a TV broadcast), but source filters should be able to seek as appropriate. When a seek command is issued, the Filter Graph Manager distributes it to all the filters in the filter graph. The command propagates upstream across the filter graph, from input pin to output pin, until a filter that can accept and process the seek command is found. Typically, the filter that creates the streaming thread implements the seek command because that filter is the one providing the graph with media samples and therefore can be sent requests for earlier or later samples. This is generally a source filter, although it can also be a parser transform filter.

When a source filter responds to a seek command, the media samples flowing through the filter graph change abruptly and discontinuously. The filter graph needs to flush all the data residing in the buffers of all downstream filters because all the data in the filter graph has become “stale”; that is, no longer synchronized with the new position in the media stream. The source filter flushes the data by issuing an IPin::BeginFlush call on the pin immediately downstream of the filter. This command forces the downstream filter to flush its buffers, and that downstream filter propagates the IPin::BeginFlush command further downstream until it encounters a renderer filter. The whole process of seeking and flushing looks like that shown in Figure 12-4. Both seek and flush commands are executed on the application thread, independent of the streaming thread.

Figure 12-4. Seek and flush commands move in opposite directions across the filter graph
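
The two directions of travel can be illustrated with a toy model of a linear filter chain. This sketch does not use the DirectShow interfaces; `FilterNode`, `RouteSeekUpstream`, and `FlushDownstream` are hypothetical names, and a real graph can branch rather than form a single chain.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model of one filter in a linear chain.
struct FilterNode {
    std::string name;
    bool canSeek;   // does this filter process seek commands itself?
    bool flushed;   // set when a flush has passed through this filter

    FilterNode(std::string n, bool seekable)
        : name(std::move(n)), canSeek(seekable), flushed(false) {}
};

// chain[0] is the source and chain.back() is the renderer.
// A seek walks upstream from the renderer until some filter handles it.
// Returns the index of that filter, or -1 if none can seek.
int RouteSeekUpstream(const std::vector<FilterNode>& chain) {
    for (int i = static_cast<int>(chain.size()) - 1; i >= 0; --i) {
        if (chain[i].canSeek) return i;
    }
    return -1;
}

// The handling filter then flushes everything downstream of itself,
// one filter at a time, until the renderer is reached.
void FlushDownstream(std::vector<FilterNode>& chain, int handler) {
    assert(handler >= 0 && static_cast<std::size_t>(handler) < chain.size());
    for (std::size_t i = static_cast<std::size_t>(handler) + 1; i < chain.size(); ++i) {
        chain[i].flushed = true;
    }
}
```

For a source, transform, renderer chain in which only the source can seek, the seek is handled at index 0 and the flush marks the transform and the renderer.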

PushSource Source Filter

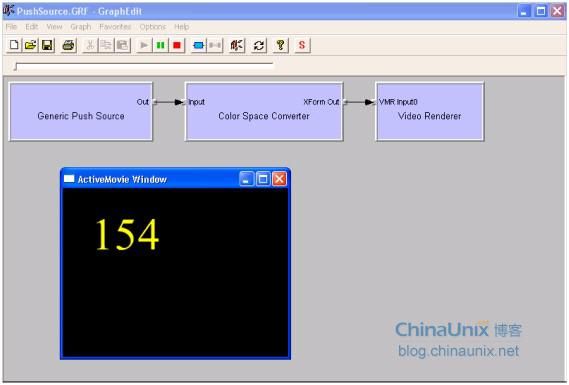

The PushSource source filter example generates a stream of black video frames (in RGB32 format) that have yellow frame numbers printed on them. The PushSource filter generates frames at a rate of 30 per second at standard video speed, and it will keep going for 10 seconds (the value set by the DEFAULT_DURATION constant) for a total of 300 frames. In operation, the PushSource filter—which appears in the list of DirectShow filters under the name “Generic Push Source”—looks like Figure 12-5.

Figure 12-5. The PushSource filter creating a video stream with numbered frames
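
The frame arithmetic behind these numbers can be sketched as follows. DirectShow reference times count 100-nanosecond intervals; everything else here is an assumption made for illustration: `RefTime` stands in for REFERENCE_TIME, and `FrameLengthFromFps`, `FrameToTime`, and `TimeToFrame` are hypothetical helpers that use truncating integer division, which may differ from the helpers in the actual sample.

```cpp
#include <cassert>
#include <cstdint>

typedef int64_t RefTime;                    // stand-in for REFERENCE_TIME
const RefTime kUnitsPerSecond = 10000000;   // 100-ns intervals per second

// Length of one frame, in 100-ns units, at the given frame rate.
RefTime FrameLengthFromFps(int fps) {
    assert(fps > 0);
    return kUnitsPerSecond / fps;   // integer division truncates
}

// Start time of a given frame.
RefTime FrameToTime(int64_t frame, RefTime frameLength) {
    return frame * frameLength;
}

// Frame that contains a given time.
int64_t TimeToFrame(RefTime t, RefTime frameLength) {
    assert(frameLength > 0);
    return t / frameLength;
}
```

Under these assumptions, 30 frames per second gives a frame length of 333,333 units, and a 10-second duration (100,000,000 units) maps to frame 300.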

The DirectShow C++ base classes include three classes that are instrumental in implementing PushSource: CSource, which implements a basic source filter; CSourceStream, which implements the presentation of a stream on the source filter’s output pin; and CSourceSeeking, which implements the seek command on the output pin of the source filter. If your source filter has more than one output pin (some do, most don’t), you can’t use CSourceSeeking as a basis for an implementation of the seek command. In that case, you’d need to coordinate between the two pins, which requires careful thread synchronization that CSourceSeeking doesn’t handle. (You can use CSourceStream for multiple output pins.) In a simple source filter, such as PushSource, only a minimal implementation is required and nearly all of that is in the implementation of methods in CSourceStream and CSourceSeeking.

Implementing the Source Filter Class

The implementation of the source filter class CPushSource for the PushSource example is entirely straightforward. Only two methods need to be overridden: the class constructor, which creates the output pin, and CPushSource::CreateInstance, which calls the class constructor to create an instance of the filter. Here’s the complete implementation of CPushSource:

```cpp
// CPushSource class: Our source filter.
class CPushSource : public CSource
{
private:
    // Constructor is private.
    // You have to use CreateInstance to create it.
    CPushSource(IUnknown *pUnk, HRESULT *phr);

public:
    static CUnknown * WINAPI CreateInstance(IUnknown *pUnk, HRESULT *phr);
};

CPushSource::CPushSource(IUnknown *pUnk, HRESULT *phr)
    : CSource(NAME("PushSource"), pUnk, CLSID_PushSource)
{
    // Create the output pin.
    // The pin magically adds itself to the pin array.
    CPushPin *pPin = new CPushPin(phr, this);
    if (pPin == NULL)
    {
        *phr = E_OUTOFMEMORY;
    }
}

CUnknown * WINAPI CPushSource::CreateInstance(IUnknown *pUnk, HRESULT *phr)
{
    CPushSource *pNewFilter = new CPushSource(pUnk, phr);
    if (pNewFilter == NULL)
    {
        *phr = E_OUTOFMEMORY;
    }
    return pNewFilter;
}
```

This is all the code required to create the source filter object.

Implementing the Source Filter Output Pin Class

All the real work in the PushSource filter takes place inside the filter’s output pin. The output pin class, CPushPin, is declared as a descendant of both the CSourceStream and CSourceSeeking classes, as shown in the following code:

```cpp
class CPushPin : public CSourceStream, public CSourceSeeking
{
private:
    REFERENCE_TIME m_rtStreamTime;  // Stream time (relative to when the graph started)
    REFERENCE_TIME m_rtSourceTime;  // Source time (relative to ourselves)

    // A note about seeking and time stamps:
    // Suppose you have a file source that is N seconds long.
    // If you play the file from the beginning at normal playback rate (1x),
    // the presentation time for each frame will match the source file:
    //   Frame: 0 1 2 ... N
    //   Time:  0 1 2 ... N
    // Now suppose you seek in the file to an arbitrary spot, frame i.
    // After the seek command, the graph's clock resets to zero.
    // Therefore, the first frame delivered after the seek has a
    // presentation time of zero:
    //   Frame: i i+1 i+2 ...
    //   Time:  0 1   2   ...
    // Therefore we have to track stream time and source time independently.
    // (If you do not support seeking, then source time always equals
    // presentation time.)

    REFERENCE_TIME m_rtFrameLength; // Frame length
    int m_iFrameNumber;             // Current frame number that we are rendering
    BOOL m_bDiscontinuity;          // If true, set the discontinuity flag
    CCritSec m_cSharedState;        // Protects our internal state
    ULONG_PTR m_gdiplusToken;       // GDI+ initialization token

    // Private function to draw our bitmaps.
    HRESULT WriteToBuffer(LPWSTR wszText, BYTE *pData,
                          VIDEOINFOHEADER *pVih);

    // Update our internal state after a seek command.
    void UpdateFromSeek();

    // The following methods support seeking using other time formats besides
    // reference time. If you want to support only seek-by-reference-time, you
    // do not have to override these methods.
    STDMETHODIMP SetTimeFormat(const GUID *pFormat);
    STDMETHODIMP GetTimeFormat(GUID *pFormat);
    STDMETHODIMP IsUsingTimeFormat(const GUID *pFormat);
    STDMETHODIMP IsFormatSupported(const GUID *pFormat);
    STDMETHODIMP QueryPreferredFormat(GUID *pFormat);
    STDMETHODIMP ConvertTimeFormat(LONGLONG *pTarget,
                                   const GUID *pTargetFormat,
                                   LONGLONG Source,
                                   const GUID *pSourceFormat);
    STDMETHODIMP SetPositions(LONGLONG *pCurrent, DWORD CurrentFlags,
                              LONGLONG *pStop, DWORD StopFlags);
    STDMETHODIMP GetDuration(LONGLONG *pDuration);
    STDMETHODIMP GetStopPosition(LONGLONG *pStop);

    // Conversions between reference times and frame numbers.
    LONGLONG FrameToTime(LONGLONG frame) {
        LONGLONG f = frame * m_rtFrameLength;
        return f;
    }
    LONGLONG TimeToFrame(LONGLONG rt) { return rt / m_rtFrameLength; }

    GUID m_TimeFormat;  // Which time format is currently active

protected:
    // Override CSourceStream methods.
    HRESULT GetMediaType(CMediaType *pMediaType);
    HRESULT CheckMediaType(const CMediaType *pMediaType);
    HRESULT DecideBufferSize(IMemAllocator *pAlloc,
                             ALLOCATOR_PROPERTIES *pRequest);
    HRESULT FillBuffer(IMediaSample *pSample);

    // The following methods support seeking.
    HRESULT OnThreadStartPlay();
    HRESULT ChangeStart();
    HRESULT ChangeStop();
    HRESULT ChangeRate();
    STDMETHODIMP SetRate(double dRate);

public:
    CPushPin(HRESULT *phr, CSource *pFilter);
    ~CPushPin();

    // Override this to expose IMediaSeeking.
    STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void **ppv);

    // We don't support any quality control.
    STDMETHODIMP Notify(IBaseFilter *pSelf, Quality q)
    {
        return E_FAIL;
    }
};
```

Let’s begin with an examination of the three public methods defined in CPushPin and implemented in the following code: the class constructor, the destructor, and CPushPin::NonDelegatingQueryInterface.

```cpp
CPushPin::CPushPin(HRESULT *phr, CSource *pFilter)
    : CSourceStream(NAME("CPushPin"), phr, pFilter, L"Out"),
      CSourceSeeking(NAME("PushPin2Seek"), (IPin*)this, phr, &m_cSharedState),
      m_rtStreamTime(0),
      m_rtSourceTime(0),
      m_iFrameNumber(0),
      m_rtFrameLength(Fps2FrameLength(DEFAULT_FRAME_RATE))
{
    // Initialize GDI+.
    GdiplusStartupInput gdiplusStartupInput;
    Status s = GdiplusStartup(&m_gdiplusToken, &gdiplusStartupInput, NULL);
    if (s != Ok)
    {
        *phr = E_FAIL;
    }

    // SEEKING: Set the source duration and the initial stop time.
    m_rtDuration = m_rtStop = DEFAULT_DURATION;
}

CPushPin::~CPushPin()
{
    // Shut down GDI+.
    GdiplusShutdown(m_gdiplusToken);
}

STDMETHODIMP CPushPin::NonDelegatingQueryInterface(REFIID riid, void **ppv)
{
    // Only IMediaSeeking is served by CSourceSeeking.
    if (riid == IID_IMediaSeeking)
    {
        return CSourceSeeking::NonDelegatingQueryInterface(riid, ppv);
    }
    // Every other interface request goes to CSourceStream.
    return CSourceStream::NonDelegatingQueryInterface(riid, ppv);
}
```

The class constructor invokes the constructors for the two ancestor classes of CPushPin, CSourceStream and CSourceSeeking, and then the GDI+ (Microsoft’s 2D graphics library) is initialized. GDI+ will create the numbered video frames streamed over the pin. The class destructor shuts down GDI+, and CPushPin::NonDelegatingQueryInterface determines whether a QueryInterface call issued to the output pin is handled by CSourceStream or CSourceSeeking. Because only seek commands are handled by CSourceSeeking, only requests for IID_IMediaSeeking are passed to that implementation; every other request goes to CSourceStream.

Negotiating Connections on a Source Filter

CPushPin has two methods to handle the media type negotiation, CPushPin::GetMediaType and CPushPin::CheckMediaType. These methods, together with the method CPushPin::DecideBufferSize, handle pin-to-pin connection negotiation, as implemented in the following code:

```cpp
HRESULT CPushPin::GetMediaType(CMediaType *pMediaType)
{
    CheckPointer(pMediaType, E_POINTER);
    CAutoLock cAutoLock(m_pFilter->pStateLock());

    // Call our helper function that fills in the media type.
    return CreateRGBVideoType(pMediaType, 32, DEFAULT_WIDTH,
                              DEFAULT_HEIGHT, DEFAULT_FRAME_RATE);
}

HRESULT CPushPin::CheckMediaType(const CMediaType *pMediaType)
{
    CAutoLock lock(m_pFilter->pStateLock());

    // Is it a video type?
    if (pMediaType->majortype != MEDIATYPE_Video)
    {
        return E_FAIL;
    }

    // Is it 32-bit RGB?
    if ((pMediaType->subtype != MEDIASUBTYPE_RGB32))
    {
        return E_FAIL;
    }

    // Is it a VIDEOINFOHEADER type?
    if ((pMediaType->formattype == FORMAT_VideoInfo) &&
        (pMediaType->cbFormat >= sizeof(VIDEOINFOHEADER)) &&
        (pMediaType->pbFormat != NULL))
    {
        VIDEOINFOHEADER *pVih = (VIDEOINFOHEADER*)pMediaType->pbFormat;

        // We don't do source rects.
        if (!IsRectEmpty(&(pVih->rcSource)))
        {
            return E_FAIL;
        }

        // Valid frame rate?
        if (pVih->AvgTimePerFrame != m_rtFrameLength)
        {
            return E_FAIL;
        }

        // Everything checked out.
        return S_OK;
    }
    return E_FAIL;
}

HRESULT CPushPin::DecideBufferSize(IMemAllocator *pAlloc,
                                   ALLOCATOR_PROPERTIES *pRequest)
{
    CAutoLock cAutoLock(m_pFilter->pStateLock());
    HRESULT hr;
    VIDEOINFO *pvi = (VIDEOINFO*) m_mt.Format();
    ASSERT(pvi != NULL);

    if (pRequest->cBuffers == 0)
    {
        pRequest->cBuffers = 1;  // We need at least one buffer
    }

    // Buffer size must be at least big enough to hold our image.
    if ((long)pvi->bmiHeader.biSizeImage > pRequest->cbBuffer)
    {
        pRequest->cbBuffer = pvi->bmiHeader.biSizeImage;
    }

    // Try to set these properties.
    ALLOCATOR_PROPERTIES Actual;
    hr = pAlloc->SetProperties(pRequest, &Actual);
    if (FAILED(hr))
    {
        return hr;
    }

    // Check what we actually got.
    if (Actual.cbBuffer < pRequest->cbBuffer)
    {
        return E_FAIL;
    }
    return S_OK;
}
```

All three methods begin with the inline creation of a CAutoLock object, a class DirectShow uses to lock a critical section of code. The lock is released when CAutoLock’s destructor is called—in this case, when the object goes out of scope when the method exits. This is a clever and simple way to implement critical sections of code. CPushPin::GetMediaType always returns a media type for RGB32 video because that’s the only type it supports. The media type is built in a call to CreateRGBVideoType, a helper function that can be easily rewritten to handle the creation of a broader array of media types. CPushPin::CheckMediaType ensures that any presented media type exactly matches the RGB32 type supported by the pin; any deviation from the one media type supported by the pin results in failure. Finally, CPushPin::DecideBufferSize examines the size of each sample (in this case, a video frame) and requests at least one buffer of that size. The method will accept more storage if offered but will settle for no less than that minimum amount. These three methods must be implemented by every source filter.
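
The scope-based locking idiom can be shown in standard C++. This is a sketch of the idea rather than the CAutoLock source: `ScopedLock` and `AddToShared` are hypothetical names, and `std::mutex` stands in for the critical section. The standard library's `std::lock_guard` provides the same behavior.

```cpp
#include <cassert>
#include <mutex>

// Hypothetical analogue of a scope-based lock: the constructor acquires
// the mutex and the destructor releases it.
class ScopedLock {
public:
    explicit ScopedLock(std::mutex& m) : m_(m) { m_.lock(); }
    ~ScopedLock() { m_.unlock(); }

    // Copying would release the same mutex twice, so it is disabled.
    ScopedLock(const ScopedLock&) = delete;
    ScopedLock& operator=(const ScopedLock&) = delete;

private:
    std::mutex& m_;
};

// Adds `amount` to a shared total. Returns false, leaving the total
// unchanged, if the result would exceed `limit`.
bool AddToShared(std::mutex& m, int& total, int amount, int limit) {
    assert(amount >= 0);
    ScopedLock lock(m);           // held until the function returns
    if (total + amount > limit) {
        return false;             // early return: the destructor still unlocks
    }
    total += amount;
    return true;
}
```

Because the release happens in the destructor, every return path unlocks the mutex without any explicit cleanup code, which is why the methods above can return from several places safely.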

Implementing Media Sample Creation Methods

One media sample creation method must be implemented with CPushPin. This is the method by which video frames are created and passed along as media samples. When the streaming thread is created, it goes into a loop, and CPushPin::FillBuffer is called every time through the loop as long as the filter is active (either paused or running). In CPushPin, this method is combined with another, internal, method, CPushPin::WriteToBuffer, which actually creates the image, as shown in the following code:

```cpp
HRESULT CPushPin::FillBuffer(IMediaSample *pSample)
{
    HRESULT hr;
    BYTE *pData;
    long cbData;
    WCHAR msg[256];

    // Get a pointer to the buffer.
    pSample->GetPointer(&pData);
    cbData = pSample->GetSize();

    // Check if the downstream filter is changing the format.
    CMediaType *pmt = NULL;
    hr = pSample->GetMediaType((AM_MEDIA_TYPE**)&pmt);
    if (hr == S_OK)
    {
        SetMediaType(pmt);
        DeleteMediaType(pmt);
    }

    // Get our format information
    ASSERT(m_mt.formattype == FORMAT_VideoInfo);
    ASSERT(m_mt.cbFormat >= sizeof(VIDEOINFOHEADER));
    VIDEOINFOHEADER *pVih = (VIDEOINFOHEADER*)m_mt.pbFormat;

    {
        // Scope for the state lock,
        // which protects the frame number and ref times.
        CAutoLock cAutoLockShared(&m_cSharedState);

        // Have we reached the stop time yet?
        if (m_rtSourceTime >= m_rtStop)
        {
            // This will cause the base class
            // to send an EndOfStream downstream.
            return S_FALSE;
        }

        // Time stamp the sample.
        REFERENCE_TIME rtStart, rtStop;
        rtStart = (REFERENCE_TIME)(m_rtStreamTime / m_dRateSeeking);
        rtStop = rtStart + (REFERENCE_TIME)(pVih->AvgTimePerFrame / m_dRateSeeking);
        pSample->SetTime(&rtStart, &rtStop);

        // Write the frame number into our text buffer.
        // The buffer size (in characters) is passed explicitly, as the
        // standard form of swprintf requires.
        swprintf(msg, sizeof(msg) / sizeof(msg[0]), L"%d", m_iFrameNumber);

        // Increment the frame number
        // and ref times for the next time through the loop.
        m_iFrameNumber++;
        m_rtSourceTime += pVih->AvgTimePerFrame;
        m_rtStreamTime += pVih->AvgTimePerFrame;
    }

    // Private method to draw the image.
    hr = WriteToBuffer(msg, pData, pVih);
    if (FAILED(hr))
    {
        return hr;
    }

    // Every frame is a key frame.
    pSample->SetSyncPoint(TRUE);
    return S_OK;
}

HRESULT CPushPin::WriteToBuffer(LPWSTR wszText, BYTE *pData,
                                VIDEOINFOHEADER *pVih)
{
    ASSERT(pVih->bmiHeader.biBitCount == 32);

    DWORD dwWidth, dwHeight;
    long lStride;
    BYTE *pbTop;

    // Get the width, height, top row of pixels, and stride.
    GetVideoInfoParameters(pVih, pData, &dwWidth, &dwHeight,
                           &lStride, &pbTop, false);

    // Create a GDI+ bitmap object to manage our image buffer.
    Bitmap bitmap((int)dwWidth, (int)dwHeight, abs(lStride),
                  PixelFormat32bppRGB, pData);

    // Create a GDI+ graphics object to manage the drawing.
    Graphics g(&bitmap);

    // Turn on anti-aliasing.
    g.SetSmoothingMode(SmoothingModeAntiAlias);
    g.SetTextRenderingHint(TextRenderingHintAntiAlias);

    // Erase the background.
    g.Clear(Color(0x0, 0, 0, 0));

    // GDI+ is top-down by default,
    // so if our image format is bottom-up, we need
    // to set a transform on the Graphics object to flip the image.
    if (pVih->bmiHeader.biHeight > 0)
    {
        // Flip the image around the X axis.
        g.ScaleTransform(1.0, -1.0);
        // Move it back into place.
        g.TranslateTransform(0, (float)dwHeight, MatrixOrderAppend);
    }

    SolidBrush brush(Color(0xFF, 0xFF, 0xFF, 0));  // Yellow brush
    Font font(FontFamily::GenericSerif(), 48);     // Big serif type
    RectF rcBounds(30, 30, (float)dwWidth,
                   (float)dwHeight);               // Bounding rectangle

    // Draw the text
    g.DrawString(
        wszText, -1,
        &font,
        rcBounds,
        StringFormat::GenericDefault(),
        &brush);

    return S_OK;
}
```

Upon entering CPushPin::FillBuffer, the method checks whether the downstream filter has attached a new media type to the sample. It then takes a lock on the shared state and determines whether the source filter’s stop time has been reached. If it has, the routine returns S_FALSE, indicating that a media sample will not be generated by the method; as the comment in the code notes, this causes the base class to send an end-of-stream notification downstream. The downstream buffers then empty, leaving filters waiting for media samples from the source filter.

If the filter stop time has not been reached, the sample’s timestamp is set with a call to IMediaSample::SetTime. This timestamp is composed of two pieces (both in REFERENCE_TIME format of 100-nanosecond intervals), indicating the sample’s start time and stop time. The calculation here creates a series of timestamps separated by the time per frame (in this case, one thirtieth of a second). At this point, the frame number is written to a string stored in the object and then incremented.
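
The timestamp calculation can be isolated from the DirectShow types to make the arithmetic easier to follow. This sketch mirrors the expressions in FillBuffer under stated assumptions: `RefTime` stands in for REFERENCE_TIME, and `StampState`, `TimeStamp`, and `StampNextFrame` are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstdint>

typedef int64_t RefTime;  // stand-in for REFERENCE_TIME (100-ns units)

// The per-pin counters that advance with every frame.
struct StampState {
    RefTime streamTime = 0;  // time since the last seek or start
    RefTime sourceTime = 0;  // position within the source
    int frameNumber = 0;
};

struct TimeStamp {
    RefTime start;
    RefTime stop;
};

// Computes the start and stop times for the next frame, scaled by the
// playback rate, then advances the counters by one unscaled frame length.
TimeStamp StampNextFrame(StampState& st, RefTime frameLength, double rate) {
    assert(frameLength > 0 && rate > 0.0);
    TimeStamp ts;
    ts.start = static_cast<RefTime>(st.streamTime / rate);
    ts.stop = ts.start + static_cast<RefTime>(frameLength / rate);
    ++st.frameNumber;
    st.sourceTime += frameLength;
    st.streamTime += frameLength;
    return ts;
}
```

At a rate of 1.0 and a frame length of 333,333 units, the first two frames are stamped (0, 333333) and (333333, 666666). At a rate of 2.0 each frame occupies half as much presentation time, so the second frame starts at 166,666.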

That frame number is put to work in CPushPin::WriteToBuffer, which creates the RGB32-based video frame using GDI+. The method makes a call to the helper function GetVideoInfoParameters, retrieving the video parameters it uses to create a bitmap of appropriate width and height. With a few GDI+ calls, CPushPin::WriteToBuffer clears the background (to black) and then inverts the drawing image if the video format is bottom-up rather than top-down (the default for GDI+). A brush and then a font are selected, and the frame number is drawn to the bitmap inside a bounding rectangle.
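
The bottom-up versus top-down distinction comes down to where the top row of pixels sits in the buffer and in which direction successive rows are found. The sketch below is not the GetVideoInfoParameters helper, whose implementation is not shown in this chapter; `RowLayout` and `LayoutForRgb32` are hypothetical names, and the code assumes 32 bits per pixel and the usual bitmap convention that a positive height means a bottom-up image.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Describes how rows are arranged in an RGB32 image buffer.
struct RowLayout {
    long width;             // pixels per row
    long height;            // number of rows (always positive)
    long stride;            // bytes from one row to the row below it;
                            // negative when the image is stored bottom-up
    std::size_t topOffset;  // byte offset of the top row within the buffer
};

// biHeight > 0 means bottom-up storage; biHeight < 0 means top-down.
RowLayout LayoutForRgb32(long biWidth, long biHeight) {
    assert(biWidth > 0 && biHeight != 0);
    RowLayout r;
    r.width = biWidth;
    r.height = std::labs(biHeight);
    const long rowBytes = biWidth * 4;  // 4 bytes per pixel, so rows are already 4-byte aligned
    if (biHeight > 0) {
        // Bottom-up: the top row is the last row in memory.
        r.stride = -rowBytes;
        r.topOffset = static_cast<std::size_t>(r.height - 1) *
                      static_cast<std::size_t>(rowBytes);
    } else {
        // Top-down: the top row is the first row in memory.
        r.stride = rowBytes;
        r.topOffset = 0;
    }
    return r;
}
```

For a 320 x 240 bottom-up frame this gives a stride of -1280 bytes and a top-row offset of 305,920 bytes; for the same frame stored top-down the stride is 1280 and the offset is 0.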

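The sample handles bottom-up images by flipping the drawing with GDI+. A different, commonly used way to cope with the two row orders is to walk the buffer with a signed stride, so drawing code can always think in top-to-bottom order. The sketch below illustrates that alternative under stated assumptions: the image is uncompressed RGB32, `biHeight` is nonzero and follows the BITMAPINFOHEADER convention (positive for bottom-up, negative for top-down), and `RowWalker`, `MakeRowWalker`, and `FillRow` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Describes how to visit rows in visual top-to-bottom order.
struct RowWalker {
    std::uint8_t* firstRow;   // address of the visually topmost row
    std::ptrdiff_t stride;    // bytes to add to reach the next row down (negative if bottom-up)
};

// Build a walker for an RGB32 buffer. Assumes biHeight != 0.
RowWalker MakeRowWalker(std::uint8_t* buffer, int width, int biHeight)
{
    const std::ptrdiff_t rowBytes = static_cast<std::ptrdiff_t>(width) * 4; // 4 bytes per pixel
    RowWalker w;
    if (biHeight < 0) {
        // Top-down: the first stored row is the top of the picture.
        w.firstRow = buffer;
        w.stride = rowBytes;
    } else {
        // Bottom-up: the last stored row is the top of the picture.
        w.firstRow = buffer + rowBytes * (biHeight - 1);
        w.stride = -rowBytes;
    }
    return w;
}

// Fill visual row y with one 32-bit pixel value.
void FillRow(const RowWalker& w, int y, int width, std::uint32_t value)
{
    std::uint8_t* row = w.firstRow + w.stride * y;
    for (int x = 0; x < width; ++x) {
        std::memcpy(row + x * 4, &value, sizeof(value));
    }
}
```

With this arrangement, the same drawing loop works for both layouts; only the walker differs.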

Seek Functionality on a Source Filter

CPushPin inherits from both CSourceStream and CSourceSeeking. CSourceSeeking allows the source filter’s output pin to process and respond to seek commands. In the case of the PushSource filter, a seek command causes the frame count to rewind or fast-forward to a new value. Because this filter is frame-seekable (meaning we can position it to any arbitrary frame within the legal range), it needs to implement a number of other methods. The following code shows the basic methods that implement seek functionality:


- void CPushPin::UpdateFromSeek()

- {

- if (ThreadExists()) // Filter is active?

- {

- DeliverBeginFlush();

- // Shut down the thread and stop pushing data.

- Stop();

- DeliverEndFlush();

- // Restart the thread and start pushing data again.

- Pause();

- // We'll set the discontinuity flag on the next sample.

- }

- }

- HRESULT CPushPin::OnThreadStartPlay()

- {

- m_bDiscontinuity = TRUE; // Set the discontinuity flag on the next sample

- // Send a NewSegment call downstream.

- return DeliverNewSegment(m_rtStart, m_rtStop, m_dRateSeeking);

- }

- HRESULT CPushPin::ChangeStart( )

- {

- // Reset stream time to zero and the source time to m_rtStart.

- {

- CAutoLock lock(CSourceSeeking::m_pLock);

- m_rtStreamTime = 0;

- m_rtSourceTime = m_rtStart;

- m_iFrameNumber = (int)(m_rtStart / m_rtFrameLength);

- }

- UpdateFromSeek();

- return S_OK;

- }

- HRESULT CPushPin::ChangeStop( )

- {

- {

- CAutoLock lock(CSourceSeeking::m_pLock);

- if (m_rtSourceTime < m_rtStop)

- {

- return S_OK;

- }

- }

- // We're already past the new stop time. Flush the graph.

- UpdateFromSeek();

- return S_OK;

- }

- HRESULT CPushPin::SetRate(double dRate)

- {

- if (dRate <= 0.0)

- {

- return E_INVALIDARG;

- }

- {

- CAutoLock lock(CSourceSeeking::m_pLock);

- m_dRateSeeking = dRate;

- }

- UpdateFromSeek();

- return S_OK;

- }

- // Because we override SetRate,

- // the ChangeRate method won't ever be called. (It is only

- // ever called by SetRate.)

- // But it's pure virtual, so it needs a dummy implementation.

- HRESULT CPushPin::ChangeRate() { return S_OK; }

CPushPin::UpdateFromSeek forces the flush of the filter graph that needs to take place every time a seek is performed on the source filter. The buffer flushing process has four steps. Flushing begins (on the downstream pin) when DeliverBeginFlush is invoked. Next the streaming thread is stopped, suspending buffer processing throughout the filter graph. Then DeliverEndFlush is invoked; this sets up the downstream filters to receive new media samples. The streaming thread is then restarted. When restarted, CPushPin::OnThreadStartPlay is called, and any processing associated with starting the filter must be performed here. The new start, stop, and rate values for the stream are delivered downstream. If the filter does anything complicated to the threads, it’s not hard to create a deadlock situation in which the entire filter graph seizes up, waiting for a lock that will never be released. Filter developers are strongly encouraged to read “Threads and Critical Sections” under “Writing DirectShow Filters” in the DirectX SDK documentation.

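One detail in the listing is relevant to the deadlock warning: ChangeStart, ChangeStop, and SetRate each take the lock inside an inner pair of braces, so the lock is released before UpdateFromSeek runs. Presumably this matters because UpdateFromSeek stops the streaming thread, and that thread may itself need the lock before it can finish. The sketch below models the scoping pattern only. It is not real synchronization: `TrackedLock` and `ScopedHold` are hypothetical stand-ins for the critical section and for CAutoLock, used so the behavior can be observed deterministically.

```cpp
#include <cassert>

// Hypothetical stand-in for a critical section; it only records whether it is held.
struct TrackedLock {
    bool held = false;
};

// Hypothetical stand-in for CAutoLock: holds the lock for the lifetime of the object.
struct ScopedHold {
    TrackedLock& lock;
    explicit ScopedHold(TrackedLock& l) : lock(l) { lock.held = true; }
    ~ScopedHold() { lock.held = false; }
};

struct SeekModel {
    TrackedLock stateLock;
    long long sourceTime = 0;
    bool lockHeldDuringFlush = false;

    // Models UpdateFromSeek: records whether the caller still held the lock.
    void UpdateFromSeek()
    {
        lockHeldDuringFlush = stateLock.held;
        // A real pin would now flush, stop the streaming thread, and restart it.
    }

    void ChangeStart(long long newStart)
    {
        {
            ScopedHold guard(stateLock);   // hold the lock only while touching shared state
            sourceTime = newStart;
        }                                  // guard is destroyed here, releasing the lock
        UpdateFromSeek();                  // runs with the lock released
    }
};
```

Moving the call to UpdateFromSeek inside the inner braces would make `lockHeldDuringFlush` true, which is the situation the scoping is meant to avoid.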

When the start position of the stream changes, CPushPin::ChangeStart is invoked. This method resets the stream time to zero (the current time, relatively, as the stream time counts upward while the filter runs) and changes the source time to the requested start time. This translates into a new frame number, which is calculated. CPushPin::UpdateFromSeek is called at the end of the routine so that any buffers in the filter graph can be flushed to make way for the new stream data.


When the filter receives a seek command that resets the stop position of the stream, CPushPin::ChangeStop is invoked. This method checks whether the current source time is still before the new stop time. If it is, nothing more needs to be done. If playback has already reached or passed the new stop time, the graph is flushed with a call to CPushPin::UpdateFromSeek.
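The bookkeeping done by ChangeStart and ChangeStop can be modeled without any DirectShow types. The following is a simplified sketch: `SeekState` is a hypothetical struct, the new start and stop values are passed as arguments (in the real classes, the base class stores them before calling the methods), and a counter stands in for the call to UpdateFromSeek.

```cpp
#include <cassert>
#include <cstdint>

using REFERENCE_TIME = std::int64_t;   // stand-in: 100 ns units

struct SeekState {
    REFERENCE_TIME frameLength;        // duration of one frame
    REFERENCE_TIME start = 0;
    REFERENCE_TIME stop = 0;
    REFERENCE_TIME streamTime = 0;     // time elapsed since the last seek
    REFERENCE_TIME sourceTime = 0;     // current position within the source
    int frameNumber = 0;
    int flushCount = 0;                // counts simulated calls to UpdateFromSeek

    explicit SeekState(REFERENCE_TIME len) : frameLength(len) {}

    // A new start position always restarts the stream and flushes.
    void ChangeStart(REFERENCE_TIME newStart)
    {
        start = newStart;
        streamTime = 0;
        sourceTime = start;
        frameNumber = static_cast<int>(start / frameLength);
        ++flushCount;
    }

    // A new stop position flushes only if playback is already at or past it.
    void ChangeStop(REFERENCE_TIME newStop)
    {
        stop = newStop;
        if (sourceTime < stop) {
            return;                    // still before the new stop: keep streaming
        }
        ++flushCount;
    }
};
```

Seeking to the start of frame 3 and then moving the stop position first ahead of, and then behind, the current position shows that only the second stop change triggers a flush.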

The playback rate of a stream can be adjusted through a call to the IMediaSeeking interface. The playback rate of the PushSource filter, for example, is 30 frames a second (fps), but it could be adjusted to 15 or 60 fps or any other value. When the playback rate is changed, CPushPin::SetRate is invoked. It modifies the internal variable containing the playback rate and then calls CPushPin::UpdateFromSeek to flush the downstream filters. It’s not necessary to override CSourceSeeking::SetRate, but we’ve overridden it here in CPushPin because this version includes a parameter check on the rate value to ensure its validity.

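The rate passed through IMediaSeeking is a ratio to normal speed, so 1.0 is normal playback, 0.5 is half speed, and 2.0 is double speed. The sketch below shows the same validity check as the listing together with the resulting frame rate. `TrySetRate` and `EffectiveFps` are hypothetical helpers written for this illustration, and a `bool` return replaces the HRESULT.

```cpp
#include <cassert>

// Accepts only positive rates, mirroring the E_INVALIDARG check in the listing.
// On failure, `rate` is left unchanged.
bool TrySetRate(double requested, double& rate)
{
    if (requested <= 0.0) {
        return false;
    }
    rate = requested;
    return true;
}

// Frames presented per second of wall-clock time at a given rate.
double EffectiveFps(double nominalFps, double rate)
{
    return nominalFps * rate;
}
```

For a 30 fps source, a rate of 0.5 gives 15 fps and a rate of 2.0 gives 60 fps, while zero and negative rates are rejected.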

All the routines discussed thus far handle seeking from a base of the DirectShow reference time, in units of 100 nanoseconds. DirectShow filters can also support other time formats. This can be handy when dealing with video, which is parceled out in units of frames, or fields. A source filter doesn’t need to implement multiple time formats for seeking, but the PushSource filter does. These CPushPin methods, overridden from CSourceSeeking, allow the PushSource filter to work in a time base of frames, as shown in the following code:



- STDMETHODIMP CPushPin::SetTimeFormat(const GUID *pFormat)

- {

- CAutoLock cAutoLock(m_pFilter->pStateLock());

- CheckPointer(pFormat, E_POINTER);

- if (m_pFilter->IsActive())

- {

- // Cannot switch formats while running.

- return VFW_E_WRONG_STATE;

- }

- if (S_OK != IsFormatSupported(pFormat))

- {

- // We don't support this time format.

- return E_INVALIDARG;

- }

- m_TimeFormat = *pFormat;

- return S_OK;

- }

- STDMETHODIMP CPushPin::GetTimeFormat(GUID *pFormat)

- {

- CAutoLock cAutoLock(m_pFilter->pStateLock());

- CheckPointer(pFormat, E_POINTER);

- *pFormat = m_TimeFormat;

- return S_OK;

- }

- STDMETHODIMP CPushPin::IsUsingTimeFormat(const GUID *pFormat)

- {

- CAutoLock cAutoLock(m_pFilter->pStateLock());

- CheckPointer(pFormat, E_POINTER);

- return (*pFormat == m_TimeFormat ? S_OK : S_FALSE);

- }

- STDMETHODIMP CPushPin::IsFormatSupported( const GUID * pFormat)

- {

- CheckPointer(pFormat, E_POINTER);

- if (*pFormat == TIME_FORMAT_MEDIA_TIME)

- {

- return S_OK;

- }

- else if (*pFormat == TIME_FORMAT_FRAME)

- {

- return S_OK;

- }

- else

- {

- return S_FALSE;

- }

- }

- STDMETHODIMP CPushPin::QueryPreferredFormat(GUID *pFormat)

- {

- CheckPointer(pFormat, E_POINTER);

- *pFormat = TIME_FORMAT_FRAME; // Doesn't really matter which we prefer

- return S_OK;

- }

- STDMETHODIMP CPushPin::ConvertTimeFormat(

- LONGLONG *pTarget, // Receives the converted time value.

- const GUID *pTargetFormat, // Specifies the target format for the conversion.

- LONGLONG Source, // Time value to convert.

- const GUID *pSourceFormat) // Time format for the Source time.

- {

- CheckPointer(pTarget, E_POINTER);

- // Either of the format GUIDs can be NULL,

- // which means "use the current time format"

- GUID TargetFormat, SourceFormat;

- TargetFormat = (pTargetFormat == NULL ? m_TimeFormat : *pTargetFormat );

- SourceFormat = (pSourceFormat == NULL ? m_TimeFormat : *pSourceFormat );

- if (TargetFormat == TIME_FORMAT_MEDIA_TIME)

- {

- if (SourceFormat == TIME_FORMAT_FRAME)

- {

- *pTarget = FrameToTime(Source);

- return S_OK;

- }

- if (SourceFormat == TIME_FORMAT_MEDIA_TIME)

- {

- // no-op

- *pTarget = Source;

- return S_OK;

- }

- return E_INVALIDARG; // Invalid source format.

- }

- if (TargetFormat == TIME_FORMAT_FRAME)

- {

- if (SourceFormat == TIME_FORMAT_MEDIA_TIME)

- {

- *pTarget = TimeToFrame(Source);

- return S_OK;

- }

- if (SourceFormat == TIME_FORMAT_FRAME)

- {

- // no-op

- *pTarget = Source;

- return S_OK;

- }

- return E_INVALIDARG; // Invalid source format.

- }

- return E_INVALIDARG; // Invalid target format.

- }

- STDMETHODIMP CPushPin::SetPositions(

- LONGLONG *pCurrent, // New current position (can be NULL!)

- DWORD CurrentFlags,

- LONGLONG *pStop, // New stop position (can be NULL!)

- DWORD StopFlags)

- {

- HRESULT hr;

- if (m_TimeFormat == TIME_FORMAT_FRAME)

- {

- REFERENCE_TIME rtCurrent = 0, rtStop = 0;

- if (pCurrent)

- {

- rtCurrent = FrameToTime(*pCurrent);

- }

- if (pStop)

- {

- rtStop = FrameToTime(*pStop);

- }

- hr = CSourceSeeking::SetPositions(&rtCurrent, CurrentFlags,

- &rtStop, StopFlags);

- if (SUCCEEDED(hr))

- {

- // The AM_SEEKING_ReturnTime flag

- // means the caller wants the input times

- // converted to the current time format.

- if (pCurrent && (CurrentFlags & AM_SEEKING_ReturnTime))

- {

- *pCurrent = rtCurrent;

- }

- if (pStop && (StopFlags & AM_SEEKING_ReturnTime))

- {

- *pStop = rtStop;

- }

- }

- }

- else

- {

- // Simple pass thru'

- hr = CSourceSeeking::SetPositions(pCurrent, CurrentFlags,

- pStop, StopFlags);

- }

- return hr;

- }

- STDMETHODIMP CPushPin::GetDuration(LONGLONG *pDuration)

- {

- HRESULT hr = CSourceSeeking::GetDuration(pDuration);

- if (SUCCEEDED(hr))

- {

- if (m_TimeFormat == TIME_FORMAT_FRAME)

- {

- *pDuration = TimeToFrame(*pDuration);

- }

- }

- return hr;

- }

- STDMETHODIMP CPushPin::GetStopPosition(LONGLONG *pStop)

- {

- HRESULT hr = CSourceSeeking::GetStopPosition(pStop);

- if (SUCCEEDED(hr))

- {

- if (m_TimeFormat == TIME_FORMAT_FRAME)

- {

- *pStop = TimeToFrame(*pStop);

- }

- }

- return hr;

- }

A request to change the time format is made with a call to CPushPin::SetTimeFormat; the passed parameter is a GUID that describes the time format. (The list of time format GUIDs can be found in the DirectX SDK documentation.) The format is changed only if the filter is not active and CPushPin::IsFormatSupported reports that the time format is supported. The two supported formats for CPushPin are TIME_FORMAT_MEDIA_TIME, which is the reference time of 100-nanosecond units, and TIME_FORMAT_FRAME, in which each unit constitutes one video frame.
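The decision logic in SetTimeFormat can be modeled with plain enums in place of GUIDs and HRESULTs. This is a sketch under assumptions: `FormatSelector`, `TimeFormat`, and `Result` are hypothetical names, the `Sample` value exists only to exercise the unsupported case, and the initial format of media time is an assumption rather than something the listing shows.

```cpp
#include <cassert>

enum class TimeFormat { MediaTime, Frame, Sample };   // Sample is the unsupported case here
enum class Result { Ok, WrongState, InvalidArg };     // stand-ins for HRESULT values

class FormatSelector {
public:
    bool active = false;   // true while the filter is paused or running

    static bool IsFormatSupported(TimeFormat f)
    {
        return f == TimeFormat::MediaTime || f == TimeFormat::Frame;
    }

    Result SetTimeFormat(TimeFormat f)
    {
        if (active) {
            return Result::WrongState;    // cannot switch formats while active
        }
        if (!IsFormatSupported(f)) {
            return Result::InvalidArg;    // format not supported
        }
        current_ = f;
        return Result::Ok;
    }

    TimeFormat GetTimeFormat() const { return current_; }

private:
    TimeFormat current_ = TimeFormat::MediaTime;   // assumed default
};
```

The state check comes first, so even a supported format is refused while the filter is active, and a refused request leaves the current format untouched.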

Conversions between time formats are performed in CPushPin::ConvertTimeFormat. Because PushSource supports two time formats, the routine must perform conversions between TIME_FORMAT_MEDIA_TIME and TIME_FORMAT_FRAME. CPushPin::GetDuration, CPushPin::GetStopPosition, and CPushPin::SetPositions are overridden because their return values must reflect the current time format. (Imagine what would happen if the Filter Graph Manager, expecting frames, was informed there were 300,000,000 of them!)

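The listing calls FrameToTime and TimeToFrame without showing them. A plausible minimal version, assuming a constant frame length at 30 fps, is sketched below. The bodies here are an assumption for illustration and may differ from the sample’s own helpers; `REFERENCE_TIME` is again a stand-in alias.

```cpp
#include <cassert>
#include <cstdint>

using REFERENCE_TIME = std::int64_t;                            // 100 ns units

constexpr REFERENCE_TIME kUnitsPerSecond = 10000000;
constexpr REFERENCE_TIME kFrameLength = kUnitsPerSecond / 30;   // 333333 units per frame

// Assumed implementation: frame index to the reference time at which the frame starts.
REFERENCE_TIME FrameToTime(std::int64_t frame)
{
    return frame * kFrameLength;
}

// Assumed implementation: reference time to the index of the frame containing it.
std::int64_t TimeToFrame(REFERENCE_TIME time)
{
    return time / kFrameLength;   // integer division truncates to the containing frame
}
```

This makes the parenthetical concrete. A 30-second stream has a duration of 300,000,000 reference-time units, which converts to 900 frames. Reporting the raw value to a caller working in TIME_FORMAT_FRAME would overstate the length by a factor of roughly 333,000.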

There’s just a bit more C++ code needed to handle the COM interfaces, which we’ve seen previously in the YUVGray and SampleGrabber filters. When compiled and registered (which should happen automatically), the “Generic Push Source” filter will appear in the list of DirectShow filters in GraphEdit.


Summary

Source filters are absolutely essential because they generate the media samples that pass through the filter graph until rendered. Most hardware devices don’t need custom DirectShow filters because DirectShow has wrappers to “automagically” make WDM and VFW device drivers appear as filters to DirectShow applications. Other source filters can read data from a file, from a URL, and so forth. Some source filters, such as the PushSource example explored in this chapter, create their own media streams. In large part, control of the filter graph is driven by the source filters it contains. In the implementation of PushSource, we’ve explored how the different operating states of the filter graph are reflected in the design of a source filter. Therefore, a detailed study of source filters reveals how DirectShow executes a filter graph.
