MNIST Examples for GGML - Fully connected network

- 1. Build
- 2. MNIST Examples for GGML
  - 2.1. Obtaining the data
  - 2.2. Fully connected network
    - 2.2.1. To train a fully connected model in PyTorch and save it as a GGUF file
    - 2.2.2. To evaluate the model on the CPU using GGML
    - 2.2.3. To train a fully connected model on the CPU using GGML
  - 2.3. Hardware Acceleration
- References

1. Build

https://github.com/ggml-org/ggml

git clone https://github.com/ggml-org/ggml

cd ggml

# install python dependencies in a virtual environment

python3.10 -m venv .venv

source .venv/bin/activate

pip install -r requirements.txt

# build the examples

mkdir build && cd build

cmake ..

cmake --build . --config Release -j 8

(base) yongqiang@yongqiang:~$ cd llm_work/

(base) yongqiang@yongqiang:~/llm_work$ mkdir ggml_25_02_15

(base) yongqiang@yongqiang:~/llm_work$ cd ggml_25_02_15/

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15$ git clone https://github.com/ggml-org/ggml.git

Cloning into 'ggml'...

remote: Enumerating objects: 13755, done.

remote: Counting objects: 100% (498/498), done.

remote: Compressing objects: 100% (193/193), done.

remote: Total 13755 (delta 331), reused 335 (delta 303), pack-reused 13257 (from 3)

Receiving objects: 100% (13755/13755), 12.88 MiB | 213.00 KiB/s, done.

Resolving deltas: 100% (9411/9411), done.

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15$ cd ggml/

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ pip install -r requirements.txt

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ vim build_ggml_linux_cpu.sh

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ chmod a+x build_ggml_linux_cpu.sh

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ cat build_ggml_linux_cpu.sh

#! /bin/bash

# build the examples

mkdir build && cd build

cmake ..

cmake --build . --config Debug -j 8

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ bash build_ggml_linux_cpu.sh

-- The C compiler identification is GNU 9.4.0

-- The CXX compiler identification is GNU 9.4.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Failed

-- Check if compiler accepts -pthread

-- Check if compiler accepts -pthread - yes

-- Found Threads: TRUE

-- Warning: ccache not found - consider installing it for faster compilation or disable this warning with GGML_CCACHE=OFF

-- CMAKE_SYSTEM_PROCESSOR: x86_64

-- Including CPU backend

-- Found OpenMP_C: -fopenmp (found version "4.5")

-- Found OpenMP_CXX: -fopenmp (found version "4.5")

-- Found OpenMP: TRUE (found version "4.5")

-- x86 detected

-- Adding CPU backend variant ggml-cpu: -march=native

-- x86 detected

-- Linux detected

-- Configuring done (10.1s)

-- Generating done (0.1s)

-- Build files have been written to: /home/yongqiang/llm_work/ggml_25_02_15/ggml/build

...

[ 99%] Linking CXX executable ../../bin/gpt-j

[ 99%] Built target gpt-j

[100%] Linking CXX executable ../../bin/sam

[100%] Built target sam

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$

2. MNIST Examples for GGML

https://github.com/ggml-org/ggml/tree/master/examples/mnist

This directory contains simple examples of how to use GGML for training and inference using the MNIST dataset. All commands listed in this README assume the working directory to be examples/mnist.

MNIST dataset

https://yann.lecun.com/exdb/mnist/

Please note that training in GGML is a work in progress and not production-ready.

2.1. Obtaining the data

A description of the dataset can be found on Yann LeCun’s website.

While it is in principle possible to download the dataset from this website, these downloads are frequently throttled, and it is recommended to use HuggingFace instead.

The dataset will be downloaded automatically when running mnist-train-fc.py.

2.2. Fully connected network

For our first example we will train a fully connected network.

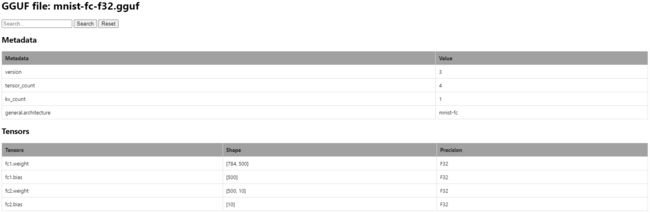

2.2.1. To train a fully connected model in PyTorch and save it as a GGUF file

$ python3 mnist-train-fc.py mnist-fc-f32.gguf

...

Test loss: 0.066377+-0.010468, Test accuracy: 97.94+-0.14%

Model tensors saved to mnist-fc-f32.gguf:

fc1.weight (500, 784)

fc1.bias (500,)

fc2.weight (10, 500)

fc2.bias (10,)
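The tensor shapes listed above determine the size of the model: 784 inputs (28 x 28 pixels), 500 hidden units, and 10 output classes. As a quick sanity check, the following sketch counts the parameters and the raw F32 tensor data size. The names `TENSOR_SHAPES` and `count_parameters` are illustrative and not part of the GGML example code.

```python
from math import prod

# Tensor shapes as printed by mnist-train-fc.py.
TENSOR_SHAPES = {
    "fc1.weight": (500, 784),
    "fc1.bias": (500,),
    "fc2.weight": (10, 500),
    "fc2.bias": (10,),
}


def count_parameters(shapes):
    """Return the total number of scalar parameters across all tensors."""
    return sum(prod(shape) for shape in shapes.values())


total = count_parameters(TENSOR_SHAPES)
print(f"parameters: {total}")                 # 397510
print(f"F32 tensor data: {total * 4} bytes")  # 1590040, excluding GGUF metadata
```

The GGUF file itself is slightly larger than this figure because it also stores a header, metadata, and tensor descriptors.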

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml$ cd examples/mnist/

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$ python3 mnist-train-fc.py mnist-fc-f32.gguf

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz to ./data/MNIST/raw/train-images-idx3-ubyte.gz

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 9.91M/9.91M [00:11<00:00, 864kB/s]

Extracting ./data/MNIST/raw/train-images-idx3-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz to ./data/MNIST/raw/train-labels-idx1-ubyte.gz

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 28.9k/28.9k [00:00<00:00, 57.4kB/s]

Extracting ./data/MNIST/raw/train-labels-idx1-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz to ./data/MNIST/raw/t10k-images-idx3-ubyte.gz

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.65M/1.65M [00:07<00:00, 224kB/s]

Extracting ./data/MNIST/raw/t10k-images-idx3-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz to ./data/MNIST/raw/t10k-labels-idx1-ubyte.gz

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4.54k/4.54k [00:00<00:00, 3.66MB/s]

Extracting ./data/MNIST/raw/t10k-labels-idx1-ubyte.gz to ./data/MNIST/raw

Epoch [01/30], Step [10000/60000], Loss: 1.7476, Accuracy: 61.69%

Epoch [01/30], Step [20000/60000], Loss: 1.2862, Accuracy: 71.74%

Epoch [01/30], Step [30000/60000], Loss: 1.0215, Accuracy: 76.76%

Epoch [01/30], Step [40000/60000], Loss: 0.8656, Accuracy: 79.79%

Epoch [01/30], Step [50000/60000], Loss: 0.7615, Accuracy: 81.86%

Epoch [01/30], Step [60000/60000], Loss: 0.6898, Accuracy: 83.28%

Epoch [02/30], Step [10000/60000], Loss: 0.2972, Accuracy: 91.74%

Epoch [02/30], Step [20000/60000], Loss: 0.2857, Accuracy: 91.98%

Epoch [02/30], Step [30000/60000], Loss: 0.2836, Accuracy: 91.99%

Epoch [02/30], Step [40000/60000], Loss: 0.2780, Accuracy: 92.10%

Epoch [02/30], Step [50000/60000], Loss: 0.2750, Accuracy: 92.20%

Epoch [02/30], Step [60000/60000], Loss: 0.2693, Accuracy: 92.41%

Epoch [03/30], Step [10000/60000], Loss: 0.2285, Accuracy: 93.94%

Epoch [03/30], Step [20000/60000], Loss: 0.2247, Accuracy: 93.82%

Epoch [03/30], Step [30000/60000], Loss: 0.2141, Accuracy: 94.13%

Epoch [03/30], Step [40000/60000], Loss: 0.2140, Accuracy: 94.14%

Epoch [03/30], Step [50000/60000], Loss: 0.2101, Accuracy: 94.17%

Epoch [03/30], Step [60000/60000], Loss: 0.2071, Accuracy: 94.21%

Epoch [04/30], Step [10000/60000], Loss: 0.1752, Accuracy: 94.96%

Epoch [04/30], Step [20000/60000], Loss: 0.1781, Accuracy: 94.88%

Epoch [04/30], Step [30000/60000], Loss: 0.1715, Accuracy: 95.07%

Epoch [04/30], Step [40000/60000], Loss: 0.1702, Accuracy: 95.15%

Epoch [04/30], Step [50000/60000], Loss: 0.1695, Accuracy: 95.19%

Epoch [04/30], Step [60000/60000], Loss: 0.1672, Accuracy: 95.27%

Epoch [05/30], Step [10000/60000], Loss: 0.1426, Accuracy: 96.04%

Epoch [05/30], Step [20000/60000], Loss: 0.1413, Accuracy: 95.99%

Epoch [05/30], Step [30000/60000], Loss: 0.1417, Accuracy: 96.01%

Epoch [05/30], Step [40000/60000], Loss: 0.1425, Accuracy: 95.94%

Epoch [05/30], Step [50000/60000], Loss: 0.1410, Accuracy: 95.98%

Epoch [05/30], Step [60000/60000], Loss: 0.1395, Accuracy: 96.03%

Epoch [06/30], Step [10000/60000], Loss: 0.1234, Accuracy: 96.65%

Epoch [06/30], Step [20000/60000], Loss: 0.1188, Accuracy: 96.71%

Epoch [06/30], Step [30000/60000], Loss: 0.1184, Accuracy: 96.71%

Epoch [06/30], Step [40000/60000], Loss: 0.1169, Accuracy: 96.77%

Epoch [06/30], Step [50000/60000], Loss: 0.1163, Accuracy: 96.78%

Epoch [06/30], Step [60000/60000], Loss: 0.1167, Accuracy: 96.75%

Epoch [07/30], Step [10000/60000], Loss: 0.1041, Accuracy: 97.19%

Epoch [07/30], Step [20000/60000], Loss: 0.1018, Accuracy: 97.28%

Epoch [07/30], Step [30000/60000], Loss: 0.1006, Accuracy: 97.29%

Epoch [07/30], Step [40000/60000], Loss: 0.1017, Accuracy: 97.18%

Epoch [07/30], Step [50000/60000], Loss: 0.1014, Accuracy: 97.19%

Epoch [07/30], Step [60000/60000], Loss: 0.1008, Accuracy: 97.20%

Epoch [08/30], Step [10000/60000], Loss: 0.0929, Accuracy: 97.49%

Epoch [08/30], Step [20000/60000], Loss: 0.0906, Accuracy: 97.53%

Epoch [08/30], Step [30000/60000], Loss: 0.0886, Accuracy: 97.61%

Epoch [08/30], Step [40000/60000], Loss: 0.0897, Accuracy: 97.53%

Epoch [08/30], Step [50000/60000], Loss: 0.0888, Accuracy: 97.56%

Epoch [08/30], Step [60000/60000], Loss: 0.0882, Accuracy: 97.57%

Epoch [09/30], Step [10000/60000], Loss: 0.0721, Accuracy: 98.05%

Epoch [09/30], Step [20000/60000], Loss: 0.0733, Accuracy: 98.03%

Epoch [09/30], Step [30000/60000], Loss: 0.0745, Accuracy: 98.01%

Epoch [09/30], Step [40000/60000], Loss: 0.0762, Accuracy: 97.92%

Epoch [09/30], Step [50000/60000], Loss: 0.0770, Accuracy: 97.90%

Epoch [09/30], Step [60000/60000], Loss: 0.0765, Accuracy: 97.91%

Epoch [10/30], Step [10000/60000], Loss: 0.0676, Accuracy: 98.22%

Epoch [10/30], Step [20000/60000], Loss: 0.0681, Accuracy: 98.22%

Epoch [10/30], Step [30000/60000], Loss: 0.0663, Accuracy: 98.25%

Epoch [10/30], Step [40000/60000], Loss: 0.0663, Accuracy: 98.24%

Epoch [10/30], Step [50000/60000], Loss: 0.0680, Accuracy: 98.18%

Epoch [10/30], Step [60000/60000], Loss: 0.0677, Accuracy: 98.18%

Epoch [11/30], Step [10000/60000], Loss: 0.0584, Accuracy: 98.50%

Epoch [11/30], Step [20000/60000], Loss: 0.0584, Accuracy: 98.51%

Epoch [11/30], Step [30000/60000], Loss: 0.0598, Accuracy: 98.45%

Epoch [11/30], Step [40000/60000], Loss: 0.0605, Accuracy: 98.43%

Epoch [11/30], Step [50000/60000], Loss: 0.0606, Accuracy: 98.40%

Epoch [11/30], Step [60000/60000], Loss: 0.0608, Accuracy: 98.39%

Epoch [12/30], Step [10000/60000], Loss: 0.0576, Accuracy: 98.54%

Epoch [12/30], Step [20000/60000], Loss: 0.0551, Accuracy: 98.61%

Epoch [12/30], Step [30000/60000], Loss: 0.0546, Accuracy: 98.59%

Epoch [12/30], Step [40000/60000], Loss: 0.0544, Accuracy: 98.59%

Epoch [12/30], Step [50000/60000], Loss: 0.0536, Accuracy: 98.57%

Epoch [12/30], Step [60000/60000], Loss: 0.0540, Accuracy: 98.54%

Epoch [13/30], Step [10000/60000], Loss: 0.0482, Accuracy: 98.62%

Epoch [13/30], Step [20000/60000], Loss: 0.0487, Accuracy: 98.69%

Epoch [13/30], Step [30000/60000], Loss: 0.0486, Accuracy: 98.73%

Epoch [13/30], Step [40000/60000], Loss: 0.0492, Accuracy: 98.72%

Epoch [13/30], Step [50000/60000], Loss: 0.0484, Accuracy: 98.73%

Epoch [13/30], Step [60000/60000], Loss: 0.0485, Accuracy: 98.72%

Epoch [14/30], Step [10000/60000], Loss: 0.0416, Accuracy: 98.91%

Epoch [14/30], Step [20000/60000], Loss: 0.0428, Accuracy: 98.84%

Epoch [14/30], Step [30000/60000], Loss: 0.0437, Accuracy: 98.85%

Epoch [14/30], Step [40000/60000], Loss: 0.0433, Accuracy: 98.88%

Epoch [14/30], Step [50000/60000], Loss: 0.0433, Accuracy: 98.87%

Epoch [14/30], Step [60000/60000], Loss: 0.0435, Accuracy: 98.86%

Epoch [15/30], Step [10000/60000], Loss: 0.0366, Accuracy: 99.02%

Epoch [15/30], Step [20000/60000], Loss: 0.0376, Accuracy: 99.03%

Epoch [15/30], Step [30000/60000], Loss: 0.0389, Accuracy: 99.01%

Epoch [15/30], Step [40000/60000], Loss: 0.0386, Accuracy: 99.02%

Epoch [15/30], Step [50000/60000], Loss: 0.0381, Accuracy: 99.05%

Epoch [15/30], Step [60000/60000], Loss: 0.0391, Accuracy: 99.00%

Epoch [16/30], Step [10000/60000], Loss: 0.0372, Accuracy: 99.10%

Epoch [16/30], Step [20000/60000], Loss: 0.0336, Accuracy: 99.19%

Epoch [16/30], Step [30000/60000], Loss: 0.0323, Accuracy: 99.23%

Epoch [16/30], Step [40000/60000], Loss: 0.0338, Accuracy: 99.17%

Epoch [16/30], Step [50000/60000], Loss: 0.0340, Accuracy: 99.17%

Epoch [16/30], Step [60000/60000], Loss: 0.0350, Accuracy: 99.12%

Epoch [17/30], Step [10000/60000], Loss: 0.0313, Accuracy: 99.30%

Epoch [17/30], Step [20000/60000], Loss: 0.0308, Accuracy: 99.33%

Epoch [17/30], Step [30000/60000], Loss: 0.0305, Accuracy: 99.29%

Epoch [17/30], Step [40000/60000], Loss: 0.0311, Accuracy: 99.28%

Epoch [17/30], Step [50000/60000], Loss: 0.0317, Accuracy: 99.27%

Epoch [17/30], Step [60000/60000], Loss: 0.0318, Accuracy: 99.24%

Epoch [18/30], Step [10000/60000], Loss: 0.0281, Accuracy: 99.37%

Epoch [18/30], Step [20000/60000], Loss: 0.0286, Accuracy: 99.36%

Epoch [18/30], Step [30000/60000], Loss: 0.0291, Accuracy: 99.35%

Epoch [18/30], Step [40000/60000], Loss: 0.0291, Accuracy: 99.34%

Epoch [18/30], Step [50000/60000], Loss: 0.0289, Accuracy: 99.35%

Epoch [18/30], Step [60000/60000], Loss: 0.0288, Accuracy: 99.35%

Epoch [19/30], Step [10000/60000], Loss: 0.0245, Accuracy: 99.47%

Epoch [19/30], Step [20000/60000], Loss: 0.0246, Accuracy: 99.46%

Epoch [19/30], Step [30000/60000], Loss: 0.0248, Accuracy: 99.43%

Epoch [19/30], Step [40000/60000], Loss: 0.0250, Accuracy: 99.41%

Epoch [19/30], Step [50000/60000], Loss: 0.0250, Accuracy: 99.43%

Epoch [19/30], Step [60000/60000], Loss: 0.0253, Accuracy: 99.43%

Epoch [20/30], Step [10000/60000], Loss: 0.0216, Accuracy: 99.55%

Epoch [20/30], Step [20000/60000], Loss: 0.0222, Accuracy: 99.59%

Epoch [20/30], Step [30000/60000], Loss: 0.0225, Accuracy: 99.56%

Epoch [20/30], Step [40000/60000], Loss: 0.0228, Accuracy: 99.54%

Epoch [20/30], Step [50000/60000], Loss: 0.0228, Accuracy: 99.55%

Epoch [20/30], Step [60000/60000], Loss: 0.0233, Accuracy: 99.52%

Epoch [21/30], Step [10000/60000], Loss: 0.0211, Accuracy: 99.61%

Epoch [21/30], Step [20000/60000], Loss: 0.0196, Accuracy: 99.62%

Epoch [21/30], Step [30000/60000], Loss: 0.0203, Accuracy: 99.62%

Epoch [21/30], Step [40000/60000], Loss: 0.0202, Accuracy: 99.62%

Epoch [21/30], Step [50000/60000], Loss: 0.0205, Accuracy: 99.60%

Epoch [21/30], Step [60000/60000], Loss: 0.0211, Accuracy: 99.58%

Epoch [22/30], Step [10000/60000], Loss: 0.0178, Accuracy: 99.72%

Epoch [22/30], Step [20000/60000], Loss: 0.0191, Accuracy: 99.68%

Epoch [22/30], Step [30000/60000], Loss: 0.0194, Accuracy: 99.66%

Epoch [22/30], Step [40000/60000], Loss: 0.0192, Accuracy: 99.66%

Epoch [22/30], Step [50000/60000], Loss: 0.0193, Accuracy: 99.64%

Epoch [22/30], Step [60000/60000], Loss: 0.0189, Accuracy: 99.65%

Epoch [23/30], Step [10000/60000], Loss: 0.0167, Accuracy: 99.67%

Epoch [23/30], Step [20000/60000], Loss: 0.0168, Accuracy: 99.69%

Epoch [23/30], Step [30000/60000], Loss: 0.0164, Accuracy: 99.72%

Epoch [23/30], Step [40000/60000], Loss: 0.0165, Accuracy: 99.72%

Epoch [23/30], Step [50000/60000], Loss: 0.0165, Accuracy: 99.72%

Epoch [23/30], Step [60000/60000], Loss: 0.0170, Accuracy: 99.70%

Epoch [24/30], Step [10000/60000], Loss: 0.0141, Accuracy: 99.84%

Epoch [24/30], Step [20000/60000], Loss: 0.0142, Accuracy: 99.83%

Epoch [24/30], Step [30000/60000], Loss: 0.0144, Accuracy: 99.81%

Epoch [24/30], Step [40000/60000], Loss: 0.0152, Accuracy: 99.78%

Epoch [24/30], Step [50000/60000], Loss: 0.0154, Accuracy: 99.77%

Epoch [24/30], Step [60000/60000], Loss: 0.0154, Accuracy: 99.77%

Epoch [25/30], Step [10000/60000], Loss: 0.0136, Accuracy: 99.85%

Epoch [25/30], Step [20000/60000], Loss: 0.0141, Accuracy: 99.81%

Epoch [25/30], Step [30000/60000], Loss: 0.0140, Accuracy: 99.79%

Epoch [25/30], Step [40000/60000], Loss: 0.0138, Accuracy: 99.79%

Epoch [25/30], Step [50000/60000], Loss: 0.0139, Accuracy: 99.79%

Epoch [25/30], Step [60000/60000], Loss: 0.0141, Accuracy: 99.77%

Epoch [26/30], Step [10000/60000], Loss: 0.0116, Accuracy: 99.85%

Epoch [26/30], Step [20000/60000], Loss: 0.0117, Accuracy: 99.85%

Epoch [26/30], Step [30000/60000], Loss: 0.0126, Accuracy: 99.82%

Epoch [26/30], Step [40000/60000], Loss: 0.0129, Accuracy: 99.82%

Epoch [26/30], Step [50000/60000], Loss: 0.0127, Accuracy: 99.82%

Epoch [26/30], Step [60000/60000], Loss: 0.0126, Accuracy: 99.82%

Epoch [27/30], Step [10000/60000], Loss: 0.0103, Accuracy: 99.90%

Epoch [27/30], Step [20000/60000], Loss: 0.0115, Accuracy: 99.89%

Epoch [27/30], Step [30000/60000], Loss: 0.0117, Accuracy: 99.88%

Epoch [27/30], Step [40000/60000], Loss: 0.0118, Accuracy: 99.86%

Epoch [27/30], Step [50000/60000], Loss: 0.0114, Accuracy: 99.88%

Epoch [27/30], Step [60000/60000], Loss: 0.0114, Accuracy: 99.88%

Epoch [28/30], Step [10000/60000], Loss: 0.0102, Accuracy: 99.95%

Epoch [28/30], Step [20000/60000], Loss: 0.0097, Accuracy: 99.91%

Epoch [28/30], Step [30000/60000], Loss: 0.0099, Accuracy: 99.89%

Epoch [28/30], Step [40000/60000], Loss: 0.0100, Accuracy: 99.90%

Epoch [28/30], Step [50000/60000], Loss: 0.0104, Accuracy: 99.89%

Epoch [28/30], Step [60000/60000], Loss: 0.0106, Accuracy: 99.88%

Epoch [29/30], Step [10000/60000], Loss: 0.0091, Accuracy: 99.93%

Epoch [29/30], Step [20000/60000], Loss: 0.0095, Accuracy: 99.92%

Epoch [29/30], Step [30000/60000], Loss: 0.0093, Accuracy: 99.92%

Epoch [29/30], Step [40000/60000], Loss: 0.0095, Accuracy: 99.92%

Epoch [29/30], Step [50000/60000], Loss: 0.0094, Accuracy: 99.92%

Epoch [29/30], Step [60000/60000], Loss: 0.0095, Accuracy: 99.91%

Epoch [30/30], Step [10000/60000], Loss: 0.0083, Accuracy: 99.91%

Epoch [30/30], Step [20000/60000], Loss: 0.0082, Accuracy: 99.92%

Epoch [30/30], Step [30000/60000], Loss: 0.0078, Accuracy: 99.94%

Epoch [30/30], Step [40000/60000], Loss: 0.0080, Accuracy: 99.94%

Epoch [30/30], Step [50000/60000], Loss: 0.0083, Accuracy: 99.93%

Epoch [30/30], Step [60000/60000], Loss: 0.0085, Accuracy: 99.92%

Training took 51.71s

Test loss: 0.065348+-0.011133, Test accuracy: 98.07+-0.14%

Model tensors saved to mnist-fc-f32.gguf:

fc1.weight (500, 784)

fc1.bias (500,)

fc2.weight (10, 500)

fc2.bias (10,)

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$
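To check that the saved file is a valid GGUF container without loading GGML, the fixed-size header can be read directly. This is a minimal sketch that assumes GGUF version 2 or later (little-endian, 64-bit counts); the function name `read_gguf_header` is hypothetical. For the model above, the tensor count should be 4.

```python
import os
import struct


def read_gguf_header(path):
    """Read the fixed-size GGUF header: magic, version, tensor count, KV count."""
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != b"GGUF":
            raise ValueError(f"not a GGUF file: magic={magic!r}")
        # Little-endian: uint32 version, uint64 tensor count, uint64 metadata KV count.
        version, n_tensors, n_kv = struct.unpack("<IQQ", f.read(20))
    return {"version": version, "n_tensors": n_tensors, "n_kv": n_kv}


if __name__ == "__main__":
    path = "mnist-fc-f32.gguf"  # file name used in the training command above
    if os.path.exists(path):
        print(read_gguf_header(path))
```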

The training script includes an evaluation of the model on the test set.

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$ ls -l data/MNIST/raw/

total 65008

-rw-r--r-- 1 yongqiang yongqiang 7840016 Feb 15 23:25 t10k-images-idx3-ubyte

-rw-r--r-- 1 yongqiang yongqiang 1648877 Feb 15 23:25 t10k-images-idx3-ubyte.gz

-rw-r--r-- 1 yongqiang yongqiang 10008 Feb 15 23:25 t10k-labels-idx1-ubyte

-rw-r--r-- 1 yongqiang yongqiang 4542 Feb 15 23:25 t10k-labels-idx1-ubyte.gz

-rw-r--r-- 1 yongqiang yongqiang 47040016 Feb 15 23:25 train-images-idx3-ubyte

-rw-r--r-- 1 yongqiang yongqiang 9912422 Feb 15 23:25 train-images-idx3-ubyte.gz

-rw-r--r-- 1 yongqiang yongqiang 60008 Feb 15 23:25 train-labels-idx1-ubyte

-rw-r--r-- 1 yongqiang yongqiang 28881 Feb 15 23:25 train-labels-idx1-ubyte.gz

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$
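The sizes of the uncompressed files in the listing above follow from the IDX format: a 4-byte magic number (two zero bytes, a type code, and the number of dimensions), one big-endian 32-bit integer per dimension, and then one unsigned byte per element. The sketch below reads such a header and reproduces the expected sizes; the helper names are illustrative.

```python
import struct
from math import prod


def read_idx_header(path):
    """Return (type_code, dims) parsed from the header of an IDX file."""
    with open(path, "rb") as f:
        zero_hi, zero_lo, type_code, ndim = struct.unpack(">BBBB", f.read(4))
        if zero_hi != 0 or zero_lo != 0:
            raise ValueError("not an IDX file: first two bytes must be zero")
        dims = struct.unpack(f">{ndim}I", f.read(4 * ndim))
    return type_code, dims


def expected_idx_size(dims):
    """File size in bytes for unsigned-byte data: header plus one byte per element."""
    return 4 + 4 * len(dims) + prod(dims)


print(expected_idx_size((10000, 28, 28)))  # 7840016  (t10k-images-idx3-ubyte)
print(expected_idx_size((10000,)))         # 10008    (t10k-labels-idx1-ubyte)
print(expected_idx_size((60000, 28, 28)))  # 47040016 (train-images-idx3-ubyte)
print(expected_idx_size((60000,)))         # 60008    (train-labels-idx1-ubyte)
```

Calling `read_idx_header("data/MNIST/raw/t10k-images-idx3-ubyte")` from `examples/mnist` should return type code 8 (unsigned byte) with dimensions `(10000, 28, 28)`.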

2.2.2. To evaluate the model on the CPU using GGML

$ ../../build/bin/mnist-eval mnist-fc-f32.gguf data/MNIST/raw/t10k-images-idx3-ubyte data/MNIST/raw/t10k-labels-idx1-ubyte

________________________________________________________

________________________________________________________

________________________________________________________

________________________________________________________

__________________________________####__________________

______________________________########__________________

__________________________##########____________________

______________________##############____________________

____________________######________####__________________

__________________________________####__________________

__________________________________####__________________

________________________________####____________________

______________________________####______________________

________________________##########______________________

______________________########__####____________________

________________________##__________##__________________

____________________________________##__________________

__________________________________##____________________

__________________________________##____________________

________________________________##______________________

____________________________####________________________

__________##____________######__________________________

__________##############________________________________

________________####____________________________________

________________________________________________________

________________________________________________________

________________________________________________________

________________________________________________________

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 3090, compute capability 8.6, VMM: yes

mnist_model: using CUDA0 (NVIDIA GeForce RTX 3090) as primary backend

mnist_model: unsupported operations will be executed on the following fallback backends (in order of priority):

mnist_model: - CPU (AMD Ryzen 9 5950X 16-Core Processor)

mnist_model_init_from_file: loading model weights from 'mnist-fc-f32.gguf'

mnist_model_init_from_file: model arch is mnist-fc

mnist_model_init_from_file: successfully loaded weights from mnist-fc-f32.gguf

main: loaded model in 109.44 ms

mnist_model_eval: model evaluation on 10000 images took 76.92 ms, 7.69 us/image

main: predicted digit is 3

main: test_loss=0.066379+-0.009101

main: test_acc=97.94+-0.14%

In addition to evaluating the model on the test set, the GGML evaluation prints a random image from the test set together with the model's prediction for that image.

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$ ../../build/bin/mnist-eval mnist-fc-f32.gguf data/MNIST/raw/t10k-images-idx3-ubyte data/MNIST/raw/t10k-labels-idx1-ubyte

...

mnist_model: using CPU (Intel(R) Core(TM) i7-8750H CPU @ 2.20GHz) as primary backend

mnist_model_init_from_file: loading model weights from 'mnist-fc-f32.gguf'

mnist_model_init_from_file: model arch is mnist-fc

mnist_model_init_from_file: successfully loaded weights from mnist-fc-f32.gguf

main: loaded model in 3.84 ms

mnist_model_eval: model evaluation on 10000 images took 83.78 ms, 8.38 us/image

main: predicted digit is 1

main: test_loss=0.065348+-0.009093

main: test_acc=98.07+-0.14%

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$
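The `+-` values printed next to the test accuracy are consistent with the binomial standard error of a proportion measured on 10000 images. Whether GGML computes them in exactly this way is an assumption; the sketch below only shows that the formula reproduces the reported numbers.

```python
from math import sqrt


def accuracy_uncertainty(accuracy, n_samples):
    """Binomial standard error of an accuracy measured on n_samples examples."""
    return sqrt(accuracy * (1.0 - accuracy) / n_samples)


# Accuracies reported for the README run and for the run shown above.
for acc, n in [(0.9794, 10000), (0.9807, 10000)]:
    unc = accuracy_uncertainty(acc, n)
    print(f"test_acc={100 * acc:.2f}+-{100 * unc:.2f}%")
```

The same formula with 3000 validation images and an accuracy of 98.00% gives about 0.26%, which matches the `val` lines printed by `mnist-train`.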

2.2.3. To train a fully connected model on the CPU using GGML

$ ../../build/bin/mnist-train mnist-fc mnist-fc-f32.gguf data/MNIST/raw/train-images-idx3-ubyte data/MNIST/raw/train-labels-idx1-ubyte

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$ ../../build/bin/mnist-train mnist-fc mnist-fc-f32.gguf data/MNIST/raw/train-images-idx3-ubyte data/MNIST/raw/train-labels-idx1-ubyte

mnist_model: using CPU (Intel(R) Core(TM) i7-8750H CPU @ 2.20GHz) as primary backend

mnist_model_init_random: initializing random weights for a fully connected model

ggml_opt_fit: epoch 0001/0030:

train: [=========================| data=057000/057000, loss=0.842470+-0.057215, accuracy=81.99+-0.16%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.354736+-0.009396, accuracy=89.77+-0.55%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0002/0030:

train: [=========================| data=057000/057000, loss=0.297789+-0.003739, accuracy=91.54+-0.12%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.267287+-0.011581, accuracy=92.37+-0.48%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0003/0030:

train: [=========================| data=057000/057000, loss=0.236971+-0.003106, accuracy=93.32+-0.10%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.221299+-0.010660, accuracy=93.80+-0.44%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0004/0030:

train: [=========================| data=057000/057000, loss=0.195029+-0.002957, accuracy=94.46+-0.10%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.187524+-0.010246, accuracy=94.50+-0.42%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0005/0030:

train: [=========================| data=057000/057000, loss=0.163346+-0.002484, accuracy=95.41+-0.09%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.167672+-0.008361, accuracy=95.00+-0.40%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0006/0030:

train: [=========================| data=057000/057000, loss=0.140522+-0.002344, accuracy=96.04+-0.08%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.150562+-0.010338, accuracy=95.60+-0.37%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0007/0030:

train: [=========================| data=057000/057000, loss=0.122155+-0.002059, accuracy=96.52+-0.08%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.137456+-0.008758, accuracy=96.43+-0.34%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0008/0030:

train: [=========================| data=057000/057000, loss=0.105244+-0.001992, accuracy=97.05+-0.07%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.126794+-0.008748, accuracy=96.43+-0.34%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0009/0030:

train: [=========================| data=057000/057000, loss=0.094218+-0.001796, accuracy=97.39+-0.07%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.116145+-0.006691, accuracy=96.77+-0.32%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0010/0030:

train: [=========================| data=057000/057000, loss=0.084340+-0.001764, accuracy=97.62+-0.06%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.111088+-0.006398, accuracy=96.80+-0.32%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0011/0030:

train: [=========================| data=057000/057000, loss=0.074447+-0.001579, accuracy=97.94+-0.06%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.104478+-0.008579, accuracy=97.13+-0.30%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0012/0030:

train: [=========================| data=057000/057000, loss=0.066881+-0.001480, accuracy=98.12+-0.06%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.098624+-0.006870, accuracy=97.13+-0.30%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0013/0030:

train: [=========================| data=057000/057000, loss=0.059336+-0.001320, accuracy=98.38+-0.05%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.097907+-0.006335, accuracy=97.37+-0.29%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0014/0030:

train: [=========================| data=057000/057000, loss=0.053577+-0.001345, accuracy=98.58+-0.05%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.096755+-0.007440, accuracy=97.33+-0.29%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0015/0030:

train: [=========================| data=057000/057000, loss=0.048068+-0.001061, accuracy=98.72+-0.05%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.090680+-0.007853, accuracy=97.60+-0.28%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0016/0030:

train: [=========================| data=057000/057000, loss=0.043474+-0.001046, accuracy=98.87+-0.04%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.092639+-0.007943, accuracy=97.37+-0.29%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0017/0030:

train: [=========================| data=057000/057000, loss=0.039780+-0.000824, accuracy=98.95+-0.04%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.082859+-0.006335, accuracy=97.73+-0.27%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0018/0030:

train: [=========================| data=057000/057000, loss=0.035487+-0.001002, accuracy=99.12+-0.04%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.081594+-0.007036, accuracy=97.87+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0019/0030:

train: [=========================| data=057000/057000, loss=0.032300+-0.000904, accuracy=99.23+-0.04%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.082995+-0.007232, accuracy=97.73+-0.27%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0020/0030:

train: [=========================| data=057000/057000, loss=0.028769+-0.000809, accuracy=99.35+-0.03%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.076938+-0.006239, accuracy=98.03+-0.25%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0021/0030:

train: [=========================| data=057000/057000, loss=0.026851+-0.000771, accuracy=99.39+-0.03%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.078551+-0.006827, accuracy=97.83+-0.27%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0022/0030:

train: [=========================| data=057000/057000, loss=0.024355+-0.000553, accuracy=99.48+-0.03%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.077560+-0.007714, accuracy=97.87+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0023/0030:

train: [=========================| data=057000/057000, loss=0.021858+-0.000612, accuracy=99.54+-0.03%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.077453+-0.007612, accuracy=97.97+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0024/0030:

train: [=========================| data=057000/057000, loss=0.019889+-0.000548, accuracy=99.62+-0.03%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.075201+-0.006989, accuracy=97.97+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0025/0030:

train: [=========================| data=057000/057000, loss=0.017892+-0.000533, accuracy=99.72+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.075809+-0.007840, accuracy=97.90+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0026/0030:

train: [=========================| data=057000/057000, loss=0.016237+-0.000444, accuracy=99.75+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.078347+-0.008378, accuracy=97.97+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0027/0030:

train: [=========================| data=057000/057000, loss=0.015027+-0.000438, accuracy=99.78+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.075985+-0.007250, accuracy=97.93+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0028/0030:

train: [=========================| data=057000/057000, loss=0.014067+-0.000477, accuracy=99.80+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.076615+-0.008804, accuracy=98.07+-0.25%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0029/0030:

train: [=========================| data=057000/057000, loss=0.012649+-0.000385, accuracy=99.85+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.076876+-0.009151, accuracy=97.93+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: epoch 0030/0030:

train: [=========================| data=057000/057000, loss=0.011452+-0.000376, accuracy=99.86+-0.02%, t=00:00:01, ETA=00:00:00]

val: [=========================| data=003000/003000, loss=0.076046+-0.008447, accuracy=98.00+-0.26%, t=00:00:00, ETA=00:00:00]

ggml_opt_fit: training took 00:00:34

mnist_model_save: saving model to 'mnist-fc-f32.gguf'

(base) yongqiang@yongqiang:~/llm_work/ggml_25_02_15/ggml/examples/mnist$

It can then be evaluated with the same binary as above.
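The log above shows that the 60000 training images are split into 57000 for training and 3000 for validation. To plot or compare runs, the `train:` and `val:` progress lines can be parsed into numbers. This is a minimal sketch that assumes the line format shown in the log above; `parse_progress_line` is a hypothetical helper, not part of GGML.

```python
import re

# Matches the "train:" / "val:" progress lines printed during training.
LINE_RE = re.compile(
    r"^(?P<split>train|val): .*?"
    r"data=(?P<seen>\d+)/(?P<total>\d+), "
    r"loss=(?P<loss>[\d.]+)\+-(?P<loss_unc>[\d.]+), "
    r"accuracy=(?P<acc>[\d.]+)\+-(?P<acc_unc>[\d.]+)%"
)


def parse_progress_line(line):
    """Return a dict of metrics for a progress line, or None if it does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "split": m["split"],
        "seen": int(m["seen"]),
        "total": int(m["total"]),
        "loss": float(m["loss"]),
        "loss_unc": float(m["loss_unc"]),
        "accuracy": float(m["acc"]),
        "accuracy_unc": float(m["acc_unc"]),
    }


sample = (
    "val: [=========================| data=003000/003000, "
    "loss=0.076046+-0.008447, accuracy=98.00+-0.26%, t=00:00:00, ETA=00:00:00]"
)
print(parse_progress_line(sample))
```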

2.3. Hardware Acceleration

Both the training and the evaluation code are hardware-agnostic, as long as the corresponding GGML backend implements the necessary operations.

A specific backend can be selected by appending a backend name to the commands above.

The compute graphs then schedule the operations to preferentially use the specified backend.

Note that if a backend does not implement some of the necessary operations, a CPU fallback is used instead, which may result in poor performance.
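As an illustration of appending a backend name, the following sketch builds the evaluation command as an argument list. The backend name `CUDA0` is taken from the sample log in section 2.2.2; which names are available depends on how GGML was built and on the hardware, so treat it as an assumption. The helper `build_eval_command` is hypothetical.

```python
import shlex


def build_eval_command(model, images, labels, backend=None,
                       binary="../../build/bin/mnist-eval"):
    """Return the mnist-eval command line, with an optional trailing backend name."""
    cmd = [binary, model, images, labels]
    if backend:
        cmd.append(backend)  # e.g. "CUDA0"; omitted to keep the default backend
    return cmd


cmd = build_eval_command(
    "mnist-fc-f32.gguf",
    "data/MNIST/raw/t10k-images-idx3-ubyte",
    "data/MNIST/raw/t10k-labels-idx1-ubyte",
    backend="CUDA0",
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the `examples/mnist` directory.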

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/