Linear Regression

1. API calls

Loss function, hypothesis function, and optimization method
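The three components can be written as formulas. This is a sketch that matches the defaults of `nn.Linear`, `nn.MSELoss` (`reduction='mean'`) and plain `optim.SGD` (no momentum, no weight decay). Here $X$ is a batch of inputs with shape (N, in_features), $W$ has shape (out_features, in_features), $m$ is the total number of elements in the prediction tensor, and $\eta$ is the learning rate `lr`:

$$\hat{Y} = X W^\top + b$$

$$L = \frac{1}{m}\sum_{j=1}^{m}\left(\hat{y}_j - y_j\right)^2$$

$$\theta \leftarrow \theta - \eta\,\nabla_\theta L$$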

import torch
import torch.nn as nn
import torch.optim as optim

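# Loss function
def test1():
    # Create the loss-function object (mean squared error)
    criterion = nn.MSELoss()
    # Predictions require gradients so the loss can later be backpropagated
    y_pred = torch.randn(3, 5, requires_grad=True)
    y_test = torch.randn(3, 5)
    # Compute the loss
    loss = criterion(y_pred, y_test)
    print(loss)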
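To see what `nn.MSELoss` actually computes, here is a small check with hand-picked tensors (the values are chosen only so the arithmetic is easy to follow). With the default `reduction='mean'` the loss is the mean of the squared differences over all elements; `reduction='sum'` returns the sum instead.

```python
import torch
import torch.nn as nn

# Small fixed tensors so the result can be checked by hand
y_pred = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
y_true = torch.tensor([[1.0, 0.0], [1.0, 4.0]])

criterion = nn.MSELoss()  # default reduction='mean'
loss = criterion(y_pred, y_true)

# Same quantity computed manually: differences are 0, 2, 2, 0 -> squares 0, 4, 4, 0 -> mean 2.0
manual = ((y_pred - y_true) ** 2).mean()

# reduction='sum' adds the squared differences instead of averaging them
sum_loss = nn.MSELoss(reduction='sum')(y_pred, y_true)

print(loss.item(), manual.item())  # 2.0 2.0
print(sum_loss.item())             # 8.0
```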

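# Hypothesis function
def test2():
    # A linear layer mapping 5 input features to 2 outputs: y = x W^T + b
    linear = nn.Linear(in_features=5, out_features=2)
    x = torch.randn(10, 5)  # 10 samples, 5 features each
    output = linear(x)      # shape (10, 2)
    print(output)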
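A quick way to confirm what the hypothesis function does is to recompute the forward pass by hand. The sketch below relies only on the documented shapes of `nn.Linear` (weight is `(out_features, in_features)`, bias is `(out_features,)`); the seed is arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
linear = nn.Linear(in_features=5, out_features=2)
x = torch.randn(10, 5)

# Parameter shapes stored by nn.Linear
print(linear.weight.shape, linear.bias.shape)  # torch.Size([2, 5]) torch.Size([2])

# The forward pass is the affine map y = x W^T + b
manual = x @ linear.weight.T + linear.bias
out = linear(x)

print(out.shape)                    # torch.Size([10, 2])
print(torch.allclose(out, manual))  # True
```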

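# Optimization method
def test3():
    linear = nn.Linear(in_features=5, out_features=2)
    # The optimizer updates the model parameters
    optimizer = optim.SGD(linear.parameters(), lr=1e-3)
    # Dummy data and loss so there are gradients to compute
    x = torch.randn(10, 5)
    y = torch.randn(10, 2)
    loss = nn.MSELoss()(linear(x), y)
    # Zero the gradients
    optimizer.zero_grad()
    # Backward pass: compute the gradients
    loss.backward()
    # Update the parameters
    optimizer.step()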
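The sketch below checks that one `optimizer.step()` of plain SGD (no momentum, no weight decay) is exactly the update rule $\theta \leftarrow \theta - \text{lr}\cdot\nabla_\theta L$. The random data is only there to produce some gradients.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
linear = nn.Linear(in_features=5, out_features=2)
optimizer = optim.SGD(linear.parameters(), lr=1e-3)
criterion = nn.MSELoss()

x = torch.randn(4, 5)
y = torch.randn(4, 2)

# Copy the parameters before the update
w_before = linear.weight.detach().clone()
b_before = linear.bias.detach().clone()

optimizer.zero_grad()           # clear gradients left over from a previous step
loss = criterion(linear(x), y)  # forward pass + loss
loss.backward()                 # fills linear.weight.grad and linear.bias.grad
optimizer.step()                # plain SGD: p <- p - lr * p.grad

expected_w = w_before - 1e-3 * linear.weight.grad
expected_b = b_before - 1e-3 * linear.bias.grad
print(torch.allclose(linear.weight, expected_w))  # True
print(torch.allclose(linear.bias, expected_b))    # True
```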

Data loader

A DataLoader draws a given number of samples (one batch) from a dataset at a time.

from torch.utils.data import TensorDataset, DataLoader

# Data loader
def test4():
    # 10 samples with 5 features, plus one target value per sample
    x = torch.randn(10, 5)
    y = torch.randn(x.size(0))
    data = TensorDataset(x, y)
    # Yield shuffled batches of 2 samples
    dataloader = DataLoader(data, batch_size=2, shuffle=True)
    # Each iteration yields 2 random samples, e.g.:
    # tensor([[-0.9541,  1.5186,  0.3018,  0.5918,  0.2793],
    #         [ 1.4354, -0.7736,  1.3113, -0.0430, -0.5751]]) tensor([-0.4945,  0.3350])
    for x, y in dataloader:
        print(x, y)
        break
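When the dataset size is not a multiple of `batch_size`, the last batch is smaller. The sketch below shows how `TensorDataset` indexes samples and how `drop_last=True` discards the incomplete final batch (batch size 3 is chosen only to make the remainder visible).

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

x = torch.randn(10, 5)
y = torch.randn(10)
dataset = TensorDataset(x, y)

print(len(dataset))                              # 10
print(dataset[0][0].shape, dataset[0][1].shape)  # torch.Size([5]) torch.Size([])

# 10 samples with batch_size=3 -> batches of 3, 3, 3 and a final batch of 1
loader = DataLoader(dataset, batch_size=3, shuffle=True)
sizes = [xb.shape[0] for xb, yb in loader]
print(len(loader), sizes)  # 4 [3, 3, 3, 1]

# drop_last=True discards the incomplete final batch
loader_drop = DataLoader(dataset, batch_size=3, shuffle=True, drop_last=True)
sizes_drop = [xb.shape[0] for xb, yb in loader_drop]
print(len(loader_drop), sizes_drop)  # 3 [3, 3, 3]
```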

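# Build a linear regression model
def test5():
    # Data loader: 10 samples with 5 features; the targets need 2 columns to match out_features=2
    dataloader = DataLoader(TensorDataset(torch.randn(10, 5), torch.randn(10, 2)), batch_size=2, shuffle=True)
    # Model
    linear = nn.Linear(in_features=5, out_features=2)
    # Loss function
    criterion = nn.MSELoss()
    # Optimizer
    optimizer = optim.SGD(linear.parameters(), lr=1e-3)
    # Number of epochs
    epochs = 1000
    for epoch in range(epochs):
        for x, y in dataloader:
            # Forward pass
            y_pred = linear(x)
            # Compute the loss
            loss = criterion(y_pred, y)
            # Zero the gradients
            optimizer.zero_grad()
            # Backpropagation
            loss.backward()
            # Update the parameters
            optimizer.step()
    print(loss.item())  # loss of the last batch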
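Because `test5` trains on pure noise, there is nothing to learn. A more telling sketch generates data from a known linear map (the weights `true_w`, `true_b`, the noise level and `lr=0.05` are assumptions chosen for illustration) and checks that the same training loop recovers those weights.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import TensorDataset, DataLoader

torch.manual_seed(0)

# Synthetic data from an assumed linear map: y = x @ true_w.T + true_b + small noise
true_w = torch.tensor([[1.0, -2.0, 0.5, 3.0, 0.0],
                       [0.0, 1.0, -1.0, 2.0, 4.0]])
true_b = torch.tensor([0.5, -1.0])
x = torch.randn(200, 5)
y = x @ true_w.T + true_b + 0.01 * torch.randn(200, 2)

loader = DataLoader(TensorDataset(x, y), batch_size=16, shuffle=True)
model = nn.Linear(in_features=5, out_features=2)
criterion = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.05)

for epoch in range(100):
    total = 0.0
    for xb, yb in loader:
        optimizer.zero_grad()
        loss = criterion(model(xb), yb)
        loss.backward()
        optimizer.step()
        total += loss.item() * xb.shape[0]  # accumulate a sample-weighted loss
    if epoch % 20 == 0:
        print(f"epoch {epoch}: mean loss {total / len(x):.4f}")

# Evaluate on the full dataset without tracking gradients
with torch.no_grad():
    final_loss = criterion(model(x), y).item()
print(f"final loss {final_loss:.5f}")
print(model.weight.data)  # should be close to true_w
print(model.bias.data)    # should be close to true_b
```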

Saving models

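1. Serialize the whole model object with pickle. The saved file depends on the PyTorch version, and the model class must be importable when the file is loaded.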

2. Put all the parameters into a dictionary and save the dictionary.

# 模型的存储

def test6():

model1 = model(in_features=5,out_features=2)

torch.save(model1,'models.pth',pickle_module=pickle,pickle_protocol=2)

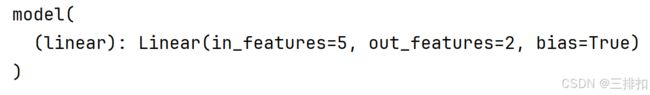

model2=torch.load('models.pth',pickle_module=pickle,map_location='cpu')

print(model2) model = Model(128,10)

optimizer = optim.Adam(model.parameters(), lr=1e-3)

save_params = {

'init_params':{'input_size':128,'output_size':10},

'acc_score':0.98,

'optimizer_params':optimizer.state_dict(),

'model_params':model.state_dict()

}

torch.save(save_params,'moxing/lianxisave_params.pth')

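# Load the dictionary and restore the model and the optimizer
def test7():
    model_params = torch.load('moxing/lianxisave_params.pth')
    # Initialize the model from the stored constructor arguments
    model = Model(model_params['init_params']['input_size'], model_params['init_params']['output_size'])
    # Load the model parameters
    model.load_state_dict(model_params['model_params'])
    # Rebuild the optimizer, then restore its saved state
    optimizer = optim.Adam(model.parameters())
    optimizer.load_state_dict(model_params['optimizer_params'])
    print('acc', model_params['acc_score'])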
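The dictionary approach can be checked end to end without touching the disk. This sketch swaps the file for an in-memory `io.BytesIO` buffer and uses a plain `nn.Linear(128, 10)` in place of the undefined `Model` class (both are assumptions for illustration). One training step is taken first so the Adam state is not empty; after reloading, the weights and the optimizer step count should match.

```python
import io
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
model = nn.Linear(128, 10)
optimizer = optim.Adam(model.parameters(), lr=1e-3)

# One training step so the Adam state (step, exp_avg, exp_avg_sq) is populated
loss = model(torch.randn(4, 128)).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()

print(list(model.state_dict().keys()))  # ['weight', 'bias']

checkpoint = {
    'init_params': {'input_size': 128, 'output_size': 10},
    'model_params': model.state_dict(),
    'optimizer_params': optimizer.state_dict(),
}

# Save to an in-memory buffer instead of a file so nothing is left on disk
buffer = io.BytesIO()
torch.save(checkpoint, buffer)
buffer.seek(0)
loaded = torch.load(buffer)

# Rebuild the model from the stored init params, then restore weights and optimizer state
restored = nn.Linear(loaded['init_params']['input_size'], loaded['init_params']['output_size'])
restored.load_state_dict(loaded['model_params'])
restored_opt = optim.Adam(restored.parameters(), lr=1e-3)
restored_opt.load_state_dict(loaded['optimizer_params'])

print(torch.equal(restored.weight, model.weight))  # True
```

Saving only `state_dict()` objects keeps the file independent of how the model class is pickled, which is why this approach is usually preferred over pickling the whole model.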