DPDK KNI exception path

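The DPDK examples provide two ways to interact with the Linux kernel protocol stack: TAP and KNI. Both create a virtual device that is used to send and receive packets.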

Creating the TAP/TUN device

    static int tap_create(char *name)
    {
        struct ifreq ifr;
        int fd, ret;

        fd = open("/dev/net/tun", O_RDWR);
        if (fd < 0)
            return fd;

        memset(&ifr, 0, sizeof(ifr));

        /* TAP device without packet information */
        ifr.ifr_flags = IFF_TAP | IFF_NO_PI;
        if (name && *name)
            rte_snprintf(ifr.ifr_name, IFNAMSIZ, name);

        ret = ioctl(fd, TUNSETIFF, (void *) &ifr);
        if (ret < 0) {
            close(fd);
            return ret;
        }

        /* Copy back the name the kernel actually assigned */
        if (name)
            rte_snprintf(name, IFNAMSIZ, ifr.ifr_name);

        return fd;
    }

Sending packets to the TAP device

    for (;;) {
        struct rte_mbuf *pkts_burst[PKT_BURST_SZ];
        unsigned i;

        /* Receive a burst of packets from the PMD */
        const unsigned nb_rx = rte_eth_rx_burst(port_ids[lcore_id], 0,
                                                pkts_burst, PKT_BURST_SZ);
        lcore_stats[lcore_id].rx += nb_rx;

        for (i = 0; likely(i < nb_rx); i++) {
            struct rte_mbuf *m = pkts_burst[i];

            /* Write the received packet data to the TAP device;
             * a failed write() is only counted as a drop */
            int ret = write(tap_fd, rte_pktmbuf_mtod(m, void*),
                            rte_pktmbuf_data_len(m));

            /* Free the mbuf */
            rte_pktmbuf_free(m);
            if (unlikely(ret < 0))
                lcore_stats[lcore_id].dropped++;
            else
                lcore_stats[lcore_id].tx++;
        }
    }

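Once a packet is in the kernel, it has to be forwarded at L2/L3 (by bridging or routing) from one TAP/TUN device to another TAP/TUN device, where the application reads it: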

    for (;;) {
        int ret;
        struct rte_mbuf *m = rte_pktmbuf_alloc(pktmbuf_pool);
        if (m == NULL)
            continue;

        /* Read one packet from the TAP device into the mbuf data area */
        ret = read(tap_fd, m->pkt.data, MAX_PACKET_SZ);
        lcore_stats[lcore_id].rx++;
        if (unlikely(ret < 0)) {
            FATAL_ERROR("Reading from %s interface failed", tap_name);
        }

        /* Fill in the mbuf metadata */
        m->pkt.nb_segs = 1;
        m->pkt.next = NULL;
        m->pkt.pkt_len = (uint16_t)ret;
        m->pkt.data_len = (uint16_t)ret;

        /* Transmit the packet */
        ret = rte_eth_tx_burst(port_ids[lcore_id], 0, &m, 1);
        if (unlikely(ret < 1)) {
            rte_pktmbuf_free(m);
            lcore_stats[lcore_id].dropped++;
        } else {
            lcore_stats[lcore_id].tx++;
        }
    }

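This approach is simple, but it is probably inefficient: the data has to be copied from user space into kernel space, and it may be copied once more when the skb is finally built. I will study the kernel TAP/TUN code in more detail later if the need arises.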

The KNI implementation. The example implements the following:

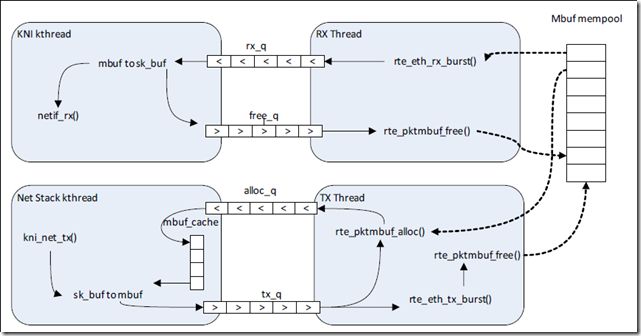

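RX direction: the PMD allocates the mbufs. After the user-space RX thread receives a burst, it puts the mbufs into the rx_q FIFO. The KNI kernel thread takes the mbufs out of rx_q, converts each one into an skb, and hands it to the protocol stack (netif_receive_skb in the code shown later). Finally, the mbufs taken from rx_q are put into free_q, and the user-space RX thread frees them.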

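TX direction: for a packet that the protocol stack sends to the KNI device, the KNI transmit function kni_net_tx takes a free mbuf from alloc_q, copies the skb into that mbuf, and puts the mbuf into tx_q. The user-space TX thread takes the mbuf out of tx_q and calls the PMD transmit function to send the packet.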

    /* Initialise each port */
    for (port = 0; port < nb_sys_ports; port++) {
        /* Skip ports that are not enabled */
        if (!(ports_mask & (1 << port)))
            continue;

        /* Initialise and start the port's RX/TX queues */
        init_port(port);

        if (port >= RTE_MAX_ETHPORTS)
            rte_exit(EXIT_FAILURE, "Can not use more than "
                "%d ports for kni\n", RTE_MAX_ETHPORTS);

        /* Allocate a KNI device for each physical port */
        kni_alloc(port);
    }

    static int kni_alloc(uint8_t port_id)
    {
        uint8_t i;
        struct rte_kni *kni;
        struct rte_kni_conf conf;
        struct kni_port_params **params = kni_port_params_array;

        if (port_id >= RTE_MAX_ETHPORTS || !params[port_id])
            return -1;

        /*
         * The number of KNI devices follows the number of kernel threads
         * configured for this port.
         *
         * Multiple-thread mode:
         *   - no kernel thread count given: one KNI device per port, and the
         *     kernel thread is not tied to an lcore;
         *   - kernel thread count given: that many KNI devices per port, each
         *     kernel thread tied to its configured lcore.
         * Single-thread mode:
         *   - no kernel thread count given: one KNI device per port;
         *   - kernel thread count given: that many KNI devices per port.
         */
        params[port_id]->nb_kni = params[port_id]->nb_lcore_k ?
                    params[port_id]->nb_lcore_k : 1;

        for (i = 0; i < params[port_id]->nb_kni; i++) {
            /* Clear conf at first */
            memset(&conf, 0, sizeof(conf));
            if (params[port_id]->nb_lcore_k) {
                rte_snprintf(conf.name, RTE_KNI_NAMESIZE,
                        "vEth%u_%u", port_id, i);
                /* Multiple-thread mode: force the kernel thread onto this lcore */
                conf.core_id = params[port_id]->lcore_k[i];
                conf.force_bind = 1;
            } else
                rte_snprintf(conf.name, RTE_KNI_NAMESIZE,
                        "vEth%u", port_id);
            conf.group_id = (uint16_t)port_id;
            conf.mbuf_size = MAX_PACKET_SZ;

            /* Create the KNI device */
            /*
             * The first KNI device associated to a port
             * is the master, for multiple kernel thread
             * environment.
             */
            if (i == 0) {
                struct rte_kni_ops ops;
                struct rte_eth_dev_info dev_info;

                memset(&dev_info, 0, sizeof(dev_info));
                rte_eth_dev_info_get(port_id, &dev_info);
                conf.addr = dev_info.pci_dev->addr;
                conf.id = dev_info.pci_dev->id;

                memset(&ops, 0, sizeof(ops));
                ops.port_id = port_id;
                ops.change_mtu = kni_change_mtu;
                ops.config_network_if = kni_config_network_interface;

                kni = rte_kni_alloc(pktmbuf_pool, &conf, &ops);
            } else
                kni = rte_kni_alloc(pktmbuf_pool, &conf, NULL);

            if (!kni)
                rte_exit(EXIT_FAILURE, "Fail to create kni for "
                        "port: %d\n", port_id);
            params[port_id]->kni[i] = kni;
        }

        return 0;
    }
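The naming and counting rule in kni_alloc can be looked at in isolation. The following is a minimal sketch rather than DPDK code: `kni_ifname` and `KNI_NAMESIZE` are hypothetical names introduced for illustration, and the standard `snprintf` stands in for `rte_snprintf`.

```c
#include <assert.h>
#include <stdio.h>

#define KNI_NAMESIZE 32  /* assumption: stand-in for RTE_KNI_NAMESIZE */

/*
 * Hypothetical helper mirroring the rule used in kni_alloc():
 * if kernel threads are configured for the port (nb_lcore_k > 0), device
 * number idx is named "vEth<port>_<idx>"; otherwise the port's single
 * device is named "vEth<port>".
 * Returns how many KNI devices the port gets under that rule.
 */
static unsigned kni_ifname(char *buf, size_t size, unsigned port_id,
                           unsigned idx, unsigned nb_lcore_k)
{
    assert(buf != NULL && size > 0);

    if (nb_lcore_k)
        snprintf(buf, size, "vEth%u_%u", port_id, idx);
    else
        snprintf(buf, size, "vEth%u", port_id);

    return nb_lcore_k ? nb_lcore_k : 1;
}
```

For example, a port 1 configured with four kernel threads would get vEth1_0 through vEth1_3, while a port 0 with no kernel thread count would get only vEth0.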

    struct rte_kni *
    rte_kni_alloc(struct rte_mempool *pktmbuf_pool,
                  const struct rte_kni_conf *conf,
                  struct rte_kni_ops *ops)
    {
        int ret;
        struct rte_kni_device_info dev_info;
        struct rte_kni *ctx;
        char intf_name[RTE_KNI_NAMESIZE];
    #define OBJNAMSIZ 32
        char obj_name[OBJNAMSIZ];
        char mz_name[RTE_MEMZONE_NAMESIZE];
        const struct rte_memzone *mz;

        if (!pktmbuf_pool || !conf || !conf->name[0])
            return NULL;

        /* The create request is sent through /dev/kni */
        /* Check FD and open once */
        if (kni_fd < 0) {
            kni_fd = open("/dev/" KNI_DEVICE, O_RDWR);
            if (kni_fd < 0) {
                RTE_LOG(ERR, KNI, "Can not open /dev/%s\n", KNI_DEVICE);
                return NULL;
            }
        }

        /* vEthX_Y (port_thread) or vEthX (port) */
        rte_snprintf(intf_name, RTE_KNI_NAMESIZE, conf->name);
        rte_snprintf(mz_name, RTE_MEMZONE_NAMESIZE, "KNI_INFO_%s", intf_name);

        /* Memzone that holds struct rte_kni */
        mz = kni_memzone_reserve(mz_name, sizeof(struct rte_kni),
                                 SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx = mz->addr;

        if (ctx->in_use) {
            RTE_LOG(ERR, KNI, "KNI %s is in use\n", ctx->name);
            goto fail;
        }
        memset(ctx, 0, sizeof(struct rte_kni));
        if (ops)
            memcpy(&ctx->ops, ops, sizeof(struct rte_kni_ops));

        memset(&dev_info, 0, sizeof(dev_info));
        dev_info.bus = conf->addr.bus;
        dev_info.devid = conf->addr.devid;
        dev_info.function = conf->addr.function;
        dev_info.vendor_id = conf->id.vendor_id;
        dev_info.device_id = conf->id.device_id;
        dev_info.core_id = conf->core_id;
        dev_info.force_bind = conf->force_bind;
        dev_info.group_id = conf->group_id;
        dev_info.mbuf_size = conf->mbuf_size;

        rte_snprintf(ctx->name, RTE_KNI_NAMESIZE, intf_name);
        rte_snprintf(dev_info.name, RTE_KNI_NAMESIZE, intf_name);

        RTE_LOG(INFO, KNI, "pci: %02x:%02x:%02x \t %02x:%02x\n",
                dev_info.bus, dev_info.devid, dev_info.function,
                dev_info.vendor_id, dev_info.device_id);

        /* Set up seven shared memory areas: the TX, RX, ALLOC, FREE, REQ
         * and RESP FIFOs plus the req/resp SYNC area */
        /* TX RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_tx_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->tx_q = mz->addr;
        kni_fifo_init(ctx->tx_q, KNI_FIFO_COUNT_MAX);
        dev_info.tx_phys = mz->phys_addr;

        /* RX RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_rx_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->rx_q = mz->addr;
        kni_fifo_init(ctx->rx_q, KNI_FIFO_COUNT_MAX);
        dev_info.rx_phys = mz->phys_addr;

        /* ALLOC RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_alloc_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->alloc_q = mz->addr;
        kni_fifo_init(ctx->alloc_q, KNI_FIFO_COUNT_MAX);
        dev_info.alloc_phys = mz->phys_addr;

        /* FREE RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_free_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->free_q = mz->addr;
        kni_fifo_init(ctx->free_q, KNI_FIFO_COUNT_MAX);
        dev_info.free_phys = mz->phys_addr;

        /* Request RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_req_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->req_q = mz->addr;
        kni_fifo_init(ctx->req_q, KNI_FIFO_COUNT_MAX);
        dev_info.req_phys = mz->phys_addr;

        /* Response RING */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_resp_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->resp_q = mz->addr;
        kni_fifo_init(ctx->resp_q, KNI_FIFO_COUNT_MAX);
        dev_info.resp_phys = mz->phys_addr;

        /* Req/Resp sync mem area */
        rte_snprintf(obj_name, OBJNAMSIZ, "kni_sync_%s", intf_name);
        mz = kni_memzone_reserve(obj_name, KNI_FIFO_SIZE, SOCKET_ID_ANY, 0);
        KNI_MZ_CHECK(mz == NULL);
        ctx->sync_addr = mz->addr;
        dev_info.sync_va = mz->addr;
        dev_info.sync_phys = mz->phys_addr;

        /* MBUF mempool */
        rte_snprintf(mz_name, sizeof(mz_name), RTE_MEMPOOL_OBJ_NAME,
                     pktmbuf_pool->name);
        mz = rte_memzone_lookup(mz_name);
        KNI_MZ_CHECK(mz == NULL);

        /* Record the virtual and physical base addresses of the mbuf pool;
         * the kernel thread uses them to compute offsets */
        dev_info.mbuf_va = mz->addr;
        dev_info.mbuf_phys = mz->phys_addr;
        ctx->pktmbuf_pool = pktmbuf_pool;
        ctx->group_id = conf->group_id;
        ctx->mbuf_size = conf->mbuf_size;

        /* Send the request to create the KNI device */
        ret = ioctl(kni_fd, RTE_KNI_IOCTL_CREATE, &dev_info);
        KNI_MZ_CHECK(ret < 0);

        ctx->in_use = 1;

        return ctx;

    fail:
        return NULL;
    }
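The reason for passing both mbuf_va and mbuf_phys is that the FIFOs carry user-space mbuf pointers, which the kernel cannot dereference directly. The kernel receive and transmit functions shown later rebase such a pointer with `va - mbuf_va + mbuf_kva`. The sketch below models only that arithmetic with plain integers; `struct pool_view`, `va_to_kva` and `kva_to_va` are hypothetical names, and the scheme presumably relies on the pool being one contiguous region so that a single offset is valid for every mbuf.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical model of the two views of the mbuf pool: user space reports
 * mbuf_va (its own virtual base address), and the kernel derives mbuf_kva
 * from the physical base address.
 */
struct pool_view {
    uint64_t mbuf_va;   /* base of the pool in the user-space mapping */
    uint64_t mbuf_kva;  /* base of the same pool in the kernel mapping */
};

/* Rebase a user-space address inside the pool onto the kernel mapping. */
static uint64_t va_to_kva(const struct pool_view *p, uint64_t va)
{
    assert(va >= p->mbuf_va);   /* the address must not precede the pool */
    return va - p->mbuf_va + p->mbuf_kva;
}

/* Inverse direction, shown for completeness. */
static uint64_t kva_to_va(const struct pool_view *p, uint64_t kva)
{
    assert(kva >= p->mbuf_kva);
    return kva - p->mbuf_kva + p->mbuf_va;
}
```

With made-up bases 0x7f0000000000 (user) and 0xffff880000000000 (kernel), an mbuf at user address 0x7f0000001040 sits 0x1040 bytes into the pool and is therefore found at 0xffff880000001040 in the kernel.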

When the kernel KNI device receives the ioctl, it calls kni_ioctl_create to create the KNI net device:

    static int kni_ioctl_create(unsigned int ioctl_num, unsigned long ioctl_param)
    {
        int ret;
        struct rte_kni_device_info dev_info;
        struct pci_dev *pci = NULL;
        struct pci_dev *found_pci = NULL;
        struct net_device *net_dev = NULL;
        struct net_device *lad_dev = NULL;
        struct kni_dev *kni, *dev, *n;

        printk(KERN_INFO "KNI: Creating kni...\n");
        /* Check the buffer size, to avoid warning */
        if (_IOC_SIZE(ioctl_num) > sizeof(dev_info))
            return -EINVAL;

        /* Copy kni info from user space */
        ret = copy_from_user(&dev_info, (void *)ioctl_param, sizeof(dev_info));
        if (ret) {
            KNI_ERR("copy_from_user in kni_ioctl_create");
            return -EIO;
        }

        /**
         * Check if the cpu core id is valid for binding,
         * for multiple kernel thread mode.
         */
        if (multiple_kthread_on && dev_info.force_bind &&
                    !cpu_online(dev_info.core_id)) {
            KNI_ERR("cpu %u is not online\n", dev_info.core_id);
            return -EINVAL;
        }

        /* Walk the KNI device list and compare names to see whether
         * the device has already been created */
        /* Check if it has been created */
        down_read(&kni_list_lock);
        list_for_each_entry_safe(dev, n, &kni_list_head, list) {
            if (kni_check_param(dev, &dev_info) < 0) {
                up_read(&kni_list_lock);
                return -EINVAL;
            }
        }
        up_read(&kni_list_lock);

        /* Allocate the virtual net device */
        net_dev = alloc_netdev(sizeof(struct kni_dev), dev_info.name,
                               kni_net_init);
        if (net_dev == NULL) {
            KNI_ERR("error allocating device \"%s\"\n", dev_info.name);
            return -EBUSY;
        }

        kni = netdev_priv(net_dev);

        /* Save the parameters in the private area */
        kni->net_dev = net_dev;
        kni->group_id = dev_info.group_id;
        kni->core_id = dev_info.core_id;
        strncpy(kni->name, dev_info.name, RTE_KNI_NAMESIZE);

        /* Translate user space info into kernel space info */
        kni->tx_q = phys_to_virt(dev_info.tx_phys);
        kni->rx_q = phys_to_virt(dev_info.rx_phys);
        kni->alloc_q = phys_to_virt(dev_info.alloc_phys);
        kni->free_q = phys_to_virt(dev_info.free_phys);

        kni->req_q = phys_to_virt(dev_info.req_phys);
        kni->resp_q = phys_to_virt(dev_info.resp_phys);
        kni->sync_va = dev_info.sync_va;
        kni->sync_kva = phys_to_virt(dev_info.sync_phys);

        kni->mbuf_kva = phys_to_virt(dev_info.mbuf_phys);
        kni->mbuf_va = dev_info.mbuf_va;

    #ifdef RTE_KNI_VHOST
        kni->vhost_queue = NULL;
        kni->vq_status = BE_STOP;
    #endif
        kni->mbuf_size = dev_info.mbuf_size;

        KNI_PRINT("tx_phys: 0x%016llx, tx_q addr: 0x%p\n",
            (unsigned long long) dev_info.tx_phys, kni->tx_q);
        KNI_PRINT("rx_phys: 0x%016llx, rx_q addr: 0x%p\n",
            (unsigned long long) dev_info.rx_phys, kni->rx_q);
        KNI_PRINT("alloc_phys: 0x%016llx, alloc_q addr: 0x%p\n",
            (unsigned long long) dev_info.alloc_phys, kni->alloc_q);
        KNI_PRINT("free_phys: 0x%016llx, free_q addr: 0x%p\n",
            (unsigned long long) dev_info.free_phys, kni->free_q);
        KNI_PRINT("req_phys: 0x%016llx, req_q addr: 0x%p\n",
            (unsigned long long) dev_info.req_phys, kni->req_q);
        KNI_PRINT("resp_phys: 0x%016llx, resp_q addr: 0x%p\n",
            (unsigned long long) dev_info.resp_phys, kni->resp_q);
        KNI_PRINT("mbuf_phys: 0x%016llx, mbuf_kva: 0x%p\n",
            (unsigned long long) dev_info.mbuf_phys, kni->mbuf_kva);
        KNI_PRINT("mbuf_va: 0x%p\n", dev_info.mbuf_va);
        KNI_PRINT("mbuf_size: %u\n", kni->mbuf_size);

        KNI_DBG("PCI: %02x:%02x.%02x %04x:%04x\n",
            dev_info.bus, dev_info.devid, dev_info.function,
            dev_info.vendor_id, dev_info.device_id);

        pci = pci_get_device(dev_info.vendor_id, dev_info.device_id, NULL);

        /* Support Ethtool */
        while (pci) {
            KNI_PRINT("pci_bus: %02x:%02x:%02x \n",
                pci->bus->number, PCI_SLOT(pci->devfn),
                PCI_FUNC(pci->devfn));

            if ((pci->bus->number == dev_info.bus) &&
                (PCI_SLOT(pci->devfn) == dev_info.devid) &&
                (PCI_FUNC(pci->devfn) == dev_info.function)) {
                found_pci = pci;
                switch (dev_info.device_id) {
                #define RTE_PCI_DEV_ID_DECL_IGB(vend, dev) case (dev):
                #include <rte_pci_dev_ids.h>
                    ret = igb_kni_probe(found_pci, &lad_dev);
                    break;
                #define RTE_PCI_DEV_ID_DECL_IXGBE(vend, dev) \
                                case (dev):
                #include <rte_pci_dev_ids.h>
                    ret = ixgbe_kni_probe(found_pci, &lad_dev);
                    break;
                default:
                    ret = -1;
                    break;
                }

                KNI_DBG("PCI found: pci=0x%p, lad_dev=0x%p\n",
                    pci, lad_dev);
                if (ret == 0) {
                    kni->lad_dev = lad_dev;
                    kni_set_ethtool_ops(kni->net_dev);
                } else {
                    KNI_ERR("Device not supported by ethtool");
                    kni->lad_dev = NULL;
                }

                kni->pci_dev = found_pci;
                kni->device_id = dev_info.device_id;
                break;
            }
            pci = pci_get_device(dev_info.vendor_id,
                                 dev_info.device_id, pci);
        }
        if (pci)
            pci_dev_put(pci);

        /* Register the virtual net device */
        ret = register_netdev(net_dev);
        if (ret) {
            KNI_ERR("error %i registering device \"%s\"\n",
                ret, dev_info.name);
            kni_dev_remove(kni);
            return -ENODEV;
        }

    #ifdef RTE_KNI_VHOST
        kni_vhost_init(kni);
    #endif

        /**
         * Create a new kernel thread for multiple mode, set its core affinity,
         * and finally wake it up.
         */
        if (multiple_kthread_on) {
            /* Multiple-thread mode: one kernel thread per KNI device */
            kni->pthread = kthread_create(kni_thread_multiple,
                                          (void *)kni,
                                          "kni_%s", kni->name);
            if (IS_ERR(kni->pthread)) {
                kni_dev_remove(kni);
                return -ECANCELED;
            }
            /* Bind the kernel thread to the configured lcore */
            if (dev_info.force_bind)
                kthread_bind(kni->pthread, kni->core_id);
            /* Wake up the RX thread */
            wake_up_process(kni->pthread);
        }

        down_write(&kni_list_lock);
        list_add(&kni->list, &kni_list_head);
        up_write(&kni_list_lock);

        return 0;
    }

Back to packet reception and transmission in the main loop of each lcore:

    if (flag == LCORE_RX) {
        RTE_LOG(INFO, APP, "Lcore %u is reading from port %d\n",
                kni_port_params_array[i]->lcore_rx,
                kni_port_params_array[i]->port_id);
        while (1) {
            f_stop = rte_atomic32_read(&kni_stop);
            if (f_stop)
                break;
            kni_ingress(kni_port_params_array[i]);
        }
    } else if (flag == LCORE_TX) {
        RTE_LOG(INFO, APP, "Lcore %u is writing to port %d\n",
                kni_port_params_array[i]->lcore_tx,
                kni_port_params_array[i]->port_id);
        while (1) {
            f_stop = rte_atomic32_read(&kni_stop);
            if (f_stop)
                break;
            kni_egress(kni_port_params_array[i]);
        }
    }

The RX thread receives packets from the PMD and passes them to KNI, then checks whether there are request messages that need handling.

    static void kni_ingress(struct kni_port_params *p)
    {
        uint8_t i, port_id;
        unsigned nb_rx, num;
        uint32_t nb_kni;
        struct rte_mbuf *pkts_burst[PKT_BURST_SZ];

        if (p == NULL)
            return;

        nb_kni = p->nb_kni;
        port_id = p->port_id;
        for (i = 0; i < nb_kni; i++) {
            /* Receive a burst of packets from the PMD */
            /* Burst rx from eth */
            nb_rx = rte_eth_rx_burst(port_id, 0, pkts_burst, PKT_BURST_SZ);
            if (unlikely(nb_rx > PKT_BURST_SZ)) {
                RTE_LOG(ERR, APP, "Error receiving from eth\n");
                return;
            }

            /* Put all received mbufs into rx_q */
            /* Burst tx to kni */
            num = rte_kni_tx_burst(p->kni[i], pkts_burst, nb_rx);
            kni_stats[port_id].rx_packets += num;

            /* Handle requests from the kernel, such as port state changes */
            rte_kni_handle_request(p->kni[i]);
            if (unlikely(num < nb_rx)) {
                /* Free mbufs not tx to kni interface */
                kni_burst_free_mbufs(&pkts_burst[num], nb_rx - num);
                kni_stats[port_id].rx_dropped += nb_rx - num;
            }
        }
    }

    unsigned
    rte_kni_tx_burst(struct rte_kni *kni, struct rte_mbuf **mbufs, unsigned num)
    {
        /* Put the mbufs into rx_q (user-space TX is the kernel's RX) */
        unsigned ret = kni_fifo_put(kni->rx_q, (void **)mbufs, num);

        /* Get mbufs from free_q and then free them */
        kni_free_mbufs(kni);

        return ret;
    }

    static void kni_free_mbufs(struct rte_kni *kni)
    {
        int i, ret;
        struct rte_mbuf *pkts[MAX_MBUF_BURST_NUM];

        /* Take mbufs out of free_q and release them */
        ret = kni_fifo_get(kni->free_q, (void **)pkts, MAX_MBUF_BURST_NUM);
        if (likely(ret > 0)) {
            for (i = 0; i < ret; i++)
                rte_pktmbuf_free(pkts[i]);
        }
    }

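Because each FIFO has exactly one producer and one consumer, the FIFO uses no mutual exclusion or synchronization mechanism: only the producer updates write, and only the consumer updates read.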

    /**
     * Adds num elements into the fifo. Return the number actually written
     */
    static inline unsigned
    kni_fifo_put(struct rte_kni_fifo *fifo, void **data, unsigned num)
    {
        unsigned i = 0;
        /* Copy write and read into local variables */
        unsigned fifo_write = fifo->write;
        unsigned fifo_read = fifo->read;
        unsigned new_write = fifo_write;

        for (i = 0; i < num; i++) {
            new_write = (new_write + 1) & (fifo->len - 1);

            /* The FIFO is full */
            if (new_write == fifo_read)
                break;
            /* Store the element */
            fifo->buffer[fifo_write] = data[i];
            fifo_write = new_write;
        }
        /* Publish the new write index */
        fifo->write = fifo_write;
        return i;
    }

    /**
     * Get up to num elements from the fifo. Return the number actually read
     */
    static inline unsigned
    kni_fifo_get(struct rte_kni_fifo *fifo, void **data, unsigned num)
    {
        unsigned i = 0;
        /* Copy write and read into local variables */
        unsigned new_read = fifo->read;
        unsigned fifo_write = fifo->write;

        for (i = 0; i < num; i++) {
            /* Nothing left to read */
            if (new_read == fifo_write)
                break;

            /* Read the element */
            data[i] = fifo->buffer[new_read];
            new_read = (new_read + 1) & (fifo->len - 1);
        }
        /* Publish the new read index */
        fifo->read = new_read;
        return i;
    }
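Two properties of this FIFO are easy to miss when reading the code: the length must be a power of two so that `len - 1` works as a wrap-around mask, and one slot is always left empty so that "full" can be told apart from "empty". The stand-alone sketch below reproduces the same index logic on a tiny ring to make both visible. `struct ring`, `RING_LEN` and the `ring_*` functions are hypothetical names, and the sketch adds no memory barriers, so it illustrates the indexing only and is not a ready-made cross-core queue.

```c
#include <assert.h>

#define RING_LEN 4  /* must be a power of two so that (len - 1) is a mask */

/* Hypothetical stand-in for struct rte_kni_fifo with a fixed-size buffer. */
struct ring {
    volatile unsigned write;  /* next slot to fill; advanced only by the producer */
    volatile unsigned read;   /* next slot to drain; advanced only by the consumer */
    unsigned len;
    void *buffer[RING_LEN];
};

static void ring_init(struct ring *r)
{
    assert((RING_LEN & (RING_LEN - 1)) == 0);  /* power-of-two check */
    r->write = 0;
    r->read = 0;
    r->len = RING_LEN;
}

/* Producer side: returns how many of the num elements were stored. */
static unsigned ring_put(struct ring *r, void **data, unsigned num)
{
    unsigned i;
    unsigned w = r->write;
    unsigned rd = r->read;
    unsigned next = w;

    for (i = 0; i < num; i++) {
        next = (next + 1) & (r->len - 1);
        if (next == rd)          /* full: one slot always stays empty */
            break;
        r->buffer[w] = data[i];
        w = next;
    }
    r->write = w;                /* publish the new write index */
    return i;
}

/* Consumer side: returns how many elements were copied into data. */
static unsigned ring_get(struct ring *r, void **data, unsigned num)
{
    unsigned i;
    unsigned rd = r->read;
    unsigned w = r->write;

    for (i = 0; i < num; i++) {
        if (rd == w)             /* empty */
            break;
        data[i] = r->buffer[rd];
        rd = (rd + 1) & (r->len - 1);
    }
    r->read = rd;                /* publish the new read index */
    return i;
}
```

With `RING_LEN` set to 4, an attempt to put five elements into an empty ring stores only three, which is the usable capacity of `len - 1`.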

The TX thread

    /**
     * Interface to dequeue mbufs from tx_q and burst tx
     */
    static void kni_egress(struct kni_port_params *p)
    {
        uint8_t i, port_id;
        unsigned nb_tx, num;
        uint32_t nb_kni;
        struct rte_mbuf *pkts_burst[PKT_BURST_SZ];

        if (p == NULL)
            return;

        nb_kni = p->nb_kni;
        port_id = p->port_id;
        for (i = 0; i < nb_kni; i++) {
            /* Receive a burst of packets from the KNI device */
            /* Burst rx from kni */
            num = rte_kni_rx_burst(p->kni[i], pkts_burst, PKT_BURST_SZ);
            if (unlikely(num > PKT_BURST_SZ)) {
                RTE_LOG(ERR, APP, "Error receiving from KNI\n");
                return;
            }

            /* Hand the packets to the PMD for transmission */
            /* Burst tx to eth */
            nb_tx = rte_eth_tx_burst(port_id, 0, pkts_burst, (uint16_t)num);
            kni_stats[port_id].tx_packets += nb_tx;
            if (unlikely(nb_tx < num)) {
                /* Free mbufs not tx to NIC */
                kni_burst_free_mbufs(&pkts_burst[nb_tx], num - nb_tx);
                kni_stats[port_id].tx_dropped += num - nb_tx;
            }
        }
    }

    unsigned
    rte_kni_rx_burst(struct rte_kni *kni, struct rte_mbuf **mbufs, unsigned num)
    {
        /* Take mbufs out of tx_q */
        unsigned ret = kni_fifo_get(kni->tx_q, (void **)mbufs, num);

        /* Allocate mbufs and then put them into alloc_q */
        kni_allocate_mbufs(kni);

        return ret;
    }

    static void kni_allocate_mbufs(struct rte_kni *kni)
    {
        int i, ret;
        struct rte_mbuf *pkts[MAX_MBUF_BURST_NUM];

        /* Check if pktmbuf pool has been configured */
        if (kni->pktmbuf_pool == NULL) {
            RTE_LOG(ERR, KNI, "No valid mempool for allocating mbufs\n");
            return;
        }

        /* Allocate up to MAX_MBUF_BURST_NUM mbufs per call */
        for (i = 0; i < MAX_MBUF_BURST_NUM; i++) {
            pkts[i] = rte_pktmbuf_alloc(kni->pktmbuf_pool);
            if (unlikely(pkts[i] == NULL)) {
                /* Out of memory */
                RTE_LOG(ERR, KNI, "Out of memory\n");
                break;
            }
        }

        /* No pkt mbuf allocated */
        if (i <= 0)
            return;

        /* Put the mbufs into alloc_q */
        ret = kni_fifo_put(kni->alloc_q, (void **)pkts, i);

        /* The queue was full: release the mbufs that were not enqueued */
        /* Check if any mbufs not put into alloc_q, and then free them */
        if (ret >= 0 && ret < i && ret < MAX_MBUF_BURST_NUM) {
            int j;

            for (j = ret; j < i; j++)
                rte_pktmbuf_free(pkts[j]);
        }
    }

Next, let's look at the receive and transmit functions of the KNI device in the kernel.

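For the receive side of the KNI device: in single-thread mode, the kni_thread_single thread is started when the device is opened; in multiple-thread mode, a kni_thread_multiple thread is started when each KNI device is created.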

    static int kni_thread_single(void *unused)
    {
        int j;
        struct kni_dev *dev, *n;

        while (!kthread_should_stop()) {
            down_read(&kni_list_lock);
            for (j = 0; j < KNI_RX_LOOP_NUM; j++) {
                /* Single-thread mode: walk all KNI devices */
                list_for_each_entry_safe(dev, n, &kni_list_head, list) {
    #ifdef RTE_KNI_VHOST
                    kni_chk_vhost_rx(dev);
    #else
                    /* Receive packets from rx_q */
                    kni_net_rx(dev);
    #endif
                    /* Poll for user space's responses to requests */
                    kni_net_poll_resp(dev);
                }
            }
            up_read(&kni_list_lock);
            /* reschedule out for a while */
            schedule_timeout_interruptible(usecs_to_jiffies( \
                    KNI_KTHREAD_RESCHEDULE_INTERVAL));
        }

        return 0;
    }

The receive function. When lo (loopback) mode is not configured, it is kni_net_rx_normal:

    static kni_net_rx_t kni_net_rx_func = kni_net_rx_normal;

    /* rx interface */
    void kni_net_rx(struct kni_dev *kni)
    {
        /**
         * It doesn't need to check if it is NULL pointer,
         * as it has a default value
         */
        (*kni_net_rx_func)(kni);
    }

    /*
     * RX: normal working mode
     */
    static void kni_net_rx_normal(struct kni_dev *kni)
    {
        unsigned ret;
        uint32_t len;
        unsigned i, num, num_rq, num_fq;
        struct rte_kni_mbuf *kva;
        struct rte_kni_mbuf *va[MBUF_BURST_SZ];
        void * data_kva;

        struct sk_buff *skb;
        struct net_device *dev = kni->net_dev;

        /* The burst size is the minimum of the entries in rx_q and the
         * free slots in free_q, capped at MBUF_BURST_SZ */
        /* Get the number of entries in rx_q */
        num_rq = kni_fifo_count(kni->rx_q);

        /* Get the number of free entries in free_q */
        num_fq = kni_fifo_free_count(kni->free_q);

        /* Calculate the number of entries to dequeue in rx_q */
        num = min(num_rq, num_fq);
        num = min(num, (unsigned)MBUF_BURST_SZ);

        /* Return if no entry in rx_q and no free entry in free_q */
        if (num == 0)
            return;

        /* Burst dequeue from rx_q */
        ret = kni_fifo_get(kni->rx_q, (void **)va, num);
        if (ret == 0)
            return; /* Failing should not happen */

        /* Convert each mbuf into an skb */
        /* Transfer received packets to netif */
        for (i = 0; i < num; i++) {
            /* mbuf kva */
            kva = (void *)va[i] - kni->mbuf_va + kni->mbuf_kva;
            len = kva->data_len;
            /* data kva */
            data_kva = kva->data - kni->mbuf_va + kni->mbuf_kva;

            skb = dev_alloc_skb(len + 2);
            if (!skb) {
                KNI_ERR("Out of mem, dropping pkts\n");
                /* Update statistics */
                kni->stats.rx_dropped++;
            } else {
                /* Align IP on 16B boundary */
                skb_reserve(skb, 2);
                memcpy(skb_put(skb, len), data_kva, len);
                skb->dev = dev;
                skb->protocol = eth_type_trans(skb, dev);
                skb->ip_summed = CHECKSUM_UNNECESSARY;

                /* Pass the skb up to the protocol stack */
                /* Call netif interface */
                netif_receive_skb(skb);

                /* Update statistics */
                kni->stats.rx_bytes += len;
                kni->stats.rx_packets++;
            }
        }

        /* Hand the mbufs back so that user space can free them */
        /* Burst enqueue mbufs into free_q */
        ret = kni_fifo_put(kni->free_q, (void **)va, num);
        if (ret != num)
            /* Failing should not happen */
            KNI_ERR("Fail to enqueue entries into free_q\n");
    }
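The burst-size calculation at the top of kni_net_rx_normal guarantees that every mbuf dequeued from rx_q has a slot waiting in free_q, so the final enqueue should not fail. Here is a small sketch of that calculation under stated assumptions: `struct fifo_idx`, `fifo_count`, `fifo_free_count`, `rx_burst_size` and the value of `BURST_SZ` are illustrative stand-ins, and the two count formulas are the usual ones for a power-of-two ring that keeps one slot empty.

```c
#include <assert.h>

#define BURST_SZ 32u  /* assumption: stand-in for MBUF_BURST_SZ */

/* Only the indices matter for counting; len must be a power of two. */
struct fifo_idx {
    unsigned write;
    unsigned read;
    unsigned len;
};

/* Number of elements currently stored. */
static unsigned fifo_count(const struct fifo_idx *f)
{
    return (f->len + f->write - f->read) & (f->len - 1);
}

/* Number of elements that can still be stored (one slot stays empty). */
static unsigned fifo_free_count(const struct fifo_idx *f)
{
    return (f->read - f->write - 1) & (f->len - 1);
}

/* How many mbufs one RX pass may take from rx_q. */
static unsigned rx_burst_size(const struct fifo_idx *rx_q,
                              const struct fifo_idx *free_q)
{
    unsigned num, room;

    assert((rx_q->len & (rx_q->len - 1)) == 0);
    assert((free_q->len & (free_q->len - 1)) == 0);

    num = fifo_count(rx_q);
    room = fifo_free_count(free_q);
    if (room < num)
        num = room;          /* never take more than free_q can absorb */
    if (num > BURST_SZ)
        num = BURST_SZ;      /* cap at the local array size */
    return num;
}
```

If rx_q holds 40 mbufs but free_q has room for only 3, the pass handles 3 packets and leaves the rest in rx_q for a later pass.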

The KNI transmit function

    static int kni_net_tx(struct sk_buff *skb, struct net_device *dev)
    {
        int len = 0;
        unsigned ret;
        struct kni_dev *kni = netdev_priv(dev);
        struct rte_kni_mbuf *pkt_kva = NULL;
        struct rte_kni_mbuf *pkt_va = NULL;

        dev->trans_start = jiffies; /* save the timestamp */

        /* Check if the length of skb is less than mbuf size */
        if (skb->len > kni->mbuf_size)
            goto drop;

        /**
         * Check if it has at least one free entry in tx_q and
         * one entry in alloc_q.
         */
        if (kni_fifo_free_count(kni->tx_q) == 0 ||
                kni_fifo_count(kni->alloc_q) == 0) {
            /**
             * If no free entry in tx_q or no entry in alloc_q,
             * drops skb and goes out.
             */
            goto drop;
        }

        /* Convert the skb into an mbuf */
        /* dequeue a mbuf from alloc_q */
        ret = kni_fifo_get(kni->alloc_q, (void **)&pkt_va, 1);
        if (likely(ret == 1)) {
            void *data_kva;

            pkt_kva = (void *)pkt_va - kni->mbuf_va + kni->mbuf_kva;
            data_kva = pkt_kva->data - kni->mbuf_va + kni->mbuf_kva;

            len = skb->len;
            memcpy(data_kva, skb->data, len);
            if (unlikely(len < ETH_ZLEN)) {
                memset(data_kva + len, 0, ETH_ZLEN - len);
                len = ETH_ZLEN;
            }
            pkt_kva->pkt_len = len;
            pkt_kva->data_len = len;

            /* enqueue mbuf into tx_q */
            ret = kni_fifo_put(kni->tx_q, (void **)&pkt_va, 1);
            if (unlikely(ret != 1)) {
                /* Failing should not happen */
                KNI_ERR("Fail to enqueue mbuf into tx_q\n");
                goto drop;
            }
        } else {
            /* Failing should not happen */
            KNI_ERR("Fail to dequeue mbuf from alloc_q\n");
            goto drop;
        }

        /* Free skb and update statistics */
        dev_kfree_skb(skb);
        kni->stats.tx_bytes += len;
        kni->stats.tx_packets++;

        return NETDEV_TX_OK;

    drop:
        /* Free skb and update statistics */
        dev_kfree_skb(skb);
        kni->stats.tx_dropped++;

        return NETDEV_TX_OK;
    }
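One detail in kni_net_tx is the handling of short frames: an skb shorter than ETH_ZLEN is zero-padded in the mbuf, and the padded length is what gets stored in pkt_len and data_len. The following user-space sketch isolates that step. `copy_and_pad` and `MIN_FRAME_LEN` are hypothetical names; the value 60 is the ETH_ZLEN constant from the Linux headers (minimum Ethernet frame length without the FCS).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MIN_FRAME_LEN 60  /* ETH_ZLEN: minimum frame length without FCS */

/*
 * Copy a frame of len bytes from src to dst and zero-pad it up to
 * MIN_FRAME_LEN if it is shorter. dst must have room for at least
 * max(len, MIN_FRAME_LEN) bytes.
 * Returns the length that would be stored in pkt_len/data_len.
 */
static size_t copy_and_pad(unsigned char *dst, const unsigned char *src,
                           size_t len)
{
    assert(dst != NULL && src != NULL);

    memcpy(dst, src, len);
    if (len < MIN_FRAME_LEN) {
        memset(dst + len, 0, MIN_FRAME_LEN - len);
        len = MIN_FRAME_LEN;
    }
    return len;
}
```

A 42-byte frame, for instance, comes out as 60 bytes with 18 trailing zero bytes, while a 100-byte frame is copied unchanged.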