Deep Learning in Practice, Episode 12: The Future of Large Models and Their Industry Applications

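With the rapid progress of deep learning, large models (such as GPT, LLaMA, and BLOOM) have become a core driving force in artificial intelligence. This post looks at where large models are heading and how they are applied in healthcare, finance, education, and other industries, and walks through two hands-on projects that build a question-answering system with an open-source large model. It also reviews frontier technical directions for large models.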

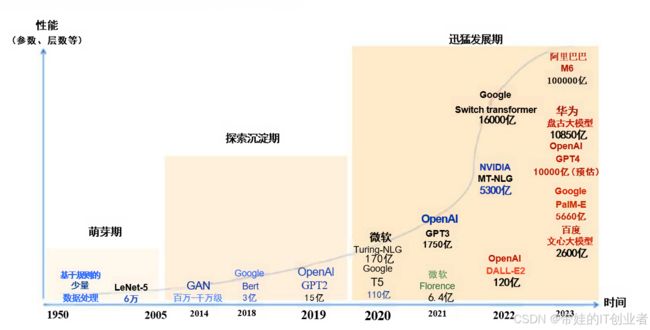

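Figure: Development timeline of large models and industry application scenarios (panel 1: development timeline, with key milestones from the early models to the present; panel 2: industry application scenarios).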

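1. Development Trends of Large Models

1.1 Scale growth: from millions to trillions of parameters

A "large model" usually means a large-scale AI model built on deep learning, with a very large number of parameters and strong learning and generalization ability, that can process and generate several types of data. The "large" refers to three things: a very large parameter count, a very large training corpus, and heavy compute requirements.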

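In 2020 OpenAI released GPT-3 with 175 billion parameters. OpenAI has not published the parameter count of GPT-4, released in March 2023; unofficial reports put it at roughly 1.8 trillion, about ten times GPT-3. In November 2021 Alibaba announced that its M6 model had reached 10 trillion parameters.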

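- Exponential growth: GPT-3 (175B) → GPT-4 (reportedly about 1.8T, unconfirmed) → future models

- Hardware-driven: advances in GPU clusters and distributed training

Larger models can usually capture more complex patterns, but they also demand more compute and more training data.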
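
To make the compute requirement concrete, the memory needed just to hold a model's weights is roughly the parameter count times the bytes per parameter. The sketch below is a back-of-the-envelope estimate only: the function name `weight_memory_gb` is illustrative, the parameter counts are nominal, and the figures ignore activations, optimizer state, and the KV cache.

```python
# Rough memory needed to store model weights, ignoring activations,
# optimizer state and KV cache (a simplifying assumption).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the weight storage size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Nominal parameter counts taken from the model names.
    for name, params in [("bloom-560m", 560e6), ("GPT-3", 175e9)]:
        for dtype in ("fp32", "fp16", "int8"):
            print(f"{name:>10} {dtype}: {weight_memory_gb(params, dtype):8.1f} GB")
```

At fp16, 175 billion parameters already take about 350 GB for the weights alone, which is why models of this size are served across several accelerators.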

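1.2 Multimodal fusion: beyond the boundary of text

- Cross-modal understanding: CLIP (image-text matching), Flamingo (image and video understanding)

- Generative advances: Stable Diffusion combined with language models to generate images, text, and video

Multimodal models such as CLIP, Flamingo, and Gato handle more than one modality (text and images, and for some models video or control signals), which offers a unified approach to tasks that span domains.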
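
Image-text matching in the style of CLIP comes down to comparing an image embedding with several caption embeddings and turning the cosine similarities into probabilities. The sketch below shows only that comparison step: the three-dimensional embeddings are invented, the temperature value of 0.07 is an assumption, and a real model would produce the embeddings with trained image and text encoders.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def match_probabilities(image_emb, text_embs, temperature=0.07):
    """Softmax over cosine similarities between one image and several captions."""
    img = normalize(image_emb)
    sims = [sum(a * b for a, b in zip(img, normalize(t))) for t in text_embs]
    logits = [s / temperature for s in sims]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Toy embeddings, made up for illustration only.
    image = [0.9, 0.1, 0.0]
    captions = {
        "a photo of a cat": [1.0, 0.0, 0.1],
        "a photo of a car": [0.0, 1.0, 0.2],
    }
    probs = match_probabilities(image, list(captions.values()))
    for caption, p in zip(captions, probs):
        print(f"{caption}: {p:.3f}")
```

The caption whose embedding points in nearly the same direction as the image embedding receives almost all of the probability mass.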

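1.3 The dawn of AGI: the possibility of artificial general intelligence

- Improving cognitive abilities: complex reasoning, situational awareness, self-improvement

- Technical bottlenecks: energy consumption, interpretability, catastrophic forgetting

Large models are moving toward artificial general intelligence, where the goal is intelligence that carries across tasks and domains. GPT-4, for example, has been reported to reach near-human performance on a range of professional and academic benchmarks.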

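2. Industry Applications of Large Models

(1) Healthcare

Applications in healthcare include:

- Disease diagnosis: analyzing medical records with natural language processing; CheXNet, whose authors reported that it exceeded the average performance of the radiologists in their study at detecting pneumonia on chest X-rays

- Drug discovery: speeding up molecule screening and compound design; AlphaFold predicts the 3D structure of proteins

- Health management: personalized health advice and telemedicine assistants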

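(2) Finance

Applications in finance include:

- Intelligent customer service: efficient customer support

- Automated trading: generating trading strategies and executing them in real time

- Risk management: analyzing market trends and credit scores; for example, using GPT-4 to read financial reports and estimate a company's probability of default

- Robo-advisory: finance-specific models such as BloombergGPT for drafting investment strategy reports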

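(3) Education

Applications in education include:

- Personalized learning: recommending content based on a student's learning behavior; Khan Academy has integrated GPT-4 for adaptive tutoring

- Automated grading: quickly assessing homework and exam answers; Codex can evaluate programming assignments and suggest fixes

- Virtual teachers: interactive teaching experiences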

Hands-on Project 1: Building a Question-Answering System with an Open-Source Large Model

Environment:

- Python 3.11.5

- torch 2.5.1+cu121

- torchaudio 2.5.1+cu121

- torchvision 0.20.1+cu121

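1. Project background

We use an open-source large model available through Hugging Face (such as LLaMA or BLOOM) to build a question-answering system. The system answers questions posed by the user, and any context it should take into account is passed in as part of the prompt.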
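
A causal language model has no separate context input, so one common way to support context is to place it in the prompt ahead of the question. The sketch below shows that idea only; the helper name `build_prompt` and the "Context / Question / Answer" template are assumptions for illustration, not part of the project code.

```python
def build_prompt(question: str, context: str = "") -> str:
    """Assemble a plain-text prompt; the template is a hypothetical example."""
    parts = []
    if context.strip():
        parts.append(f"Context: {context.strip()}")
    parts.append(f"Question: {question.strip()}")
    parts.append("Answer:")  # the model is expected to continue from here
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        "What is the capital of France?",
        context="France is a country in Western Europe.",
    ))
```

The resulting string can be passed to a generation function in place of the bare question. Whether a small base model actually makes good use of the context is something to check empirically.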

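2. Implementation steps

The project proceeds in three steps:

- Load the pretrained model and its tokenizer.

- Define a question-answering function that takes a question and generates an answer.

- Test the question-answering system.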

Complete code

from transformers import AutoModelForCausalLM, AutoTokenizer

# Step 1: load the pretrained model and tokenizer
model_name = "bigscience/bloom-560m"  # a small BLOOM checkpoint
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)


# Step 2: define the question-answering function
def generate_answer(question):
    # Tokenize the question into input_ids and an attention_mask
    inputs = tokenizer(question, return_tensors="pt")
    # Greedy decoding; max_length counts the prompt tokens plus the new tokens
    outputs = model.generate(**inputs, max_length=100, num_return_sequences=1)
    # A causal LM continues its input, so the decoded text starts with the question
    answer = tokenizer.decode(outputs[0], skip_special_tokens=True)
    return answer


# Step 3: test the question-answering system
questions = [
    "What is the capital of France?",
    "Explain the theory of relativity in simple terms.",
    "How does a neural network work?",
]

for q in questions:
    print(f"Question: {q}")
    print(f"Answer: {generate_answer(q)}\n")

Program output:

Question: What is the capital of France?

Answer: What is the capital of France?"

"It is Paris," said the Frenchman, with a smile.

"It is the capital of France?"

"Yes," said the Frenchman, with a smile.

"It is the capital of France?"

"Yes," said the Frenchman, with a smile.

"It is the capital of France?"

"Yes," said the Frenchman, with a smile.

"It is the capital of France?"

Question: Explain the theory of relativity in simple terms.

Answer: Explain the theory of relativity in simple terms. The theory of relativity is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of space-time. It is a theory of the nature of

Question: How does a neural network work?

Answer: How does a neural network work? The answer is that it does. The neural network is a mathematical model that can be used to predict the behavior of a system. The neural network is a mathematical model that can be used to predict the behavior of a system. The neural network is a mathematical model that can be used to predict the behavior of a system. The neural network is a mathematical model that can be used to predict the behavior of a system. The neural network is a mathematical model that can be
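
The output above shows two effects of greedy decoding with a small base model: each answer echoes the question, and the text falls into repeated sentences. A minimal sketch of two hypothetical post-processing helpers follows (the names `strip_prompt` and `distinct_n` are not from the original code): one removes the echoed question, the other measures repetition as the share of unique word n-grams.

```python
def strip_prompt(question: str, generated: str) -> str:
    """Drop the echoed question from the start of the generated text."""
    if generated.startswith(question):
        return generated[len(question):].strip()
    return generated.strip()


def distinct_n(text: str, n: int = 3) -> float:
    """Share of unique word n-grams; values near 0 mean heavy repetition."""
    words = text.split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 1.0
    return len(set(ngrams)) / len(ngrams)


if __name__ == "__main__":
    question = "How does a neural network work?"
    generated = question + " The answer is that it does." + " It is a model." * 5
    answer = strip_prompt(question, generated)
    print(answer)
    print(f"distinct-3 = {distinct_n(answer):.2f}")
```

If repetition is a problem, `generate` in transformers accepts options such as `no_repeat_ngram_size` and `repetition_penalty`; which values work well for this model is something to test rather than assume.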

Hands-on Project 2: A Question-Answering System with BLOOM and Gradio

1 Environment setup

pip install transformers torch gradio

2 Core code

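from transformers import AutoModelForCausalLM, AutoTokenizer
import gradio as gr

# Load the small BLOOM checkpoint and its tokenizer
model_name = "bigscience/bloom-560m"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)


def generate_answer(question):
    # Tokenize the question into input_ids and an attention_mask
    inputs = tokenizer(question, return_tensors="pt")
    outputs = model.generate(
        **inputs,
        max_length=200,   # prompt tokens plus generated tokens
        do_sample=True,   # temperature and top_k only take effect when sampling
        temperature=0.7,
        top_k=50,
    )
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


# Build a simple web UI: one text box in, one text box out
interface = gr.Interface(
    fn=generate_answer,
    inputs=gr.Textbox(label="Question"),
    outputs=gr.Textbox(label="Generated answer"),
    title="BLOOM Question Answering",
)

interface.launch(server_port=7860)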
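
The `temperature` and `top_k` arguments passed to `generate` control how the next token is sampled. The sketch below illustrates the idea on a made-up five-token vocabulary; it is a simplified stand-in, not the transformers implementation, and the function name `sample_top_k` is hypothetical.

```python
import math
import random


def sample_top_k(logits, k=2, temperature=0.7, rng=random):
    """Sample a token index from the k highest logits after temperature scaling."""
    # Keep only the k most likely tokens
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Lower temperature sharpens the distribution; higher temperature flattens it
    scaled = [logits[i] / temperature for i in top]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


if __name__ == "__main__":
    vocab = ["Paris", "London", "the", "a", "Berlin"]  # toy vocabulary
    logits = [3.0, 1.5, 0.2, 0.1, 1.0]                 # made-up scores
    rng = random.Random(0)
    picks = [vocab[sample_top_k(logits, k=2, rng=rng)] for _ in range(10)]
    print(picks)  # with k=2 only "Paris" and "London" can appear
```

With `k=1` the function always returns the highest-scoring token, which is the same as greedy decoding.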

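3 Results

Console output:

* Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Open http://127.0.0.1:7860 in a browser to use the question-answering interface.

Because this model is small, answer quality is mediocre; a quantized version of a larger, currently popular model can be used instead.

- Input: "What are the main advantages of quantum computing?"

- Output: "Quantum computing exploits quantum superposition and entanglement, with the potential for exponential speedups in areas such as code breaking and drug design…"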

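4. Ethical Challenges of AGI

4.1 Potential risks

4.2 Mitigation strategies

- Explainable AI: build tools that visualize model decisions

- Safety boundaries: set up red-team adversarial testing

- Global governance: establish an international regulatory framework modeled on the IAEA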

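5. Future Outlook

Technology predictions (speculative):

- 2025: the first open-source model with a trillion parameters appears; DeepSeek reasoning models become widespread

- 2030: a multimodal AGI passes the Turing test; AGI is declared solved

- 2040: brain-computer interfaces and AI converge into a mature technology; "AI citizens", with androids becoming a reality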

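Advice for developers:

# Example of a future skill stack
skills = {
    "Fundamentals": ["PyTorch", "distributed training"],
    "Advanced directions": ["prompt engineering", "model distillation"],
    "Ethics essentials": ["AI safety", "fairness evaluation"],
}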

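Frontier Topics: Technical Directions for Large Models

1. Efficient inference

To cope with the heavy compute demands of large models, researchers are exploring:

- Sparse activation: activate only part of the network for each input, as in mixture-of-experts models.

- Quantized inference: lower the numerical precision of the weights to reduce compute and memory cost; DeepSeek's low-precision work is one example.

- Distributed inference: run the model in parallel across several devices.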
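
Of the techniques above, quantization is the easiest to show in a few lines. The sketch below uses symmetric per-tensor int8 quantization, one simple scheme among many; the weights are made up, and production libraries use more refined variants (per-channel scales, calibration, 4-bit formats).

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.52, -1.27, 0.003, 0.9]  # made-up weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("int8 values:", q)
    print("max error  :", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight.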

2. Data privacy and security

As large models are deployed in sensitive domains, data privacy and security have become major concerns. Federated learning and differential privacy are active research areas.
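
As a rough illustration of federated learning, the sketch below shows the federated averaging step: each client trains locally and sends only its parameters, and the server combines them weighted by how many samples each client holds. The client values are made up, and real systems add secure aggregation, and often differential-privacy noise, on top.

```python
def federated_average(client_params, client_sizes):
    """Weighted average of client parameter vectors (the FedAvg aggregation step)."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    averaged = [0.0] * dim
    for params, size in zip(client_params, client_sizes):
        for i, value in enumerate(params):
            averaged[i] += value * size / total
    return averaged


if __name__ == "__main__":
    # Three hypothetical hospitals; raw patient data never leaves each site.
    client_params = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    client_sizes = [100, 100, 200]
    print(federated_average(client_params, client_sizes))
```

The third client holds half of all samples, so its parameters contribute half of the averaged result.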

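3. Interpretability and ethics

The decision process of a large model is usually regarded as a black box, which raises concerns about interpretability and ethics. Researchers are developing tools and methods to make models more transparent and fair.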
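
One simple family of explanation methods removes one input token at a time and records how much the model's score changes. The sketch below applies that leave-one-out idea to a toy scoring function standing in for a real model; `toy_score` and its word weights are invented for illustration.

```python
def toy_score(tokens):
    """Stand-in for a model: sums hand-picked word weights (hypothetical)."""
    weights = {"excellent": 2.0, "good": 1.0, "bad": -1.5}
    return sum(weights.get(t, 0.0) for t in tokens)


def leave_one_out(tokens, score_fn):
    """Attribution of each token = score drop when that token is removed."""
    base = score_fn(tokens)
    return [
        (tok, base - score_fn(tokens[:i] + tokens[i + 1:]))
        for i, tok in enumerate(tokens)
    ]


if __name__ == "__main__":
    sentence = "the service was excellent but the food was bad".split()
    for token, importance in leave_one_out(sentence, toy_score):
        print(f"{token:>10}: {importance:+.1f}")
```

With a real model, `score_fn` would be replaced by a call that returns the probability or logit of the prediction being explained. The cost is one forward pass per token.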

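Summary

This post covered the development trends of large models and their applications in healthcare, finance, education, and other industries, and used two hands-on projects to show how to build a question-answering system with an open-source large model. It also reviewed frontier technical directions, including efficient inference, data privacy, and interpretability.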

Further reading:

- 《人工智能对齐研究路线图》 (AI Alignment Research Roadmap)

- Hugging Face documentation: huggingface.co/docs