Learning to Build Neural Networks with PyTorch

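1. Using the Code menu in PyCharm

1.1 Overriding parent-class methods

- Action: right-click at the place where the method should be overridden and choose Code --> Generate > Override Methods.
- Purpose: automatically generates the stub for a method that overrides one from a parent class or interface.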
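As a rough illustration of what an overriding method looks like once it has been generated and filled in, here is a minimal sketch in plain Python. The class names `Base` and `Child` and the method `describe` are made up for this example, and the stub PyCharm actually generates may differ in detail.

```python
class Base:
    def describe(self) -> str:
        return "base"


class Child(Base):
    # An overriding method: same name and signature as the parent's,
    # delegating to super() before adding its own behaviour.
    def describe(self) -> str:
        parent_result = super().describe()
        return parent_result + " -> child"


result = Child().describe()
print(result)  # base -> child
```

The `__init__` methods in the network classes in these notes follow the same pattern: they override the parent's method and call `super().__init__(...)` first.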

2. A simple neural network

    import torch
    from torch import nn


    class yu(nn.Module):
        def __init__(self, *args, **kwargs) -> None:
            super().__init__(*args, **kwargs)

        def forward(self, input):
            # This "network" simply adds 1 to its input.
            output = input + 1
            return output


    hh = yu()
    x = torch.tensor(1.0)
    output = hh(x)  # calling the module runs forward()
    print(output)  # tensor(2.)
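The call `hh(x)` works because calling a module object ends up running its `forward` method. The sketch below imitates only that dispatch in plain Python. `TinyModule` and `AddOne` are hypothetical stand-ins, and the real `nn.Module` does much more (hooks, parameter registration), so treat this as an illustration rather than PyTorch's implementation.

```python
class TinyModule:
    # Hypothetical stand-in for nn.Module: calling the object runs forward().
    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must define forward()")


class AddOne(TinyModule):
    def forward(self, value):
        return value + 1


add_one = AddOne()
result = add_one(1.0)  # dispatches to add_one.forward(1.0)
print(result)  # 2.0
```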

3. Convolution
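Conceptually, a 2D convolution slides the kernel over the input and, at each position, sums the element-wise products of the kernel and the window under it. The sketch below does this in plain Python for the same 5x5 input and 3x3 kernel used in this section. The helper name `conv2d_plain` is made up for illustration, and like PyTorch's `conv2d` it does not flip the kernel (strictly speaking, it computes a cross-correlation).

```python
def conv2d_plain(image, kernel, stride=1, padding=0):
    """Slide `kernel` over a zero-padded `image` (both lists of lists)."""
    height, width = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])

    # Surround the image with `padding` rows and columns of zeros.
    padded = [[0] * (width + 2 * padding) for _ in range(height + 2 * padding)]
    for r in range(height):
        for c in range(width):
            padded[r + padding][c + padding] = image[r][c]

    out_h = (height + 2 * padding - k_h) // stride + 1
    out_w = (width + 2 * padding - k_w) // stride + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the window.
            total = sum(
                kernel[a][b] * padded[i * stride + a][j * stride + b]
                for a in range(k_h)
                for b in range(k_w)
            )
            row.append(total)
        result.append(row)
    return result


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

no_padding = conv2d_plain(image, kernel, stride=1, padding=0)
with_padding = conv2d_plain(image, kernel, stride=1, padding=1)
print(no_padding)  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(len(with_padding), len(with_padding[0]))  # 5 5
```

With `padding=1` the output keeps the 5x5 size of the input, and its inner 3x3 block equals the result without padding.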

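    import torch
    import torch.nn.functional as F

    input = torch.tensor([[1, 2, 0, 3, 1],
                          [0, 1, 2, 3, 1],
                          [1, 2, 1, 0, 0],
                          [5, 2, 3, 1, 1],
                          [2, 1, 0, 1, 1]])

    kernel = torch.tensor([[1, 2, 1],
                           [0, 1, 0],
                           [2, 1, 0]])

    # conv2d expects 4-D tensors: the input is (batch, channels, height, width)
    # and the weight is (out_channels, in_channels, kernel_height, kernel_width).
    input = torch.reshape(input, (1, 1, 5, 5))
    kernel = torch.reshape(kernel, (1, 1, 3, 3))
    print(input.shape)
    print(kernel.shape)

    # padding adds a border of zeros around the input; stride is the step size of the kernel
    output = F.conv2d(input, kernel, stride=1, padding=1)
    print(output)

3.2 Convolution in practice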
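The shapes that appear in this section can be predicted from the output-size formula in the `Conv2d` documentation. The helper below, `conv_out_size` (a name chosen for this sketch), applies that formula to one spatial dimension. It shows why a 5x5 input with `padding=1` stays 5x5, and why the 32x32 CIFAR10 images become 30x30 after a 3x3 convolution without padding.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    # floor((size + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


same_size = conv_out_size(5, kernel_size=3, stride=1, padding=1)
cifar_size = conv_out_size(32, kernel_size=3, stride=1, padding=0)
print(same_size)   # 5
print(cifar_size)  # 30

# Reshaping (64, 6, 30, 30) to (-1, 3, 30, 30) keeps the number of elements,
# so halving the channels doubles the batch dimension.
elements = 64 * 6 * cifar_size * cifar_size
folded_batch = elements // (3 * cifar_size * cifar_size)
print(folded_batch)  # 128
```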

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Conv2d
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    # Compose takes a list of transforms
    dataset_transform = torchvision.transforms.Compose([
        torchvision.transforms.ToTensor()
    ])

    # train=False selects the CIFAR10 test split; download=False assumes the data is already in ./dataset
    test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=False)
    test_load = DataLoader(dataset=test_set, batch_size=64, shuffle=True, num_workers=0, drop_last=False)


    class yu(nn.Module):
        def __init__(self, *args, **kwargs) -> None:
            super(yu, self).__init__(*args, **kwargs)
            self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

        def forward(self, x):
            x = self.conv1(x)
            return x


    hh = yu()
    # print(hh)

    # Raw string so the backslashes in the Windows path are not treated as escape sequences
    writer = SummaryWriter(r"C:\yolo\yolov5-5.0\logs")
    step = 0
    for data in test_load:
        imgs, targets = data
        output = hh(imgs)
        print(imgs.shape)    # torch.Size([64, 3, 32, 32]) for a full batch
        print(output.shape)  # torch.Size([64, 6, 30, 30]) for a full batch
        writer.add_images("input", imgs, step)
        # add_images expects 3-channel images here, so fold the 6 channels into 3 (the batch dimension doubles)
        output = torch.reshape(output, (-1, 3, 30, 30))
        writer.add_images("output", output, step)
        step = step + 1

    writer.close()

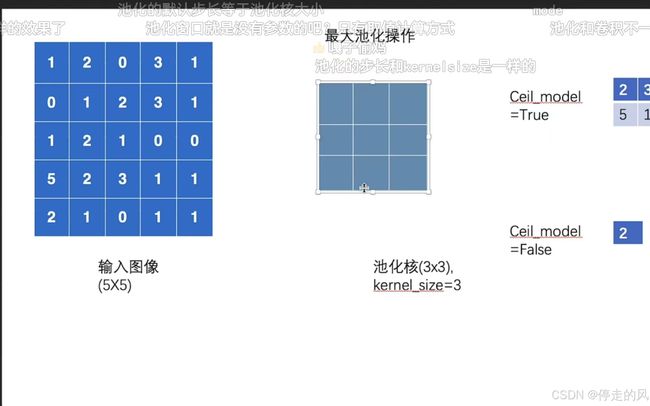

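4. Pooling

Max pooling takes the maximum value inside each pooling window and outputs a downsampled image. This keeps the most prominent features while reducing the amount of data and computation.

- dilation: the spacing between the elements of the pooling window, the same idea as in dilated (atrous) convolution
- ceil_mode: whether the output size is rounded up (True, partial windows at the edge are kept) or rounded down (False, partial windows are dropped)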
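The effect of `ceil_mode` is easiest to see on a small example. The following plain-Python sketch applies max pooling with a 3x3 window to a 5x5 input. `max_pool2d_plain` is a name invented for this sketch, and it assumes the stride equals the kernel size (the default in `MaxPool2d`), no padding, no dilation, and an input at least as large as the window.

```python
import math


def max_pool2d_plain(image, kernel_size, ceil_mode=False):
    """Max pooling with stride == kernel_size, no padding, no dilation."""
    stride = kernel_size

    def out_size(size):
        steps = (size - kernel_size) / stride
        count = (math.ceil(steps) if ceil_mode else math.floor(steps)) + 1
        # A partial window kept by ceil_mode must still start inside the image.
        if ceil_mode and (count - 1) * stride >= size:
            count -= 1
        return count

    height, width = len(image), len(image[0])
    result = []
    for i in range(out_size(height)):
        row = []
        for j in range(out_size(width)):
            # Clip the window at the image border (only matters with ceil_mode).
            window = [
                image[r][c]
                for r in range(i * stride, min(i * stride + kernel_size, height))
                for c in range(j * stride, min(j * stride + kernel_size, width))
            ]
            row.append(max(window))
        result.append(row)
    return result


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]

floor_result = max_pool2d_plain(image, kernel_size=3, ceil_mode=False)
ceil_result = max_pool2d_plain(image, kernel_size=3, ceil_mode=True)
print(floor_result)  # [[2]]
print(ceil_result)   # [[2, 3], [5, 1]]
```

With `ceil_mode=False` only the one complete 3x3 window is used. With `ceil_mode=True` the incomplete windows along the right and bottom edges also contribute a value each.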

    import torch
    from torch import nn
    from torch.nn import MaxPool2d

    # Use a float dtype: max pooling may not be implemented for integer tensors
    input = torch.tensor([[1, 2, 0, 3, 1],
                          [0, 1, 2, 3, 1],
                          [1, 2, 1, 0, 0],
                          [5, 2, 3, 1, 1],
                          [2, 1, 0, 1, 1]], dtype=torch.float32)
    input = torch.reshape(input, (-1, 1, 5, 5))  # batch size, channels, height, width
    print(input.shape)


    class yu(nn.Module):
        def __init__(self, *args, **kwargs) -> None:
            super().__init__(*args, **kwargs)
            # stride defaults to kernel_size
            self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

        def forward(self, input):
            output = self.maxpool1(input)
            return output


    hh = yu()
    output = hh(input)
    print(output)

5. Nonlinear activation
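ReLU, the activation used in this section, keeps positive values unchanged and replaces negative values with 0. A minimal plain-Python sketch of that rule, applied to the same 5x5 values as the PyTorch code in this section (`relu_plain` is a name made up for this sketch):

```python
def relu_plain(rows):
    # ReLU: max(0, x) applied to every element.
    return [[max(0, value) for value in row] for row in rows]


values = [[1, 2, 0, -3, 1],
          [0, 1, 2, -3, 1],
          [1, 2, -1, 0, 0],
          [5, 2, 3, 1, 1],
          [2, 1, -1, 1, 1]]

activated = relu_plain(values)
for row in activated:
    print(row)
# [1, 2, 0, 0, 1]
# [0, 1, 2, 0, 1]
# [1, 2, 0, 0, 0]
# [5, 2, 3, 1, 1]
# [2, 1, 0, 1, 1]
```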

    import torch
    from torch import nn
    from torch.nn import ReLU

    input = torch.tensor([[1, 2, 0, -3, 1],
                          [0, 1, 2, -3, 1],
                          [1, 2, -1, 0, 0],
                          [5, 2, 3, 1, 1],
                          [2, 1, -1, 1, 1]])
    input = torch.reshape(input, (-1, 1, 5, 5))  # batch size, channels, height, width
    print(input.shape)


    class yu(nn.Module):
        def __init__(self, *args, **kwargs) -> None:
            super().__init__(*args, **kwargs)
            self.relu1 = ReLU()

        def forward(self, input):
            # Negative entries become 0; all other entries pass through unchanged.
            output = self.relu1(input)
            return output


    hh = yu()
    output = hh(input)
    print(output)

Thanks!