HarmonyOS AudioRenderer from 0 to 1: My Self-Study Notes and Pitfalls (API 14)

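I have recently been digging into HarmonyOS audio development. For playback, HarmonyOS offers two main options: AVPlayer (Media Kit) and AudioRenderer (Audio Kit). AVPlayer is the quick way to get playback working, while AudioRenderer exposes lower-level control over the audio data and suits custom requirements. Written from the point of view of a developer teaching himself the API, this post records the whole process of building audio playback with AudioRenderer: the code, state management, best practices, and the pitfalls I ran into.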

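I. Environment setup and core concepts

1. Development environment

- SDK: HarmonyOS SDK 5.0.3
- Tooling: DevEco Studio 5.0.7
- Goal: render PCM audio on API 14 (note that the official recommendation is now to move to API 15)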

2. Core characteristics of AudioRenderer

- Low-level control: PCM data can be preprocessed before playback (unlike AVPlayer, which wraps the whole pipeline)

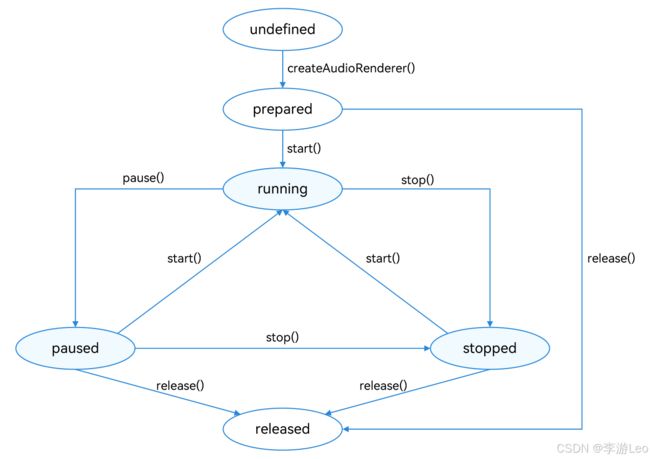

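- State machine: the states you work with are prepared, running, paused, stopped, and released (the audio.AudioState enum also defines STATE_NEW and STATE_INVALID; there is no dedicated error state)
- Asynchronous callback: audio data is supplied through on('writeData')
- Resource management: a strict lifecycle (release() must be called explicitly)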

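II. Development workflow: from creating the instance to rendering data

1. Understanding the AudioRenderer state diagram

- Key state transitions:
  - prepared → running: call start()
  - running → paused: call pause()
  - any state → released: call release() (irreversible)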
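To make these transitions concrete, here is a minimal sketch of the state machine as a lookup table in plain TypeScript. The `State` and `Action` types and the `nextState` function are names I made up for illustration; they are not part of Audio Kit. The rows for start(), pause(), and release() follow this post, while the row for stop() is my own assumption.

```typescript
// Local stand-ins for illustration; these are NOT the audio.AudioState enum.
type State = "prepared" | "running" | "paused" | "stopped" | "released";
type Action = "start" | "pause" | "stop" | "release";

// States from which each action is allowed.
// The "stop" row is an assumption of this sketch.
const allowedFrom: Record<Action, State[]> = {
  start: ["prepared", "paused", "stopped"],
  pause: ["running"],
  stop: ["running", "paused"],
  release: ["prepared", "running", "paused", "stopped"],
};

// State reached when an allowed action succeeds.
const target: Record<Action, State> = {
  start: "running",
  pause: "paused",
  stop: "stopped",
  release: "released",
};

// Returns the next state, or null when the action is not allowed from `state`.
function nextState(state: State, action: Action): State | null {
  return allowedFrom[action].indexOf(state) !== -1 ? target[action] : null;
}

console.log(nextState("prepared", "start")); // running
console.log(nextState("released", "start")); // null
```

Once the table says "released", no action leads anywhere else, which is the irreversibility noted in the list.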

2. Step 1: create the instance and configure its parameters

    import { audio } from '@kit.AudioKit';

    const audioStreamInfo: audio.AudioStreamInfo = {
      samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_48000, // 48 kHz
      channels: audio.AudioChannel.CHANNEL_2, // stereo
      sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE, // 16-bit little-endian
      encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW // raw PCM
    };

    const audioRendererInfo: audio.AudioRendererInfo = {
      usage: audio.StreamUsage.STREAM_USAGE_MUSIC, // music scenario
      rendererFlags: 0
    };

    const options: audio.AudioRendererOptions = {
      streamInfo: audioStreamInfo,
      rendererInfo: audioRendererInfo
    };

    // Create the instance (asynchronous callback)
    audio.createAudioRenderer(options, (err, renderer) => {
      if (err) {
        console.error(`Failed to create AudioRenderer: ${err.message}`);
        return;
      }
      console.log('AudioRenderer instance created');
      this.renderer = renderer;
    });

Pitfalls:

- StreamUsage must match the scenario (for example, use STREAM_USAGE_GAME for games); a mismatched usage can cause the audio to be interrupted
- The sampling rate and channel count must match the audio file (this example uses 48 kHz stereo)
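The second pitfall is easier to see with numbers. The sketch below computes the byte rate of raw PCM and the playback time of a payload; `pcmBytesPerSecond` and `pcmDurationSeconds` are helper names I invented for this illustration, not Audio Kit functions.

```typescript
// Bytes of raw PCM consumed per second of playback.
function pcmBytesPerSecond(sampleRate: number, channels: number, bitsPerSample: number): number {
  return sampleRate * channels * (bitsPerSample / 8);
}

// Playback time, in seconds, of a raw PCM payload of `byteLength` bytes.
function pcmDurationSeconds(
  byteLength: number,
  sampleRate: number,
  channels: number,
  bitsPerSample: number
): number {
  return byteLength / pcmBytesPerSecond(sampleRate, channels, bitsPerSample);
}

const oneMiB = 1024 * 1024;

// 48 kHz, stereo, 16-bit: the configuration used in step 1
console.log(pcmBytesPerSecond(48000, 2, 16)); // 192000
console.log(pcmDurationSeconds(oneMiB, 48000, 2, 16).toFixed(2)); // 5.46

// The same bytes declared as 44.1 kHz mono
console.log(pcmDurationSeconds(oneMiB, 44100, 1, 16).toFixed(2)); // 11.89
```

The same 1 MiB of data lasts about 5.46 s under one configuration and about 11.89 s under the other, so a renderer configured differently from the file plays it at the wrong speed.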

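3. Step 2: subscribe to the data callback (the core logic)

    import { audio } from '@kit.AudioKit';
    import { fileIo as fs } from '@kit.CoreFileKit';

    // Read options for fs.readSync: offset into the file and number of bytes
    class Options {
      offset?: number;
      length?: number;
    }

    let file: fs.File = fs.openSync(filePath, fs.OpenMode.READ_ONLY);
    let bufferSize = 0; // bytes handed to the renderer so far

    // From API 12 the callback can return a result (recommended)
    const writeDataCallback = (buffer: ArrayBuffer) => {
      const options: Options = {
        offset: bufferSize,
        length: buffer.byteLength
      };
      try {
        fs.readSync(file.fd, buffer, options);
        bufferSize += buffer.byteLength;
        // Data is valid: return VALID (the buffer must be completely filled!)
        return audio.AudioDataCallbackResult.VALID;
      } catch (error) {
        console.error('Failed to read file:', error);
        // Data is invalid: return INVALID (the system retries)
        return audio.AudioDataCallbackResult.INVALID;
      }
    };

    // Register the callback
    this.renderer?.on('writeData', writeDataCallback);

Best practices:

- Data-filling rules:
  - The buffer must be completely filled (otherwise you get noise or stuttering)
  - Last frame: the remaining data followed by empty (zero) data, so that no stale data is played
- API version differences:
  - API 11: no return value (the buffer must always be filled)
  - API 12+: the return value tells the system whether the data is valid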
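The "last frame" rule can be tried out without a device. Below is a minimal sketch that serves an in-memory PCM payload in buffer-sized pieces and zero-pads the final one. `PcmFeeder` is a hypothetical class written for this post; it only models the filling logic and does not call Audio Kit or the file system.

```typescript
// Hypothetical helper: hands out an in-memory PCM payload one buffer at a time.
class PcmFeeder {
  private source: Uint8Array;
  private offset = 0;

  constructor(source: Uint8Array) {
    this.source = source;
  }

  // Fills `buffer` completely: copies what is left of the source, then pads
  // the rest with zeros. Returns the number of real (non-padding) bytes.
  fill(buffer: ArrayBuffer): number {
    const out = new Uint8Array(buffer);
    const remaining = this.source.length - this.offset;
    const count = Math.max(0, Math.min(out.length, remaining));
    out.set(this.source.subarray(this.offset, this.offset + count));
    out.fill(0, count); // overwrite stale bytes left over from the previous call
    this.offset += count;
    return count;
  }
}

const feeder = new PcmFeeder(Uint8Array.from([1, 2, 3, 4, 5, 6, 7, 8, 9, 10]));
const buffer = new ArrayBuffer(4); // reused across calls, like a recycled render buffer
feeder.fill(buffer); // bytes 1..4
feeder.fill(buffer); // bytes 5..8
const realBytes = feeder.fill(buffer); // only 2 bytes are left
console.log(realBytes, Array.from(new Uint8Array(buffer))); // 2 real bytes; buffer holds 9, 10, 0, 0
```

Without the `out.fill(0, count)` line the third buffer would still carry 7 and 8 from the previous call, which is exactly the stale data the rule warns about.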

4. Step 3: state control and lifecycle management

    // Start playback (allowed from prepared, paused, or stopped)
    startPlayback() {
      const validStates = [
        audio.AudioState.STATE_PREPARED,
        audio.AudioState.STATE_PAUSED,
        audio.AudioState.STATE_STOPPED
      ];
      if (!validStates.includes(this.renderer?.state.valueOf() ?? -1)) {
        console.error('Invalid state: cannot start');
        return;
      }
      this.renderer?.start((err) => {
        if (err) {
          console.error('Failed to start:', err);
        } else {
          console.log('Playback started');
        }
      });
    }

    // Release resources (irreversible)
    releaseResources() {
      if (this.renderer?.state !== audio.AudioState.STATE_RELEASED) {
        this.renderer?.release((err) => {
          if (err) {
            console.error('Failed to release:', err);
          } else {
            console.log('Resources released');
          }
          fs.closeSync(file); // close the file handle
        });
      }
    }

Why the state check is necessary:

    // Wrong: calling start() without checking the state
    this.renderer?.start(); // may throw when the renderer is in the released state

    // Right: always check the state first
    if (this.renderer?.state === audio.AudioState.STATE_PREPARED) {
      this.renderer.start();
    }

III. Complete example: from initialization to playback control

    import { audio } from '@kit.AudioKit';
    import { fileIo as fs } from '@kit.CoreFileKit';

    class AudioRendererDemo {
      private renderer?: audio.AudioRenderer;
      private file?: fs.File;
      private bufferSize = 0;
      private filePath = getContext().cacheDir + '/test.pcm';

      init() {
        // 1. Build the configuration
        const config = this.getAudioConfig();
        // 2. Create the instance
        audio.createAudioRenderer(config, (err, renderer) => {
          if (err) return console.error('Initialization failed:', err);
          this.renderer = renderer;
          this.bindCallbacks(); // register callbacks
          this.openAudioFile(); // open the file
        });
      }

      private getAudioConfig(): audio.AudioRendererOptions {
        return {
          streamInfo: {
            samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_44100,
            channels: audio.AudioChannel.CHANNEL_1,
            sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE,
            encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW
          },
          rendererInfo: {
            usage: audio.StreamUsage.STREAM_USAGE_MUSIC,
            rendererFlags: 0
          }
        };
      }

      private bindCallbacks() {
        this.renderer?.on('writeData', this.handleAudioData.bind(this));
        this.renderer?.on('stateChange', (state: audio.AudioState) => {
          console.log(`State changed: ${audio.AudioState[state]}`);
        });
      }

      private handleAudioData(buffer: ArrayBuffer): audio.AudioDataCallbackResult {
        // Read the next chunk of the file into the buffer
        const bytesRead = fs.readSync(this.file!.fd, buffer);
        if (bytesRead < buffer.byteLength) {
          // End of file: fill the rest of the buffer with zeros (silence)
          new Uint8Array(buffer).fill(0, bytesRead);
        }
        return audio.AudioDataCallbackResult.VALID;
      }

      private openAudioFile() {
        this.file = fs.openSync(this.filePath, fs.OpenMode.READ_ONLY);
      }

      // Control methods
      start() { /* see startPlayback above */ }
      pause() { /* check the state, then call pause() */ }
      stop() { /* stop and release the file resource */ }
      release() { /* see releaseResources above */ }
    }

IV. Common problems and solutions

1. Noise / stuttering

- Cause: the buffer was not completely filled, or it contains stale data
- Solution:

    // Padding logic (example: zero-fill when the read comes up short)
    const buffer = new ArrayBuffer(4096); // assume a 4096-byte buffer
    const bytesRead = fs.readSync(file.fd, buffer);
    if (bytesRead < buffer.byteLength) {
      const view = new DataView(buffer);
      // Fill the remaining space with 0 (silence)
      for (let i = bytesRead; i < buffer.byteLength; i++) {
        view.setUint8(i, 0);
      }
    }

2. State exception: Invalid State Error

- Cause: a method was called in the wrong state (for example, start() in the released state)
- Solution:

    // Wrap the state check in a helper
    private checkState(allowedStates: audio.AudioState[]): boolean {
      return allowedStates.includes(this.renderer?.state.valueOf() ?? -1);
    }

    // Usage
    if (this.checkState([audio.AudioState.STATE_PREPARED])) {
      this.renderer?.start();
    }

3. Audio interruption: a higher-priority app takes the audio focus

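- Solution: listen for audio interruption (focus) events on the renderer

    this.renderer?.on('audioInterrupt', (event: audio.InterruptEvent) => {
      // With forceType INTERRUPT_FORCE the system has already applied the action;
      // pause() and start() check the state first, so calling them again is harmless
      switch (event.hintType) {
        case audio.InterruptHint.INTERRUPT_HINT_PAUSE:
          this.pause(); // focus lost: pause playback
          break;
        case audio.InterruptHint.INTERRUPT_HINT_RESUME:
          this.start(); // focus regained: resume playback
          break;
      }
    });

V. Advanced optimization: performance and user experience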

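1. Multithreading

- Problem: a writeData callback that does blocking file reads on the UI thread can stall the interface
- Approach: read the file on a Worker thread
- Caveat: this sketch prefetches the whole file into memory; for long files, cap the queue and read on demand

    // Main thread. writeData must fill the buffer before it returns, so the
    // worker prefetches chunks and the callback only copies them from memory.
    import { worker, MessageEvents } from '@kit.ArkTS';

    const chunks: ArrayBuffer[] = [];
    // Assumptions: the worker path below, and writeData buffers of getBufferSizeSync() bytes
    const reader = new worker.ThreadWorker('entry/ets/workers/AudioWorker.ets');
    reader.onmessage = (e: MessageEvents) => { chunks.push(e.data as ArrayBuffer); };
    reader.postMessage({ path: filePath, chunkSize: this.renderer?.getBufferSizeSync() });

    this.renderer?.on('writeData', (buffer: ArrayBuffer) => {
      const chunk = chunks.shift();
      if (chunk === undefined) return audio.AudioDataCallbackResult.INVALID; // nothing prefetched yet
      new Uint8Array(buffer).set(new Uint8Array(chunk).subarray(0, buffer.byteLength));
      return audio.AudioDataCallbackResult.VALID;
    });

    // AudioWorker.ets
    import { worker, MessageEvents } from '@kit.ArkTS';
    import { fileIo as fs } from '@kit.CoreFileKit';

    worker.workerPort.onmessage = (e: MessageEvents) => {
      const file = fs.openSync(e.data.path, fs.OpenMode.READ_ONLY);
      let bytesRead = 0;
      do {
        const chunk = new ArrayBuffer(e.data.chunkSize); // zero-filled, so a short final read is already padded
        bytesRead = fs.readSync(file.fd, chunk);
        if (bytesRead > 0) worker.workerPort.postMessage(chunk);
      } while (bytesRead > 0);
      fs.closeSync(file);
    };

2. Buffer management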

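- Metric: watch the underflow counter, which rises whenever the renderer runs out of data

    // Poll the underflow counter once per second
    let lastUnderflowCount = 0;
    setInterval(() => {
      const count = this.renderer?.getUnderflowCountSync() ?? 0;
      if (count > lastUnderflowCount) {
        console.log(`Underflow count rose to ${count}`);
        // Trigger a preload
        this.preloadAudioChunk();
      }
      lastUnderflowCount = count;
    }, 1000);

3. Stronger error handling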

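- Centralized error handling: AudioRenderer reports errors through the callback or promise of each call, so route them all through one handler

    import { BusinessError } from '@kit.BasicServicesKit';

    // One handler for the errors reported by AudioRenderer calls
    private handleError(err: BusinessError) {
      console.error(`Audio rendering error ${err.code}: ${err.message}`);
      // Automatic retry logic (which codes deserve a retry is an app decision)
      if (err.code === audio.AudioErrors.ERROR_SYSTEM) {
        this.reloadAudioFile();
      }
    }

    // Usage
    this.renderer?.start().catch((err: BusinessError) => this.handleError(err));

VI. Summary: what I learned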

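1. Key takeaways

- The AudioRenderer state machine is the foundation of everything else
- The data-filling rule is strict (the buffer must be completely filled)
- Resource management matters (release() must be called)

2. Pitfalls recap

- Crashes caused by missing state checks (more than 60% of all the errors I ran into)
- API version differences (pay particular attention to the return value of the writeData callback)
- Audio policy problems caused by a misconfigured StreamUsage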

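3. Suggested learning path

- Read the official documentation (focus on the AudioRenderer API reference)
- Practice with a demo: start from the official sample and adapt it (the example in this post is open source: GitHub)
- Debugging tip: log state changes with console.log and combine that with the profiling tools in DevEco Studio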
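For the debugging tip, a readable state name in the log helps more than a bare number. The sketch below maps numeric state codes to names and formats one log line per transition. `describeState` and `formatTransition` are hypothetical helpers, and the numeric codes reflect my reading of the audio.AudioState enum, so please verify them against the API reference before relying on them.

```typescript
// Numeric codes as I understand the audio.AudioState enum (an assumption of this sketch).
const stateNames = new Map<number, string>([
  [-1, "STATE_INVALID"],
  [0, "STATE_NEW"],
  [1, "STATE_PREPARED"],
  [2, "STATE_RUNNING"],
  [3, "STATE_STOPPED"],
  [4, "STATE_RELEASED"],
  [5, "STATE_PAUSED"],
]);

// Returns the name for a state code, or UNKNOWN(code) for anything unmapped.
function describeState(code: number): string {
  return stateNames.get(code) ?? `UNKNOWN(${code})`;
}

// Formats one log line for a transition, e.g. inside a 'stateChange' callback.
function formatTransition(previous: number, current: number): string {
  return `${describeState(previous)} -> ${describeState(current)}`;
}

console.log(formatTransition(1, 2)); // STATE_PREPARED -> STATE_RUNNING
```

Keeping the previous state around and logging the pair makes an unexpected jump, such as running straight to released, easy to spot in the log.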

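Appendix: resources

- Official documentation:
  - AudioRenderer development guide
  - StreamUsage enum reference
- Sample code: Gitee repository

Finally, I hope these notes help you hit fewer pitfalls and get comfortable with this API sooner. If you have questions, feel free to leave a comment, and you are welcome to follow me.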