【异构计算】OpenCL矩阵转置

介绍

矩阵转置,主要的技巧还是利用好local memory ,防止local memory,以及glabol memory的读取尽量是合并读写。

完整代码一:

main.cpp代码

#include <iostream>

#include <string>

#include <fstream>

#include <sstream>

#include <time.h>

#ifdef _APPLE_

#include <OpenCL/OpenCL.h>

#else

#include <CL/cl.h>

#endif

#define MATRIXMULLTIPLY

#define N 6

#define K 8

#define L 5

//Functio to check and handle OpenCL errors

inline void checkErr(cl_int err,const char *name)

{

if(err !=CL_SUCCESS)

{

std::cerr <<"ERROR: "<< name <<"("<<err<< ")"<<std::endl;

exit(EXIT_FAILURE);

}

}

cl_context CreateContext()

{

cl_int errNum;

cl_uint numPlatforms;

cl_platform_id firstPlatformId;

cl_context context = NULL;

// First, select an OpenCL platform to run on. For this example, we simply choose the first available platform. Normally, you would

// query for all available platforms and select the most appropriate one.

errNum = clGetPlatformIDs(1, &firstPlatformId, &numPlatforms);

if (errNum != CL_SUCCESS || numPlatforms <= 0)

{

std::cerr << "Failed to find any OpenCL platforms." << std::endl;

return NULL;

}

// Next, create an OpenCL context on the platform. Attempt to create a GPU-based context, and if that fails, try to create

// a CPU-based context.

cl_context_properties contextProperties[] = { CL_CONTEXT_PLATFORM,(cl_context_properties)firstPlatformId, 0 };

context = clCreateContextFromType(contextProperties, CL_DEVICE_TYPE_GPU,NULL, NULL, &errNum);

if (errNum != CL_SUCCESS)

{

std::cout << "Could not create GPU context, trying CPU..." << std::endl;

context = clCreateContextFromType(contextProperties, CL_DEVICE_TYPE_CPU,NULL, NULL, &errNum);

if (errNum != CL_SUCCESS)

{

std::cerr << "Failed to create an OpenCL GPU or CPU context." << std::endl;

return NULL;

}

}

return context;

}

int main( int argc, char * argv[])

{

// Use the first platform

cl_int errNum;

cl_platform_id platformID;

cl_context context =NULL;

cl_device_id device;

errNum = clGetPlatformIDs(1,&platformID,NULL);

checkErr(errNum,"clGetPlatformIDS");

std::cout<<"Platform ID: "<<platformID<<std::endl;

context = CreateContext( );

if(context == NULL)

{

std::cerr << "Failed to create OpenCL context." << std::endl;

return NULL;

}

errNum = clGetDeviceIDs(platformID,CL_DEVICE_TYPE_GPU,1,&device,NULL);

if(errNum !=CL_SUCCESS)

{

std::cerr <<"Could not create CL_DEVICE_TYPE_GPU context, trying CL_DEVICE_TYPE_CPU..."<<std::endl;

errNum =clGetDeviceIDs(platformID,CL_DEVICE_TYPE_CPU,1,&device,NULL);

std::cout <<"Device: "<<device<<std::endl;

if(errNum !=CL_SUCCESS)

{

checkErr(errNum,"clGetDeviceIDs(..CL_DEVICE_TYPE_ALL..)");

}

}

cl_command_queue commandQueue = clCreateCommandQueue(context,device,0,&errNum);

checkErr(errNum,"clCreateCommandQueue( )");

cl_int Mat_A_width = N;

cl_int Mat_A_height = K;

cl_int Mat_B_width = K;

cl_int Mat_B_height = L;

float *MatA =(float*)malloc(sizeof(float)*Mat_A_width*Mat_A_height);

if(MatA ==NULL)

{

std::cerr<<"Failed to Allocationing Memmey ."<<std::endl;

}

#ifdef MATRIXMULLTIPLY

float *MatB =(float*)malloc(sizeof(float)*Mat_B_width*Mat_B_height);

float *MatC =(float*)malloc(sizeof(float)*Mat_A_width*Mat_B_height);

#else

float *MatC =(float*)malloc(sizeof(float)*Mat_A_width*Mat_A_height);

#endif

std::cout<<"=====MatA: " << Mat_A_width << "X" << Mat_A_height ;//<< std::endl;

for(int i = 0; i< Mat_A_width*Mat_A_height; i++)

{

MatA[i] = std::rand()*0.25;

//MatA[i] = 4.5;

if((i%Mat_A_height ==0)||(i == 0))

{

std::cout << std::endl;

}

std::cout<<MatA[i]<< "\t";

}

std::cout<<std::endl;

//Allocate space for Matrix A on the device

cl_mem bufferA = clCreateBuffer(context,

CL_MEM_READ_ONLY,//|CL_MEM_COPY_HOST_PTR,

Mat_A_width*Mat_A_height*sizeof(float),

NULL,

&errNum);

checkErr(errNum,"clCreateBuffer(...bufferA..)");

errNum = clEnqueueWriteBuffer(commandQueue,bufferA,CL_TRUE,0,Mat_A_width*Mat_A_height*sizeof(float),(void*)MatA, 0, NULL,NULL);

#ifdef MATRIXMULLTIPLY

std::cout<<"MatB: "<<Mat_B_width <<"X"<<Mat_B_height<<std::endl;

for(int i = 0; i< Mat_B_width*Mat_B_height; i++)

{

MatB[i] = std::rand()*0.25;

//MatB[i] = 2.0;

if((i%Mat_B_height ==0)||(i == 0))

{

std::cout << std::endl;

}

std::cout<<MatA[i]<< " ";

}

std::cout<<std::endl;

//Allocate space for Matrix B on the device

cl_mem bufferB = clCreateBuffer(context,

CL_MEM_READ_ONLY,//|CL_MEM_COPY_HOST_PTR,

Mat_B_width*Mat_B_height*sizeof(float),

NULL,

&errNum);

checkErr(errNum,"clCreateBuffer(...bufferB..)");

//Copy Matrix B to the device

errNum = clEnqueueWriteBuffer(commandQueue,bufferB,CL_TRUE, 0,Mat_B_width*Mat_B_height*sizeof(float),(void*)MatB,0,NULL,NULL);

//Allocate space for Matrix C on the device

cl_mem bufferC = clCreateBuffer(context,

CL_MEM_READ_ONLY,//|CL_MEM_COPY_HOST_PTR,

Mat_A_width*Mat_B_height*sizeof(float),

NULL,

&errNum);

checkErr(errNum,"clCreateBuffer(...bufferC..)");

#else

//Allocate space for Matrix C on the device

cl_mem bufferC = clCreateBuffer(context,

CL_MEM_READ_ONLY,//|CL_MEM_COPY_HOST_PTR,

Mat_A_width*Mat_A_height*sizeof(float),

NULL,

&errNum);

checkErr(errNum,"clCreateBuffer(...bufferC..)");

#endif

// We assume that the program source si stroed int the variable

cl_program program;

const char* fileName = "Matrixkernel.cl";

std::ifstream kernelFile(fileName,std::ios::in);

if( !kernelFile.is_open())

{

std::cerr <<"Failed to open file reading:"<<fileName<<std::endl;

return NULL;

}

std::ostringstream oss;

oss << kernelFile.rdbuf();

std::string srcStdStr = oss.str();

const char *srcStr = srcStdStr.c_str();

program = clCreateProgramWithSource(context, 1,(const char**)&srcStr,NULL, NULL);

if (program == NULL)

{

std::cerr << "Failed to create OpenCL program from source." << std::endl;

return NULL;

}

errNum = clBuildProgram(program, 0, NULL, NULL, NULL, NULL);

if (errNum != CL_SUCCESS)

{

// Determine the reason for the error

char buildLog[16384];

clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG,sizeof(buildLog), buildLog, NULL);

std::cerr << "Error in kernel: " << std::endl;

std::cerr << buildLog;

clReleaseProgram(program);

return NULL;

}

#ifdef MATRIXMULLTIPLY

// Create the kernel

cl_kernel kernel = clCreateKernel(program,"MulltiplySample",NULL);

if(kernel ==NULL)

{

std::cerr<<"Faile to create kernel."<<std::endl;

return NULL;

}

//set the kernel arguments

clSetKernelArg(kernel, 0,sizeof(cl_mem), (void*) &bufferC);

clSetKernelArg(kernel, 1,sizeof(cl_int), (void*) &Mat_A_width);

clSetKernelArg(kernel, 2,sizeof(cl_int), (void*) &Mat_A_height);

clSetKernelArg(kernel, 3,sizeof(cl_int), (void*) &Mat_B_width);

clSetKernelArg(kernel, 4,sizeof(cl_int), (void*) &Mat_B_height);

clSetKernelArg(kernel, 5,sizeof(cl_mem), (void*) &bufferA);

clSetKernelArg(kernel, 6,sizeof(cl_mem), (void*) &bufferB);

//Set Local and global workgroup sizes

size_t globalws[2]={Mat_A_width,Mat_B_height};

size_t localws[2]={Mat_A_width,Mat_B_height};

//float strTime = clock();

//Execte the kernel

errNum = clEnqueueNDRangeKernel(commandQueue,kernel,2,NULL,globalws,localws,0,NULL,NULL);

if(errNum !=CL_SUCCESS)

{

std::cerr<<"Faile to Execte the kernal.."<<std::endl;

return NULL;

}

errNum = clEnqueueReadBuffer(commandQueue,bufferC,CL_TRUE,0,Mat_B_height*Mat_A_width*sizeof(float),(void*)MatC,0,NULL,NULL);

std::cout<<"MatrixC:"<<Mat_A_width<<"X"<<Mat_B_height<<std::endl;

for(int i =0; i< Mat_A_width*Mat_B_height; i++)

{

if((i != 0)&&(i%Mat_B_height == 0))

{

std::cout<<std::endl;

}

std::cout<<MatC[i]<<"\t";

}

std::cout << std::endl;

clReleaseKernel(kernel);

#else

cl_kernel Trapsposekernel;

cl_int blockSize =16;

if(Mat_A_width*Mat_A_height >1000)

{

Trapsposekernel = clCreateKernel(program,"MatrixTranspose",NULL);

std::cout<<"CreateKernel in MatrixTranspose"<<std::endl;

if(Trapsposekernel == NULL)

{

std::cerr<<"Faile to Create TrapsposeKernel."<< std::endl;

return NULL;

}

clSetKernelArg(Trapsposekernel, 0,sizeof(cl_mem), (void*) &bufferC);

clSetKernelArg(Trapsposekernel, 1,sizeof(cl_mem), (void*) &bufferA);

clSetKernelArg(Trapsposekernel, 2,sizeof(cl_float)*blockSize*blockSize,NULL); //

clSetKernelArg(Trapsposekernel, 3,sizeof(cl_int), (void*) &Mat_A_width);

clSetKernelArg(Trapsposekernel, 4,sizeof(cl_int), (void*) &Mat_A_height);

clSetKernelArg(Trapsposekernel, 5,sizeof(cl_mem), (void*) &blockSize); //

}

else

{

Trapsposekernel = clCreateKernel(program,"TrapsposeMatrixSample",NULL);

std::cout<<"CreateKernel in TrapsposeMatrixSample"<<std::endl;

if(Trapsposekernel == NULL)

{

std::cerr<<"Faile to Create TrapsposeKernel."<< std::endl;

return NULL;

}

clSetKernelArg(Trapsposekernel, 0,sizeof(cl_mem), (void*) &bufferC);

clSetKernelArg(Trapsposekernel, 1,sizeof(cl_int), (void*) &Mat_A_width);

clSetKernelArg(Trapsposekernel, 2,sizeof(cl_int), (void*) &Mat_A_height);

clSetKernelArg(Trapsposekernel, 3,sizeof(cl_mem), (void*) &bufferA);

}

size_t localtr[2] = {Mat_A_height,Mat_A_width};

#ifdef MATRIXMULLTIPLY

size_t globaltr[2] = {Mat_A_width,Mat_B_height}

#else

size_t globaltr[2] = {Mat_A_height,Mat_A_width};

#endif //MATRIXMULLTIPLY

cl_event dev;

//commandQueue the kernel up for executio across the array

errNum = clEnqueueNDRangeKernel(commandQueue,Trapsposekernel,2,NULL,globaltr,localtr,0,NULL,&dev);

if(errNum !=CL_SUCCESS)

{

std::cerr<<"Faile to Execte the kernel.."<<std::endl;

return NULL;

}

std::cout<<"CommandQueue: "<<commandQueue<<std::endl;

clFinish(commandQueue);

cl_ulong startTime, endTime;

clGetEventProfilingInfo(dev, CL_PROFILING_COMMAND_START,sizeof(cl_ulong), &startTime, NULL);

clGetEventProfilingInfo(dev, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), &endTime, NULL);

cl_ulong kernelExecTimeNs = endTime-startTime;

printf("simple kernal exec time :%8.6f ms\n", kernelExecTimeNs*1e-6 );

errNum = clEnqueueReadBuffer(commandQueue,bufferC,CL_TRUE,0,Mat_A_width*Mat_A_height*sizeof(float),(void*)MatC,0,NULL,NULL);

std::cout<<"====Trapspose MatrixA : "<<Mat_A_height<<"X"<<Mat_A_width<<std::endl;

for(int i =0; i< Mat_A_width*Mat_A_height; i++)

{

if((i != 0)&&(i%Mat_A_width == 0))

{

std::cout<<std::endl;

}

std::cout<<MatC[i]<<"\t";

}

std::cout << std::endl;

#endif

clReleaseProgram(program);

clReleaseCommandQueue(commandQueue);

clReleaseContext(context);

delete[] MatA;

//delete[] MatB;

delete[] MatC;

return 0;

}

kernel代码

/*

*@param outputC output Matrix

*@param widthA is width of intputA in the Matrix A

*@param heightA is height of intputA in the Matrix A

*@param widthB is width of intputB in the Matrix B

*@param heightB is height of intputB in the Matrix B

*@param inputA is width of intputA in the Matrix A

*@param inputB is width of intputA in the Matrix B

*/

__kernel void MulltiplySample(__global float* outputC,

const int widthA,

const int heightA,

const int widthB,

const int heightB,

__global float* inputA,

__global float* inputB)

{

int row = get_global_id(1); // Get global position in Y direction

int col = get_global_id(0); // Get global position in X direction

float sum = 0.0f;

//Calculat result of one element of Matrix C

for( int i = 0; i< widthA; i++)

{

sum += inputA[row * widthA+i] * inputB[i * widthB + col];

}

outputC[row * widthB+col] = sum;

}

/*

*@param TrapsposeMatrix output Matrix

*@param width is InputMatrix width

*@param height is InputMatrix height

*@param InputMatrix is Input Matrix

*/

__kernel void TrapsposeMatrixSample(__global float* TrapsposeMatrix,

const uint width, const uint height,

__global float* InputMatrix)

{

int row = get_global_id(0);

int col = get_global_id(1);

TrapsposeMatrix[row * width +col] = InputMatrix[col * height + row];

}

/*

* Copies a block to the local memory

* and copies back the transpose from local memory to output

* @param output output matrix

* @param input input matrix

* @param block local memory of size blockSize x blockSize

* @param width width of the input matrix

* @param height height of the input matrix

* @param blockSize size of the block

*/

__kernel void MatrixTranspose(__global float * output,

__global float * input,

__local float * block,

const uint width,

const uint height,

const uint blockSize)

{

uint globalIdx = get_global_id(0);

uint globalIdy = get_global_id(1);

uint localIdx = get_local_id(0);

uint localIdy = get_local_id(1);

/* copy from input to local memory */

block[localIdy*blockSize + localIdx] = input[globalIdy*width + globalIdx];

/* wait until the whole block is filled */

barrier(CLK_LOCAL_MEM_FENCE);

uint groupIdx = get_group_id(0);

uint groupIdy = get_group_id(1);

/* calculate the corresponding target location for transpose by inverting x and y values*/

uint targetGlobalIdx = groupIdy*blockSize + localIdy;

uint targetGlobalIdy = groupIdx*blockSize + localIdx;

/* calculate the corresponding raster indices of source and target */

uint targetIndex = targetGlobalIdy*height + targetGlobalIdx;

uint sourceIndex = localIdy * blockSize + localIdx;

output[targetIndex] = block[sourceIndex];

}

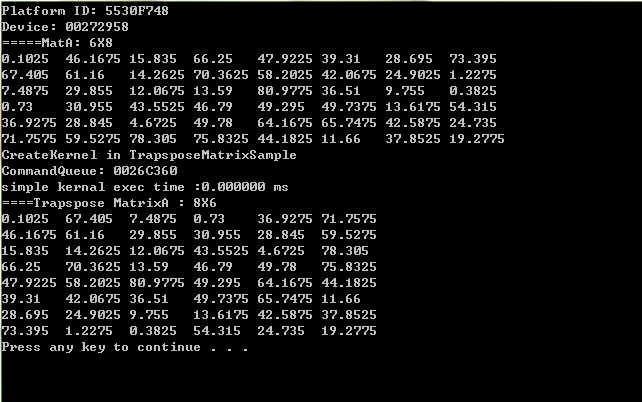

测试结果输出

完整代码二:

maincpp代码

// Matrix.cpp : Defines the entry point for the console application.

#include "stdafx.h"

#include <CL/cl.h>

#include <stdio.h>

#include <stdlib.h>

#include <time.h>

#include <iostream>

#include <fstream>

using namespace std;

#pragma comment (lib,"OpenCL.lib")

#define M 2048

int convertToString(const char *filename, std::string& s)

{

size_t size;

char* str;

std::fstream f(filename, (std::fstream::in | std::fstream::binary));

if(f.is_open())

{

size_t fileSize;

f.seekg(0, std::fstream::end);

size = fileSize = (size_t)f.tellg();

f.seekg(0, std::fstream::beg);

str = new char[size+1];

if(!str)

{

f.close();

return NULL;

}

f.read(str, fileSize);

f.close();

str[size] = '\0';

s = str;

delete[] str;

return 0;

}

printf("Error: Failed to open file %s\n", filename);

return 1;

}

int main(int argc, char* argv[])

{

float *src1=0;

float *src2=0;

src1 = (float*)malloc(M*M*sizeof(float));

src2 = (float*)malloc(M*M*sizeof(float));

int i, j;

srand( (unsigned)time( NULL ) );

for(i = 0; i < M*M; i++)

src1[i] = rand()%50;

for( i=0; i < M; i++)

{

for(j=0; j < M; j++)

{

src2[i*M+j] = src1[j*M+i];

}

}

cl_uint status;

cl_platform_id platform;

status = clGetPlatformIDs( 1, &platform, NULL );

cl_device_id device;

clGetDeviceIDs( platform, CL_DEVICE_TYPE_ALL,1, &device,NULL);

cl_context context = clCreateContext( NULL, 1,&device,NULL, NULL, NULL);

cl_command_queue queue = clCreateCommandQueue( context,device,

CL_QUEUE_PROFILING_ENABLE, NULL );

cl_mem clsrc1 = clCreateBuffer(context,CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

M*M*sizeof(cl_float),src1,NULL );

cl_mem clsrc2 = clCreateBuffer( context,CL_MEM_WRITE_ONLY,

M*M * sizeof(cl_float), NULL, NULL );

const char * filename = "transpose.cl";

std::string sourceStr;

status = convertToString(filename, sourceStr);

const char * source = sourceStr.c_str();

size_t sourceSize[] = { strlen(source) };

cl_program program = clCreateProgramWithSource(context, 1, &source,sourceSize,NULL);

status = clBuildProgram( program, 1, &device, NULL, NULL, NULL );

if(status != 0)

{

printf("clBuild failed:%d\n", status);

char tbuf[0x10000];

clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, 0x10000, tbuf, NULL);

printf("\n%s\n", tbuf);

return -1;

}

cl_kernel kernel = clCreateKernel( program, "matrixTransposeSimple", NULL );

cl_int dimx = M;

cl_int dimy = M;

clSetKernelArg(kernel, 0, sizeof(cl_mem), (void *)&clsrc2);

clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&clsrc1);

clSetKernelArg(kernel, 2, sizeof(cl_int), (void *)&dimx);

clSetKernelArg(kernel, 3, sizeof(cl_int), (void *)&dimy);

//Set local and global workgroup sizes

size_t localws[2] = {16, 16} ;

size_t globalws[2] = {M,M};

cl_event ev;

clEnqueueNDRangeKernel( queue ,kernel,2, 0, globalws, localws,0, NULL, &ev);

clFinish( queue );

cl_ulong startTime, endTime;

clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_START,sizeof(cl_ulong), &startTime, NULL);

clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_END,sizeof(cl_ulong), &endTime, NULL);

cl_ulong kernelExecTimeNs = endTime-startTime;

printf("simple kernal exec time :%8.6f ms\n ", kernelExecTimeNs*1e-6 );

float *op_data = 0;

// copy results from device back to host

op_data = (cl_float *) clEnqueueMapBuffer(queue,clsrc2,CL_TRUE, CL_MAP_READ,0,

M*M*sizeof(cl_float),0, NULL, NULL, NULL );

for(i = 0; i < M*M; i++)

{

if(abs(src2[i] - op_data[i]) > 0.0001)

{

printf("check failed\n");

break;

}

}

if(i == M*M)

printf("check passed\n");

cl_uint blockSize = 16;

kernel = clCreateKernel( program, "matrixTranspose", NULL );

clSetKernelArg(kernel, 0, sizeof(cl_mem), (void *)&clsrc2);

clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&clsrc1);

clSetKernelArg(kernel, 2, sizeof(cl_float)*blockSize*blockSize, NULL);

clSetKernelArg(kernel, 3, sizeof(cl_int), (void *)&dimx);

clSetKernelArg(kernel, 4, sizeof(cl_int), (void *)&dimy);

clSetKernelArg(kernel, 5, sizeof(cl_int), (void *)&blockSize);

clEnqueueNDRangeKernel(queue ,kernel,2, 0, globalws, localws,0, NULL, &ev);

clFinish( queue );

clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), &startTime, NULL);

clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_END,sizeof(cl_ulong), &endTime, NULL);

kernelExecTimeNs = endTime-startTime;

printf("kernal exec time :%8.6f ms\n ", kernelExecTimeNs*1e-6 );

// copy results from device back to host

op_data = (cl_float *) clEnqueueMapBuffer( queue,clsrc2,CL_TRUE,CL_MAP_READ,0,

M*M*sizeof(cl_float),0, NULL, NULL, NULL );

for(i = 0; i < M*M; i++)

{

if(abs(src2[i] - op_data[i]) > 0.0001)

{

printf("check failed\n");

break;

}

}

if(i == M*M)

printf("check passed\n");

if(src1)

free(src1);

if(src2)

free(src2);

clReleaseMemObject(clsrc1);

clReleaseMemObject(clsrc2);

clReleaseProgram(program);

clReleaseCommandQueue(queue);

clReleaseContext(context);

return 0;

}

kernel代码

/*

* Copies a block to the local memory

* and copies back the transpose from local memory to output

* @param output output matrix

* @param input input matrix

* @param block local memory of size blockSize x blockSize

* @param width width of the input matrix

* @param height height of the input matrix

* @param blockSize size of the block

*/

__kernel

void matrixTranspose(__global float * output,

__global float * input,

__local float * block,

const uint width,

const uint height,

const uint blockSize

)

{

uint globalIdx = get_global_id(0);

uint globalIdy = get_global_id(1);

uint localIdx = get_local_id(0);

uint localIdy = get_local_id(1);

/* copy from input to local memory */

block[localIdy*blockSize + localIdx] = input[globalIdy*width + globalIdx];

/* wait until the whole block is filled */

barrier(CLK_LOCAL_MEM_FENCE);

uint groupIdx = get_group_id(0);

uint groupIdy = get_group_id(1);

/* calculate the corresponding target location for transpose by inverting x and y values*/

uint targetGlobalIdx = groupIdy*blockSize + localIdy;

uint targetGlobalIdy = groupIdx*blockSize + localIdx;

/* calculate the corresponding raster indices of source and target */

uint targetIndex = targetGlobalIdy*height + targetGlobalIdx;

uint sourceIndex = localIdy * blockSize + localIdx;

output[targetIndex] = block[sourceIndex];

}

__kernel void matrixTransposeSimple(__global float * output,

__global float * input,

const uint width,

const uint height

)

{

uint gdx = get_global_id(0);

uint gdy = get_global_id(1);

output[gdy*width+gdx] = input[gdx*height+gdy] ;

}

测试结果输出

======================================================== 转载请注明出处:http://blog.csdn.net/songzitea/article/details/12178619

========================================================