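Implementing LeNet-5 in PyTorch and Training It on Fashion-MNIST

This article shows how to implement the classic LeNet-5 convolutional neural network in PyTorch and how to train and evaluate it on the Fashion-MNIST dataset. The code covers the network definition, data loading, the training procedure, and visualization of the results.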

1. Import dependencies

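    import torch
    from torch import nn
    from d2l import torch as d2l

2. Define the LeNet-5 network structure

The network is built with PyTorch's nn.Sequential and consists of convolutional layers, pooling layers, and fully connected layers: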

    class Reshape(nn.Module):
        def forward(self, x):
            return x.view(-1, 1, 28, 28)  # reshape the input to 1x28x28

    net = nn.Sequential(
        Reshape(),
        nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
        nn.AvgPool2d(kernel_size=2, stride=2),
        nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
        nn.AvgPool2d(kernel_size=2, stride=2),
        nn.Flatten(),
        nn.Linear(16*5*5, 120), nn.Sigmoid(),
        nn.Linear(120, 84), nn.Sigmoid(),
        nn.Linear(84, 10)
    )

3. Verify the output shape of each layer
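The shapes can also be worked out by hand before running the network. For a window of size k with padding p and stride s, an input of size n yields floor((n + 2p - k) / s) + 1 outputs along that dimension. The sketch below applies this formula to the convolution and pooling layers defined in section 2. The helper names conv_out and lenet_shapes are hypothetical and introduced only for illustration; they are not part of PyTorch or d2l.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet_shapes(size=28):
    """Trace (channels, height, width) through the conv/pool stack."""
    shapes = []
    size = conv_out(size, kernel=5, padding=2)  # Conv2d(1, 6, 5, padding=2)
    shapes.append((6, size, size))
    size = conv_out(size, kernel=2, stride=2)   # AvgPool2d(2, stride=2)
    shapes.append((6, size, size))
    size = conv_out(size, kernel=5)             # Conv2d(6, 16, 5)
    shapes.append((16, size, size))
    size = conv_out(size, kernel=2, stride=2)   # AvgPool2d(2, stride=2)
    shapes.append((16, size, size))
    return shapes


shapes = lenet_shapes()
channels, height, width = shapes[-1]
flattened = channels * height * width  # input size of the first Linear layer
print(shapes)
print(flattened)
```

The last feature map has 16 channels of size 5x5, which is where the 16*5*5 input size of the first nn.Linear layer comes from.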

Feed a random input through the network and print the output shape of each layer:

    X = torch.rand(size=(1, 1, 28, 28), dtype=torch.float32)
    for layer in net:
        X = layer(X)
        print(f"{layer.__class__.__name__} output shape:\t{X.shape}")

Output:

    Reshape output shape: torch.Size([1, 1, 28, 28])
    Conv2d output shape: torch.Size([1, 6, 28, 28])
    ...
    Linear output shape: torch.Size([1, 10])

4. Load the Fashion-MNIST dataset

Use the d2l library to load the data with a batch size of 256:

    batch_size = 256
    train_data, test_data = d2l.load_data_fashion_mnist(batch_size)

5. Define the evaluation function
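The evaluation function in this section relies on two d2l helpers: d2l.accuracy, assumed here to return the number of correct predictions in a batch, and d2l.Accumulator, which keeps running sums. The counting logic can be sketched in plain PyTorch as follows. The name count_correct is a hypothetical stand-in, not the library's own code, and the logits are hand-made illustrative values.

```python
import torch


def count_correct(y_hat, y):
    """Number of rows in y_hat whose arg-max class equals the label in y."""
    if y_hat.dim() > 1 and y_hat.shape[1] > 1:
        y_hat = y_hat.argmax(dim=1)  # turn class scores into predicted labels
    return float((y_hat.type(y.dtype) == y).sum())


# Hand-made scores for 3 examples and 3 classes (illustrative values only).
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [0.2, 0.3, 3.0],
                       [1.5, 0.4, 0.2]])
labels = torch.tensor([0, 2, 1])

correct = count_correct(logits, labels)
accuracy = correct / labels.numel()
print(correct, accuracy)
```

Summing the correct count and the example count over all batches, then dividing once at the end, gives the dataset accuracy even when the last batch is smaller than the others.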

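The accuracy function below is modified to support GPU computation: each batch is moved to the device that holds the model before the forward pass.

    def evaluate_accuracy(net, data, device=None):
        if isinstance(net, nn.Module):
            net.eval()  # switch to evaluation mode
            if not device:
                # default to the device that holds the model parameters
                device = next(iter(net.parameters())).device
        metric = d2l.Accumulator(2)  # correct predictions, total predictions
        with torch.no_grad():  # gradients are not needed for evaluation
            for X, y in data:
                if isinstance(X, list):
                    X = [x.to(device) for x in X]
                else:
                    X = X.to(device)
                y = y.to(device)
                metric.add(d2l.accuracy(net(X), y), y.numel())
        return metric[0] / metric[1]

6. Train and evaluate the model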
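To make the training step less of a black box, here is a minimal sketch of the kind of loop d2l.train_ch6 is assumed to run: Xavier initialization, cross-entropy loss, and plain SGD. It is not the library's implementation. The function name train_sketch, the tiny stand-in network, and the random data are all hypothetical and chosen so that the example runs without downloading Fashion-MNIST.

```python
import torch
from torch import nn


def train_sketch(net, batches, num_epochs, lr, device):
    """Plain SGD training loop; returns the mean loss of each epoch."""
    def init_weights(m):
        # Xavier initialization for layers that carry weights.
        if isinstance(m, (nn.Linear, nn.Conv2d)):
            nn.init.xavier_uniform_(m.weight)

    net.apply(init_weights)
    net.to(device)
    optimizer = torch.optim.SGD(net.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    epoch_losses = []
    for _ in range(num_epochs):
        net.train()
        total, count = 0.0, 0
        for X, y in batches:
            X, y = X.to(device), y.to(device)
            optimizer.zero_grad()
            loss = loss_fn(net(X), y)
            loss.backward()
            optimizer.step()
            total += loss.item() * y.numel()
            count += y.numel()
        epoch_losses.append(total / count)
    return epoch_losses


# Synthetic stand-in data (random images and labels), not Fashion-MNIST.
torch.manual_seed(0)
batches = [(torch.rand(8, 1, 28, 28), torch.randint(0, 10, (8,)))
           for _ in range(4)]
tiny_net = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
losses = train_sketch(tiny_net, batches, num_epochs=2, lr=0.01,
                      device=torch.device("cpu"))
print(losses)
```

Because the data is random, the printed losses only show that the loop runs; they say nothing about how LeNet-5 performs on the real dataset.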

Call d2l.train_ch6 to train the model for 10 epochs with a learning rate of 0.9:

    lr, num_epochs = 0.9, 10
    d2l.train_ch6(net, train_data, test_data, num_epochs, lr, d2l.try_gpu())

Output:

    loss 0.470, train acc 0.822, test acc 0.805

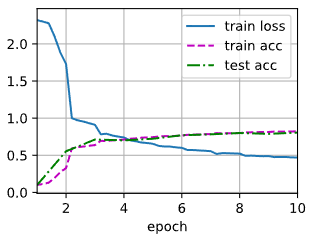

    80458.2 examples/sec on cuda:0

7. Visualize the training results

The loss and accuracy curves are generated automatically during training.