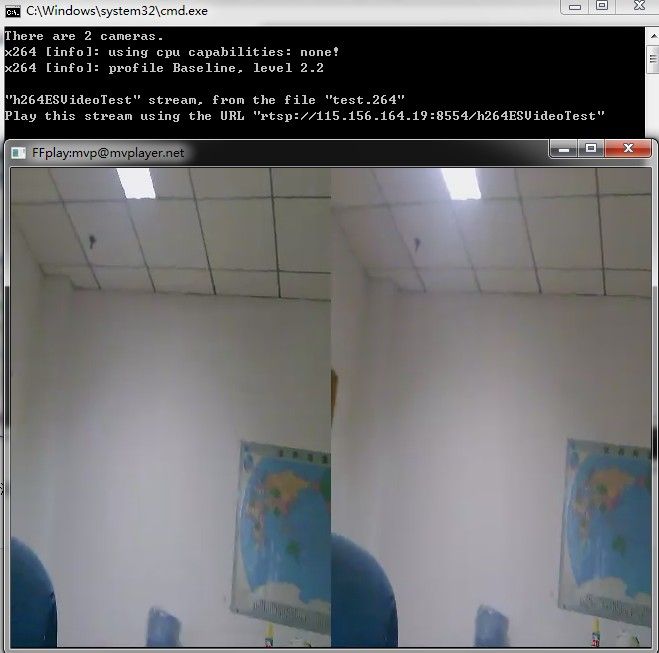

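Camera capture, H.264 encoding, live555 live streaming (2)

This part adds camera capture and H.264 encoding, then streams the result live with live555.

1. Camera capture and H.264 encoding

x264 is reworked to expose an interface that encodes one frame per call. The bitstream is written directly into memory instead of to a file (see the function `int Encode_frame`).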

    /*
     * Filename: encodeapp.h
     * Author: mlj
     * Create date: 2013/1/20
     */
    #ifndef _ENCODEAPP_H_
    #define _ENCODEAPP_H_

    #include <stdint.h>  /* must come before x264.h */
    #include <stdio.h>   /* FILE */
    #include "x264.h"

    #define WRITEOUT_RECONSTRUCTION 1

    typedef int32_t INT32;
    typedef signed char INT8;

    typedef struct _EncodeApp
    {
        x264_t *h;
        x264_picture_t pic;
        x264_param_t param;

        void *outBufs;      /* buffer holding one encoded frame */
        int outBufslength;  /* allocated size of outBufs, in bytes */
        int bitslen;        /* actual size of the encoded frame, in bytes */

        FILE *bits;         /* points to the output bitstream file */
    #ifdef WRITEOUT_RECONSTRUCTION
        FILE *p_rec;
    #endif
    } EncodeApp;

    INT32 EncoderInit(EncodeApp *EncApp, INT8 *config_filename);
    INT32 EncoderEncodeFrame(EncodeApp *EncApp);
    INT32 EncoderDestroy(EncodeApp *EncApp);

    #endif

encodeapp.c


    /*
     * Filename: encodeapp.c
     * Author: mlj
     * Create date: 2013/1/20
     */
    #include <stdlib.h>
    #include <string.h>
    #include <stdint.h>
    #include <stdio.h>
    #include "..\inc\encodeapp.h"

    #pragma comment(lib, "libx264.lib")

    extern int Encode_frame(EncodeApp *EncApp, x264_t *h, x264_picture_t *pic);

    INT32 EncoderEncodeFrame(EncodeApp *EncApp)
    {
        /* Encode frames */
        //EncApp->pic.i_pts = (int64_t)i_frame * (&EncApp->param)->i_fps_den;

        /* Do not force any parameters */
        EncApp->pic.i_type = X264_TYPE_AUTO;
        EncApp->pic.i_qpplus1 = 0;

        /* On return the frame is in EncApp->outBufs, EncApp->bitslen bytes long. */
        if (Encode_frame(EncApp, EncApp->h, &EncApp->pic) < 0)
            return -1;
        //fwrite(EncApp,EncApp->bits);

    #ifdef WRITEOUT_RECONSTRUCTION
        //write reconstruction
    #endif
        return 0;
    }

    INT32 EncoderDestroy(EncodeApp *EncApp)
    {
        x264_picture_clean(&EncApp->pic);
        x264_encoder_close(EncApp->h);

        if (EncApp->outBufs)
            free(EncApp->outBufs);

        /* fclose(NULL) is undefined, so check each handle first. */
        if (EncApp->bits)
            fclose(EncApp->bits);
    #ifdef WRITEOUT_RECONSTRUCTION
        if (EncApp->p_rec)
            fclose(EncApp->p_rec);
    #endif
        return 0;
    }

    /* Rewritten inside x264.c */
    int Encode_frame(EncodeApp *EncApp, x264_t *h, x264_picture_t *pic)
    {
        x264_picture_t pic_out;
        x264_nal_t *nal;
        int i_nal, i;
        int i_file = 0;

        if (x264_encoder_encode(h, &nal, &i_nal, pic, &pic_out) < 0)
        {
            fprintf(stderr, "x264 [error]: x264_encoder_encode failed\n");
            return -1;  /* nal and i_nal are not valid after a failure */
        }

        EncApp->bitslen = 0;
        for (i = 0; i < i_nal; i++)
        {
            int i_size;

            if (mux_buffer_size < nal[i].i_payload * 3 / 2 + 4)
            {
                mux_buffer_size = nal[i].i_payload * 2 + 4;
                x264_free(mux_buffer);
                mux_buffer = x264_malloc(mux_buffer_size);
            }

            i_size = mux_buffer_size;
            x264_nal_encode(mux_buffer, &i_size, 1, &nal[i]);
            //i_file += p_write_nalu( EncApp->bits, mux_buffer, i_size );

            /* Grow the output buffer when this NAL unit does not fit. */
            if (EncApp->outBufslength < (EncApp->bitslen + i_size))
            {
                int new_length = EncApp->bitslen + i_size;
                void *temp = malloc(new_length);
                if (temp == NULL)
                    return -1;
                memcpy(temp, EncApp->outBufs, EncApp->bitslen);
                free(EncApp->outBufs);
                EncApp->outBufs = temp;
                EncApp->outBufslength = new_length;  /* keep the recorded capacity in sync */
            }
            memcpy((unsigned char *)(EncApp->outBufs) + EncApp->bitslen, mux_buffer, i_size);
            EncApp->bitslen += i_size;
        }
        //p_write_nalu( EncApp->bits, (unsigned char*)EncApp->outBufs, EncApp->bitslen );
        //if (i_nal)
        //    p_set_eop( EncApp->bits, &pic_out );
        return i_file;
    }

    INT32 EncoderInit(EncodeApp *EncApp, INT8 *config_filename)
    {
        int argc;
        cli_opt_t opt;
        static char *para[] = {
            "", "-q", "28", "-o", "test.264",
            "G:\\sequence\\walk_vga.yuv", "640x480", "--no-asm"
        };
        char **argv = para;

        argc = sizeof(para) / sizeof(char *);

        x264_param_default(&EncApp->param);

        /* Parse command line */
        if (Parse(argc, argv, &EncApp->param, &opt) < 0)
            return -1;

        //param->i_frame_total = 100;
        //EncApp->param.i_width = 640;
        //EncApp->param.i_height = 480;
        //EncApp->param.rc.i_qp_constant = 28;

        if ((EncApp->h = x264_encoder_open(&EncApp->param)) == NULL)
        {
            fprintf(stderr, "x264 [error]: x264_encoder_open failed\n");
            return -1;
        }

        /* Create a new pic */
        x264_picture_alloc(&EncApp->pic, X264_CSP_I420,
                           EncApp->param.i_width, EncApp->param.i_height);

        /* Start with a 1 MiB buffer for one encoded frame. */
        EncApp->outBufs = malloc(1024 * 1024);
        EncApp->outBufslength = 1024 * 1024;

        EncApp->bits = 0;
    #ifdef WRITEOUT_RECONSTRUCTION
        EncApp->p_rec = NULL;
    #endif

        EncApp->bits = fopen("test_vc.264", "wb");
    #ifdef WRITEOUT_RECONSTRUCTION
        EncApp->p_rec = fopen("test_rec_vc.yuv", "wb");
    #endif
        if (0 == EncApp->bits)
        {
            printf("Can't open output files!\n");
            return -1;
        }
        return 0;
    }
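The buffer handling in `Encode_frame` can be looked at on its own. The following is a minimal sketch, not the author's code: `FrameBuffer` and its helper functions are hypothetical names, with fields that correspond to `outBufs`, `outBufslength` and `bitslen`. It assumes geometric growth through `realloc`, so that a run of large frames does not cause a reallocation for every NAL unit.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>

// Hypothetical growable byte buffer for one encoded frame.
struct FrameBuffer {
    unsigned char *data = nullptr;  // corresponds to outBufs
    std::size_t capacity = 0;       // corresponds to outBufslength
    std::size_t length = 0;         // corresponds to bitslen
};

// Begin a new frame; the allocation is kept for reuse.
inline void frameBufferReset(FrameBuffer &fb) { fb.length = 0; }

// Append n bytes, doubling the capacity until the data fits.
// Returns false on allocation failure and leaves the contents intact.
inline bool frameBufferAppend(FrameBuffer &fb, const void *src, std::size_t n) {
    if (fb.length + n > fb.capacity) {
        std::size_t newCap = fb.capacity ? fb.capacity : 4096;
        while (newCap < fb.length + n) newCap *= 2;
        void *p = std::realloc(fb.data, newCap);
        if (!p) return false;
        fb.data = static_cast<unsigned char *>(p);
        fb.capacity = newCap;
    }
    std::memcpy(fb.data + fb.length, src, n);
    fb.length += n;
    return true;
}

// Release the allocation and return the buffer to its empty state.
inline void frameBufferFree(FrameBuffer &fb) {
    std::free(fb.data);
    fb = FrameBuffer{};
}
```

In this sketch the encoder loop would call `frameBufferReset` once per frame and `frameBufferAppend` once per NAL unit. The starting size of 4096 bytes is an arbitrary choice for illustration.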

Source code for camera capture and H.264 encoding: http://download.csdn.net/user/mlj318

The result is not very fast: at 640x480, capture plus encoding reaches only about 10 fps.
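The post does not say how the frame rate was measured. One possible approach, sketched here with hypothetical names (`FpsMeter`, `nowSeconds`), is to record a timestamp after each encoded frame and divide the number of frame intervals by the elapsed time.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical helper: average frame rate over all frames recorded so far.
class FpsMeter {
public:
    // Record that a frame finished at time t (seconds on a monotonic clock).
    void addFrame(double t) {
        if (count_ == 0) first_ = t;
        last_ = t;
        ++count_;
    }

    // Frames per second over the recorded interval; 0 until two frames are seen.
    double fps() const {
        if (count_ < 2 || last_ <= first_) return 0.0;
        return (count_ - 1) / (last_ - first_);
    }

private:
    int count_ = 0;
    double first_ = 0.0;
    double last_ = 0.0;
};

// Current time in seconds from a monotonic clock, suitable for addFrame().
inline double nowSeconds() {
    using clock = std::chrono::steady_clock;
    return std::chrono::duration<double>(clock::now().time_since_epoch()).count();
}
```

Calling `meter.addFrame(nowSeconds())` after each `EncoderEncodeFrame` call would give a running average. Timing capture, color conversion and encoding separately in the same way would show which stage dominates.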

Configuration: the OpenCV libraries and libx264.lib are required.

Include directories: G:\workspace\video4windows\CameraDS and 264\inc;H:\TDDOWNLOAD\OPEN_CV\opencv\build\include\opencv2;H:\TDDOWNLOAD\OPEN_CV\opencv\build\include\opencv;H:\TDDOWNLOAD\OPEN_CV\opencv\build\include;

Library directories: G:\workspace\video4windows\CameraDS and code\lib;H:\TDDOWNLOAD\OPEN_CV\opencv\build\x86\vc10\lib;

Linker, Additional Library Directories: G:\workspace\video4windows\CameraDS and 264\lib;

Linker, Additional Dependencies:

opencv_calib3d243d.lib

opencv_contrib243d.lib

opencv_core243d.lib

opencv_features2d243d.lib

opencv_flann243d.lib

opencv_gpu243d.lib

opencv_haartraining_engined.lib

opencv_highgui243d.lib

opencv_imgproc243d.lib

opencv_legacy243d.lib

opencv_ml243d.lib

opencv_nonfree243d.lib

opencv_objdetect243d.lib

opencv_photo243d.lib

opencv_stitching243d.lib

opencv_ts243d.lib

opencv_video243d.lib

opencv_videostab243d.lib

libx264.lib

2. Adding live555 live streaming

    class Cameras
    {
    public:
        void Init();
        void GetNextFrame();
        void Destory();

    public:
        CCameraDS camera1;
        CCameraDS camera2;
        EncodeApp encodeapp;
        IplImage *pFrame1;
        IplImage *pFrame2;
        unsigned char *RGB1;
        unsigned char *RGB2;
        unsigned char *YUV1;
        unsigned char *YUV2;
        unsigned char *YUV_merge;
    };

    void Cameras::Init()
    {
        // 1. If a window showing the image already exists, do not drive the
        //    cameras again; they would be reported as already in use anyway.
        if (IsWindowVisible(FindWindow(NULL, g_szTitle)))
        {
            exit(-1);
        }

        // Only query the number of cameras
        int m_iCamCount = CCameraDS::CameraCount();
        printf("There are %d cameras.\n", m_iCamCount);

        if (m_iCamCount == 0)
        {
            fprintf(stderr, "No cameras.\n");
            exit(-1);
        }

        // Open the first camera: no property dialog, width and height set in code
        if (!camera1.OpenCamera(0, false, WIDTH, HEIGHT))
        {
            fprintf(stderr, "Can not open camera1.\n");
            exit(-1);
        }
        // Open the second camera with the same settings
        if (!camera2.OpenCamera(1, false, WIDTH, HEIGHT))
        {
            fprintf(stderr, "Can not open camera2.\n");
            exit(-1);
        }

        cvNamedWindow("camera1");
        cvNamedWindow("camera2");

        EncoderInit(&encodeapp, NULL);

        pFrame1 = camera1.QueryFrame();
        pFrame2 = camera2.QueryFrame();

        // Packed RGB needs 3 bytes per pixel, planar YUV 4:2:0 needs 1.5
        RGB1 = (unsigned char *)malloc(pFrame1->height * pFrame1->width * 3);
        YUV1 = (unsigned char *)malloc(pFrame1->height * pFrame1->width * 3 / 2);
        RGB2 = (unsigned char *)malloc(pFrame2->height * pFrame2->width * 3);
        YUV2 = (unsigned char *)malloc(pFrame2->height * pFrame2->width * 3 / 2);
        YUV_merge = (unsigned char *)malloc(pFrame2->height * pFrame2->width * 3 / 2);
    }

    void Cameras::GetNextFrame()
    {
        pFrame1 = camera1.QueryFrame();
        pFrame2 = camera2.QueryFrame();

        cvShowImage("camera1", pFrame1);
        cvShowImage("camera2", pFrame2);

        // Repack the BGR image rows (widthStep bytes apart) into tight RGB buffers.
        // Both cameras were opened with the same WIDTH and HEIGHT.
        for (int i = 0; i < pFrame1->height; i++)
        {
            for (int j = 0; j < pFrame1->width; j++)
            {
                RGB1[(i * pFrame1->width + j) * 3]     = pFrame1->imageData[i * pFrame1->widthStep + j * 3 + 2];
                RGB1[(i * pFrame1->width + j) * 3 + 1] = pFrame1->imageData[i * pFrame1->widthStep + j * 3 + 1];
                RGB1[(i * pFrame1->width + j) * 3 + 2] = pFrame1->imageData[i * pFrame1->widthStep + j * 3];

                RGB2[(i * pFrame2->width + j) * 3]     = pFrame2->imageData[i * pFrame2->widthStep + j * 3 + 2];
                RGB2[(i * pFrame2->width + j) * 3 + 1] = pFrame2->imageData[i * pFrame2->widthStep + j * 3 + 1];
                RGB2[(i * pFrame2->width + j) * 3 + 2] = pFrame2->imageData[i * pFrame2->widthStep + j * 3];
            }
        }

        Convert(RGB1, YUV1, pFrame1->width, pFrame1->height);
        Convert(RGB2, YUV2, pFrame2->width, pFrame2->height);
        mergeleftrigth(YUV_merge, YUV1, YUV2, pFrame2->width, pFrame2->height);

        // Copy the merged Y, U and V planes into the x264 input picture
        unsigned char *p1, *p2;
        p1 = YUV_merge;
        p2 = encodeapp.pic.img.plane[0];
        for (int i = 0; i < pFrame1->height; i++)
        {
            memcpy(p2, p1, pFrame1->width);
            p1 += pFrame1->width;
            p2 += WIDTH;
        }
        p2 = encodeapp.pic.img.plane[1];
        for (int i = 0; i < pFrame1->height / 2; i++)
        {
            memcpy(p2, p1, pFrame1->width / 2);
            p1 += pFrame1->width / 2;
            p2 += WIDTH / 2;
        }
        p2 = encodeapp.pic.img.plane[2];
        for (int i = 0; i < pFrame1->height / 2; i++)
        {
            memcpy(p2, p1, pFrame1->width / 2);
            p1 += pFrame1->width / 2;
            p2 += WIDTH / 2;
        }

        EncoderEncodeFrame(&encodeapp);
    }

    void Cameras::Destory()
    {
        free(RGB1);
        free(RGB2);
        free(YUV1);
        free(YUV2);
        free(YUV_merge);
        camera1.CloseCamera();
        camera2.CloseCamera();
        cvDestroyWindow("camera1");
        cvDestroyWindow("camera2");
        EncoderDestroy(&encodeapp);
    }
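The `Convert` helper used above is not shown in the post. As an illustration of what such a step might do, the sketch below converts packed BGR rows straight to planar I420, which would also make the intermediate RGB copy unnecessary. The function name `bgrToI420`, the BT.601 limited-range integer coefficients and the choice to take chroma from the top-left pixel of each 2x2 block are all assumptions, not the author's implementation.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for Convert(): packed 8-bit BGR to planar I420.
// srcStride is the number of bytes per source row (IplImage::widthStep).
// Output layout: Y plane (width*height), then U, then V (each a quarter of that).
// width and height are assumed to be even.
inline void bgrToI420(const std::uint8_t *bgr, int srcStride, int width, int height,
                      std::uint8_t *yuv) {
    assert(width % 2 == 0 && height % 2 == 0);
    std::uint8_t *yPlane = yuv;
    std::uint8_t *uPlane = yuv + width * height;
    std::uint8_t *vPlane = uPlane + (width / 2) * (height / 2);

    for (int row = 0; row < height; ++row) {
        const std::uint8_t *src = bgr + row * srcStride;
        for (int col = 0; col < width; ++col) {
            const int b = src[col * 3 + 0];
            const int g = src[col * 3 + 1];
            const int r = src[col * 3 + 2];

            // BT.601 limited range: Y in [16, 235]
            yPlane[row * width + col] =
                static_cast<std::uint8_t>(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);

            // One chroma sample per 2x2 block, taken from its top-left pixel.
            // The +32768 folds the +128 offset into the sum so it stays non-negative.
            if (row % 2 == 0 && col % 2 == 0) {
                const int idx = (row / 2) * (width / 2) + col / 2;
                uPlane[idx] = static_cast<std::uint8_t>(
                    (-38 * r - 74 * g + 112 * b + 128 + 32768) >> 8);
                vPlane[idx] = static_cast<std::uint8_t>(
                    (112 * r - 94 * g - 18 * b + 128 + 32768) >> 8);
            }
        }
    }
}
```

Averaging the four pixels of each block would give smoother chroma at the cost of extra arithmetic. Since this per-pixel loop runs for every frame of both cameras, it is a plausible place to look when profiling the frame rate.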

    void H264FramedLiveSource::doGetNextFrame()
    {
        //if( filesize(fp) > fMaxSize)
        //    fFrameSize = fread(fTo,1,fMaxSize,fp);
        //else
        //{
        //    fFrameSize = fread(fTo,1,filesize(fp),fp);
        //    fseek(fp, 0, SEEK_SET);
        //}
        //fFrameSize = fMaxSize;

        // Capture from both cameras and encode one frame
        TwoWayCamera.GetNextFrame();

        fFrameSize = TwoWayCamera.encodeapp.bitslen;
        if (fFrameSize > fMaxSize)
        {
            // The frame does not fit into the sink's buffer: deliver what fits
            fNumTruncatedBytes = fFrameSize - fMaxSize;
            fFrameSize = fMaxSize;
        }
        else
        {
            fNumTruncatedBytes = 0;
        }
        memmove(fTo, TwoWayCamera.encodeapp.outBufs, fFrameSize);

        // Schedule afterGetting to run after a delay of 0 seconds
        nextTask() = envir().taskScheduler().scheduleDelayedTask(
            0, (TaskFunc*)FramedSource::afterGetting, this);
        return;
    }
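The truncation bookkeeping in `doGetNextFrame` can be isolated into a small function that is testable without live555. This is a sketch under that assumption: `deliverFrame` and `DeliveryResult` are hypothetical names, and the two result fields stand for `fFrameSize` and `fNumTruncatedBytes`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical result type mirroring the two FramedSource members.
struct DeliveryResult {
    std::size_t frameSize;          // bytes copied to the sink (fFrameSize)
    std::size_t numTruncatedBytes;  // bytes that did not fit (fNumTruncatedBytes)
};

// Copy at most maxSize bytes of an encoded frame into the sink buffer `to`
// and report how many bytes had to be dropped.
inline DeliveryResult deliverFrame(unsigned char *to, std::size_t maxSize,
                                   const unsigned char *frame, std::size_t frameLen) {
    DeliveryResult r;
    if (frameLen > maxSize) {
        r.frameSize = maxSize;
        r.numTruncatedBytes = frameLen - maxSize;
    } else {
        r.frameSize = frameLen;
        r.numTruncatedBytes = 0;
    }
    std::memcpy(to, frame, r.frameSize);
    return r;
}
```

A truncated H.264 frame generally cannot be decoded correctly, so a nonzero `numTruncatedBytes` is worth logging. In live555 the size of the sink's buffer is commonly raised by setting `OutPacketBuffer::maxSize` before the sink is created; whether the author's project does this is not stated in the post.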

Source code: http://download.csdn.net/user/mlj318

At 640x480 this reaches only 3.5 fps.

Configuration: the OpenCV libraries and libx264.lib are required.

Include directories: H:\TDDOWNLOAD\OPEN_CV\opencv\build\include\opencv2;H:\TDDOWNLOAD\OPEN_CV\opencv\build\include\opencv;H:\TDDOWNLOAD\OPEN_CV\opencv\build\include;G:\workspace\avs\live555test -send\live555test\inc;G:\workspace\avs\live555test\live555test\BasicUsageEnvironment\include;G:\workspace\avs\live555test\live555test\UsageEnvironment\include;G:\workspace\avs\live555test\live555test\liveMedia\include;G:\workspace\avs\live555test\live555test\groupsock\include;

Library directories: G:\workspace\avs\live555test\live555test\lib;H:\TDDOWNLOAD\OPEN_CV\opencv\build\x86\vc10\lib;

Linker, Additional Library Directories: G:\workspace\video4windows\CameraDS and 264\lib;

Linker, Additional Dependencies:

opencv_calib3d243d.lib

opencv_contrib243d.lib

opencv_core243d.lib

opencv_features2d243d.lib

opencv_flann243d.lib

opencv_gpu243d.lib

opencv_haartraining_engined.lib

opencv_highgui243d.lib

opencv_imgproc243d.lib

opencv_legacy243d.lib

opencv_ml243d.lib

opencv_nonfree243d.lib

opencv_objdetect243d.lib

opencv_photo243d.lib

opencv_stitching243d.lib

opencv_ts243d.lib

opencv_video243d.lib

opencv_videostab243d.lib

libx264.lib

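Errors encountered:

1>LIBCMTD.lib(sprintf.obj) : error LNK2005: _sprintf already defined in MSVCRTD.lib(MSVCR100D.dll)

1>LIBCMTD.lib(crt0dat.obj) : error LNK2005: _exit already defined in MSVCRTD.lib(MSVCR100D.dll)

1>LIBCMTD.lib(crt0dat.obj) : error LNK2005: __exit already defined in MSVCRTD.lib(MSVCR100D.dll)

LIBCMTD.lib (the static debug C runtime, /MTd) conflicts with MSVCRTD.lib (the DLL debug C runtime, /MDd). This usually means that some of the linked libraries were built against one runtime and some against the other. Adding LIBCMTD.lib under Linker, Input, Ignore Specific Default Libraries (the /NODEFAULTLIB:LIBCMTD.lib linker option) resolves it.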