Deploying Flume on Hadoop CDH5

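The Hadoop ecosystem includes a log collection system, Flume. Its next-generation version is called flume-ng, and the older version is called flume-og. This post tries out flume-ng 1.4 on CDH5.0, working on the CDH5 cluster that was upgraded in an earlier post.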

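1 Installing Flume

flume-ng consists of three packages:

1 flume-ng: everything needed to run Flume

2 flume-ng-agent: scripts that manage starting and stopping the Flume service

3 flume-ng-doc: the Flume documentation

Install Flume on U-3, U-4, and U-5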

apt-get install flume-ng
apt-get install flume-ng-agent
apt-get install flume-ng-doc

2 Configuring Flume

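After installation, Flume's configuration files are in the /etc/flume-ng/conf directory. The packages already ship several template configuration files; copy them to create working copies: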

cp /etc/flume-ng/conf/flume-conf.properties.template /etc/flume-ng/conf/flume.conf
cp /etc/flume-ng/conf/flume-env.sh.template /etc/flume-ng/conf/flume-env.sh

3 Verifying that Flume is installed

flume-ng help

4 Starting the Flume service

# There are two ways to start Flume
# 1 service flume-ng-agent <start | stop | restart>
# 2 flume-ng agent -c /etc/flume-ng/conf -f /etc/flume-ng/conf/flume.conf -n agent

The second method is recommended when debugging.
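For debugging with the second method, it can help to echo the agent's log output to the terminal instead of the log file. The sketch below wraps the foreground start in a small function. The function name `start_flume_foreground` is hypothetical; the `-Dflume.root.logger=INFO,console` option comes from the Flume user guide, and the assumption here is that the packaged log4j configuration honors it.

```shell
#!/bin/sh
# Start a Flume agent in the foreground with its log output on the console.
# $1 = agent config file, $2 = agent name (defaults to "agent").
# The function name is illustrative, not part of Flume.
start_flume_foreground() {
    conf_file="$1"
    agent_name="${2:-agent}"
    if [ ! -f "$conf_file" ]; then
        echo "config file not found: $conf_file" >&2
        return 1
    fi
    if ! command -v flume-ng >/dev/null 2>&1; then
        echo "flume-ng is not on PATH" >&2
        return 2
    fi
    # Assumption: the installed log4j.properties reads flume.root.logger.
    flume-ng agent -n "$agent_name" -c /etc/flume-ng/conf -f "$conf_file" \
        -Dflume.root.logger=INFO,console
}
```

Example: `start_flume_foreground /etc/flume-ng/conf/flume.conf agent`. With console logging enabled, output that would otherwise go to the log file appears in the terminal, so this suits short debugging sessions rather than long-running services.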

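5 Three test scenarios

1 Collected logs are written to Flume's own log by default

1 On U-3, edit the configuration file /etc/flume-ng/conf/flume.conf so that it contains the following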

agent.sources = r1
agent.channels = c1
agent.sinks = k1
agent.sources.r1.type = avro
agent.sources.r1.bind = 0.0.0.0
agent.sources.r1.port = 5901
agent.sources.r1.channels = c1
agent.sinks.k1.type = logger
agent.sinks.k1.channel = c1
agent.channels.c1.type = memory
agent.channels.c1.capacity = 10
agent.channels.c1.transactionCapacity = 10
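A common mistake in configurations like this is a source or sink that points at a channel name that was never declared. The sketch below is a small sanity check under that assumption; `check_flume_channels` is a hypothetical helper, not a Flume tool, and it only understands the simple `key = value` layout used in this post.

```shell
#!/bin/sh
# Check that every channel referenced by a source or sink is declared in
# "<agent>.channels". Prints each undeclared name; returns 1 if any exist.
# $1 = config file, $2 = agent name (defaults to "agent").
check_flume_channels() {
    conf_file="$1"
    agent_name="${2:-agent}"
    awk -F'=' -v a="$agent_name" '
        # Strip leading and trailing blanks from a string.
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        /^[ \t]*#/ { next }   # skip comment lines
        NF < 2     { next }   # skip lines without "="
        {
            key = trim($1); val = trim($2)
            if (key == a ".channels") {
                n = split(val, names, /[ \t]+/)
                for (i = 1; i <= n; i++) declared[names[i]] = 1
            } else if (key ~ ("^" a "\\.sources\\.[^.]+\\.channels$") ||
                       key ~ ("^" a "\\.sinks\\.[^.]+\\.channel$")) {
                n = split(val, names, /[ \t]+/)
                for (i = 1; i <= n; i++) used[names[i]] = key
            }
        }
        END {
            bad = 0
            for (c in used) if (!(c in declared)) {
                print "undeclared channel " c " referenced by " used[c]
                bad = 1
            }
            exit bad
        }
    ' "$conf_file"
}
```

Example: `check_flume_channels /etc/flume-ng/conf/flume.conf agent` prints nothing and returns 0 for the configuration above, because both `r1` and `k1` refer to the declared channel `c1`.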

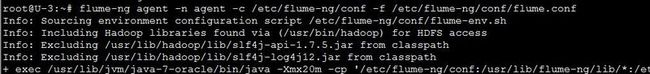

2 Start the Flume service on U-3

flume-ng agent -n agent -c /etc/flume-ng/conf -f /etc/flume-ng/conf/flume.conf

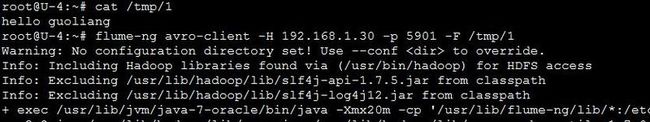

3 On U-4, use Flume to simulate a data sender

flume-ng avro-client -H 192.168.1.30 -p 5901 -F /tmp/1
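The post does not show how the input file /tmp/1 was created. As a sketch, a few numbered lines are enough for a test; the helper name `make_sample_log` and the line text are made up for illustration. To my understanding, `avro-client -F` sends each line of the file as a separate event.

```shell
#!/bin/sh
# Write N numbered sample lines to a file, one intended event per line.
# $1 = output file, $2 = number of lines (defaults to 5).
# Helper name and line contents are illustrative only.
make_sample_log() {
    out_file="$1"
    count="${2:-5}"
    : > "$out_file"          # create or truncate the file
    i=1
    while [ "$i" -le "$count" ]; do
        echo "sample log line $i" >> "$out_file"
        i=$((i + 1))
    done
}
```

Example: run `make_sample_log /tmp/1 5` on U-4 before the avro-client command above. Keeping the file small stays within the memory channel's capacity of 10 events configured on U-3.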

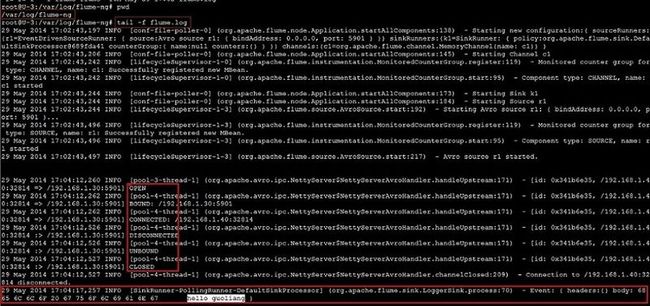

4 View the collected logs on U-3

tail -f /var/log/flume-ng/flume.log
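Besides tailing the file, it can be handy to count how many events the logger sink has recorded. The sketch below assumes the logger sink writes lines containing the text `Event: {`, which matches the output I have seen from it but may differ between versions; `count_logged_events` is a hypothetical helper.

```shell
#!/bin/sh
# Count lines in the Flume log that look like logger-sink events.
# $1 = log file (defaults to the path used in this post).
# Assumption: the logger sink prefixes each event with "Event: {".
count_logged_events() {
    log_file="${1:-/var/log/flume-ng/flume.log}"
    if [ ! -f "$log_file" ]; then
        echo "log file not found: $log_file" >&2
        return 1
    fi
    # grep -c prints 0 but exits non-zero when nothing matches, so mask it.
    grep -c 'Event: {' "$log_file" || true
}
```

Example: `count_logged_events` after sending the test file should report one event per line sent, provided the assumption about the log format holds.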

2 Storing collected logs in a specified directory

1 On U-3, create the configuration file /etc/flume-ng/conf/flume2.conf with the following content

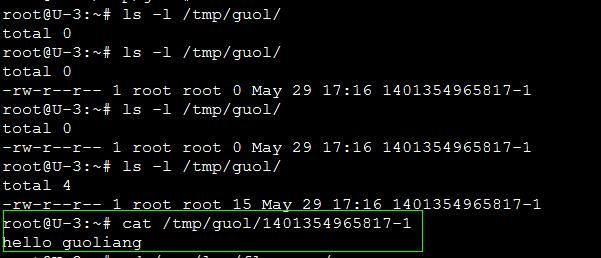

agent.sources = r1
agent.channels = c1
agent.sinks = k1
agent.sources.r1.type = avro
agent.sources.r1.bind = 0.0.0.0
agent.sources.r1.port = 5901
agent.sources.r1.channels = c1
agent.sinks.k1.type = file_roll
agent.sinks.k1.sink.directory = /tmp/guol
agent.sinks.k1.sink.rollInterval = 0
agent.sinks.k1.channel = c1
agent.channels.c1.type = memory
agent.channels.c1.capacity = 10
agent.channels.c1.transactionCapacity = 10

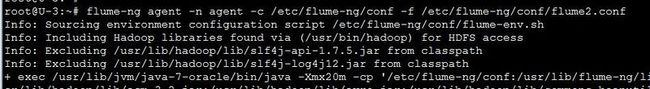

2 Start the Flume service on U-3

flume-ng agent -n agent -c /etc/flume-ng/conf -f /etc/flume-ng/conf/flume2.conf

3 On U-4, use Flume to simulate a data sender

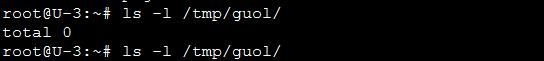

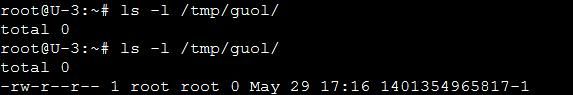

flume-ng avro-client -H 192.168.1.30 -p 5901 -F /tmp/1

4 View the collected logs on U-3

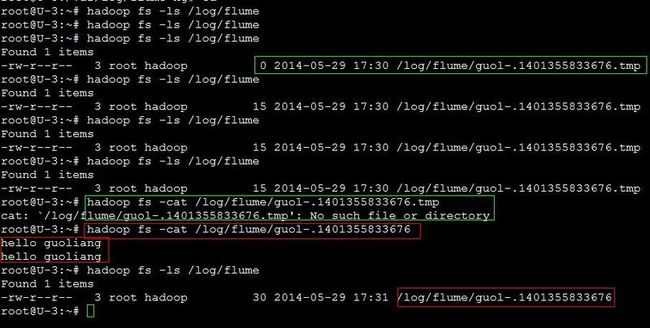

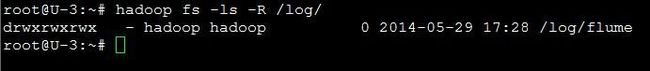
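To check the result of this scenario from the command line, one option is to count the lines that the file_roll sink has written under its output directory. This is a minimal sketch; `count_collected_lines` is a hypothetical helper, and the default directory is the `/tmp/guol` value from the configuration above.

```shell
#!/bin/sh
# Count the total number of lines in all regular files under a directory.
# $1 = directory written by the file_roll sink (defaults to /tmp/guol).
count_collected_lines() {
    dir="${1:-/tmp/guol}"
    if [ ! -d "$dir" ]; then
        echo "no such directory: $dir" >&2
        return 1
    fi
    # Concatenate every file and count lines; prints 0 for an empty directory.
    find "$dir" -type f -exec cat {} + | wc -l | tr -d ' '
}
```

Example: `count_collected_lines /tmp/guol` on U-3. Because `sink.rollInterval = 0` disables time-based rolling, the events are expected to land in a single file, but the count works either way.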

3 Storing collected logs on HDFS

1 On U-3, create the configuration file /etc/flume-ng/conf/flume3.conf with the following content

agent.sources = r1
agent.channels = c1
agent.sinks = k1
agent.sources.r1.type = avro
agent.sources.r1.bind = 0.0.0.0
agent.sources.r1.port = 5901
agent.sources.r1.channels = c1
agent.sinks.k1.type = hdfs
agent.sinks.k1.hdfs.path = hdfs://mycluster/log/flume/
agent.sinks.k1.hdfs.filePrefix = guol-
agent.sinks.k1.hdfs.fileType = DataStream
agent.sinks.k1.hdfs.rollInterval = 0
agent.sinks.k1.channel = c1
agent.channels.c1.type = memory
agent.channels.c1.capacity = 10
agent.channels.c1.transactionCapacity = 10

2 Start the Flume service on U-3

flume-ng agent -n agent -c /etc/flume-ng/conf -f /etc/flume-ng/conf/flume3.conf

3 On U-4, use Flume to simulate a data sender

flume-ng avro-client -H 192.168.1.30 -p 5901 -F /tmp/1

Note: I ran the command above twice in a row, so the collected logs show two records.

4 View the collected logs on U-3
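The files written by the HDFS sink can be inspected with the standard `hdfs dfs` commands. The sketch below lists the target directory and prints the files that carry the configured prefix. It assumes `mycluster` is the default filesystem, so that `/log/flume` resolves to the path in the configuration; `show_hdfs_logs` is a hypothetical helper.

```shell
#!/bin/sh
# List the Flume output directory on HDFS and print the collected files.
# $1 = HDFS directory (defaults to /log/flume)
# $2 = file prefix from hdfs.filePrefix (defaults to guol-)
show_hdfs_logs() {
    hdfs_dir="${1:-/log/flume}"
    prefix="${2:-guol-}"
    if ! command -v hdfs >/dev/null 2>&1; then
        echo "hdfs command not found" >&2
        return 2
    fi
    # The glob is quoted so that the HDFS shell expands it, not the local shell.
    hdfs dfs -ls "$hdfs_dir" && hdfs dfs -cat "$hdfs_dir/$prefix*"
}
```

Example: `show_hdfs_logs /log/flume guol-` on U-3. Files that the sink is still writing normally carry a `.tmp` suffix until they are closed, so their contents may be incomplete.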