Nutch 1.7 Source Code Revisited, Part 4: The Nutch Inject Process in Detail (Continued)

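As the previous section's walkthrough showed, the seed URL file we supplied for the cold start has been successfully converted into (url, datum) pairs.

The result is a binary file; interested readers can inspect its contents themselves.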

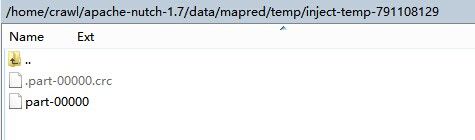

This is a screenshot of the generated binary file.

-----------------------------------------------------------------------------------------------------------

The next piece of code is:

JobConf mergeJob = CrawlDb.createJob(getConf(), crawlDb);

FileInputFormat.addInputPath(mergeJob, tempDir);

mergeJob.setReducerClass(InjectReducer.class);

JobClient.runJob(mergeJob);

CrawlDb.install(mergeJob, crawlDb);

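This configures and runs a Hadoop job that merges the freshly injected records into the crawldb. Its reducer is InjectReducer.class, which is examined further down.

------------------- First, though, look at CrawlDb.createJob(getConf(), crawlDb);

The code is as follows: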

public static JobConf createJob(Configuration config, Path crawlDb)

throws IOException {

Path newCrawlDb =

new Path(crawlDb,

Integer.toString(new Random().nextInt(Integer.MAX_VALUE)));

JobConf job = new NutchJob(config);

job.setJobName("crawldb " + crawlDb);

Path current = new Path(crawlDb, CURRENT_NAME);

if (FileSystem.get(job).exists(current)) {

FileInputFormat.addInputPath(job, current);

}

job.setInputFormat(SequenceFileInputFormat.class);

job.setMapperClass(CrawlDbFilter.class);

job.setReducerClass(CrawlDbReducer.class);

FileOutputFormat.setOutputPath(job, newCrawlDb);

job.setOutputFormat(MapFileOutputFormat.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(CrawlDatum.class);

// https://issues.apache.org/jira/browse/NUTCH-1110

job.setBoolean("mapreduce.fileoutputcommitter.marksuccessfuljobs", false);

return job;

}

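As you can see, this sets a number of parameters on the Hadoop job; I won't go through each of them.

Two points to note:

1. If a current folder already exists from an earlier run, it is added to the input paths as well.

2. createJob sets the reducer to CrawlDbReducer, but the inject code then changes it to InjectReducer.class.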
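To make the first point concrete, here is a minimal plain-Java sketch of what having two inputs means for the reducer: records for the same URL from the existing current folder and from the newly injected data end up in one group. It uses no Hadoop or Nutch classes; the class name, the URLs, and the status labels are all made up for illustration, and in a real job the order of values within a group is not something to rely on.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

// Plain-Java illustration only: no Hadoop or Nutch classes are used.
class MergeInputsSketch {

    // Groups status labels by URL, mimicking how all values for one key
    // are handed to a single reduce call.
    static SortedMap<String, List<String>> groupByUrl(Map<String, String> current,
                                                      Map<String, String> injected) {
        SortedMap<String, List<String>> grouped = new TreeMap<>();
        current.forEach((url, status) ->
                grouped.computeIfAbsent(url, k -> new ArrayList<>()).add(status));
        injected.forEach((url, status) ->
                grouped.computeIfAbsent(url, k -> new ArrayList<>()).add(status));
        return grouped;
    }

    // Builds two tiny hypothetical inputs and returns the grouped view as text.
    static String demo() {
        Map<String, String> current = new HashMap<>();
        current.put("http://a.example/", "db_fetched");   // already in the crawldb
        Map<String, String> injected = new HashMap<>();
        injected.put("http://a.example/", "injected");     // injected again
        injected.put("http://b.example/", "injected");     // brand new URL
        return groupByUrl(current, injected).toString();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

In this toy run the first URL arrives with both an old and an injected record, which is exactly the case the reducer has to resolve.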

---------------------------- OK, first look at the map class

mapper-class -------- class org.apache.nutch.crawl.CrawlDbFilter

The code is as follows:

public void map(Text key, CrawlDatum value,

OutputCollector<Text, CrawlDatum> output,

Reporter reporter) throws IOException {

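// Note: the key and value here may come from the directory generated from our own seed URLs,

// or from the current folder produced by the previous crawl round.

String url = key.toString(); // get the URL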

// https://issues.apache.org/jira/browse/NUTCH-1101 check status first, cheaper than normalizing or filtering

if (url404Purging && CrawlDatum.STATUS_DB_GONE == value.getStatus()) {

url = null;

} // if the page was not found in an earlier round (a 404, stored as STATUS_DB_GONE) and 404 purging is enabled, drop this URL

if (url != null && urlNormalizers) {

try {

url = normalizers.normalize(url, scope); // normalize the url

} catch (Exception e) {

LOG.warn("Skipping " + url + ":" + e);

url = null;

}

} // if URL normalization is enabled, normalize the URL

if (url != null && urlFiltering) {

try {

url = filters.filter(url); // filter the url

} catch (Exception e) {

LOG.warn("Skipping " + url + ":" + e);

url = null;

}

} // if URL filtering is enabled, run the URL through the filters as well

if (url != null) { // if it passes

newKey.set(url); // collect it

output.collect(newKey, value);

} // if the URL passed every check, collect it again under the (possibly normalized) key

}

Still quite simple!
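The same purge, normalize, filter chain can be tried out without Hadoop. The following is only a sketch under stated assumptions: CrawlDbFilterSketch, its constructor, and the two lambdas are hypothetical stand-ins for Nutch's URLNormalizers and URLFilters, and the STATUS_DB_GONE value is a placeholder rather than a constant read from Nutch.

```java
import java.util.function.UnaryOperator;

// Plain-Java sketch of the decision chain in CrawlDbFilter.map (no Hadoop or Nutch types).
class CrawlDbFilterSketch {
    // Placeholder for CrawlDatum.STATUS_DB_GONE; the real constant is defined by Nutch.
    static final int STATUS_DB_GONE = 3;

    private final boolean purge404;
    private final UnaryOperator<String> normalizer; // null means normalization is off
    private final UnaryOperator<String> filter;     // null means filtering is off; returns null to reject

    CrawlDbFilterSketch(boolean purge404,
                        UnaryOperator<String> normalizer,
                        UnaryOperator<String> filter) {
        this.purge404 = purge404;
        this.normalizer = normalizer;
        this.filter = filter;
    }

    // Returns the URL to emit, or null when the record is dropped.
    String process(String url, int status) {
        // Cheapest check first: drop "gone" records when purging is enabled.
        if (purge404 && status == STATUS_DB_GONE) {
            url = null;
        }
        if (url != null && normalizer != null) {
            try {
                url = normalizer.apply(url);
            } catch (RuntimeException e) {
                url = null; // a failing normalizer drops the URL
            }
        }
        if (url != null && filter != null) {
            try {
                url = filter.apply(url);
            } catch (RuntimeException e) {
                url = null; // a failing filter drops the URL
            }
        }
        return url;
    }

    public static void main(String[] args) {
        CrawlDbFilterSketch f = new CrawlDbFilterSketch(
                true,
                String::trim,                                // toy normalizer
                u -> u.startsWith("http://") ? u : null);    // toy filter
        System.out.println(f.process(" http://example.com/ ", 1));
        System.out.println(f.process("http://example.com/", STATUS_DB_GONE));
        System.out.println(f.process("ftp://example.com/", 1));
    }
}
```

Each stage only runs if the URL survived the previous one, which is why the status check comes first: it is the cheapest way to discard a record.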

--- Next, look at the reduce class: InjectReducer.class.

public void reduce(Text key, Iterator<CrawlDatum> values,

OutputCollector<Text, CrawlDatum> output, Reporter reporter)

throws IOException {

boolean oldSet = false;

boolean injectedSet = false;

while (values.hasNext()) {

CrawlDatum val = values.next();

if (val.getStatus() == CrawlDatum.STATUS_INJECTED) {

injected.set(val);

injected.setStatus(CrawlDatum.STATUS_DB_UNFETCHED);

injectedSet = true;

} else {

old.set(val);

oldSet = true;

}

}

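// Branch on the status of each CrawlDatum: if it came from injection, copy it into injected and change its status to STATUS_DB_UNFETCHED.

// Otherwise it comes from an earlier round; its status is not modified and stays whatever it was before.

CrawlDatum res = null; // will point to the single CrawlDatum that is finally emitted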

/**

* Whether to overwrite, ignore or update existing records

* @see https://issues.apache.org/jira/browse/NUTCH-1405

*/

// Injected record already exists and overwrite but not update

if (injectedSet && oldSet && overwrite) {

res = injected; // both records exist and overwriting is allowed, so point to the newer CrawlDatum

if (update) {

LOG.info(key.toString() + " overwritten with injected record but update was specified.");

}

}

// Injected record already exists and update but not overwrite

if (injectedSet && oldSet && update && !overwrite) {

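// overwriting is not allowed, only updating

res = old; // keep using the old record

old.putAllMetaData(injected); // copy the injected record's metadata into the old record

old.setScore(injected.getScore() != scoreInjected ? injected.getScore() : old.getScore());

// update the score: take the injected score only when it differs from scoreInjected

old.setFetchInterval(injected.getFetchInterval() != interval ? injected.getFetchInterval() : old.getFetchInterval());

// update the fetch interval: take the injected interval only when it differs from interval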

}

// Old default behaviour

if (injectedSet && !oldSet) {

res = injected;

} else {

res = old;

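} // note: as quoted here, this if/else runs unconditionally and reassigns res. The injected record is emitted only when no old record exists; in every other case the old record is emitted (carrying any updates applied above)

output.collect(key, res); // write out the result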

}
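Because the three if blocks are not chained with else, it helps to trace which record actually reaches output.collect for each flag combination. The sketch below mirrors the control flow exactly as quoted above, using a hypothetical enum in place of CrawlDatum objects; if the real Nutch source differs from the listing, the outcomes differ too. It assumes at least one of injectedSet and oldSet is true, since a reduce call always receives at least one value.

```java
// Plain-Java trace of the selection logic at the end of InjectReducer.reduce, as quoted above.
class InjectDecisionSketch {

    // Hypothetical labels for "which record is written out".
    enum Pick { INJECTED, OLD, OLD_UPDATED }

    static Pick choose(boolean injectedSet, boolean oldSet, boolean overwrite, boolean update) {
        Pick res = null;
        boolean oldWasUpdated = false;

        // Injected record already exists and overwrite but not update
        if (injectedSet && oldSet && overwrite) {
            res = Pick.INJECTED;
        }

        // Injected record already exists and update but not overwrite
        if (injectedSet && oldSet && update && !overwrite) {
            res = Pick.OLD;
            oldWasUpdated = true; // metadata, score and interval were merged into old
        }

        // "Old default behaviour": not guarded by else, so it always reassigns res
        if (injectedSet && !oldSet) {
            res = Pick.INJECTED;
        } else {
            res = oldWasUpdated ? Pick.OLD_UPDATED : Pick.OLD;
        }
        return res;
    }

    public static void main(String[] args) {
        System.out.println("new URL only:           " + choose(true, false, false, false));
        System.out.println("old URL only:           " + choose(false, true, false, false));
        System.out.println("both, overwrite:        " + choose(true, true, true, false));
        System.out.println("both, update:           " + choose(true, true, false, true));
        System.out.println("both, neither flag set: " + choose(true, true, false, false));
    }
}
```

The case worth checking against your own Nutch version is "both, overwrite": in this trace the assignment made by the first block is replaced by the final else branch.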

---

Next comes

CrawlDb.install(mergeJob, crawlDb);

The code is as follows:

public static void install(JobConf job, Path crawlDb) throws IOException {

boolean preserveBackup = job.getBoolean("db.preserve.backup", true);

Path newCrawlDb = FileOutputFormat.getOutputPath(job); // get the output directory of the merge job

FileSystem fs = new JobClient(job).getFs(); // get the file system

Path old = new Path(crawlDb, "old"); // the old folder, i.e. the backup left by the previous round

Path current = new Path(crawlDb, CURRENT_NAME); // the current folder

if (fs.exists(current)) {

if (fs.exists(old)) fs.delete(old, true);

fs.rename(current, old);

} // delete old, then rename current to old, because newCrawlDb has already been produced

fs.mkdirs(crawlDb);

fs.rename(newCrawlDb, current); // the new output becomes current

if (!preserveBackup && fs.exists(old)) fs.delete(old, true); // decide whether to keep the old folder

Path lock = new Path(crawlDb, LOCK_NAME);

LockUtil.removeLockFile(fs, lock); // remove the lock file, releasing ownership of the crawldb

}
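The rotation that install performs (current becomes old, the new output becomes current) can be reproduced on a local disk. The sketch below is an analogy using java.nio.file instead of Hadoop's FileSystem, so it says nothing about HDFS semantics; the class name, the "12345" directory, and the marker files are invented for the demonstration, and the lock-file step is left out.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-filesystem analogy of CrawlDb.install; not the Hadoop implementation.
class CrawlDbInstallSketch {

    // Deletes a directory tree, children before parents; does nothing if it is absent.
    static void deleteRecursively(Path p) throws IOException {
        if (!Files.exists(p)) return;
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(p)) {
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path q : paths) Files.delete(q);
    }

    static void install(Path crawlDb, Path newCrawlDb, boolean preserveBackup) throws IOException {
        Path old = crawlDb.resolve("old");
        Path current = crawlDb.resolve("current");
        if (Files.exists(current)) {
            deleteRecursively(old);      // drop the previous backup
            Files.move(current, old);    // current becomes the backup
        }
        Files.createDirectories(crawlDb);
        Files.move(newCrawlDb, current); // the new output becomes current
        if (!preserveBackup) deleteRecursively(old);
    }

    // Runs one rotation in a temporary directory and reports what each folder holds.
    static String demo() {
        try {
            Path crawlDb = Files.createTempDirectory("crawldb-sketch");
            Path current = Files.createDirectories(crawlDb.resolve("current"));
            Files.write(current.resolve("marker"), "v1".getBytes(StandardCharsets.UTF_8));
            Path fresh = Files.createDirectories(crawlDb.resolve("12345")); // stands in for the random output dir
            Files.write(fresh.resolve("marker"), "v2".getBytes(StandardCharsets.UTF_8));

            install(crawlDb, fresh, true);

            String cur = new String(
                    Files.readAllBytes(crawlDb.resolve("current").resolve("marker")),
                    StandardCharsets.UTF_8);
            String old = new String(
                    Files.readAllBytes(crawlDb.resolve("old").resolve("marker")),
                    StandardCharsets.UTF_8);
            deleteRecursively(crawlDb); // clean up the temporary tree
            return "current=" + cur + ", old=" + old;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

With preserveBackup set to false the old folder is removed at the end, matching the db.preserve.backup check in the listing.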

-------------------------

The final piece of code

// clean up

FileSystem fs = FileSystem.get(getConf());

fs.delete(tempDir, true); // delete the temporary folder that held the CrawlDatum records built from the seed URLs

long end = System.currentTimeMillis();

LOG.info("Injector: finished at " + sdf.format(end) + ", elapsed: " + TimingUtil.elapsedTime(start, end));

// That completes the walkthrough of Nutch's inject process! :)