默认Facet是统计落入某一组域值的总数的,然后按照总数从大到小排序,判定规则是域值是否相同,其实还可以根据域值是否在某个范围内来判定是否落入某一个分组。这里说的范围就是通过Range定义的,比如:

/**1小时之前的毫秒数*/

final LongRange PAST_HOUR = new LongRange("Past hour", this.nowSec - 3600L,

true, this.nowSec, true);

/**6小时之前的毫秒数*/

final LongRange PAST_SIX_HOURS = new LongRange("Past six hours",

this.nowSec - 21600L, true, this.nowSec, true);

/**24小时之前的毫秒数*/

final LongRange PAST_DAY = new LongRange("Past day", this.nowSec - 86400L,

true, this.nowSec, true);

这里定义了3个数字范围,比如1个小时之前的毫秒数至当前时间毫秒数之间的范围,剩余两个类似,然后就可以根据定义的范围来创建Facet:

//定义3个Facet: 统计

//[过去一小时之前-->当前时间] [过去6小时之前-->当前时间] [过去24小时之前-->当前时间]

Facets facets = new LongRangeFacetCounts("timestamp", fc,

new LongRange[] { this.PAST_HOUR, this.PAST_SIX_HOURS,this.PAST_DAY });

这样就能根据自定义范围去统计了,只要当前域的域值落在这个范围内,则这个facet分组内计数加1.完整示例代码如下:

package com.yida.framework.lucene5.facet;

import java.io.IOException;

import org.apache.lucene.analysis.core.WhitespaceAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.LongField;

import org.apache.lucene.document.NumericDocValuesField;

import org.apache.lucene.facet.DrillDownQuery;

import org.apache.lucene.facet.FacetResult;

import org.apache.lucene.facet.Facets;

import org.apache.lucene.facet.FacetsCollector;

import org.apache.lucene.facet.FacetsConfig;

import org.apache.lucene.facet.range.LongRange;

import org.apache.lucene.facet.range.LongRangeFacetCounts;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.MatchAllDocsQuery;

import org.apache.lucene.search.NumericRangeQuery;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.RAMDirectory;

public class RangeFacetsExample {

private final Directory indexDir = new RAMDirectory();

private IndexSearcher searcher;

/**当前时间的毫秒数*/

private final long nowSec = System.currentTimeMillis();

/**1小时之前的毫秒数*/

final LongRange PAST_HOUR = new LongRange("Past hour", this.nowSec - 3600L,

true, this.nowSec, true);

/**6小时之前的毫秒数*/

final LongRange PAST_SIX_HOURS = new LongRange("Past six hours",

this.nowSec - 21600L, true, this.nowSec, true);

/**24小时之前的毫秒数*/

final LongRange PAST_DAY = new LongRange("Past day", this.nowSec - 86400L,

true, this.nowSec, true);

/**

* 创建测试索引

* @throws IOException

*/

public void index() throws IOException {

IndexWriter indexWriter = new IndexWriter(this.indexDir,

new IndexWriterConfig(new WhitespaceAnalyzer())

.setOpenMode(IndexWriterConfig.OpenMode.CREATE));

/**

* 每次按[1000*i]这个斜率递减创建一个索引

*/

for (int i = 0; i < 100; i++) {

Document doc = new Document();

long then = this.nowSec - i * 1000;

doc.add(new NumericDocValuesField("timestamp", then));

doc.add(new LongField("timestamp", then, Field.Store.YES));

indexWriter.addDocument(doc);

}

this.searcher = new IndexSearcher(DirectoryReader.open(indexWriter,

true));

indexWriter.close();

}

/**

* 获取FacetConfig配置对象

* @return

*/

private FacetsConfig getConfig() {

return new FacetsConfig();

}

public FacetResult search() throws IOException {

/**创建Facet结果收集器*/

FacetsCollector fc = new FacetsCollector();

TopDocs topDocs = FacetsCollector.search(this.searcher, new MatchAllDocsQuery(), 20, fc);

ScoreDoc[] scoreDocs = topDocs.scoreDocs;

for(ScoreDoc scoreDoc : scoreDocs) {

int docId = scoreDoc.doc;

Document doc = searcher.doc(docId);

System.out.println(scoreDoc.doc + "\t" + doc.get("timestamp"));

}

//定义3个Facet: 统计

//[过去一小时之前-->当前时间] [过去6小时之前-->当前时间] [过去24小时之前-->当前时间]

Facets facets = new LongRangeFacetCounts("timestamp", fc,

new LongRange[] { this.PAST_HOUR, this.PAST_SIX_HOURS,

this.PAST_DAY });

return facets.getTopChildren(10, "timestamp", new String[0]);

}

/**

* 使用DrillDownQuery进行Facet统计

* @param range

* @return

* @throws IOException

*/

public TopDocs drillDown(LongRange range) throws IOException {

DrillDownQuery q = new DrillDownQuery(getConfig());

q.add("timestamp", NumericRangeQuery.newLongRange("timestamp",

Long.valueOf(range.min), Long.valueOf(range.max),

range.minInclusive, range.maxInclusive));

return this.searcher.search(q, 10);

}

public void close() throws IOException {

this.searcher.getIndexReader().close();

this.indexDir.close();

}

public static void main(String[] args) throws Exception {

RangeFacetsExample example = new RangeFacetsExample();

example.index();

System.out.println("Facet counting example:");

System.out.println("-----------------------");

System.out.println(example.search());

System.out.println("\n");

//只统计6个小时之前的Facet

System.out

.println("Facet drill-down example (timestamp/Past six hours):");

System.out.println("---------------------------------------------");

TopDocs hits = example.drillDown(example.PAST_SIX_HOURS);

System.out.println(hits.totalHits + " totalHits");

example.close();

}

}

我们在通过FacetsCollector.search进行搜索时,其实是可以传入排序器的,这时我们在创建索引时就需要使用SortedSetDocValuesFacetField,这样FacetsCollector.search返回的TopDocs就是经过排序的了,这一点需要注意。具体示例代码如下:

package com.yida.framework.lucene5.facet;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.lucene.analysis.core.WhitespaceAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.facet.DrillDownQuery;

import org.apache.lucene.facet.FacetResult;

import org.apache.lucene.facet.Facets;

import org.apache.lucene.facet.FacetsCollector;

import org.apache.lucene.facet.FacetsConfig;

import org.apache.lucene.facet.sortedset.DefaultSortedSetDocValuesReaderState;

import org.apache.lucene.facet.sortedset.SortedSetDocValuesFacetCounts;

import org.apache.lucene.facet.sortedset.SortedSetDocValuesFacetField;

import org.apache.lucene.facet.sortedset.SortedSetDocValuesReaderState;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.MatchAllDocsQuery;

import org.apache.lucene.search.Sort;

import org.apache.lucene.search.SortField;

import org.apache.lucene.search.SortField.Type;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.RAMDirectory;

public class SimpleSortedSetFacetsExample {

private final Directory indexDir = new RAMDirectory();

private final FacetsConfig config = new FacetsConfig();

private void index() throws IOException {

IndexWriter indexWriter = new IndexWriter(this.indexDir,

new IndexWriterConfig(new WhitespaceAnalyzer())

.setOpenMode(IndexWriterConfig.OpenMode.CREATE));

Document doc = new Document();

doc.add(new SortedSetDocValuesFacetField("Author", "Bob"));

doc.add(new SortedSetDocValuesFacetField("Publish Year", "2010"));

indexWriter.addDocument(this.config.build(doc));

doc = new Document();

doc.add(new SortedSetDocValuesFacetField("Author", "Lisa"));

doc.add(new SortedSetDocValuesFacetField("Publish Year", "2010"));

indexWriter.addDocument(this.config.build(doc));

doc = new Document();

doc.add(new SortedSetDocValuesFacetField("Author", "Lisa"));

doc.add(new SortedSetDocValuesFacetField("Publish Year", "2012"));

indexWriter.addDocument(this.config.build(doc));

doc = new Document();

doc.add(new SortedSetDocValuesFacetField("Author", "Susan"));

doc.add(new SortedSetDocValuesFacetField("Publish Year", "2012"));

indexWriter.addDocument(this.config.build(doc));

doc = new Document();

doc.add(new SortedSetDocValuesFacetField("Author", "Frank"));

doc.add(new SortedSetDocValuesFacetField("Publish Year", "1999"));

indexWriter.addDocument(this.config.build(doc));

indexWriter.close();

}

private List<FacetResult> search() throws IOException {

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

SortedSetDocValuesReaderState state = new DefaultSortedSetDocValuesReaderState(

indexReader);

FacetsCollector fc = new FacetsCollector();

Sort sort = new Sort(new SortField("Author", Type.INT, false));

//FacetsCollector.search(searcher, new MatchAllDocsQuery(), 10, fc);

TopDocs topDocs = FacetsCollector.search(searcher,new MatchAllDocsQuery(),null,10,sort,true,false,fc);

Facets facets = new SortedSetDocValuesFacetCounts(state, fc);

List<FacetResult> results = new ArrayList<FacetResult>();

results.add(facets.getTopChildren(10, "Author", new String[0]));

results.add(facets.getTopChildren(10, "Publish Year", new String[0]));

indexReader.close();

return results;

}

private FacetResult drillDown() throws IOException {

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

SortedSetDocValuesReaderState state = new DefaultSortedSetDocValuesReaderState(

indexReader);

DrillDownQuery q = new DrillDownQuery(this.config);

q.add("Publish Year", new String[] { "2010" });

FacetsCollector fc = new FacetsCollector();

FacetsCollector.search(searcher, q, 10, fc);

Facets facets = new SortedSetDocValuesFacetCounts(state, fc);

FacetResult result = facets.getTopChildren(10, "Author", new String[0]);

indexReader.close();

return result;

}

public List<FacetResult> runSearch() throws IOException {

index();

return search();

}

public FacetResult runDrillDown() throws IOException {

index();

return drillDown();

}

public static void main(String[] args) throws Exception {

System.out.println("Facet counting example:");

System.out.println("-----------------------");

SimpleSortedSetFacetsExample example = new SimpleSortedSetFacetsExample();

List<FacetResult> results = example.runSearch();

System.out.println("Author: " + results.get(0));

System.out.println("Publish Year: " + results.get(0));

System.out.println("\n");

System.out.println("Facet drill-down example (Publish Year/2010):");

System.out.println("---------------------------------------------");

System.out.println("Author: " + example.runDrillDown());

}

}

前面我们在定义Facet时,即可以不指定域名,比如:

Facets facets = new FastTaxonomyFacetCounts(taxoReader, this.config, fc);

这时默认会根据我们定义的FacetField去决定定义几个Facet进行统计,你可以指定只对指定域定义Facet,这样就可以值返回你关心的Facet统计数据,比如:

Facets author = new FastTaxonomyFacetCounts("author", taxoReader,

this.config, fc);

具体完整示例代码如下:

package com.yida.framework.lucene5.facet;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.lucene.analysis.core.WhitespaceAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.facet.FacetField;

import org.apache.lucene.facet.FacetResult;

import org.apache.lucene.facet.Facets;

import org.apache.lucene.facet.FacetsCollector;

import org.apache.lucene.facet.FacetsConfig;

import org.apache.lucene.facet.taxonomy.FastTaxonomyFacetCounts;

import org.apache.lucene.facet.taxonomy.TaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyWriter;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.MatchAllDocsQuery;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.RAMDirectory;

/**

* 根据指定域加载特定Facet Count

* @author Lanxiaowei

*

*/

public class MultiCategoryListsFacetsExample {

private final Directory indexDir = new RAMDirectory();

private final Directory taxoDir = new RAMDirectory();

private final FacetsConfig config = new FacetsConfig();

public MultiCategoryListsFacetsExample() {

//定义 域别名

this.config.setIndexFieldName("Author", "author");

this.config.setIndexFieldName("Publish Date", "pubdate");

// 设置Publish Date为多值域

this.config.setHierarchical("Publish Date", true);

}

/**

* 创建测试索引

* @throws IOException

*/

private void index() throws IOException {

IndexWriter indexWriter = new IndexWriter(this.indexDir,

new IndexWriterConfig(new WhitespaceAnalyzer())

.setOpenMode(IndexWriterConfig.OpenMode.CREATE));

DirectoryTaxonomyWriter taxoWriter = new DirectoryTaxonomyWriter(

this.taxoDir);

Document doc = new Document();

doc.add(new FacetField("Author", new String[] { "Bob" }));

doc.add(new FacetField("Publish Date", new String[] { "2010", "10",

"15" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new FacetField("Author", new String[] { "Lisa" }));

doc.add(new FacetField("Publish Date", new String[] { "2010", "10",

"20" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new FacetField("Author", new String[] { "Lisa" }));

doc.add(new FacetField("Publish Date",

new String[] { "2012", "1", "1" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new FacetField("Author", new String[] { "Susan" }));

doc.add(new FacetField("Publish Date",

new String[] { "2012", "1", "7" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new FacetField("Author", new String[] { "Frank" }));

doc.add(new FacetField("Publish Date",

new String[] { "1999", "5", "5" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

indexWriter.close();

taxoWriter.close();

}

private List<FacetResult> search() throws IOException {

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

TaxonomyReader taxoReader = new DirectoryTaxonomyReader(this.taxoDir);

FacetsCollector fc = new FacetsCollector();

FacetsCollector.search(searcher, new MatchAllDocsQuery(), 10, fc);

List<FacetResult> results = new ArrayList<FacetResult>();

//定义author域的Facet,

//FastTaxonomyFacetCounts第一个构造参数指定域名称,

//则只统计指定域的Facet总数,不指定域名称,则默认会统计所有FacetField域的总数

//这就跟SQL中的select * from...和 select name from ...差不多

Facets author = new FastTaxonomyFacetCounts("author", taxoReader,

this.config, fc);

results.add(author.getTopChildren(10, "Author", new String[0]));

//定义pubdate域的Facet

Facets pubDate = new FastTaxonomyFacetCounts("pubdate", taxoReader,

this.config, fc);

results.add(pubDate.getTopChildren(10, "Publish Date", new String[0]));

indexReader.close();

taxoReader.close();

return results;

}

public List<FacetResult> runSearch() throws IOException {

index();

return search();

}

public static void main(String[] args) throws Exception {

System.out

.println("Facet counting over multiple category lists example:");

System.out.println("-----------------------");

List<FacetResult> results = new MultiCategoryListsFacetsExample().runSearch();

System.out.println("Author: " + results.get(0));

System.out.println("Publish Date: " + results.get(1));

}

}

上面说的Facet数字统计方式都是按照出现次数计算总和,其实lucene还提供了js表达式方式自定义Facet数据统计,比如:

/**当前索引文档评分乘以popularity域值的平方根 作为 Facet的统计值,默认是统计命中索引文档总数*/

Expression expr = JavascriptCompiler.compile("_score * sqrt(popularity)");

即表示每个域的域值相同的落入一组,但此时每一组的数字总计不再是计算总个数,而是按照指定的js表达式去计算然后求和,比如上述表达式即表示当前索引文档评分乘以popularity域值的平方根 作为 Facet的统计值,_score是内置变量代指当前索引文档的评分,sqrt是内置函数表示开平方根,lucene expression表达式是通过JavascriptCompiler进行编译的,而JavascriptCompiler是借助大名鼎鼎的开源语法分析器antlr实现的,与antlr类似的还有JFlex,自己google了解去,关于语法分析这个话题就超出本篇主题了。下面说说lucene expression都支持哪些函数和操作符:

大意就是它是基于Javascript语法的特定数字表达式,它支持:

1,Integer,float数字,十六进制和八进制字符

2.支持常用的数学操作符,加减乘除取模等

3.支持位运算符

4.支持布尔运算符

5.支持比较运算符

6.支持常用的数学函数,比如abs取绝对值,log对数,max取最大值,sqrt开平方根等

7.支持三角函数,比如sin,cos等等

8.支持地理函数,如haversin公式即求地球上任意两个点之间的距离,具体请自己去Google haversin公式

9.辅助函数,貌似跟第6条重复,搞什么飞机,我只是翻译下而已,跟我没关系

10.支持任意的外部变量,这一条有点费解,还好我在源码里找到了相关说明

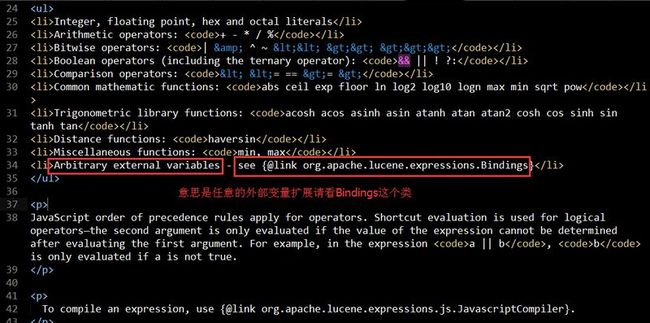

查阅org.apache.lucene.expressions.js包下面的package.html,看到里面有这样一句提示:

打开Bindings类源码,没看到什么有用的信息,然后我无意在JavascriptCompiler类源码中找到如下一段说明,让我茅塞顿开:

/**

* An expression compiler for javascript expressions.

* <p>

* Example:

* <pre class="prettyprint">

* Expression foo = JavascriptCompiler.compile("((0.3*popularity)/10.0)+(0.7*score)");

* </pre>

* <p>

* See the {@link org.apache.lucene.expressions.js package documentation} for

* the supported syntax and default functions.

* <p>

* You can compile with an alternate set of functions via {@link #compile(String, Map, ClassLoader)}.

* For example:

* <pre class="prettyprint">

* Map<String,Method> functions = new HashMap<>();

* // add all the default functions

* functions.putAll(JavascriptCompiler.DEFAULT_FUNCTIONS);

* // add cbrt()

* functions.put("cbrt", Math.class.getMethod("cbrt", double.class));

* // call compile with customized function map

* Expression foo = JavascriptCompiler.compile("cbrt(score)+ln(popularity)",

* functions,

* getClass().getClassLoader());

* </pre>

*

* @lucene.experimental

*/

重点在这里:

Map<String,Method> functions = new HashMap<String,Method>();

// add all the default functions

functions.putAll(JavascriptCompiler.DEFAULT_FUNCTIONS);

// add cbrt()

functions.put("cbrt", Math.class.getMethod("cbrt", double.class));

// call compile with customized function map

Expression foo = JavascriptCompiler.compile("cbrt(score)+ln(popularity)",

functions,

getClass().getClassLoader());

通过示例代码,不难理解,意思就是我们可以把我们Java里类的某个方法通过JavascriptCompilter进行编译,就能像直接调用js内置函数一样,毕竟js内置函数是有限的,但内置的js函数不能满足你的要求,你就可以把java里自定义方法进行编译在js里执行。示例的代码就是把Math类里的cbrt方法(求一个double数值的立方根)编译为js内置函数,然后你就可以像使用js内置函数一样使用java里任意类的方法了。下面是一个使用lucene expressions完成Facet统计的完整示例:

package com.yida.framework.lucene5.facet;

import java.io.IOException;

import java.text.ParseException;

import org.apache.lucene.analysis.core.WhitespaceAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.NumericDocValuesField;

import org.apache.lucene.document.TextField;

import org.apache.lucene.expressions.Expression;

import org.apache.lucene.expressions.SimpleBindings;

import org.apache.lucene.expressions.js.JavascriptCompiler;

import org.apache.lucene.facet.FacetField;

import org.apache.lucene.facet.FacetResult;

import org.apache.lucene.facet.Facets;

import org.apache.lucene.facet.FacetsCollector;

import org.apache.lucene.facet.FacetsConfig;

import org.apache.lucene.facet.taxonomy.TaxonomyFacetSumValueSource;

import org.apache.lucene.facet.taxonomy.TaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyWriter;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.MatchAllDocsQuery;

import org.apache.lucene.search.SortField;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.RAMDirectory;

public class ExpressionAggregationFacetsExample {

private final Directory indexDir = new RAMDirectory();

private final Directory taxoDir = new RAMDirectory();

private final FacetsConfig config = new FacetsConfig();

private void index() throws IOException {

IndexWriter indexWriter = new IndexWriter(this.indexDir,

new IndexWriterConfig(new WhitespaceAnalyzer())

.setOpenMode(IndexWriterConfig.OpenMode.CREATE));

DirectoryTaxonomyWriter taxoWriter = new DirectoryTaxonomyWriter(

this.taxoDir);

Document doc = new Document();

doc.add(new TextField("c", "foo bar", Field.Store.NO));

doc.add(new NumericDocValuesField("popularity", 5L));

doc.add(new FacetField("A", new String[] { "B" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new TextField("c", "foo foo bar", Field.Store.NO));

doc.add(new NumericDocValuesField("popularity", 3L));

doc.add(new FacetField("A", new String[] { "C" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

doc.add(new TextField("c", "foo foo bar", Field.Store.NO));

doc.add(new NumericDocValuesField("popularity", 8L));

doc.add(new FacetField("A", new String[] { "B" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

indexWriter.close();

taxoWriter.close();

}

private FacetResult search() throws IOException, ParseException {

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

TaxonomyReader taxoReader = new DirectoryTaxonomyReader(this.taxoDir);

/**当前索引文档评分乘以popularity域值的平方根 作为 Facet的统计值,默认是统计命中索引文档总数*/

Expression expr = JavascriptCompiler.compile("_score * sqrt(popularity)");

SimpleBindings bindings = new SimpleBindings();

bindings.add(new SortField("_score", SortField.Type.SCORE));

bindings.add(new SortField("popularity", SortField.Type.LONG));

FacetsCollector fc = new FacetsCollector(true);

FacetsCollector.search(searcher, new MatchAllDocsQuery(), 10, fc);

//以Expression表达式的计算值定义一个Facet

Facets facets = new TaxonomyFacetSumValueSource(taxoReader,

this.config, fc, expr.getValueSource(bindings));

FacetResult result = facets.getTopChildren(10, "A", new String[0]);

indexReader.close();

taxoReader.close();

return result;

}

public FacetResult runSearch() throws IOException, ParseException {

index();

return search();

}

public static void main(String[] args) throws Exception {

System.out.println("Facet counting example:");

System.out.println("-----------------------");

FacetResult result = new ExpressionAggregationFacetsExample()

.runSearch();

System.out.println(result);

}

}

前面说到的Lucene Expression是通过一个表达式来计算统计值然后求和,我们也可以使用AssociationFacetField域来关联一个任意值,然后根据这个值来求和统计,类似这样:

//3 --> lucene[不再是统计lucene这个域值的出现总次数,而是统计IntAssociationFacetField的第一个构造参数assoc总和]

doc.add(new IntAssociationFacetField(3, "tags", new String[] { "lucene" }));

doc.add(new FloatAssociationFacetField(0.87F, "genre", new String[] { "computing" }));

其实创建一个AssociationFacetField等价于创建了一个StringField和一个BinaryDocValuesField(创建这个域是为了辅助统计Facet Count) ,有关AssociationFacetField的完整示例代码如下:

package com.yida.framework.lucene5.facet;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.lucene.analysis.core.WhitespaceAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.facet.DrillDownQuery;

import org.apache.lucene.facet.FacetResult;

import org.apache.lucene.facet.Facets;

import org.apache.lucene.facet.FacetsCollector;

import org.apache.lucene.facet.FacetsConfig;

import org.apache.lucene.facet.taxonomy.FloatAssociationFacetField;

import org.apache.lucene.facet.taxonomy.IntAssociationFacetField;

import org.apache.lucene.facet.taxonomy.TaxonomyFacetSumFloatAssociations;

import org.apache.lucene.facet.taxonomy.TaxonomyFacetSumIntAssociations;

import org.apache.lucene.facet.taxonomy.TaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyReader;

import org.apache.lucene.facet.taxonomy.directory.DirectoryTaxonomyWriter;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.MatchAllDocsQuery;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.RAMDirectory;

public class AssociationsFacetsExample {

private final Directory indexDir = new RAMDirectory();

private final Directory taxoDir = new RAMDirectory();

private final FacetsConfig config;

public AssociationsFacetsExample()

{

this.config = new FacetsConfig();

this.config.setMultiValued("tags", true);

this.config.setIndexFieldName("tags", "$tags");

this.config.setMultiValued("genre", true);

this.config.setIndexFieldName("genre", "$genre");

}

private void index() throws IOException

{

IndexWriterConfig iwc = new IndexWriterConfig(new WhitespaceAnalyzer()).setOpenMode(IndexWriterConfig.OpenMode.CREATE);

IndexWriter indexWriter = new IndexWriter(this.indexDir, iwc);

DirectoryTaxonomyWriter taxoWriter = new DirectoryTaxonomyWriter(this.taxoDir);

Document doc = new Document();

//3 --> lucene[不再是统计lucene这个域值的出现总次数,而是统计IntAssociationFacetField的第一个构造参数assoc总和]

doc.add(new IntAssociationFacetField(3, "tags", new String[] { "lucene" }));

doc.add(new FloatAssociationFacetField(0.87F, "genre", new String[] { "computing" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

doc = new Document();

//1 --> lucene

doc.add(new IntAssociationFacetField(1, "tags", new String[] { "lucene" }));

doc.add(new IntAssociationFacetField(2, "tags", new String[] { "solr" }));

doc.add(new FloatAssociationFacetField(0.75F, "genre", new String[] { "computing" }));

doc.add(new FloatAssociationFacetField(0.34F, "genre", new String[] { "software" }));

indexWriter.addDocument(this.config.build(taxoWriter, doc));

indexWriter.close();

taxoWriter.close();

}

private List<FacetResult> sumAssociations() throws IOException

{

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

TaxonomyReader taxoReader = new DirectoryTaxonomyReader(this.taxoDir);

FacetsCollector fc = new FacetsCollector();

FacetsCollector.search(searcher, new MatchAllDocsQuery(), 10, fc);

//定义了两个Facet

Facets tags = new TaxonomyFacetSumIntAssociations("$tags", taxoReader, this.config, fc);

Facets genre = new TaxonomyFacetSumFloatAssociations("$genre", taxoReader, this.config, fc);

List<FacetResult> results = new ArrayList<FacetResult>();

results.add(tags.getTopChildren(10, "tags", new String[0]));

results.add(genre.getTopChildren(10, "genre", new String[0]));

indexReader.close();

taxoReader.close();

return results;

}

private FacetResult drillDown() throws IOException

{

DirectoryReader indexReader = DirectoryReader.open(this.indexDir);

IndexSearcher searcher = new IndexSearcher(indexReader);

TaxonomyReader taxoReader = new DirectoryTaxonomyReader(this.taxoDir);

DrillDownQuery q = new DrillDownQuery(this.config);

q.add("tags", new String[] { "solr" });

FacetsCollector fc = new FacetsCollector();

FacetsCollector.search(searcher, q, 10, fc);

Facets facets = new TaxonomyFacetSumFloatAssociations("$genre", taxoReader, this.config, fc);

FacetResult result = facets.getTopChildren(10, "$genre", new String[0]);

indexReader.close();

taxoReader.close();

return result;

}

public List<FacetResult> runSumAssociations() throws IOException

{

index();

return sumAssociations();

}

public FacetResult runDrillDown() throws IOException

{

index();

return drillDown();

}

public static void main(String[] args) throws Exception

{

System.out.println("Sum associations example:");

System.out.println("-------------------------");

List<FacetResult> results = new AssociationsFacetsExample().runSumAssociations();

System.out.println("tags: " + results.get(0));

System.out.println("genre: " + results.get(1));

}

}

Facet就说这么多了,一些细节方面大家请看demo代码里面的注释,如果我哪里没说到的而你又感觉很迷惑无法理解的,请提出来,大家一起交流学习。如果我有哪里说的不够准确,也希望大家能够积极指正多多批评。 Demo源码请看底下的附件!!!

如果你还有什么问题请加我Q-Q:7-3-6-0-3-1-3-0-5,

或者加裙![]() 一起交流学习!

一起交流学习!