Analyzing a Patent Data Set with Hadoop

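Hadoop only does meaningful work when it has interesting data to process. You can download the patent data sets from http://www.nber.org/patents/. This post uses the patent citation data set, cite75_99.txt.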

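The data set is about 250 MB. Even without a real cluster, that is enough data to make practicing MapReduce exciting. A popular development strategy is also to build a smaller, sampled subset of the large production data set, called a development data set. Programs written to process it in standalone or pseudo-distributed mode are then easy to run and debug.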

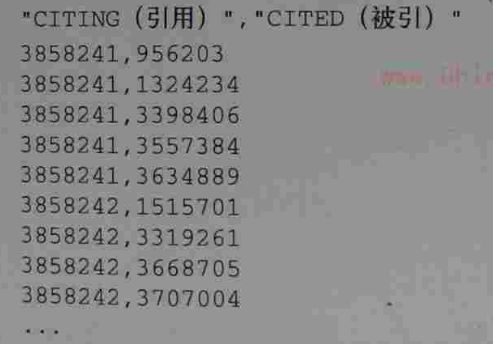

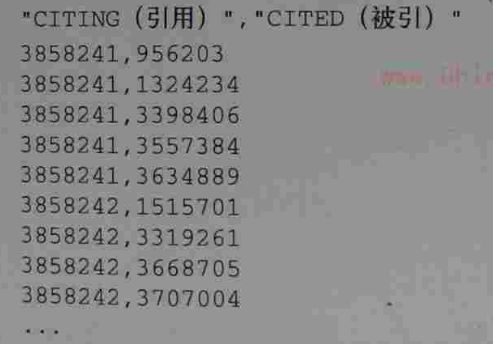
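The development data set strategy described above can be sketched in plain Java. This is only a minimal illustration under stated assumptions: the class `DevSampler`, its `sample` method, the sampling rate, the seed, and the sample records are all hypothetical and do not come from the original post.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

// Hypothetical helper, not part of the original post: keeps each input line
// with a fixed probability to build a small development data set.
final class DevSampler {

    // Returns the lines that survive sampling; a fixed seed keeps runs repeatable.
    static List<String> sample(List<String> lines, double rate, long seed) {
        Random random = new Random(seed);
        List<String> kept = new ArrayList<>();
        for (String line : lines) {
            // nextDouble() is in [0.0, 1.0), so rate 1.0 keeps every line
            // and rate 0.0 keeps none.
            if (random.nextDouble() < rate) {
                kept.add(line);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Made-up "citing,cited" pairs standing in for real records.
        List<String> lines = Arrays.asList("5,1", "6,1", "6,2", "7,3", "8,3", "9,3");
        List<String> dev = sample(lines, 0.5, 42L);
        System.out.println(dev.size() + " of " + lines.size() + " lines kept");
    }
}
```

For a file the size of cite75_99.txt you would probably stream the lines instead of holding them all in a list, but the per-line decision stays the same.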

cite75_99.txt里面的内容如下所示:

每行有两个数字,代表前面的新专利引用了后面的专利。我准备实现两个M/R任务,首先统计每个以前的专利被哪几个新专利引用,然后统计每个以前的专利被引用了多少次。

每行有两个数字,代表前面的新专利引用了后面的专利。我准备实现两个M/R任务,首先统计每个以前的专利被哪几个新专利引用,然后统计每个以前的专利被引用了多少次。

一:统计每个以前的专利被哪几个新专利引用

不多说,直接上代码 :

:

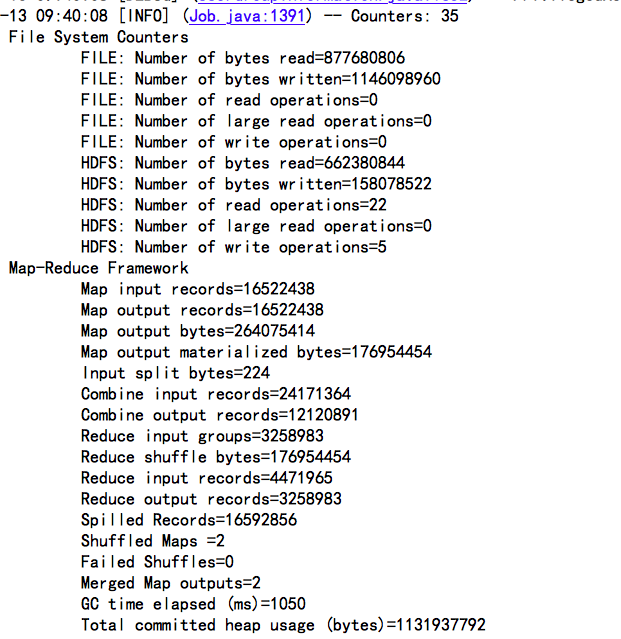

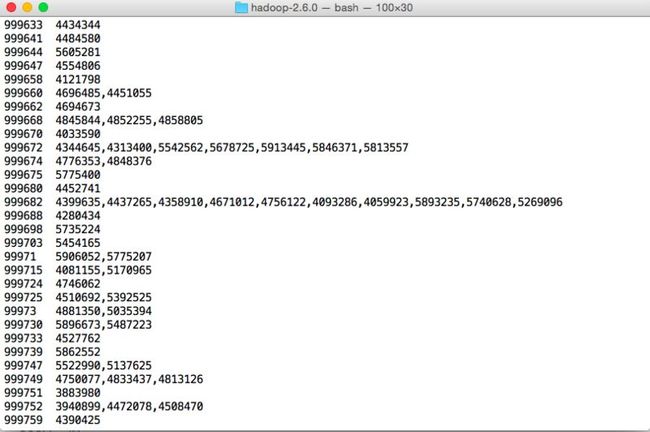

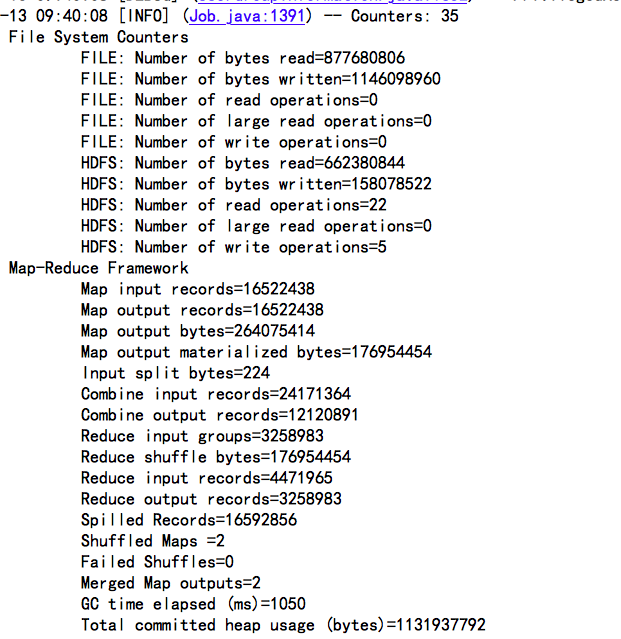

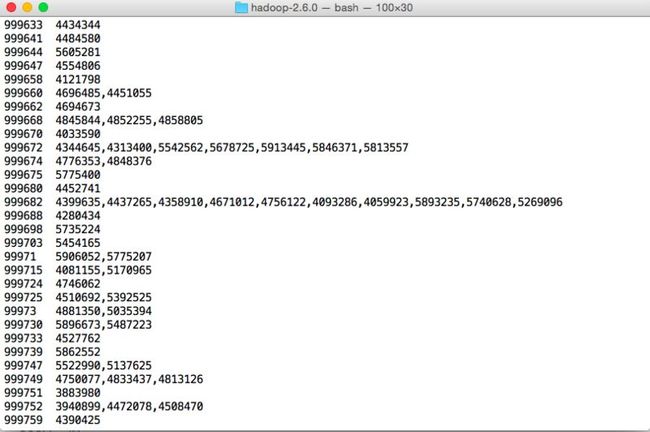

代码很简单,就不解释了。运行我们可以直接在eclipse上点击Run Configurations然后添加输入输出文件夹,没有问题之后,然后Run on Hadoop,这样就能看到日志信息(当然要添加一个log4j.properties)。下面是counters信息和统计结果:

有强迫症的话就打包然后用命令行执行咯。

二:统计每个以前的专利被引用了多少次。

代码:

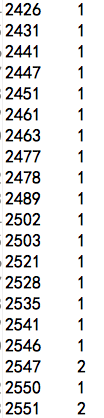

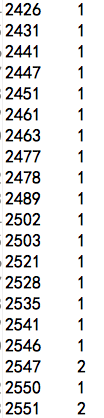

直接上结果:

这还是很简单的M/R,但是拿来入门还是很不错的。

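The contents of cite75_99.txt look like this:

Each line holds two numbers: the newer patent on the left cites the older patent on the right. I plan to implement two M/R jobs. The first lists, for each older patent, which newer patents cite it; the second counts how many times each older patent is cited.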
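Before turning to Hadoop, the two jobs described above can be sketched in plain Java on a handful of records. This is a rough illustration only: the class `CitationSketch`, its method names, and the four sample lines are made up for this example and are not taken from cite75_99.txt.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch of the two planned jobs; no Hadoop classes are involved.
final class CitationSketch {

    // Job 1 idea: invert each "citing,cited" pair and group citing patents by cited patent.
    static Map<String, List<String>> citedBy(List<String> lines) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            // fields[0] is the citing (newer) patent, fields[1] the cited (older) one.
            grouped.computeIfAbsent(fields[1], k -> new ArrayList<>()).add(fields[0]);
        }
        return grouped;
    }

    // Job 2 idea: count how many citing patents each cited patent has.
    static Map<String, Integer> citationCounts(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            String cited = line.split(",")[1];
            counts.merge(cited, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up records, not taken from cite75_99.txt.
        List<String> lines = Arrays.asList("5,1", "6,1", "6,2", "7,1");
        System.out.println(citedBy(lines));        // {1=[5, 6, 7], 2=[6]}
        System.out.println(citationCounts(lines)); // {1=3, 2=1}
    }
}
```

In the MapReduce versions, the grouping done here by the map data structure is what the shuffle phase does between the mapper and the reducer.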

1: Listing which newer patents cite each older patent

Without further ado, here is the code:

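    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    public class Potent_ByWhichCitation extends Configured implements Tool {

        // Emits (cited, citing) so that the shuffle groups citing patents by cited patent.
        public static class CitationMapper extends Mapper<Object, Text, Text, Text> {
            @Override
            protected void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Split on the comma: str[0] is the citing patent, str[1] the cited one.
                String[] str = value.toString().split(",");
                if (str.length < 2) {
                    return; // skip blank or malformed lines
                }
                context.write(new Text(str[1]), new Text(str[0]));
            }
        }

        // Joins the citing patents of one cited patent into a comma-separated list.
        // (The name is left over from word count; nothing is summed here.)
        public static class IntSumReducer extends Reducer<Text, Text, Text, Text> {
            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                StringBuilder csv = new StringBuilder();
                for (Text val : values) {
                    if (csv.length() > 0) {
                        csv.append(',');
                    }
                    csv.append(val.toString());
                }
                context.write(key, new Text(csv.toString()));
            }
        }

        public static void main(String[] args) throws Exception {
            int res = ToolRunner.run(new Configuration(), new Potent_ByWhichCitation(), args);
            System.exit(res);
        }

        @Override
        public int run(String[] args) throws Exception {
            // ToolRunner has already stripped the generic Hadoop options from args.
            if (args.length != 2) {
                System.err.println("Usage: Potent_ByWhichCitation <in> <out>");
                return 2;
            }
            // Job.getInstance replaces the deprecated Job constructor.
            Job job = Job.getInstance(getConf(), "potent analyse");
            job.setJarByClass(Potent_ByWhichCitation.class);
            job.setMapperClass(CitationMapper.class);
            // Concatenation is safe as a combiner: partial lists are simply joined again.
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            // Return the status and let main() call System.exit.
            return job.waitForCompletion(true) ? 0 : 1;
        }
    }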

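The code is simple enough that I won't walk through it. To run it, open Run Configurations in Eclipse and add the input and output folders as arguments. Once that works, choose Run on Hadoop and the log output will appear (provided you have added a log4j.properties file). The counters and the results follow:

If you are a perfectionist, package the job as a jar and run it from the command line instead.

2: Counting how many times each older patent is cited

Code: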

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class Potent_CountsByCitation {

        // Emits (cited, 1) for every citation record.
        public static class CitationMapper extends
                Mapper<Object, Text, IntWritable, IntWritable> {
            private final static IntWritable one = new IntWritable(1);
            private IntWritable citation = new IntWritable();

            @Override
            protected void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Split on the comma: str[1] is the cited patent.
                String[] str = value.toString().split(",");
                if (str.length < 2) {
                    return; // skip blank or malformed lines
                }
                try {
                    citation.set(Integer.parseInt(str[1].trim()));
                } catch (NumberFormatException e) {
                    return; // skip non-numeric lines such as a header row, if the file has one
                }
                context.write(citation, one);
            }
        }

        // Adds up the 1s (or partial sums from the combiner) for each cited patent.
        public static class IntSumReducer extends
                Reducer<IntWritable, IntWritable, IntWritable, IntWritable> {
            private IntWritable result = new IntWritable();

            @Override
            protected void reduce(IntWritable key, Iterable<IntWritable> values,
                    Context context) throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            String[] otherArgs = new GenericOptionsParser(conf, args)
                    .getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: Potent_CountsByCitation <in> <out>");
                System.exit(2);
            }
            // Job.getInstance replaces the deprecated Job constructor.
            Job job = Job.getInstance(conf, "potent analyse");
            job.setJarByClass(Potent_CountsByCitation.class);
            job.setMapperClass(CitationMapper.class);
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(IntWritable.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
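The counting job registers IntSumReducer as its combiner as well as its reducer. A small plain-Java sketch of why that is safe here: adding up partial sums gives the same total as adding up all the individual 1s. The class `CombinerSketch` and the split into two "map tasks" are hypothetical and exist only for this illustration.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java illustration of combiner behaviour; the two "map tasks" are made up.
final class CombinerSketch {

    // Same arithmetic as the reduce step in the listing above: add up the values for one key.
    static int sum(List<Integer> values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        // Five 1s emitted for the same cited patent, spread over two hypothetical map tasks.
        List<Integer> taskA = Arrays.asList(1, 1, 1);
        List<Integer> taskB = Arrays.asList(1, 1);

        // Without a combiner the reducer sees all five values.
        int direct = sum(Arrays.asList(1, 1, 1, 1, 1));
        // With a combiner each task pre-sums locally and the reducer adds the partial sums.
        int combined = sum(Arrays.asList(sum(taskA), sum(taskB)));

        System.out.println(direct + " " + combined); // 5 5
    }
}
```

The benefit of the combiner is less data moving through the shuffle; the final counts do not change.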

Straight to the results:

These are still very simple M/R jobs, but they make a good introductory exercise.